Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

@bonfire62 can you post some screenshots, so other users can get a grasp of what you did?

Sure, these may have different disk names, but this is the procedure:

Once you see the disk with the different numbers as shown in my previous screenshot, you hit the replace button on that disk.

Then run a smartctl -i --all on whatever new disk showed up, until you find the drive with the same serial number as the one you wiped. There's probably a better way to list then grep for it. Once that's found, select the disk in the dropdown menu:

note: I did not have to force. The resilver happened automatically. I showed errors on the disk, and did a:

zpool clear main_pool

to remove the errors, then a scrub.
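For the serial-number hunt described above, here is a minimal sketch of one possible shortcut: `lsblk` can list every disk with its serial in one go, so there is no need to run smartctl disk by disk. The helper name `find_by_serial` and the placeholder serial are made up for illustration, and the sketch assumes your `lsblk` build supports the SERIAL column.

```shell
#!/usr/bin/env bash
# find_by_serial SERIAL
# Reads "NAME SERIAL" lines on stdin (for example from `lsblk -dn -o NAME,SERIAL`)
# and prints /dev/NAME for every line that contains the given serial string.
find_by_serial() {
    grep -F -- "$1" | awk '{ print "/dev/" $1 }'
}

# On a live system (replace the placeholder with the serial of the wiped disk):
#   lsblk -dn -o NAME,SERIAL | find_by_serial "SERIAL_OF_WIPED_DISK"
```

The match is a plain substring test, so it still works when the reported serial contains spaces.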

> Data Integrity Field
>
> DIF extends the disk sector from its traditional 512 bytes to 520 bytes by adding 8 additional protection bytes. You might also find disk sectors extended to 528 bytes by custom firmware. OEM rebranded HDDs or SSDs from major storage vendors are plagued with this "enhancement". Linux does not support 520-byte sectors, unless the drive is formatted with DIF and installed into a DIF-capable HBA.
>
> Example of branded disk:
>
> Code:
>
> # sg_scan -i /dev/sda
> /dev/sda: scsi0 channel=0 id=0 lun=0
> NETAPP X287_S15K5288A15 NA00 [rmb=0 cmdq=1 pqual=0 pdev=0x0]
>
> Example of unbranded disk:
>
> Code:
>
> # sg_scan -i /dev/sda
> /dev/sda: scsi0 channel=0 id=0 lun=0
> ATA HUH537060BKD702 0003 [rmb=0 cmdq=1 pqual=0 pdev=0x0]
>
> DIF format command:
>
> Code:
>
> # sg_format -vFs 512 /dev/sda
>
> Since Linux cannot normally see disks with 520-byte sectors, it is safe to try formatting the disk with 512-byte sectors. See the detailed formatting process, below.

Hey, ok, I have followed through the steps up to the DIF format command.

The drives are running 512-byte sectors as shown by the output below.

Code:

Disk /dev/sdb: 5.46 TiB, 6001175126016 bytes, 11721045168 sectors
Disk model: MG04SCA60EE
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: FDE2DAA6-87D5-4C38-A3BD-CADE5652B16A

Device       Start         End     Sectors  Size Type
/dev/sdb1      128     4194304     4194177    2G Linux swap
/dev/sdb2  4194432 11721045134 11716850703  5.5T Solaris /usr & Apple ZFS

If I run # sg_format -vFs 512 /dev/sda on the SAS drive I am having issues with, this will wipe that drive completely but leave the other drives intact, correct?

Error being shown on the drive when trying to replace it in the pool:

Error: [EFAULT] Unable to GPT format the disk "sda": Warning! Read error 5; strange behavior now likely!
Warning: Partition table header claims that the size of partition table entries is 0 bytes, but this program supports only 128-byte entries. Adjusting accordingly, but partition table may be garbage.
Warning! Read error 5; strange behavior now likely!
Warning: Partition table header claims that the size of partition table entries is 0 bytes, but this program supports only 128-byte entries. Adjusting accordingly, but partition table may be garbage.
Unable to save backup partition table! Perhaps the 'e' option on the experts' menu will resolve this problem.
Warning! An error was reported when writing the partition table! This error MIGHT be harmless, or the disk might be damaged! Checking it is advisable.

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

> The drives are running 512-byte sectors as shown by the output below.

That means nothing. Linux will show 512-byte sectors on disks formatted with 520-byte sectors, because it does not understand that format.

> If I run # sg_format -vFs 512 /dev/sda on the SAS drive I am having issues with, this will wipe that drive completely but leave the other drives intact, correct?

If the command listed below fails, at least you will know where the issue is, but I'm pretty sure you will be successful. First, you need to make sure you format the correct hard drive. Do you know which one you need to fix?

Running:

Code:

# sg_format -v -F -s 512 /dev/sda

This will properly format that specific disk (it will not touch other disks), which is exactly what you want. Obviously, you need to offline the disk, as explained in the OP, prior to formatting. SCALE will take care of the resilvering for you afterwards. You just need to make sure you format the right disk. Is it part of a raidz2 array? Having a raidz1 array is like playing with fire: you can lose all data if another disk fails during the resilvering of your broken disk.
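One way to double-check that a device is not an active pool member before formatting it, as a rough sketch only: save the `lsblk` listing for the disk and the `zpool status` output to files, then see whether any partition name or PARTUUID of that disk shows up in the pool listing. The function name `pool_lines_for_disk` and the two temporary file paths are hypothetical, and the sketch assumes `zpool status` names vdevs either by partition (sdb3) or by PARTUUID.

```shell
#!/usr/bin/env bash
# pool_lines_for_disk LSBLK_FILE ZPOOL_FILE
#   LSBLK_FILE: saved output of `lsblk -ln -o NAME,PARTUUID /dev/sdX`
#   ZPOOL_FILE: saved output of `zpool status`
# Prints "<vdev> <state>" for every zpool status row whose first column is a
# partition name or PARTUUID belonging to the disk. No output means no pool
# references the disk.
pool_lines_for_disk() {
    local lsblk_file="$1" zpool_file="$2"
    awk 'NF == 0 { next }                                   # skip blank lines
         NR == FNR { ids[$1]; if (NF > 1) ids[$2]; next }   # first file: collect names and PARTUUIDs
         ($1 in ids) { print $1, $2 }                       # second file: report matching vdev rows
        ' "$lsblk_file" "$zpool_file"
}

# On a live system (file paths are arbitrary):
#   lsblk -ln -o NAME,PARTUUID /dev/sda > /tmp/lsblk.txt
#   zpool status > /tmp/zpool.txt
#   pool_lines_for_disk /tmp/lsblk.txt /tmp/zpool.txt
```

If this prints a row with state ONLINE, the disk is still in use and should be taken offline first.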

> do you know which one you need to fix?

According to TrueNAS it is disk sda, as this is the only disk I am not able to use and it is not a part of the array.

> Is it part of raidz2 array?

Currently running a RAIDZ3 with one drive reporting as failed (the drive in question).

Is there a better way of determining which drive I need to format, or should I go by the drive that is not in the pool?

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

> currently running a Raid Z3 with one drive reporting as failed (the drive in question).

Great stuff, you care about your data. The easiest way to see all relations between your pool disks and actual system disks is by running:

Code:

# zpool status
# lsblk -o NAME,PARTUUID,PATH,FSTYPE

This will give you the list of pool disk PARTUUIDs, which you can cross-check against the list of disk PARTUUIDs seen by Linux. If the disk is not part of the pool, it will only show up in the lsblk command output. This way, you'll know for sure the correct /dev/sdX name.

> Obviously, you need to offline the disk, like explained into OP, prior formatting

How do I offline the disk? When I go into the pool, all I see for that disk is "Details for 3869088571791395513, disk is unavailable". It then only gives me the option to replace, with no option to offline the drive.

Am I also going to need to run this for every disk? E.g. format one, let it resilver, and repeat for all 12 drives?

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

> When I go into the pool all I see for that disk is Details for 3869088571791395513, disk is unavailable. Then it only gives me the option to replace, with no option to offline the drive.

Looks like you already ran the formatting command and your disk is okay now; see the instructions posted by @bonfire62. Ping him here in the thread if you have any questions related to his procedure.

> Am I also going to need to run this for every disk?

Definitely not. I mentioned in an earlier post that you only format the affected disks. If Bluefin does not report errors, you are good.

> Looks like you already ran the formatting command and your disk is okay now, see the instructions posted by @bonfire62. Ping him here in the thread, if you have any questions related to his procedure.
>
> Definitely not, I mentioned in an earlier post that you only format the affected disks. If Bluefin does not report errors, you are good.

Cool, thanks for the help. I will give it a shot and see how it goes.

mattyv316

Dabbler

- Joined

- Sep 13, 2021

- Messages

- 27

Hi @Daisuke. I have read through this thread as I am getting the same DIF unsupported error. My scenario is a little different. My 2 drives throwing this error are 2 mirrored boot drives with multiple partitions and I am wondering if there is anything I need to do different.

I am not seeing how to take a disk offline for the boot-pool. Here are the results of the commands from the first post.

Message: Disk(s): sdc, sdb are formatted with Data Integrity Feature (DIF) which is unsupported.

# sg_scan -i /dev/sdb

Code:

/dev/sdb: scsi0 channel=0 id=12 lun=0 [em]

HGST HSCAC2DA4SUN400G A29A [rmb=0 cmdq=1 pqual=0 pdev=0x0]

# sg_readcap -l /dev/sdb

Code:

Read Capacity results:
   Protection: prot_en=1, p_type=0, p_i_exponent=0 [type 1 protection]
   Logical block provisioning: lbpme=1, lbprz=1
   Last LBA=781422767 (0x2e9390af), Number of logical blocks=781422768
   Logical block length=512 bytes
   Logical blocks per physical block exponent=3 [so physical block length=4096 bytes]
   Lowest aligned LBA=0
Hence:
   Device size: 400088457216 bytes, 381554.1 MiB, 400.09 GB

# zpool status -v boot-pool

Code:

pool: boot-pool

state: ONLINE

status: Some supported and requested features are not enabled on the pool.

The pool can still be used, but some features are unavailable.

action: Enable all features using 'zpool upgrade'. Once this is done,

the pool may no longer be accessible by software that does not support

the features. See zpool-features(7) for details.

scan: scrub repaired 0B in 00:00:40 with 0 errors on Wed Dec 14 03:45:42 2022

config:

NAME STATE READ WRITE CKSUM

boot-pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

sdb3 ONLINE 0 0 0

sdc3 ONLINE 0 0 0

errors: No known data errors

No results from # grep --color 'sector size' /var/log/messages

# lsblk -o NAME,PARTUUID,PATH,FSTYPE /dev/sdb

Code:

NAME   PARTUUID                             PATH      FSTYPE
sdb                                         /dev/sdb
├─sdb1 88647042-9740-46e7-b48e-ea65e4d4b372 /dev/sdb1
├─sdb2 61de13cc-8960-4a38-8622-d674fe442d99 /dev/sdb2 vfat
├─sdb3 ca179f5a-2cf6-47b1-a880-809f69def2a6 /dev/sdb3 zfs_member
└─sdb4 9f04c168-0750-9948-94f5-48f9feaa7119 /dev/sdb4 zfs_member

# sg_map

Code:

/dev/sg0
/dev/sg1
/dev/sg2 /dev/sdai
/dev/sg3 /dev/sds
/dev/sg4 /dev/sdak
/dev/sg5 /dev/sdr
/dev/sg6 /dev/sdv
/dev/sg7 /dev/sdab
/dev/sg8 /dev/sdad
/dev/sg9 /dev/sdy
/dev/sg10 /dev/sdw
/dev/sg11 /dev/sdaa
/dev/sg12 /dev/sdac
/dev/sg13 /dev/sdaj
/dev/sg14 /dev/sdb *
/dev/sg15 /dev/sdc *
/dev/sg16
/dev/sg17 /dev/sdah
/dev/sg18 /dev/sdx
/dev/sg19 /dev/sdh
/dev/sg20 /dev/sdz
/dev/sg21 /dev/sdal
/dev/sg22 /dev/sdk
/dev/sg23 /dev/sdu
/dev/sg24 /dev/sdae
/dev/sg25 /dev/sdag
/dev/sg26 /dev/sdaf
/dev/sg27 /dev/sda
/dev/sg28 /dev/sdd
/dev/sg29 /dev/sde
/dev/sg30 /dev/sdj
/dev/sg31 /dev/sdf
/dev/sg32 /dev/sdp
/dev/sg33 /dev/sdo
/dev/sg34 /dev/sdq
/dev/sg35 /dev/sdl
/dev/sg36 /dev/sdi
/dev/sg37 /dev/sdg
/dev/sg38 /dev/sdm
/dev/sg39 /dev/sdt
/dev/sg40 /dev/sdn
/dev/sg41

Any guidance you can provide is really appreciated. I don't want to start formatting boot-pool drives without being completely sure of what to do.

Thanks

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

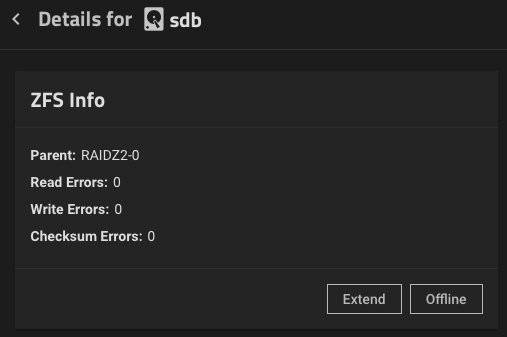

Easy fix @mattyv316. First, take the disk offline in your pool:

Then run the formatting procedure for the affected

Let us know how it went and please post UI screenshots, before and after.

For other people, I know this looks a little scary but you can confidently run the

Then run the formatting procedure for the affected

/dev/sdb disk. I strongly recommend you to use tmux, as detailed into OP. If you lose ssh connectivity, the interrupted format process might affect your disk, it takes several hours to format the disk. Unless you run the command directly from Scale console, which is the safest way.Code:

# time sg_format -v -F /dev/sdb

Let us know how it went and please post UI screenshots, before and after.

For other people, I know this looks a little scary but you can confidently run the

sg_format command as listed into Formatting Procedure section, after you did your troubleshooting. If the disk is not repairable, the format command will tell you where is the issue. Also, only the commands listed into Formatting Procedure section are relevant.
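As a rough illustration of the tmux advice above (not the exact steps from the OP, which are not reproduced in this thread), the sketch below starts a detached tmux session and types the format command into it, so the job keeps running if SSH drops. The helper name `run_in_tmux`, the `DRY_RUN` switch, and the session name `sgformat` are assumptions made for this example.

```shell
#!/usr/bin/env bash
# run_in_tmux SESSION COMMAND...
# Creates a detached tmux session and sends COMMAND to its shell, so the
# shell (and the command output) stays around after the command finishes.
# With DRY_RUN=1 the tmux calls are only printed, nothing is executed.
run_in_tmux() {
    local session="$1"
    shift
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf 'tmux new-session -d -s %s\n' "$session"
        printf "tmux send-keys -t %s '%s' Enter\n" "$session" "$*"
    else
        tmux new-session -d -s "$session"
        tmux send-keys -t "$session" "$*" Enter
    fi
}

# Preview first, then run for real once the device name is confirmed:
#   DRY_RUN=1 run_in_tmux sgformat time sg_format -v -F /dev/sdb
#   run_in_tmux sgformat time sg_format -v -F /dev/sdb
#   tmux attach-session -t sgformat   # watch progress; detach with Ctrl-b d
```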

mattyv316

Dabbler

- Joined

- Sep 13, 2021

- Messages

- 27

> Easy fix @mattyv316. First, take the disk offline in your pool:
>
> View attachment 61244
>
> Then run the formatting procedure for the affected /dev/sdb disk. I strongly recommend you to use tmux, as detailed into OP. If you lose ssh connectivity, the interrupted format process might affect your disk, it takes several hours to format the disk. Unless you run the command directly from Scale console, which is the safest way.
>
> Code:
>
> # time sg_format -vFf 0 -s 512 /dev/sdb
>
> Let us know how it went and please post UI screenshots, before and after.
>
> For other people, I know this looks a little scary but you can confidently run the sg_format command as listed into Formatting Procedure section, after you did your troubleshooting. If the disk is not repairable, the format command will tell you where is the issue.

Thank you for the quick reply. I will give this a shot tomorrow.

mattyv316

Dabbler

- Joined

- Sep 13, 2021

- Messages

- 27

> Easy fix @mattyv316. First, take the disk offline in your pool:
>
> View attachment 61244
>
> Then run the formatting procedure for the affected /dev/sdb disk. I strongly recommend you to use tmux, as detailed into OP. If you lose ssh connectivity, the interrupted format process might affect your disk, it takes several hours to format the disk. Unless you run the command directly from Scale console, which is the safest way.
>
> Code:
>
> # time sg_format -vFf 0 -s 512 /dev/sdb
>
> Let us know how it went and please post UI screenshots, before and after.
>
> For other people, I know this looks a little scary but you can confidently run the sg_format command as listed into Formatting Procedure section, after you did your troubleshooting. If the disk is not repairable, the format command will tell you where is the issue. Also, only the commands listed into Formatting Procedure section are relevant.

I attempted to format the one /dev/sdb drive, so I marked it offline, then ran sg_format, but not sure it did anything. It says it only took 1 minute and 15 seconds. It wasn't running long at all

Code:

# time sg_format -vFf 0 -s 512 /dev/sdb

HGST HSCAC2DA4SUN400G A29A peripheral_type: disk [0x0]

PROTECT=1

<< supports protection information>>

Unit serial number: 001517JQWN8A 0QVBWN8A

LU name: 5000cca04e159f90

mode sense(10) cdb: [5a 00 01 00 00 00 00 00 fc 00]

Mode Sense (block descriptor) data, prior to changes:

Number of blocks=781422768 [0x2e9390b0]

Block size=512 [0x200]

A FORMAT UNIT will commence in 15 seconds

ALL data on /dev/sdb will be DESTROYED

Press control-C to abort

A FORMAT UNIT will commence in 10 seconds

ALL data on /dev/sdb will be DESTROYED

Press control-C to abort

A FORMAT UNIT will commence in 5 seconds

ALL data on /dev/sdb will be DESTROYED

Press control-C to abort

Format unit cdb: [04 18 00 00 00 00]

Format unit has started

FORMAT UNIT Complete

sg_format -vFf 0 -s 512 /dev/sdb 0.00s user 0.00s system 0% cpu 1:15.00 total

zpool status now looks like this:

Code:

# zpool status -v boot-pool

pool: boot-pool

state: DEGRADED

status: One or more devices are faulted in response to persistent errors.

Sufficient replicas exist for the pool to continue functioning in a

degraded state.

action: Replace the faulted device, or use 'zpool clear' to mark the device

repaired.

scan: scrub repaired 0B in 00:00:40 with 0 errors on Wed Dec 14 03:45:42 2022

config:

NAME STATE READ WRITE CKSUM

boot-pool DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

sdb3 FAULTED 3 262 0 too many errors

sdc3 ONLINE 0 0 0

errors: No known data errors

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

> I attempted to format the one /dev/sdb drive

Did you check the sg_readcap output after the format? It is the only way to see if the disk is fixed. These steps are detailed in the OP, so there is no need to wait for instructions on how to check your disk.

If the disk is not fine, please post the command output here. Maybe sg_format does not like to run both actions together. If that's the case, I'll update the guide.

If the disk is fine, reboot the server, then check whether the pool is resilvering with zpool status. From there, treat the issue as a degraded pool; you can start your own thread for that. The goal is to limit this thread to disk formatting issues only.
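The post-format check can be scripted along these lines. This is only a sketch: the helper name `check_readcap` is invented here, and it assumes the `sg_readcap -l` output contains `prot_en=` and `Logical block length=` fields in the form shown earlier in this thread.

```shell
#!/usr/bin/env bash
# check_readcap
# Reads `sg_readcap -l /dev/sdX` output on stdin, prints the protection flag
# and logical block length it found, and returns 0 only when protection is
# disabled (prot_en=0) and the logical block length is 512 bytes.
check_readcap() {
    local text prot len
    text=$(cat)
    prot=$(printf '%s\n' "$text" | sed -n 's/.*prot_en=\([0-9]*\).*/\1/p' | head -n 1)
    len=$(printf '%s\n' "$text" | sed -n 's/.*Logical block length=\([0-9]*\) bytes.*/\1/p' | head -n 1)
    echo "prot_en=$prot block_length=$len"
    [ "$prot" = "0" ] && [ "$len" = "512" ]
}

# On a live system:
#   sg_readcap -l /dev/sdb | check_readcap && echo "disk looks fine"
```

Fed the pre-format output posted above (prot_en=1, 512-byte blocks), the function reports the values and returns non-zero, which matches the DIF warning that disk triggered.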

mattyv316

Dabbler

- Joined

- Sep 13, 2021

- Messages

- 27

> Did you check the sg_readcap after format? Is the only way to see if the disk is fixed. These steps are detailed into OP, there is no need to wait for instructions how to check your disk.
>
> If the disk is not fine, please post the command output here. Maybe sg_format does not like to run both actions together. If that's the case, I'll update the guide.
>
> If the disk is fine, reboot the server, then check the pool if is resilvering with zpool status. From there treat the issue as a degraded pool, you can start your own thread for that. The goal is to limit this thread to disk formatting issues only.

I apologize for forgetting that step. sg_readcap showed no protection or problems. I was able to replace the drive in my pool, and I thank you for all the help and patience.

It does look like I will need to start another thread, as replacing the disk only worked for one of the partitions. The mirrored pair should contain my boot drive (boot-drive) and an additional storage partition (ssd-storage). I offlined, reformatted, and replaced the drive as part of the ssd-storage pool, since the UI doesn't seem to have an offline option on the boot pool status screen (it only gave the replace option).

Now my ssd-storage pool is fixed, but boot-pool is still degraded and there is no option for drives when I select replace. Not sure how to do this with a partitioned boot pool.

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

> I apologize for forgetting that step.

No worries, glad everything is fixed!

> Now, my ssd-storage pool is fixed, but boot-pool is still degraded and no option for drives when I select replace. Not sure how to do this with a partitioned boot pool.

I suggest starting your own thread for this issue. Ping me in that thread and I will help there.

Hey @Daisuke and @bonfire62

Followed the guide and your advice Daisuke, so far so good.

The drive has been resilvered and no more errors related to DIF have popped up.

Currently running a scrub task.

Since all 4 of the drives in my pool are affected, would there be any problem resilvering from them after formatting each one? Wondering if it would be better to just back up all the data, format all the drives, and start over with the pool. I suspect I may have 2 or 3 other pools with drives that have the same issue, but they are running on TrueNAS CORE, so I haven't seen the error yet.

Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

> Wondering if it would be better to just backup all the data, format all the drives, and start over with the pool.

If you want, you can destroy the pool and format all disks in one shot, with tmux. It is all about how long it takes to format a disk. It can take anywhere from a few minutes to a few hours. Honestly, I would start with one disk and see how long it takes. It is a pain to back up, destroy the pool, redo the pool, etc. Plus, during this time your SCALE server is not usable.
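If you do go the "all disks in one shot" route, one possible layout is a single tmux session with one window per disk. The sketch below only prints the commands it would run, so they can be reviewed before anything is destroyed; the function name `plan_parallel_format` and the session name are hypothetical, and the device list must come from your own troubleshooting.

```shell
#!/usr/bin/env bash
# plan_parallel_format SESSION DEVICE...
# Prints (does not execute) the tmux commands that would open one window per
# device inside a single session and start sg_format in each window.
plan_parallel_format() {
    local session="$1" dev name
    shift
    echo "tmux new-session -d -s $session"
    for dev in "$@"; do
        name=$(basename "$dev")
        echo "tmux new-window -t $session: -n $name"
        echo "tmux send-keys -t $session:$name 'time sg_format -v -F $dev' Enter"
    done
}

# Review the plan, then paste the lines you are happy with:
#   plan_parallel_format fmt /dev/sdb /dev/sdc
```

Printing instead of executing is a deliberate choice here, since a wrong device name in a format loop is not recoverable.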