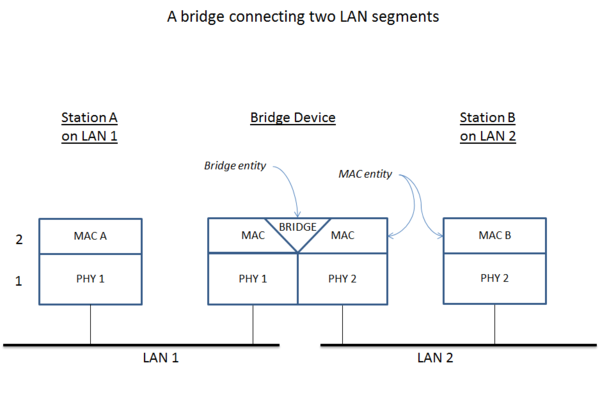

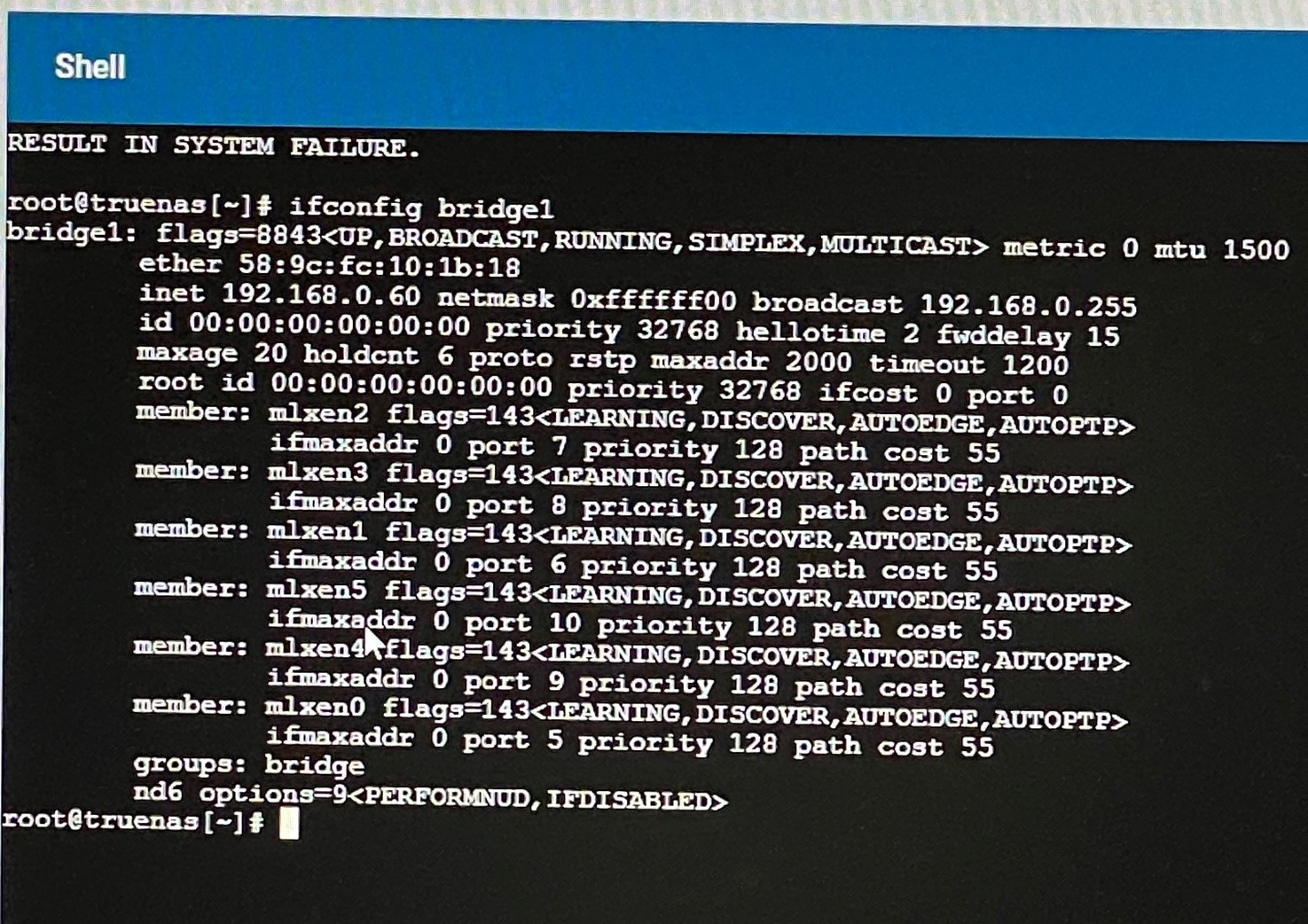

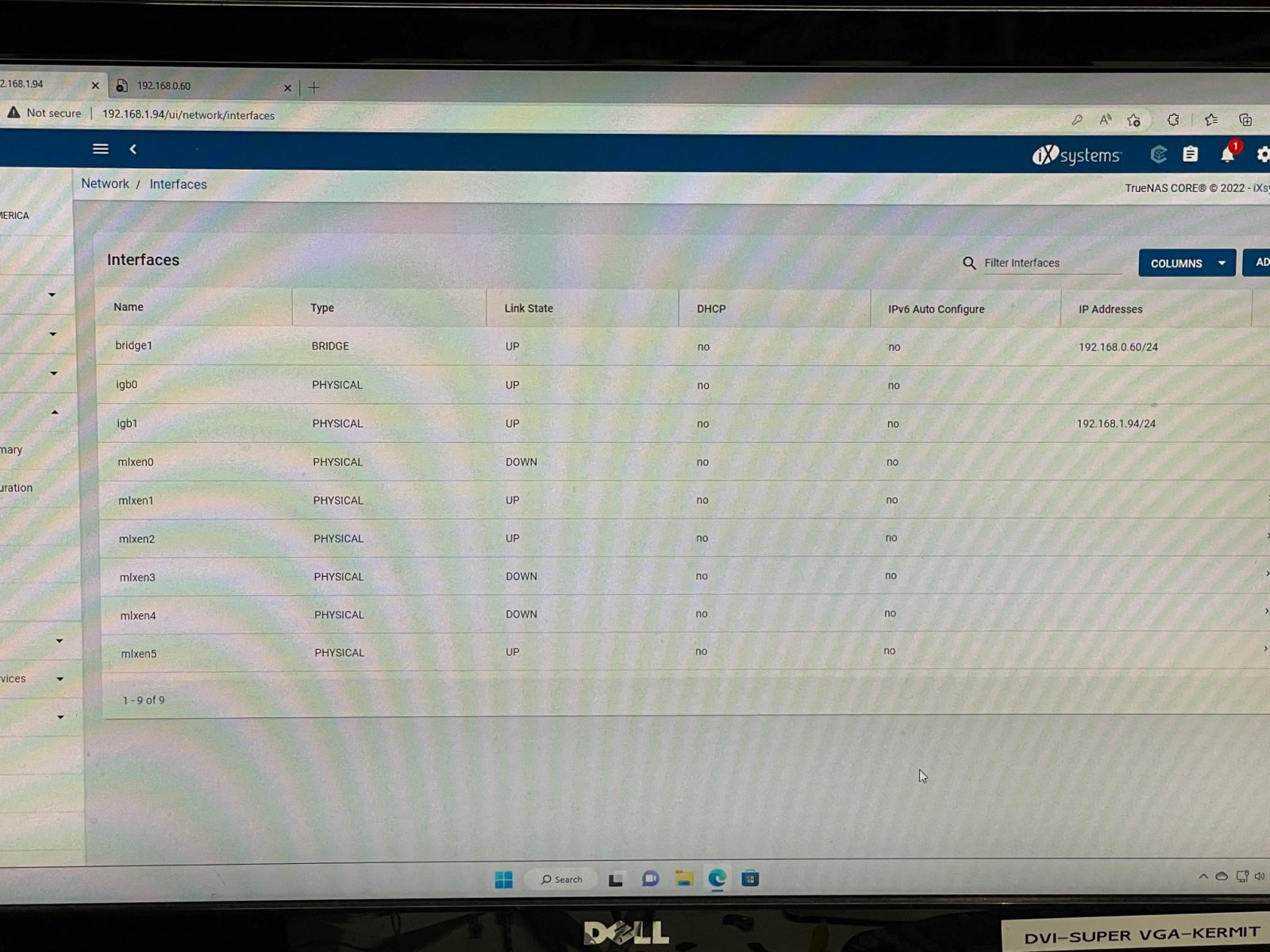

I could really use some help. I set up a bridge using the GUI. I have 3 Mellanox X2 dual-port cards. System B is connected to my switch with a fiber cable. System B is also connected to my server. That server has a Mellanox X3 dual-port card, with one port connected to an Aruba switch and the other to System B. System B performs perfectly when connected, but does not work after I create the bridge on System B. Attached is a copy of the GUI screen and the ifconfig output showing that the bridge is set up. I cannot ping the 192.160.0.60 address from any remote system. I can talk to the GUI on address 192.168.1.94 from a system on that subnet.

I always run into the same problem when configuring the bridge through the GUI. When the network interface GUI goes into test mode, the network reconfigures, and I cannot press the Save button because the GUI is no longer reachable on that address. I also cannot configure two interfaces on the same subnet. Instead, I connect to the GUI on the x.x.1.x subnet. That interface does not get reconfigured, so I can save the config, and the bridge is then persistent. I can configure the address I want through the 192.168.1.94 address. I have had this setup working in the past, but I reloaded the software and now I cannot get it to connect.

I am about ready to give up on setting up the bridge via the GUI and go back to a script or buy a darn switch. I would really like to get this set up reliably. I was hoping for some help.
