I have two FreeNAS units and am replicating from unit 1 (primary) to unit 2 (offsite backup), but I am only getting <1Gb/s of throughput on a 10Gb link. In all, I need to replicate multiple pools totalling ~150TB, so transfer performance is vital in order to keep up with the daily deltas. First, some specs:

Unit 1 (45 Drives Storinator):

FreeNAS version - 11.3-RC1

CPU - dual E5-2620 v4

Memory - 256GB

HBAs - 4 x LSI 9305 12Gb/s

Pool consists of 6 x 8TB Seagate IronWolf 7.2K drives in a RAIDZ2

10Gb Intel NIC

Unit 2:

FreeNAS version - 9.2.10

CPU - Intel® Xeon® Processor E5-2620 v4

Memory - 262GB

HBAs - Rocket HBA 750s

Pool consists of 10 x 4TB Hitachi 5.4K drives in a RAIDZ2

10Gb Intel NIC

Results:

iperf from unit 1 to unit 2: ~3.5GB using default iperf settings

`zfs send pool/snap | ssh 'ip' zfs recv -F Pool/dataset` yields 110MiB/s

During the transfer, CPU usage is ~2% on unit 1 and ~3% on unit 2. I have been digging into diskinfo, gstat, iostat, and numerous zpool commands to view performance. In gstat I found one drive in unit 2 sitting at 100% busy while the rest of the pool was nearly idle. After I replaced that drive, throughput went from 50MB/s to 100MB/s, but I am unable to get past that. I have tested with other pools on both sides, as I have plenty of drives to work with, but all yield the same result.
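To put those numbers in perspective, here is a rough back-of-the-envelope calculation. It is only a sketch under my own assumptions: decimal terabytes, the whole ~150TB sent as one continuous stream, and no compression or protocol overhead. The function names are just for illustration.

```python
# Rough replication timing. Assumptions (not measured): decimal TB,
# one continuous stream, no compression or protocol overhead.

SECONDS_PER_DAY = 86_400
MIB = 2**20  # bytes in one MiB


def mib_s_to_gbit_s(rate_mib_s: float) -> float:
    """Convert a rate in MiB/s to Gbit/s (decimal gigabits)."""
    return rate_mib_s * MIB * 8 / 1e9


def transfer_days(total_bytes: float, rate_bytes_per_s: float) -> float:
    """Days needed to move total_bytes at a constant rate."""
    return total_bytes / rate_bytes_per_s / SECONDS_PER_DAY


total = 150e12        # ~150TB to replicate
observed = 110 * MIB  # 110 MiB/s seen with zfs send | ssh | zfs recv
line_rate = 10e9 / 8  # theoretical 10 Gbit/s link, in bytes per second

print(f"observed rate: {mib_s_to_gbit_s(110):.2f} Gbit/s")
print(f"150TB at 110 MiB/s: {transfer_days(total, observed):.1f} days")
print(f"150TB at 10 Gbit/s: {transfer_days(total, line_rate):.1f} days")
```

Under those assumptions the initial sync alone would take on the order of two weeks at the current rate, versus under two days at full line rate, which is why I am chasing this.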

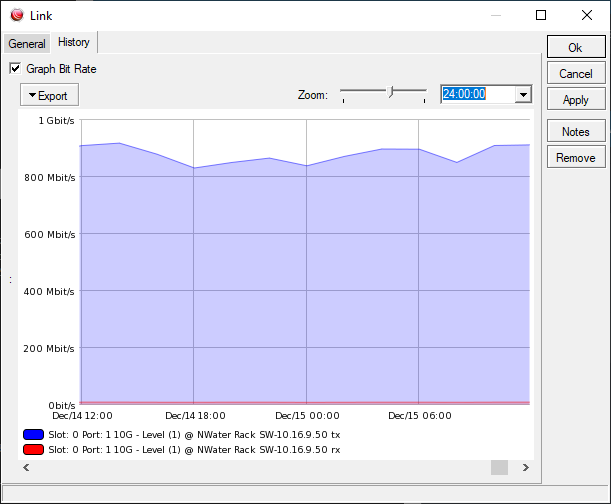

Network throughput:

I have been searching the forums over the past week but haven't yet worked out where to dig next. I have verified on the reporting pages that the transfer is using the 10Gb NICs rather than the 1Gb management ones. Any thoughts?
