Hello iX Community!

I searched for a while and couldn't find an exact answer to this question.

Devices:

I have two (2) identical 500TB NASs (built by 45drives). One is on our local network (source), and the other is in a remote office in another state (destination); we replicate our snapshots to it. (1G/1G on both ends)

Problem:

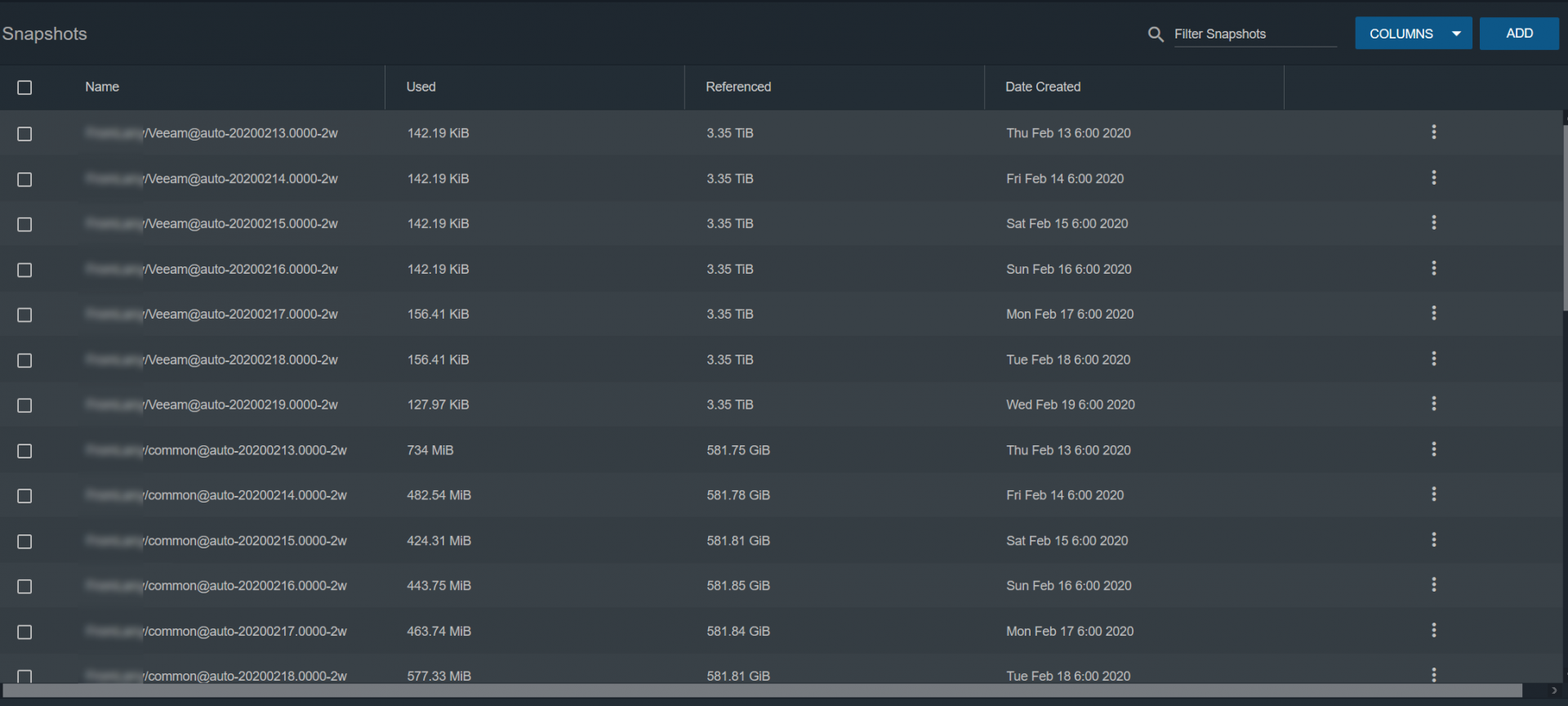

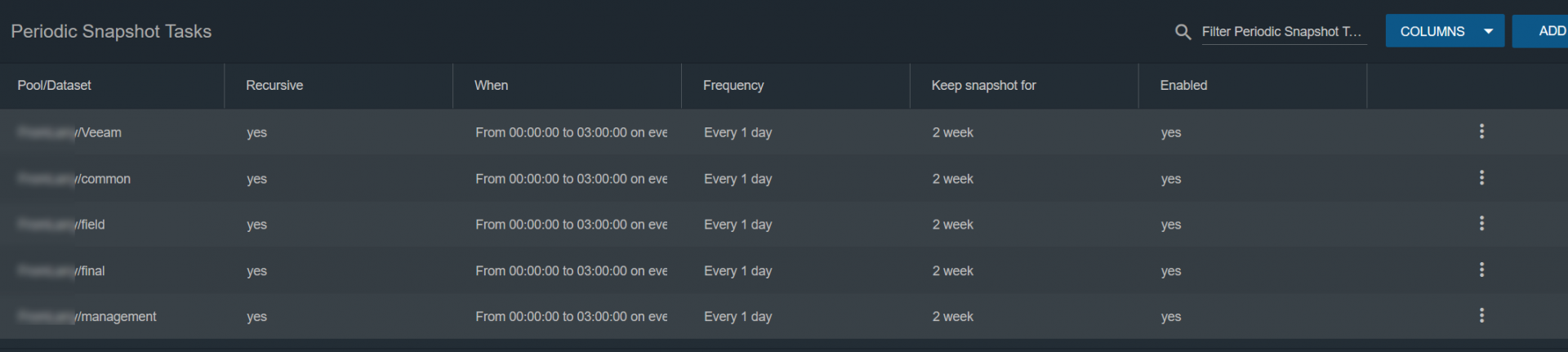

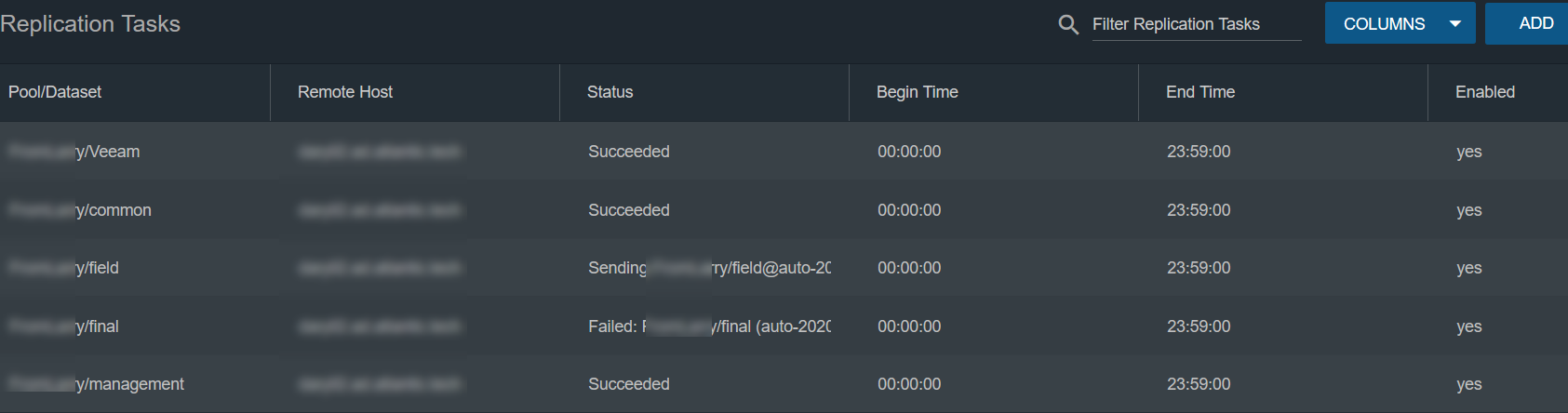

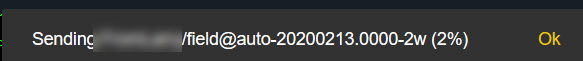

Last week, due to... reasons, I changed the snapshot retention policy on the source from 1 week to 2 weeks. Everything was fine until the last xxxxxxxxx-1w snapshot cycled out. When the task began replicating the xxxxxxxx-2w snapshot, it appeared to start replicating the entirety of each dataset again. This is extremely bad because it is 100+ TB going at ~15-20 Mbps. We deliberately seeded the initial datasets more than a year ago, when both NASs were in the same building and could transfer data over our 10G network.

The destination pools still appear to contain all of the data (as far as I can see).

Is the replication task writing all of the data from each dataset again?
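
In case it helps narrow this down, here is a rough sketch of one way to check whether the source and destination still share a snapshot for each dataset, since an incremental send needs a snapshot that exists on both sides. It assumes you have saved the output of `zfs list -H -t snapshot -o name` from each box as text. The pool and dataset names (`tank`, `backup`) and the snapshot names are hypothetical placeholders, not my real ones, and matching by name is only a proxy (comparing snapshot GUIDs would be stricter).

```python
from collections import defaultdict


def parse_snapshots(listing: str) -> dict[str, list[str]]:
    """Map each dataset to its snapshot names, in the order listed."""
    by_dataset = defaultdict(list)
    for line in listing.splitlines():
        line = line.strip()
        if "@" not in line:
            continue  # skip blank or non-snapshot lines
        dataset, snap = line.split("@", 1)
        by_dataset[dataset].append(snap)
    return dict(by_dataset)


def common_snapshots(source_listing: str, dest_listing: str,
                     source_root: str, dest_root: str) -> dict[str, list[str]]:
    """For every source dataset under source_root, list the snapshot names
    that also exist on the matching dataset under dest_root."""
    src = parse_snapshots(source_listing)
    dst = parse_snapshots(dest_listing)
    result = {}
    for dataset, snaps in src.items():
        if dataset != source_root and not dataset.startswith(source_root + "/"):
            continue  # dataset is outside the replicated tree
        relative = dataset[len(source_root):]
        dest_snaps = set(dst.get(dest_root + relative, []))
        result[dataset] = [s for s in snaps if s in dest_snaps]
    return result


# Hypothetical listings, standing in for real `zfs list` output.
source_listing = """\
tank/data@auto-2024-01-01_00-00-1w
tank/data@auto-2024-01-08_00-00-2w
tank/other@auto-2024-01-08_00-00-2w
"""
dest_listing = """\
backup/data@auto-2024-01-01_00-00-1w
backup/other@auto-2023-12-25_00-00-1w
"""

shared = common_snapshots(source_listing, dest_listing, "tank", "backup")
for dataset, snaps in shared.items():
    status = snaps[-1] if snaps else "NO COMMON SNAPSHOT"
    print(f"{dataset}: {status}")
```

A dataset that reports no common snapshot has nothing on the destination to base an incremental send on, which would be consistent with a full re-send of that dataset.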

Let me know if you need more data.

Screenshots (I blurred out company-specific naming):

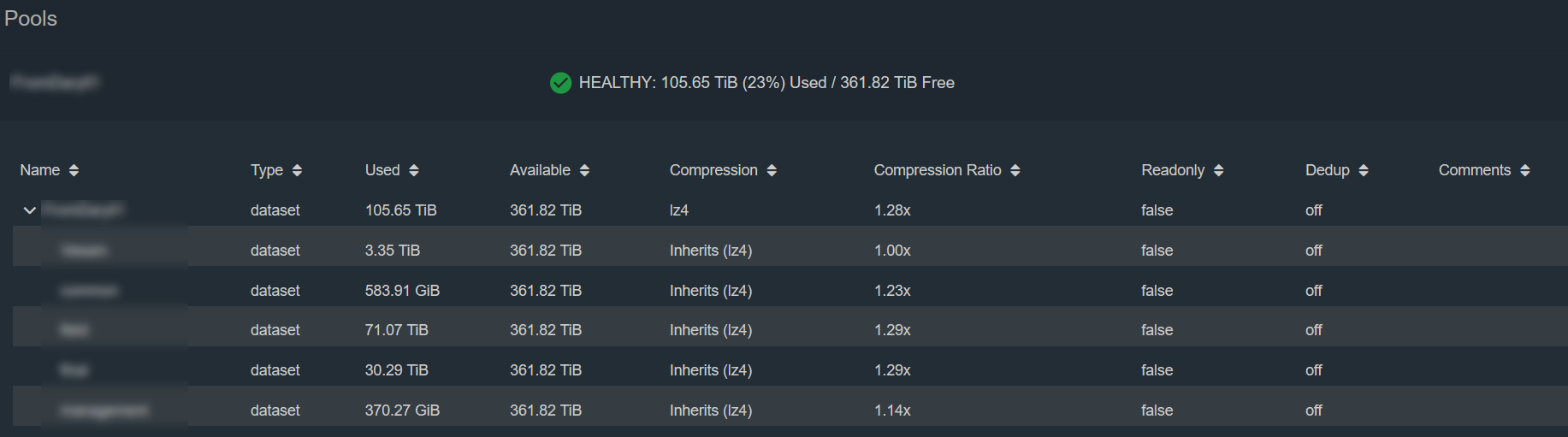

Source

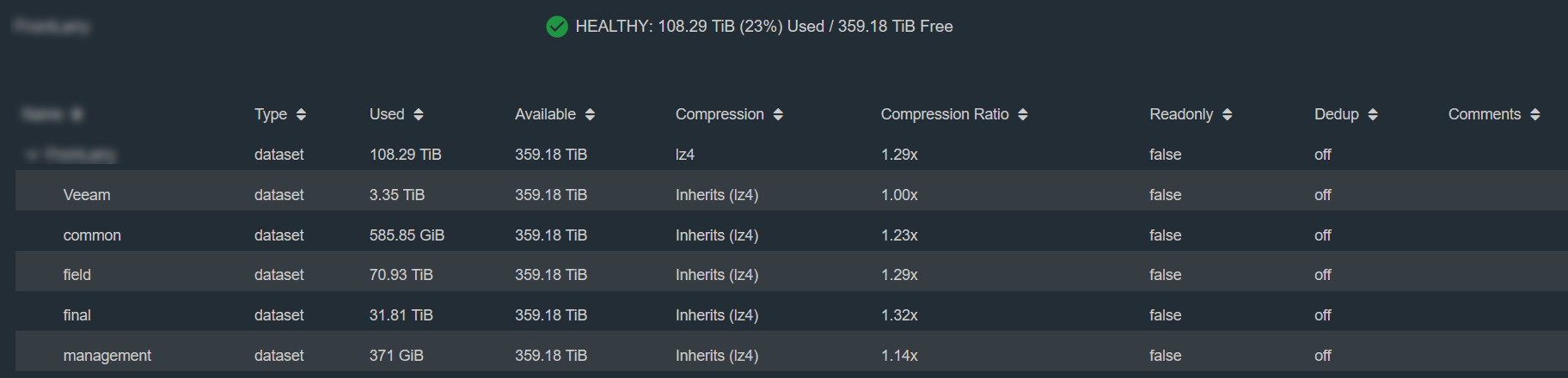

Pools

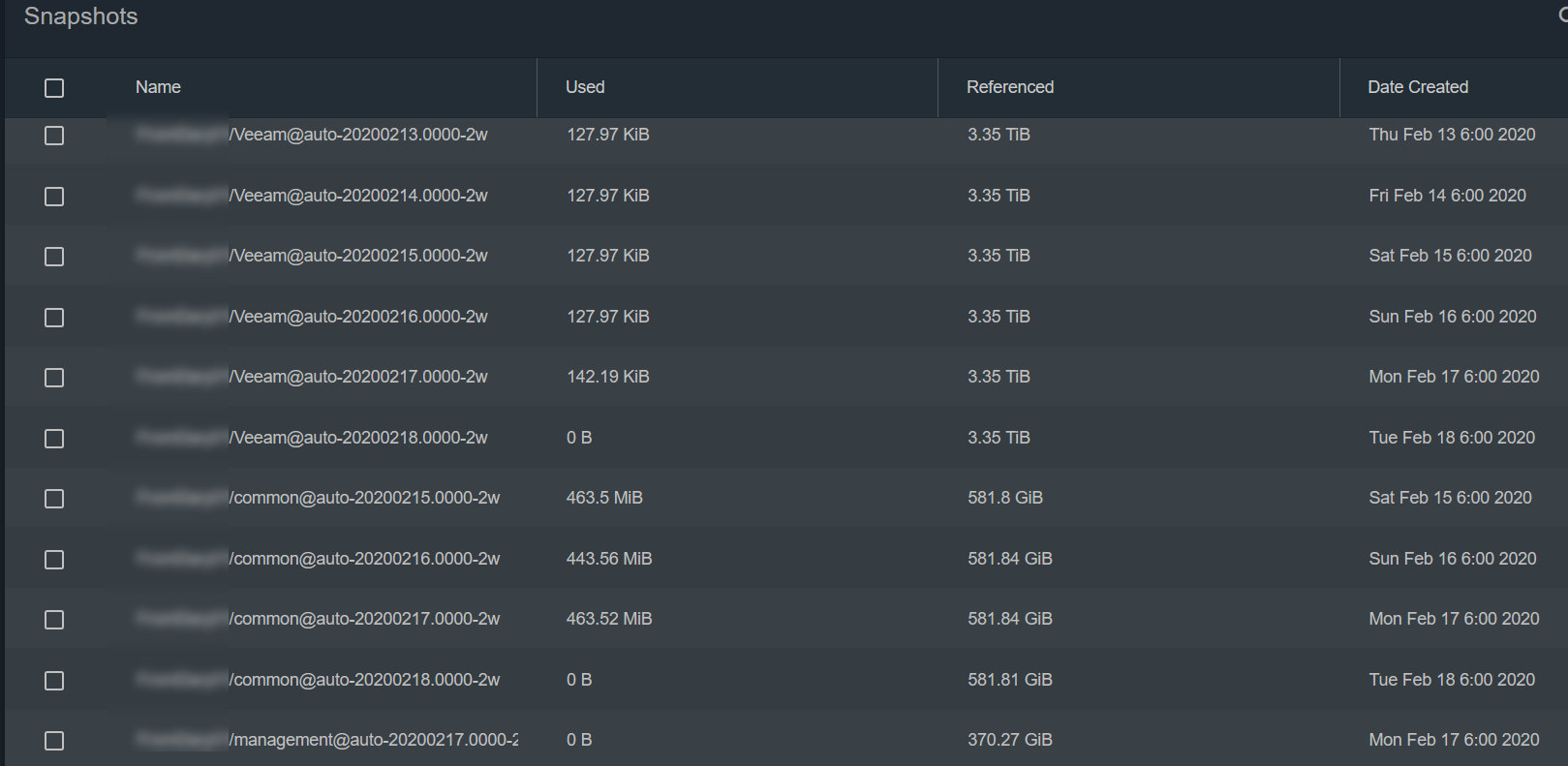

Destination
