archialsta

Dabbler

- Joined

- Oct 31, 2020

- Messages

- 12

Hi!

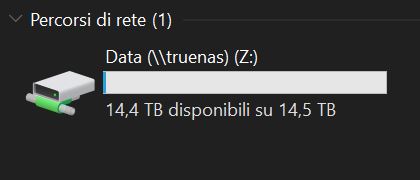

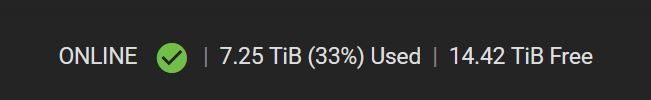

After upgrading from 12.0-U8.1 to 13, Windows Explorer no longer shows the used and free space on TrueNAS correctly. It reports only the free space, and shows that as the total capacity of the NAS. Even that figure is not updated in real time: I copied and deleted some large files, and the value displayed stayed almost the same.
