Hi everyone!

I switched from Synology to TrueNAS just a few weeks ago, so I am still learning. So far I have managed to get everything working (Apps, Ingress, certificates, ...), but there is one issue I cannot find a solution for. I have a Windows 10 virtual machine on my TrueNAS SCALE installation:

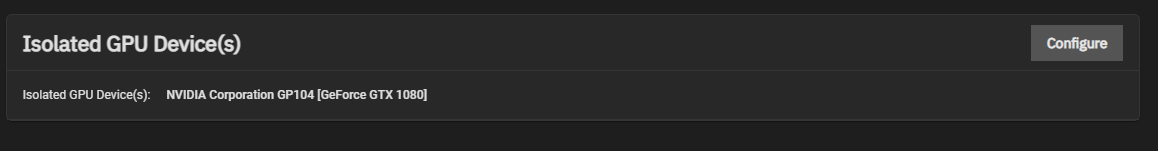

I have an ASRock Z390M-ITX/ac with an Intel i7-8700K and 2x 32 GB Crucial memory. The installation itself was not a problem: I have VT-x and VT-d enabled in the BIOS, and PCI passthrough is enabled for my ASUS ROG GTX 1080 (GPU isolated and set for passthrough).

The problem is that, sporadically (while gaming over Steam Link), the entire PC crashes. The screen simply goes black and the PC becomes unreachable: I cannot connect over RDP, Parsec, or TeamViewer, and it does not even respond to ping. Sometimes it happens after 2-3 minutes, sometimes not even after 6 hours. I assume it is caused by the PCI passthrough, for the following reasons:

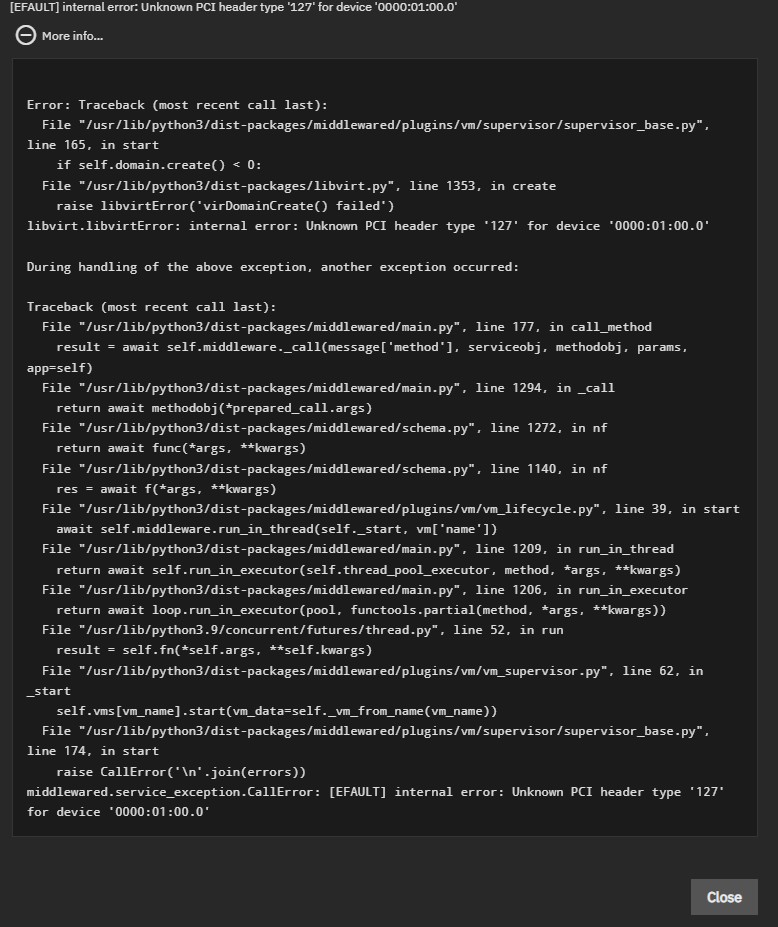

1. - after the crash, I cannot start the VM because I get the following error:

where of course the device 0000:01:00.0 is my Nvidia GPU.

2. If I restart the entire server after this error, the Nvidia GPU is not recognized at all and is not available for GPU isolation in the settings.

3. The only way to get it working again is to shut the server down completely (not restart) and then turn it on again.

Can anyone give me some advice? I read about similar problems with AMD GPUs, but this is an NVIDIA GTX card. Also, the problem does not occur when I restart or shut down the VM (I can restart it and turn it on and off several times with no problems); it only occurs while I am using it. Again, completely sporadic.

The only thing the crashes have in common is this output in the VM log:

2022-11-18T16:27:34.525794Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3

2022-11-18T16:27:34.545705Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3

2022-11-18T16:27:35.574698Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3

2022-11-18T16:27:35.575669Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3
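The four entries arrive in two bursts about one second apart. To check whether every crash leaves the same pattern, a small script can count and group these entries. This is a minimal Python sketch, assuming the VM log is available as plain text with lines formatted exactly like the ones above; the function names are hypothetical and not part of TrueNAS or QEMU.

```python
import re
from collections import Counter

# Hypothetical helper: matches vfio "stuck in D3" entries such as
# 2022-11-18T16:27:34.525794Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3
D3_PATTERN = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d+Z)\s+"
    r"qemu-system-x86_64: vfio: Unable to power on device, stuck in D3$"
)


def find_d3_errors(lines):
    """Return the timestamp string of every 'stuck in D3' entry, in log order."""
    hits = []
    for line in lines:
        match = D3_PATTERN.match(line.strip())
        if match:
            hits.append(match.group("ts"))
    return hits


def summarize_d3_errors(lines):
    """Count the entries and group them by second to expose bursts."""
    hits = find_d3_errors(lines)
    # The first 19 characters ("YYYY-MM-DDTHH:MM:SS") identify the second.
    per_second = Counter(ts[:19] for ts in hits)
    return {
        "count": len(hits),
        "first": hits[0] if hits else None,
        "last": hits[-1] if hits else None,
        "per_second": dict(per_second),
    }


if __name__ == "__main__":
    # Sample lines copied from the log above; blank lines are skipped
    # because they do not match the pattern.
    sample = """
2022-11-18T16:27:34.525794Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3

2022-11-18T16:27:34.545705Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3

2022-11-18T16:27:35.574698Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3

2022-11-18T16:27:35.575669Z qemu-system-x86_64: vfio: Unable to power on device, stuck in D3
""".splitlines()
    print(summarize_d3_errors(sample))
```

To run it against a saved copy of the log, pass an open file object instead of the sample list, e.g. `with open(path) as f: print(summarize_d3_errors(f))`, where `path` is wherever you saved the log.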

What I tried:

1. Changing the CPU mode from Host Passthrough to Host Model

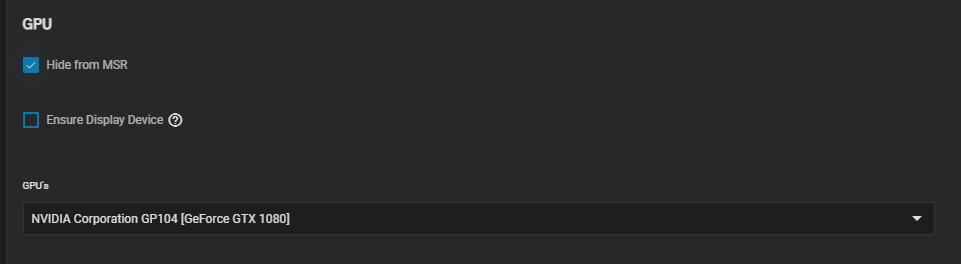

2. Enabling the "Hide from MSR" option for the GPU passthrough (I read that it sets the driver to KVM or something like that)

3. Enabling Above 4G Decoding in the BIOS

Can anyone please help? Any ideas or recommendations are very much appreciated. I apologize if this is a known issue; I tried searching here but was not successful. I read about people solving this issue by uploading the GPU BIOS, but I have no idea how to do that in TrueNAS SCALE. If you need any more information, just ask (or tell me how I can get it) and I will gladly provide it.

Thanks for any kind of help.
