johndanoob (Cadet) · Joined Feb 20, 2023 · Messages: 4

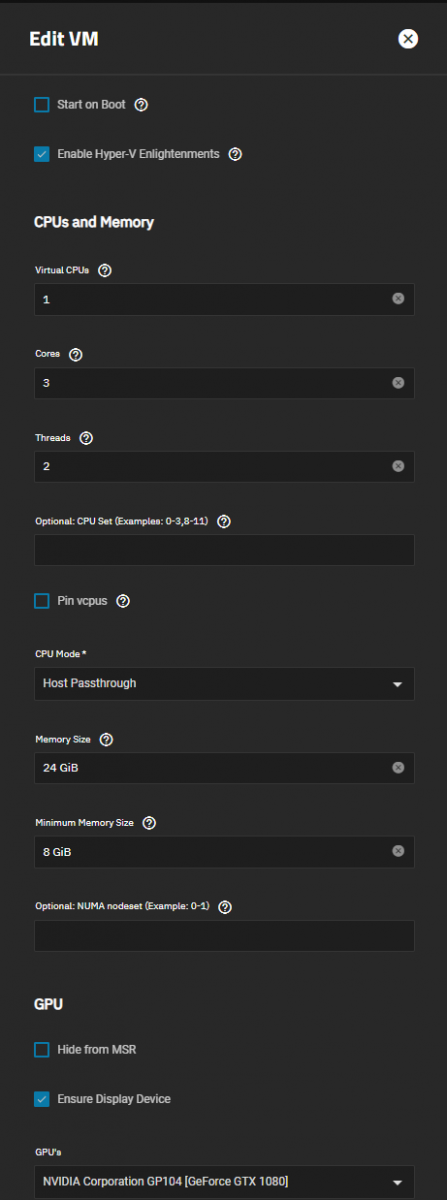

Hello all. I am trying one final time to get TrueNAS working on this box before I throw in the towel and try unRAID. I'm trying to set up a Windows 11 VM for use over Tailscale. The VM settings are below, and I believe I have everything set correctly in the BIOS (VT-d and so on).

Without GPU passthrough the VM works fine, but when I try to pass through the dedicated GPU (GTX 1080), it hangs indefinitely at the "please wait" spinning wheel until I refresh the UI page. I have tried isolating the GPU in question, pinning vCPUs, enabling and disabling Hyper-V enlightenments, and even completely reinstalling TrueNAS SCALE. Nothing makes a difference.

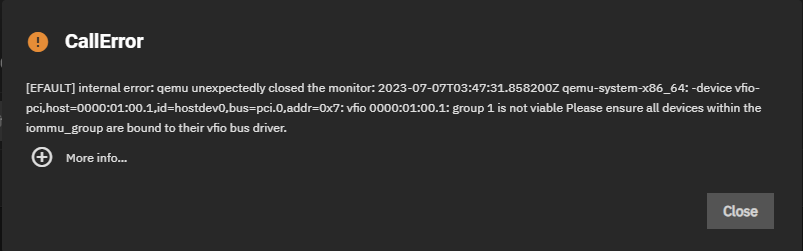

I recently got the error message below after creating a new VM and trying to add the GPU before installing the OS. Every other time there has been no error message at all; the VM just hangs or crashes. Attached is the log file for the VM that produced this error.

Sometimes it crashes the whole machine. Attached is a picture of the console on the box, taken when I tried to start a VM that had previously been configured with the GPU but no longer had a GPU enabled in its settings. I've found only one other thread with a similar issue, and it had no responses. I have absolutely no idea why this is happening, so any help would be greatly appreciated.

Code:

```
Error: Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 172, in start
    if self.domain.create() < 0:
  File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create
    raise libvirtError('virDomainCreate() failed')
libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2023-07-07T03:47:31.858200Z qemu-system-x86_64: -device vfio-pci,host=0000:01:00.1,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:01:00.1: group 1 is not viable
Please ensure all devices within the iommu_group are bound to their vfio bus driver.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 204, in call_method
    result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1344, in _call
    return await methodobj(*prepared_call.args)
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1378, in nf
    return await func(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1246, in nf
    res = await f(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 46, in start
    await self.middleware.run_in_thread(self._start, vm['name'])
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1261, in run_in_thread
    return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1258, in run_in_executor
    return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
  File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run
    result = self.fn(*self.args, **self.kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 68, in _start
    self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name))
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 181, in start
    raise CallError('\n'.join(errors))
middlewared.service_exception.CallError: [EFAULT] internal error: qemu unexpectedly closed the monitor: 2023-07-07T03:47:31.858200Z qemu-system-x86_64: -device vfio-pci,host=0000:01:00.1,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:01:00.1: group 1 is not viable
Please ensure all devices within the iommu_group are bound to their vfio bus driver.
```
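
For anyone wanting to check what the "group 1 is not viable" message is complaining about, here is a minimal sketch that lists every device in an IOMMU group together with the kernel driver it is currently bound to. It assumes the standard Linux sysfs layout (`/sys/kernel/iommu_groups/<group>/devices/<pci-address>/driver`). The function names and the `sysfs_root` parameter are made up for illustration and are not part of TrueNAS, libvirt, or QEMU.

```python
import os
from pathlib import Path


def list_iommu_group(group, sysfs_root="/sys"):
    """Return {pci_address: driver_name or None} for each device in an IOMMU group.

    Assumes the usual sysfs layout; returns an empty dict if the group
    directory does not exist (for example, when the IOMMU is disabled).
    """
    devices_dir = Path(sysfs_root) / "kernel" / "iommu_groups" / str(group) / "devices"
    if not devices_dir.is_dir():
        return {}
    result = {}
    for dev in sorted(devices_dir.iterdir()):
        driver_link = dev / "driver"
        # 'driver' is a symlink to the bound driver; it is absent when
        # no driver is bound to the device.
        if driver_link.is_symlink():
            result[dev.name] = os.path.basename(os.readlink(driver_link))
        else:
            result[dev.name] = None
    return result


def non_vfio_devices(group, sysfs_root="/sys"):
    """Return addresses of devices bound to some driver other than vfio-pci."""
    drivers = list_iommu_group(group, sysfs_root)
    return [addr for addr, drv in drivers.items() if drv not in (None, "vfio-pci")]
```

Running `list_iommu_group(1)` on the host shows the drivers for the group named in the error, and `non_vfio_devices(1)` narrows that down to devices still held by another driver. Not every entry in that list is necessarily a problem (PCI bridges in the group may legitimately stay on their own driver), so treat the output as a starting point rather than a verdict.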