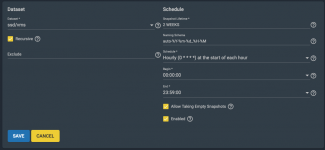

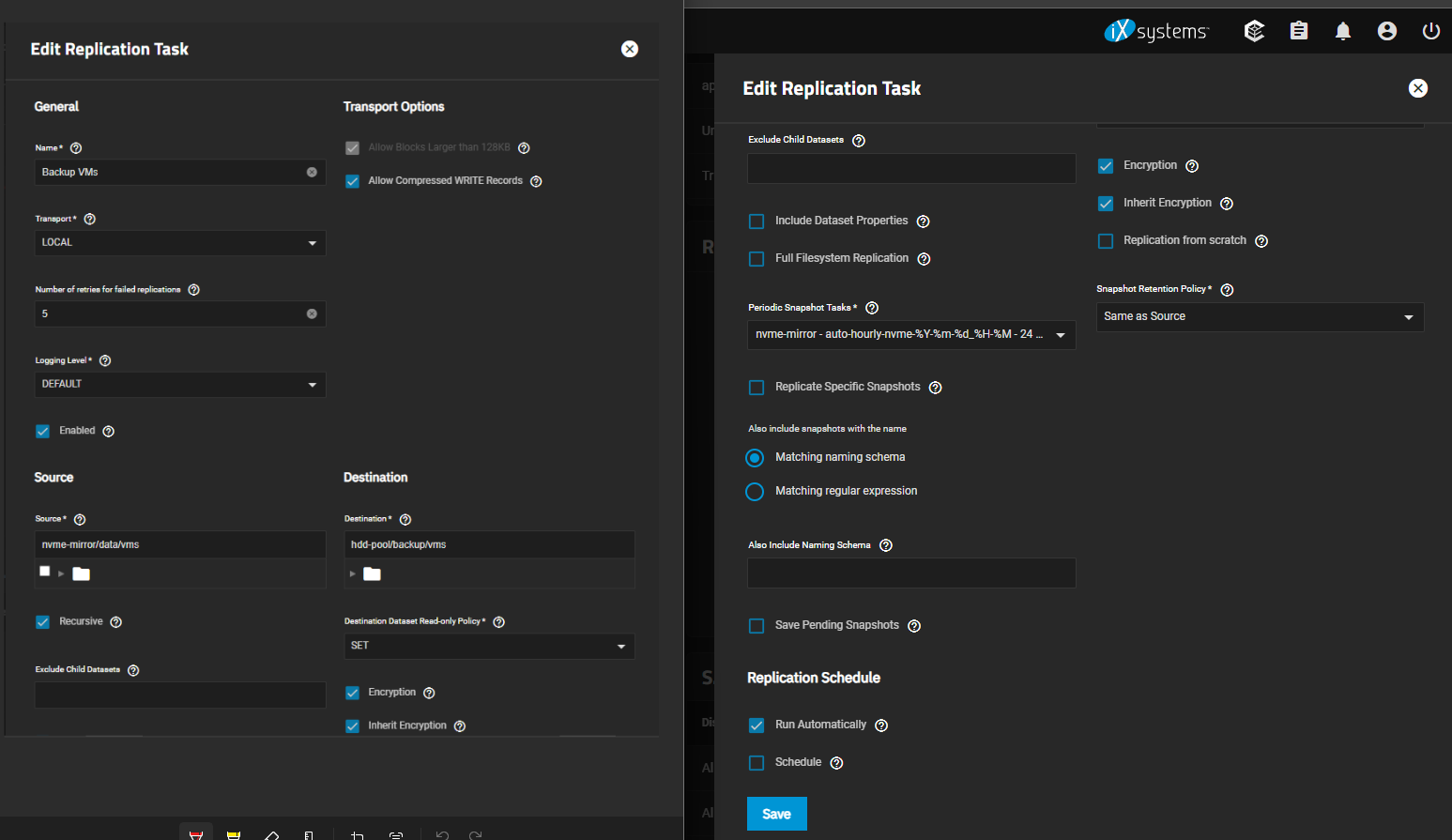

I'm replicating a dataset from my [nvme-mirror]-pool (source) to my [hdd-pool] (target). However, I'm having trouble managing retention and secondary snapshot schedules on the target pool. Basically, my recursive snapshot schedules for the target dataset are completely overwritten and/or removed by the replication.

I've experimented with all of the options for retention policies, and with staggering the snapshot schedules.

My goal is to have the replication send one dataset per schedule to a target dataset, and then have the snapshot schedules on the target pool take over retention.

Hoping you all can enlighten me on where I'm getting things confused.
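One mechanism that would produce the symptom described above (target-side snapshots vanishing after each run) is a receive with forced rollback: per the `zfs recv -F` documentation, when an incremental replication stream is received with `-F`, snapshots on the target that do not exist on the sending side are destroyed. Whether this replication task actually receives with `-F` is an assumption here, not something the screenshots confirm. The sketch below is a simplified model of that rule only, and the snapshot names are hypothetical.

```python
# Simplified model (assumption): a forced incremental receive (`zfs recv -F`)
# destroys target snapshots that do not exist on the sending side.
# Snapshot names below are hypothetical examples.

def snapshots_after_forced_receive(source_snaps, target_snaps):
    """Return the target's snapshot list after a forced incremental receive.

    Target-only snapshots (e.g. ones created by a local snapshot task on the
    target pool) are dropped; snapshots coming from the source are kept.
    """
    source_set = set(source_snaps)
    # Keep target snapshots that also exist on the source, in their original order.
    kept = [s for s in target_snaps if s in source_set]
    # Append snapshots that arrive with the stream and were not yet on the target.
    kept_set = set(kept)
    kept += [s for s in source_snaps if s not in kept_set]
    return kept


if __name__ == "__main__":
    source = ["auto-2023-01-01_00-00", "auto-2023-01-02_00-00"]
    target = ["auto-2023-01-01_00-00", "auto-hourly-hdd-2023-01-01_01-00"]
    # The target-only "auto-hourly-hdd-..." snapshot does not survive.
    print(snapshots_after_forced_receive(source, target))
```

Under this model, any snapshot schedule that writes directly into the replication target is working against the replication task, which would match the "overwritten and/or removed" behaviour.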

Edit: Better wording, and hopefully a bit clearer about what I mean

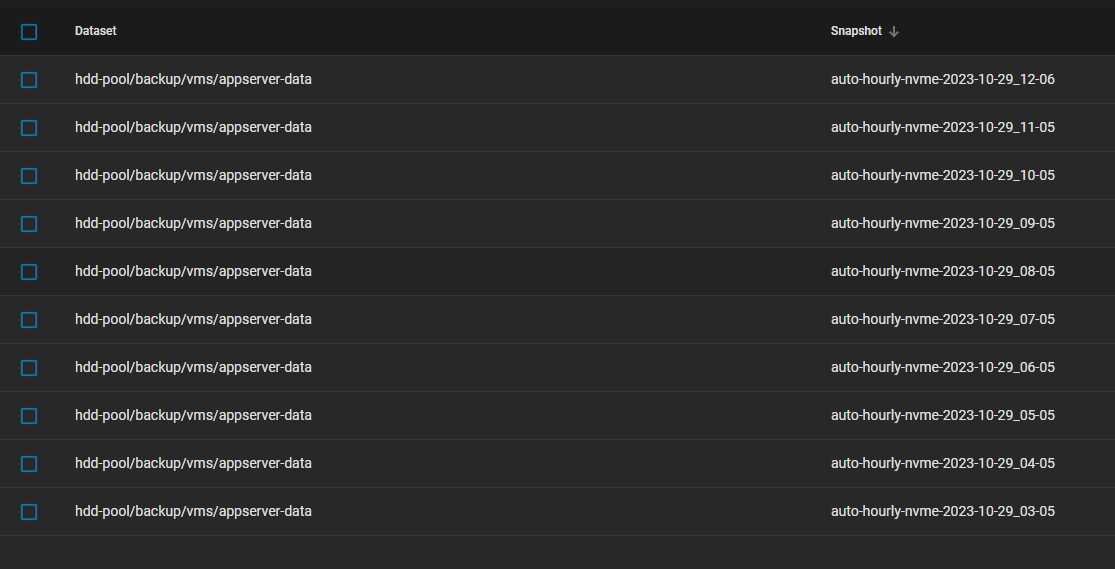

In here I would also expect to see snapshots named "auto-hourly-hdd-2023-XX-YY" alongside the replicated ones.
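To check from the shell which snapshots actually exist on the target, the output of `zfs list -H -t snapshot -o name -r <dataset>` can be grouped by naming prefix. The helper below is a hypothetical sketch; the dataset name `hdd-pool/data` and the prefixes are illustrative assumptions, not taken from the screenshots.

```python
# Hypothetical helper: group snapshot names from
# `zfs list -H -t snapshot -o name -r <dataset>` output by naming prefix.
# Dataset name and prefixes are illustrative assumptions.

def group_snapshots(zfs_list_output, prefixes):
    """Map each prefix to the snapshot names that start with it.

    Prefixes are tried in order, so list more specific ones first
    ("auto-hourly-hdd-" before "auto-"). Unmatched names go to "other".
    """
    groups = {p: [] for p in prefixes}
    groups["other"] = []
    for line in zfs_list_output.splitlines():
        line = line.strip()
        if "@" not in line:
            continue  # skip blank lines or anything that is not a snapshot
        # Each line looks like "pool/dataset@snapname"; keep the snapshot part.
        snap = line.partition("@")[2]
        for p in prefixes:
            if snap.startswith(p):
                groups[p].append(snap)
                break
        else:
            groups["other"].append(snap)
    return groups


if __name__ == "__main__":
    sample = (
        "hdd-pool/data@auto-2023-01-01_00-00\n"
        "hdd-pool/data@auto-hourly-hdd-2023-01-01_01-00\n"
    )
    print(group_snapshots(sample, ["auto-hourly-hdd-", "auto-"]))
```

An empty `"auto-hourly-hdd-"` group right after a replication run would confirm that the target-side snapshots are being removed rather than never created.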

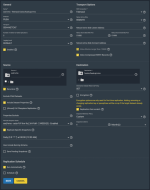

Replication task settings
