Tino Zidore

Hi

I have been trying to tweak the Auxiliary parameters and System Tunables to get the best possible SMB performance for Mac OS X and Windows 7/10 clients.

Please help me with my read speeds: which parameter have I tweaked the wrong way?

I use a 10 GbE setup, and iperf shows almost full throughput.

I have added the no_signing parameter to the local Mac OS X nsmb.conf file and rebooted, but the speeds did not improve.
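For reference, the client-side key Apple documents for turning off SMB signing is `signing_required=no` inside a `[default]` section of `/etc/nsmb.conf`; treating that as what "no_signing" refers to is an assumption. A minimal Python sketch, using a hypothetical helper `signing_disabled`, that checks whether a given nsmb.conf text actually carries that setting:

```python
import configparser


def signing_disabled(nsmb_text: str) -> bool:
    """Return True if the [default] section sets signing_required=no.

    Assumption: the key is Apple's documented `signing_required`,
    placed under a lower-case `[default]` section.
    """
    parser = configparser.ConfigParser()
    parser.read_string(nsmb_text)
    if not parser.has_section("default"):
        return False
    # configparser lower-cases option names, so the lookup is case-insensitive.
    value = parser.get("default", "signing_required", fallback="yes")
    return value.strip().lower() == "no"


example = """\
[default]
signing_required=no
"""
print(signing_disabled(example))  # True
```

To check the real file, pass in the text of `/etc/nsmb.conf`; a file without a `[default]` section, or with any value other than `no`, is reported as signing still enabled.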

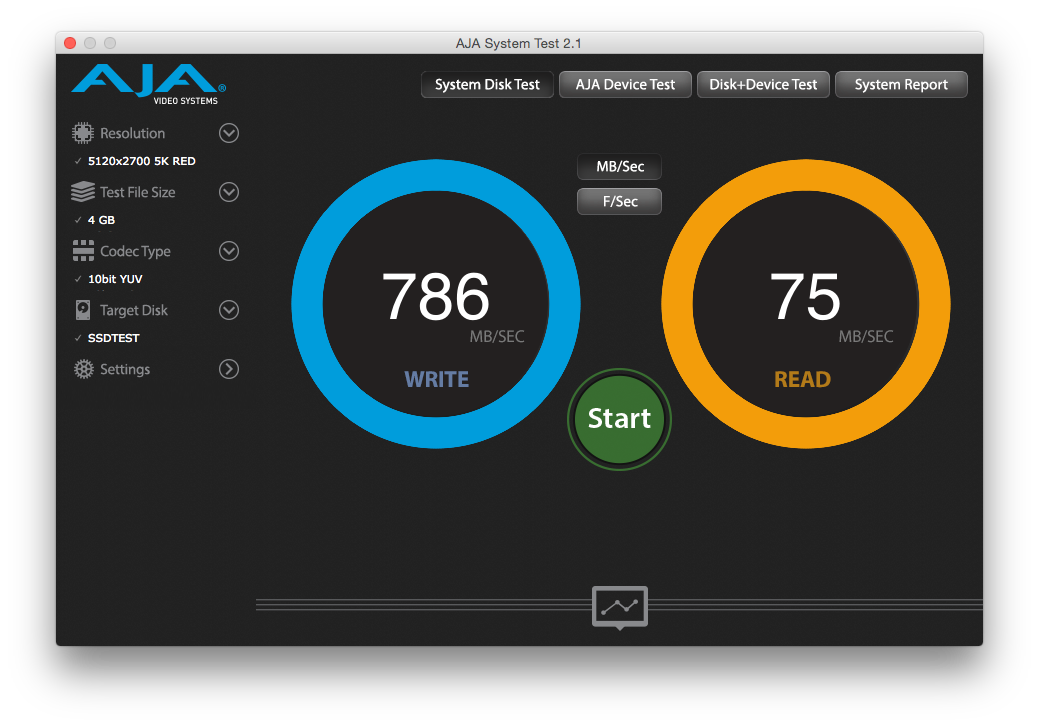

With AJA System Test I get a write speed of 786 MB/s, which is okay, but my read speed only reaches a maximum of 75 MB/s (see attached file).

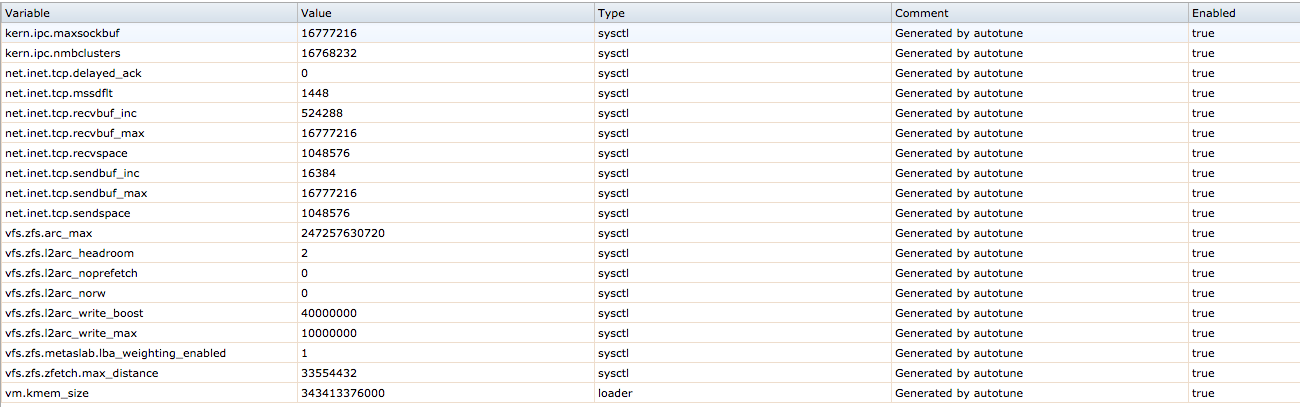
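To put the AJA numbers next to the iperf result, it helps to express them as a share of the link. A minimal Python sketch, assuming a nominal 10 Gbit/s line rate with no protocol overhead subtracted and that AJA's "MB" means 10^6 bytes (both are assumptions; `mb_per_s_to_fraction_of_link` is a hypothetical helper):

```python
# Assumption: nominal 10 GbE line rate, no TCP/SMB overhead subtracted.
LINK_GBIT_PER_S = 10


def mb_per_s_to_fraction_of_link(mb_per_s: float,
                                 link_gbit: float = LINK_GBIT_PER_S) -> float:
    """Fraction of the raw link rate used by a MB/s figure (1 MB = 10**6 bytes)."""
    link_mb_per_s = link_gbit * 1000 / 8  # 10 Gbit/s -> 1250 MB/s
    return mb_per_s / link_mb_per_s


for label, rate in (("write", 786), ("read", 75)):
    print(f"{label}: {rate} MB/s = {rate * 8} Mbit/s, "
          f"{mb_per_s_to_fraction_of_link(rate):.1%} of 10 GbE")
# write: 786 MB/s = 6288 Mbit/s, 62.9% of 10 GbE
# read: 75 MB/s = 600 Mbit/s, 6.0% of 10 GbE
```

So the writes use roughly 63% of the link while the reads use about 6%, i.e. around 600 Mbit/s.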

I have also attached an image of my Tunables from the Web GUI.

Here is the setup at the moment.

System/Hardware:

Mobo: Supermicro X10DRi-T (2 x onboard X540T)

RAM: 256 GB, 2133 MHz

NICs:

1 x Intel XL710-QDA2

2 x Intel X520-SR2

HBAs:

1 x LSI 9207-8e (69 spinning disks)

1 x LSI 9300-8i (10 SSDs, Samsung 850 EVO 1 TB)

Auxiliary parameters:

mangled names=no

store dos attributes = no

map archive = no

map hidden = no

map readonly = no

map system = no

read raw = yes

write raw = yes

ZFS Tunables:

ZFS Tunable (sysctl):

kern.maxusers 16711

vm.kmem_size 343413379072

vm.kmem_size_scale 1

vm.kmem_size_min 0

vm.kmem_size_max 1319413950874

vfs.zfs.vol.unmap_enabled 1

vfs.zfs.vol.mode 2

vfs.zfs.sync_pass_rewrite 2

vfs.zfs.sync_pass_dont_compress 5

vfs.zfs.sync_pass_deferred_free 2

vfs.zfs.zio.dva_throttle_enabled 1

vfs.zfs.zio.exclude_metadata 0

vfs.zfs.zio.use_uma 1

vfs.zfs.zil_slog_limit 786432

vfs.zfs.cache_flush_disable 0

vfs.zfs.zil_replay_disable 0

vfs.zfs.version.zpl 5

vfs.zfs.version.spa 5000

vfs.zfs.version.acl 1

vfs.zfs.version.ioctl 7

vfs.zfs.debug 0

vfs.zfs.super_owner 0

vfs.zfs.min_auto_ashift 12

vfs.zfs.max_auto_ashift 13

vfs.zfs.vdev.queue_depth_pct 1000

vfs.zfs.vdev.write_gap_limit 4096

vfs.zfs.vdev.read_gap_limit 32768

vfs.zfs.vdev.aggregation_limit 131072

vfs.zfs.vdev.trim_max_active 64

vfs.zfs.vdev.trim_min_active 1

vfs.zfs.vdev.scrub_max_active 2

vfs.zfs.vdev.scrub_min_active 1

vfs.zfs.vdev.async_write_max_active 10

vfs.zfs.vdev.async_write_min_active 1

vfs.zfs.vdev.async_read_max_active 3

vfs.zfs.vdev.async_read_min_active 1

vfs.zfs.vdev.sync_write_max_active 10

vfs.zfs.vdev.sync_write_min_active 10

vfs.zfs.vdev.sync_read_max_active 10

vfs.zfs.vdev.sync_read_min_active 10

vfs.zfs.vdev.max_active 1000

vfs.zfs.vdev.async_write_active_max_dirty_percent 60

vfs.zfs.vdev.async_write_active_min_dirty_percent 30

vfs.zfs.vdev.mirror.non_rotating_seek_inc 1

vfs.zfs.vdev.mirror.non_rotating_inc 0

vfs.zfs.vdev.mirror.rotating_seek_offset 1048576

vfs.zfs.vdev.mirror.rotating_seek_inc 5

vfs.zfs.vdev.mirror.rotating_inc 0

vfs.zfs.vdev.trim_on_init 1

vfs.zfs.vdev.larger_ashift_minimal 0

vfs.zfs.vdev.bio_delete_disable 0

vfs.zfs.vdev.bio_flush_disable 0

vfs.zfs.vdev.cache.bshift 16

vfs.zfs.vdev.cache.size 0

vfs.zfs.vdev.cache.max 16384

vfs.zfs.vdev.metaslabs_per_vdev 200

vfs.zfs.vdev.trim_max_pending 10000

vfs.zfs.txg.timeout 5

vfs.zfs.trim.enabled 1

vfs.zfs.trim.max_interval 1

vfs.zfs.trim.timeout 30

vfs.zfs.trim.txg_delay 32

vfs.zfs.space_map_blksz 4096

vfs.zfs.spa_min_slop 134217728

vfs.zfs.spa_slop_shift 5

vfs.zfs.spa_asize_inflation 24

vfs.zfs.deadman_enabled 1

vfs.zfs.deadman_checktime_ms 5000

vfs.zfs.deadman_synctime_ms 1000000

vfs.zfs.debug_flags 0

vfs.zfs.recover 0

vfs.zfs.spa_load_verify_data 1

vfs.zfs.spa_load_verify_metadata 1

vfs.zfs.spa_load_verify_maxinflight 10000

vfs.zfs.ccw_retry_interval 300

vfs.zfs.check_hostid 1

vfs.zfs.mg_fragmentation_threshold 85

vfs.zfs.mg_noalloc_threshold 0

vfs.zfs.condense_pct 200

vfs.zfs.metaslab.bias_enabled 1

vfs.zfs.metaslab.lba_weighting_enabled 1

vfs.zfs.metaslab.fragmentation_factor_enabled 1

vfs.zfs.metaslab.preload_enabled 1

vfs.zfs.metaslab.preload_limit 3

vfs.zfs.metaslab.unload_delay 8

vfs.zfs.metaslab.load_pct 50

vfs.zfs.metaslab.min_alloc_size 33554432

vfs.zfs.metaslab.df_free_pct 4

vfs.zfs.metaslab.df_alloc_threshold 131072

vfs.zfs.metaslab.debug_unload 0

vfs.zfs.metaslab.debug_load 0

vfs.zfs.metaslab.fragmentation_threshold 70

vfs.zfs.metaslab.gang_bang 16777217

vfs.zfs.free_bpobj_enabled 1

vfs.zfs.free_max_blocks 18446744073709551615

vfs.zfs.no_scrub_prefetch 0

vfs.zfs.no_scrub_io 0

vfs.zfs.resilver_min_time_ms 3000

vfs.zfs.free_min_time_ms 1000

vfs.zfs.scan_min_time_ms 1000

vfs.zfs.scan_idle 50

vfs.zfs.scrub_delay 4

vfs.zfs.resilver_delay 2

vfs.zfs.top_maxinflight 32

vfs.zfs.delay_scale 500000

vfs.zfs.delay_min_dirty_percent 60

vfs.zfs.dirty_data_sync 67108864

vfs.zfs.dirty_data_max_percent 10

vfs.zfs.dirty_data_max_max 4294967296

vfs.zfs.dirty_data_max 4294967296

vfs.zfs.max_recordsize 1048576

vfs.zfs.zfetch.array_rd_sz 1048576

vfs.zfs.zfetch.max_distance 33554432

vfs.zfs.zfetch.min_sec_reap 2

vfs.zfs.zfetch.max_streams 8

vfs.zfs.prefetch_disable 0

vfs.zfs.send_holes_without_birth_time 1

vfs.zfs.mdcomp_disable 0

vfs.zfs.nopwrite_enabled 1

vfs.zfs.dedup.prefetch 1

vfs.zfs.l2c_only_size 0

vfs.zfs.mfu_ghost_data_esize 215978792448

vfs.zfs.mfu_ghost_metadata_esize 0

vfs.zfs.mfu_ghost_size 215978792448

vfs.zfs.mfu_data_esize 20093289472

vfs.zfs.mfu_metadata_esize 1356146176

vfs.zfs.mfu_size 21672891904

vfs.zfs.mru_ghost_data_esize 25354312192

vfs.zfs.mru_ghost_metadata_esize 0

vfs.zfs.mru_ghost_size 25354312192

vfs.zfs.mru_data_esize 212660954112

vfs.zfs.mru_metadata_esize 844736512

vfs.zfs.mru_size 215989390336

vfs.zfs.anon_data_esize 0

vfs.zfs.anon_metadata_esize 0

vfs.zfs.anon_size 9412608

vfs.zfs.l2arc_norw 0

vfs.zfs.l2arc_feed_again 1

vfs.zfs.l2arc_noprefetch 0

vfs.zfs.l2arc_feed_min_ms 200

vfs.zfs.l2arc_feed_secs 1

vfs.zfs.l2arc_headroom 2

vfs.zfs.l2arc_write_boost 40000000

vfs.zfs.l2arc_write_max 10000000

vfs.zfs.arc_meta_limit 61814407680

vfs.zfs.arc_free_target 453079

vfs.zfs.compressed_arc_enabled 1

vfs.zfs.arc_shrink_shift 7

vfs.zfs.arc_average_blocksize 8192

vfs.zfs.arc_min 33325678592

vfs.zfs.arc_max 247257630720
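The tunable dump reports sizes in raw bytes, which makes them hard to compare with the 256 GB of installed RAM. A minimal Python sketch (the helper `parse_tunables` and the `GIB` constant are hypothetical; it only handles the `name value` layout shown above) that converts a few of the listed values to GiB:

```python
from typing import Dict

GIB = 1024 ** 3

# Values copied from the ZFS tunable listing above (bytes).
TUNABLES = """\
vm.kmem_size 343413379072
vfs.zfs.arc_min 33325678592
vfs.zfs.arc_max 247257630720
vfs.zfs.arc_meta_limit 61814407680
vfs.zfs.dirty_data_max 4294967296
"""


def parse_tunables(text: str) -> Dict[str, int]:
    """Parse 'name value' lines into a dict, skipping non-numeric entries."""
    result: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            result[parts[0]] = int(parts[1])
    return result


for name, value in parse_tunables(TUNABLES).items():
    print(f"{name:28} {value / GIB:8.1f} GiB")
```

With these numbers, `vfs.zfs.arc_max` works out to roughly 230 GiB and `vfs.zfs.arc_min` to roughly 31 GiB.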

sysctl.conf:

kern.metadelay=3

kern.dirdelay=4

kern.filedelay=5

kern.coredump=1

kern.sugid_coredump=1

net.inet.tcp.delayed_ack=0

vfs.timestamp_precision=3

net.link.lagg.lacp.default_strict_mode=0

# Force minimal 4KB ashift for new top level ZFS VDEVs.

vfs.zfs.min_auto_ashift=12
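One way to confirm that the sysctl.conf entries actually took effect is to compare them with the running values (on FreeBSD, `sysctl -n <name>` prints just the value). A minimal Python sketch with hypothetical helpers `parse_sysctl_conf` and `mismatches`; the `running` dict here is filled by hand from the ZFS tunable dump above rather than queried live:

```python
from typing import Dict, Tuple

SYSCTL_CONF = """\
kern.metadelay=3
kern.dirdelay=4
kern.filedelay=5
kern.coredump=1
kern.sugid_coredump=1
net.inet.tcp.delayed_ack=0
vfs.timestamp_precision=3
net.link.lagg.lacp.default_strict_mode=0
# Force minimal 4KB ashift for new top level ZFS VDEVs.
vfs.zfs.min_auto_ashift=12
"""


def parse_sysctl_conf(text: str) -> Dict[str, str]:
    """Parse 'name=value' lines, ignoring blank lines and '#' comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or "=" not in line:
            continue
        name, value = line.split("=", 1)
        settings[name.strip()] = value.strip()
    return settings


def mismatches(configured: Dict[str, str],
               running: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Names whose configured value differs from the running one.

    Names absent from `running` are skipped, not reported.
    """
    return {name: (want, running[name])
            for name, want in configured.items()
            if name in running and running[name] != want}


# Running value taken by hand from the ZFS tunable dump above.
running = {"vfs.zfs.min_auto_ashift": "12"}
print(mismatches(parse_sysctl_conf(SYSCTL_CONF), running))  # {}
```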

smb4.conf:

[global]

server max protocol = SMB3_11

interfaces = 127.0.0.1 xx.xx.xx.xx

bind interfaces only = yes

encrypt passwords = yes

dns proxy = no

strict locking = no

oplocks = yes

deadtime = 15

max log size = 51200

max open files = 7545674

logging = file

load printers = no

printing = bsd

printcap name = /dev/null

disable spoolss = yes

getwd cache = yes

guest account = nobody

map to guest = Bad User

obey pam restrictions = yes

directory name cache size = 0

kernel change notify = no

panic action = /usr/local/libexec/samba/samba-backtrace

nsupdate command = /usr/local/bin/samba-nsupdate -g

server string = RAW Server

ea support = yes

store dos attributes = yes

lm announce = yes

acl allow execute always = true

dos filemode = yes

multicast dns register = yes

domain logons = no

idmap config *: backend = tdb

idmap config *: range = 90000001-100000000

server role = member server

workgroup = WORKGROUP

realm = MY Domain.com

security = ADS

client use spnego = yes

cache directory = /var/tmp/.cache/.samba

local master = no

domain master = no

preferred master = no

ads dns update = yes

winbind cache time = 7200

winbind offline logon = yes

winbind enum users = yes

winbind enum groups = yes

winbind nested groups = yes

winbind use default domain = no

winbind refresh tickets = yes

idmap config WORKGROUP: backend = rid

idmap config WORKGROUP: range = 20000-90000000

allow trusted domains = no

client ldap sasl wrapping = sign

template shell = /bin/sh

template homedir = /home/%D/%U

netbios name = RAW

pid directory = /var/run/samba

create mask = 0666

directory mask = 0777

client ntlmv2 auth = yes

dos charset = CP850

unix charset = UTF-8

log level = 2

mangled names=no

store dos attributes = no

map archive = no

map hidden = no

map readonly = no

map system = no

read raw = yes

write raw = yes

[SSDTEST]

path = /mnt/SSDTANK/SSDTEST

printable = no

veto files = /.snapshot/.windows/.mac/.zfs/

writeable = yes

browseable = yes

vfs objects = zfs_space zfsacl catia fruit streams_xattr aio_pthread

hide dot files = yes

guest ok = no

nfs4:mode = special

nfs4:acedup = merge

nfs4:chown = true

zfsacl:acesort = dontcare
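Note that the `[global]` section above sets `store dos attributes = yes` and later `store dos attributes = no`, the second coming from the Auxiliary parameters appended at the end. A minimal Python sketch (hypothetical helpers `normalize` and `conflicting_params`) that flags parameters set more than once with different values; it relies on smb.conf(5) treating parameter names as case-insensitive and ignoring whitespace inside them. Running `testparm -s` on the server shows which value Samba actually loads.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple


def normalize(name: str) -> str:
    """Samba ignores case and whitespace in section and parameter names."""
    return re.sub(r"\s+", "", name).lower()


def conflicting_params(conf_text: str) -> Dict[Tuple[str, str], List[str]]:
    """Map (section, parameter) to all of its values, keeping only
    parameters given more than one distinct value."""
    seen: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    section = "global"
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":  # blank lines and comments
            continue
        if line.startswith("[") and line.endswith("]"):
            section = normalize(line[1:-1])
            continue
        if "=" in line:
            # Only the first '=' separates name from value.
            name, value = line.split("=", 1)
            seen[(section, normalize(name))].append(value.strip())
    return {key: vals for key, vals in seen.items() if len(set(vals)) > 1}


EXCERPT = """\
[global]
store dos attributes = yes
dos filemode = yes
mangled names=no
store dos attributes = no
"""
print(conflicting_params(EXCERPT))
# {('global', 'storedosattributes'): ['yes', 'no']}
```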