I'm aware this subject gets pretty much done to death, but unfortunately I've been searching and searching every corner of the internet for answers, to no avail.

My issue is that NFS is incredibly slow. I've got one dataset used by Plex and Nextcloud, mounted directly into their jails, and local access is as expected for SATA. I've run iozone and I'm happy with those results: a 32GB test file run locally gives reads and writes consistent with local SATA.

iperf3 tests to TrueNAS over gigabit Ethernet are full-speed, 900+Mb/s both ways, so the network itself doesn't seem to be the problem.

I've set up an SMB dataset outside that one just for testing, and a 9GB test file copied to and from the NAS runs at full speed on a Windows device connected via gigabit Ethernet: roughly 90MB/s, so around 720Mb/s, which seems fine for SMB. I'm happy with that.
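To keep the megabyte and megabit figures straight, here is the conversion as a small Python sketch. It assumes decimal units (8 bits per byte) and ignores protocol overhead, so it only puts the iperf3 and SMB numbers above on the same scale rather than predicting real throughput.

```python
# Convert between MB/s (megabytes) and Mb/s (megabits), assuming decimal
# units (8 bits per byte) and ignoring protocol overhead.
BITS_PER_BYTE = 8


def mbyte_to_mbit(mbyte_per_s: float) -> float:
    """Convert a rate in MB/s to Mb/s."""
    return mbyte_per_s * BITS_PER_BYTE


def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert a rate in Mb/s to MB/s."""
    return mbit_per_s / BITS_PER_BYTE


if __name__ == "__main__":
    iperf_mbit = 900  # lower bound of the iperf3 result quoted above
    smb_mbyte = 90    # approximate SMB copy speed quoted above
    print(f"iperf3: {iperf_mbit} Mb/s = {mbit_to_mbyte(iperf_mbit):.1f} MB/s")
    print(f"SMB:    {smb_mbyte} MB/s = {mbyte_to_mbit(smb_mbyte):.0f} Mb/s "
          f"({mbyte_to_mbit(smb_mbyte) / iperf_mbit:.0%} of the iperf3 figure)")
```

By this arithmetic the SMB copy uses about 80% of what iperf3 measured, which is why it looks fine.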

An NFS test from both a Raspberry Pi 4 (low-power device) and my Surface Pro 7 running Windows Subsystem for Linux (not low-power) only gets about 3-4MB/s (29-34MB/s on a second check). Absolutely abysmally slow, and I have no idea why.
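To repeat the NFS measurement the same way on both clients, a small script that writes and then reads a test file on the mounted share can help. This is a minimal sketch, assuming the share is already mounted on the client; the script name and the path `/mnt/nfs-test/testfile` in the usage comment are hypothetical.

```python
import os
import sys
import time

CHUNK_SIZE = 1024 * 1024  # write/read in 1 MiB chunks


def write_throughput(path: str, total_bytes: int, chunk_size: int = CHUNK_SIZE) -> float:
    """Write total_bytes to path, fsync once at the end, and return MB/s."""
    # Random data so compression on the dataset (e.g. lz4) can't flatter the result.
    buf = os.urandom(chunk_size)
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < total_bytes:
            n = min(chunk_size, total_bytes - written)
            f.write(buf[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # don't stop the clock until the data has been flushed
    elapsed = time.perf_counter() - start
    return total_bytes / elapsed / 1e6


def read_throughput(path: str, chunk_size: int = CHUNK_SIZE) -> float:
    """Read the whole file back and return MB/s.

    A file that was just written may be served from the client's page cache,
    so remount the share (or read a file you didn't just write) for a
    realistic read figure.
    """
    start = time.perf_counter()
    total = 0
    with open(path, "rb") as f:
        while True:
            data = f.read(chunk_size)
            if not data:
                break
            total += len(data)
    elapsed = time.perf_counter() - start
    return total / elapsed / 1e6


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 nfs_bench.py /mnt/nfs-test/testfile 1024
    target = sys.argv[1]
    size_mb = int(sys.argv[2]) if len(sys.argv) > 2 else 1024
    print(f"write: {write_throughput(target, size_mb * 1_000_000):.1f} MB/s")
    print(f"read:  {read_throughput(target):.1f} MB/s")
```

Running the same script against a local path on each client, and then against the NFS mount, separates client-side limits from the NFS path itself.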

I set up another dataset outside this one to test NFS again and saw no change, so it doesn't seem to be specific to the dataset; it's something to do with NFS.

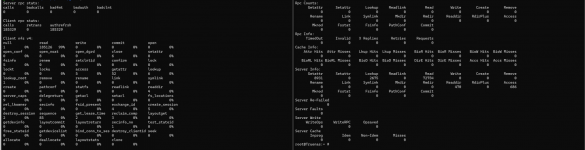

Now, specs:


- Motherboard: ASRock rack E3C224D2I

- CPU: Intel Core i3-4170 CPU @ 3.70GHz

- RAM: 16GB ECC DDR3 (max the board supports)

- Hard drives: 6 x WD Red 3TB WD30EFRX-68EUZN0 in RAIDZ2

- Hard disk controllers: onboard SATA

- Network cards: onboard Intel gigabit, just one port, no teaming

I feel like a SLOG isn't the answer here. I should be seeing roughly the same speeds as SMB, right? Before adding things like a SLOG, I want to figure out what's slowing it down at the basic level.
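One way to check whether synchronous writes are part of the gap, which is the situation a SLOG is meant to help with, is to time the same write with and without a flush after every chunk. This is a hedged sketch, not a diagnosis: it assumes the commonly cited explanation that NFS clients ask the server to commit data to stable storage (which ZFS honours synchronously) while SMB writes are usually handled asynchronously. I haven't confirmed that this is what's happening here. The function names and the path in the usage comment are hypothetical.

```python
import os
import sys
import time


def timed_write(path: str, total_bytes: int, chunk_size: int, fsync_every_chunk: bool) -> float:
    """Write total_bytes to path and return MB/s.

    fsync_every_chunk=True forces each chunk to stable storage before the next
    write (roughly what synchronous writes look like); False lets the OS batch
    writes and flushes only once at the end.
    """
    buf = os.urandom(chunk_size)
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < total_bytes:
            n = min(chunk_size, total_bytes - written)
            f.write(buf[:n])
            written += n
            if fsync_every_chunk:
                f.flush()
                os.fsync(f.fileno())
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return total_bytes / elapsed / 1e6


def compare_sync_async(path: str, total_bytes: int = 64_000_000, chunk_size: int = 128 * 1024) -> dict:
    """Run the same write twice (per-chunk fsync vs. one final fsync), delete the file, return both rates."""
    results = {
        "sync (fsync per chunk)": timed_write(path, total_bytes, chunk_size, True),
        "async (fsync at end)": timed_write(path, total_bytes, chunk_size, False),
    }
    os.remove(path)
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 sync_vs_async.py /mnt/nfs-test/syncfile
    for mode, rate in compare_sync_async(sys.argv[1]).items():
        print(f"{mode}: {rate:.1f} MB/s")
```

If the per-chunk fsync figure over NFS lands close to the slow numbers above while the batched one approaches the SMB speed, that would point towards sync write behaviour rather than the network or the dataset.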

So what can I try next?
