Hi,

I am very new to TrueNAS and ZFS, so please excuse what is probably a stupid question:

I have a ZFS pool here that I want to back up to another pool using snapshots. My goal is to keep, let's say, two weeks of daily snapshots and three years of monthly snapshots of this pool. This must be possible (?), but I am just not sure how to do it.

Do I need two different replication tasks? Or do I need one replication task with two selected (and different) periodic snapshot tasks?

Right now it looks like this: one replication task with two different periodic snapshot tasks. But what do I do about the naming? Should the monthly and daily snapshots have different names, or should they all have the same names but different lifetimes?

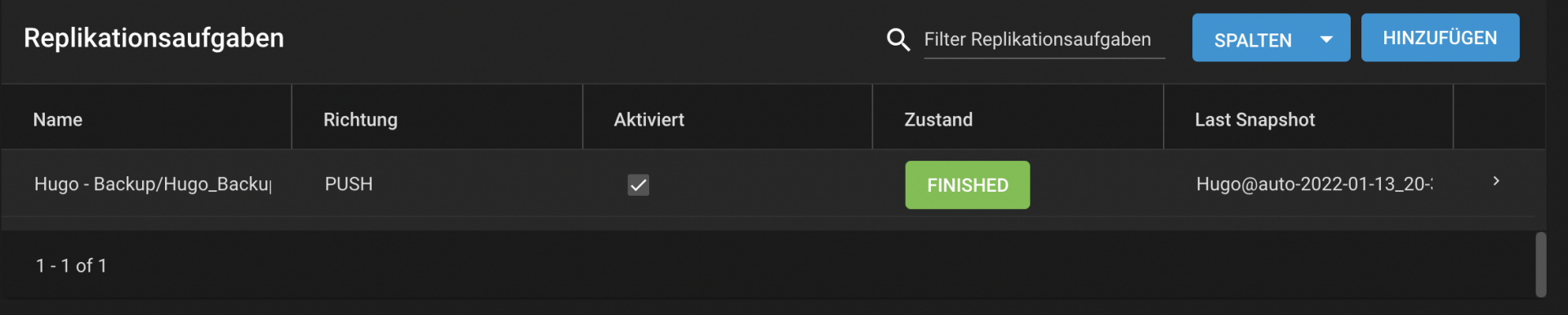

The Replication Task:

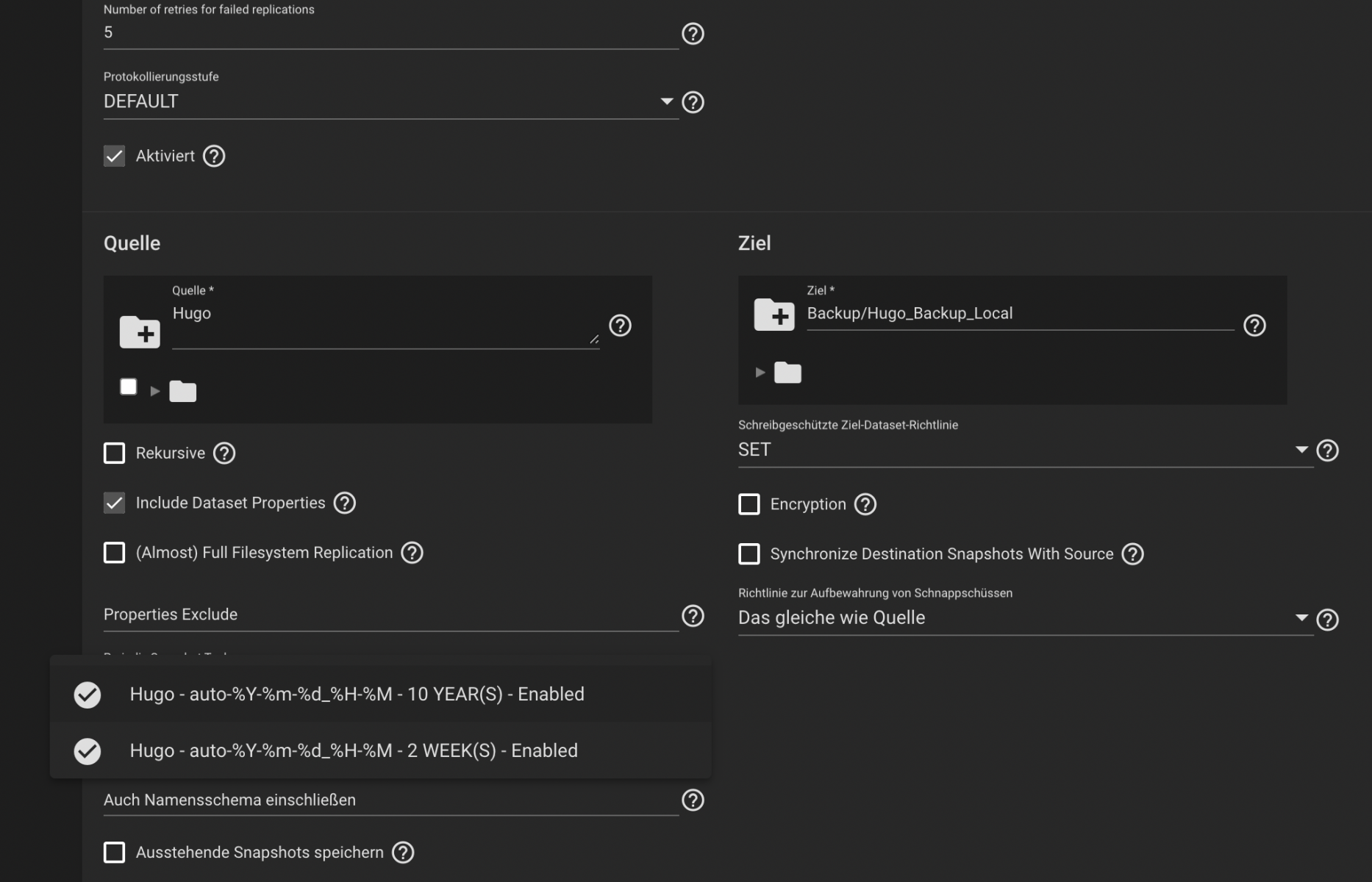

And its settings, with both snapshot tasks selected:

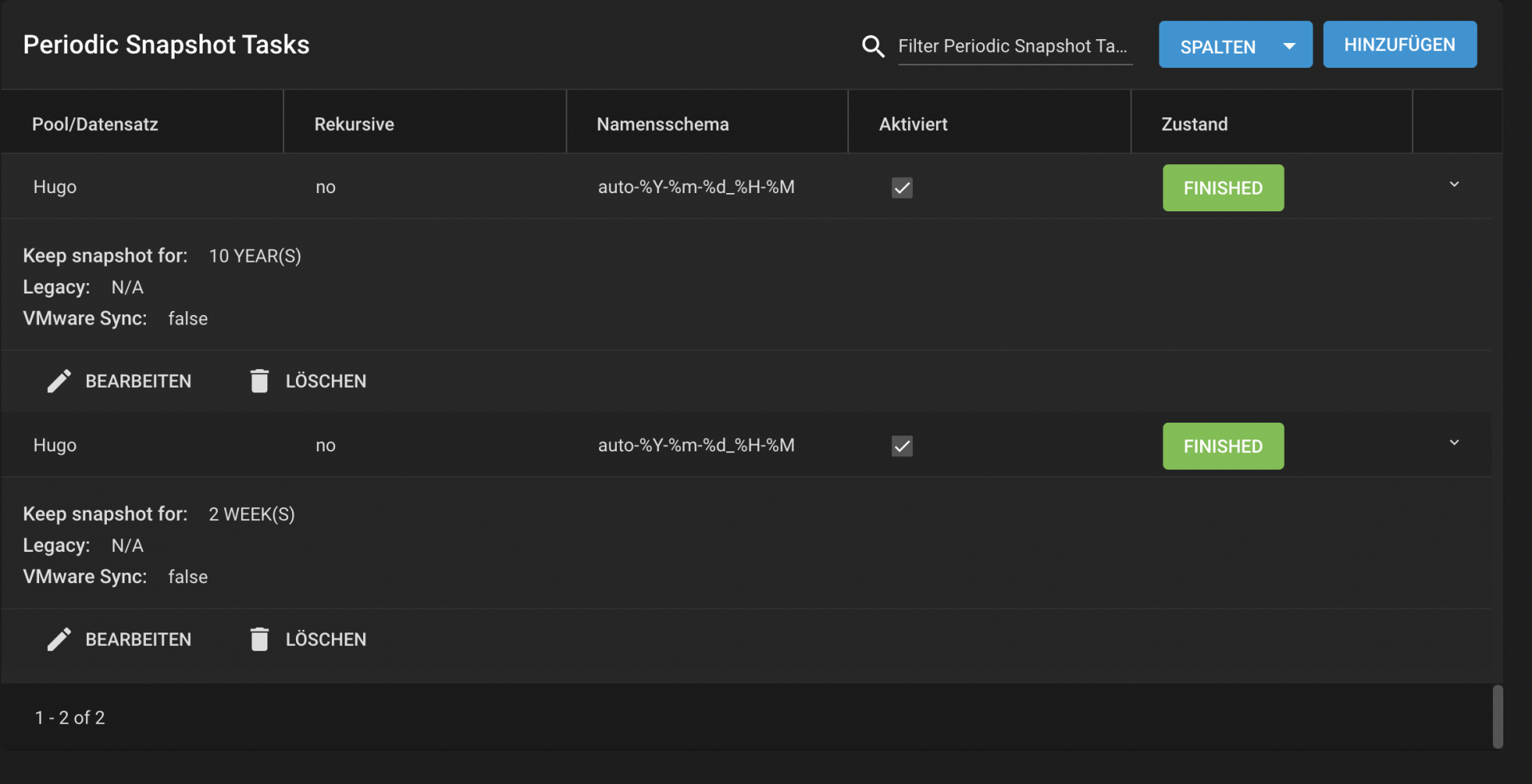

And here are the snapshot tasks:

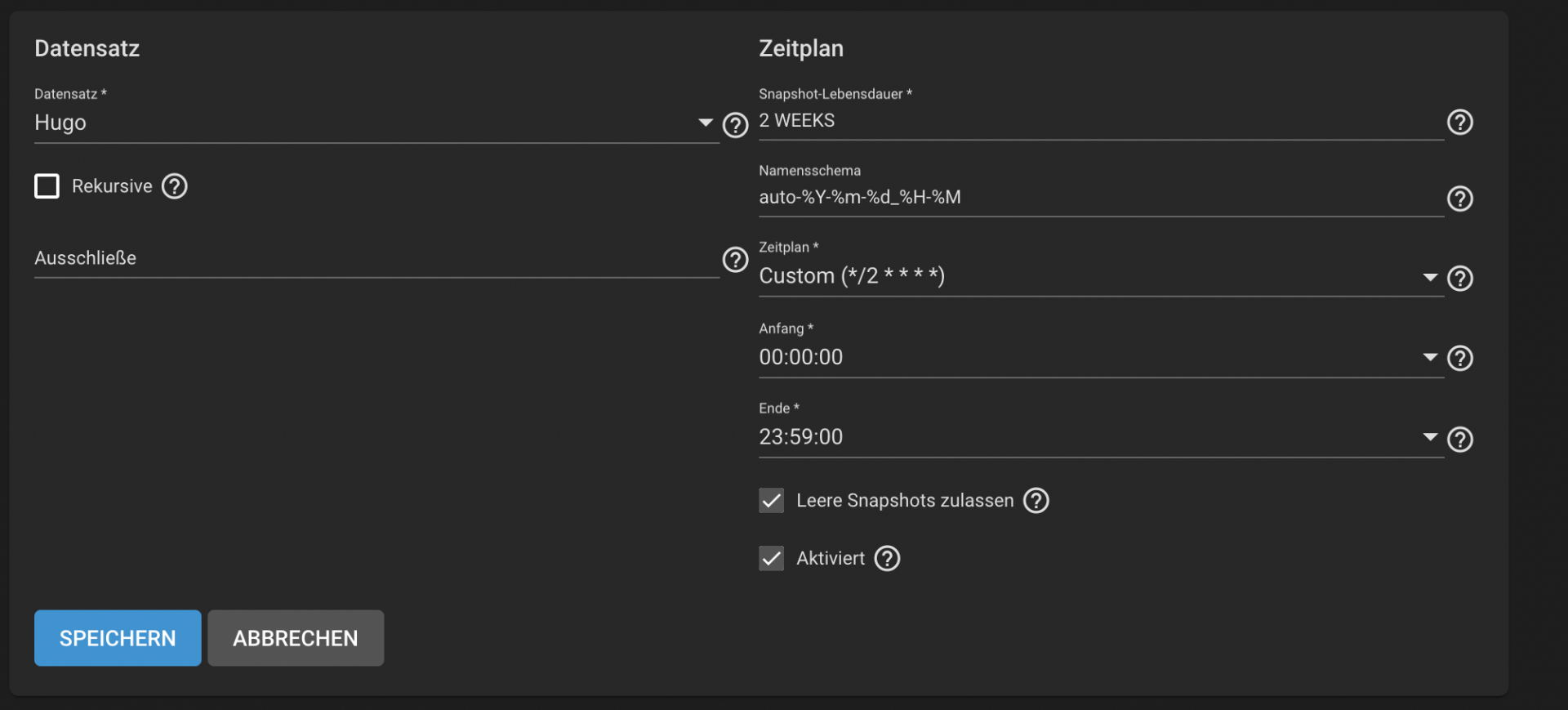

Both look like this:

(Yes, the times are currently set that close together to test the behaviour.)

Is this how I should set it up? And what about the naming of the snapshots? Right now both snapshot tasks produce the same names. Is that a problem?
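To make the naming question concrete, here is a small Python sketch. It assumes the naming schema uses strftime-style placeholders like `auto-%Y-%m-%d_%H-%M`. The dataset name `tank/data` and the `daily`/`monthly` prefixes are purely hypothetical. It only shows that two tasks sharing one schema render identical names when they fire at the same minute, while distinct prefixes keep the names apart. It does not say how TrueNAS itself handles that case.

```python
from datetime import datetime

# Hypothetical naming schemas, assuming strftime-style placeholders.
SHARED_SCHEMA = "auto-%Y-%m-%d_%H-%M"
DAILY_SCHEMA = "auto-daily-%Y-%m-%d_%H-%M"
MONTHLY_SCHEMA = "auto-monthly-%Y-%m-%d_%H-%M"


def snapshot_name(dataset: str, schema: str, when: datetime) -> str:
    """Render a snapshot name as dataset@<schema expanded for this run time>."""
    return f"{dataset}@{when.strftime(schema)}"


def main() -> None:
    # Both tasks fire at the same moment, e.g. midnight on the 1st of the month.
    run_time = datetime(2024, 1, 1, 0, 0)
    dataset = "tank/data"  # hypothetical dataset name

    # Same schema for both tasks: the rendered names are identical.
    shared_daily = snapshot_name(dataset, SHARED_SCHEMA, run_time)
    shared_monthly = snapshot_name(dataset, SHARED_SCHEMA, run_time)
    print(shared_daily, shared_monthly, shared_daily == shared_monthly)

    # Different prefixes per task: the rendered names differ.
    daily = snapshot_name(dataset, DAILY_SCHEMA, run_time)
    monthly = snapshot_name(dataset, MONTHLY_SCHEMA, run_time)
    print(daily, monthly, daily == monthly)


if __name__ == "__main__":
    main()
```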

I am not quite confident that this achieves what I want ;)

Any suggestions?

Thank you so much!!