ColeTrain

SCALE. OS version: TrueNAS-SCALE-23.10.1.1

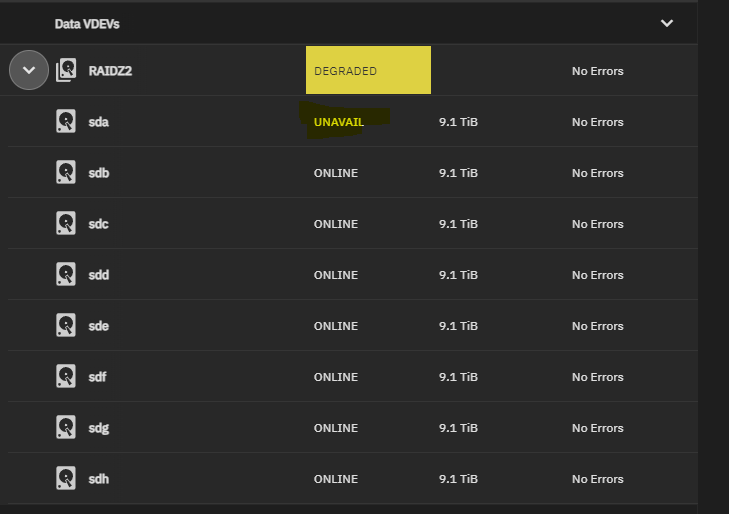

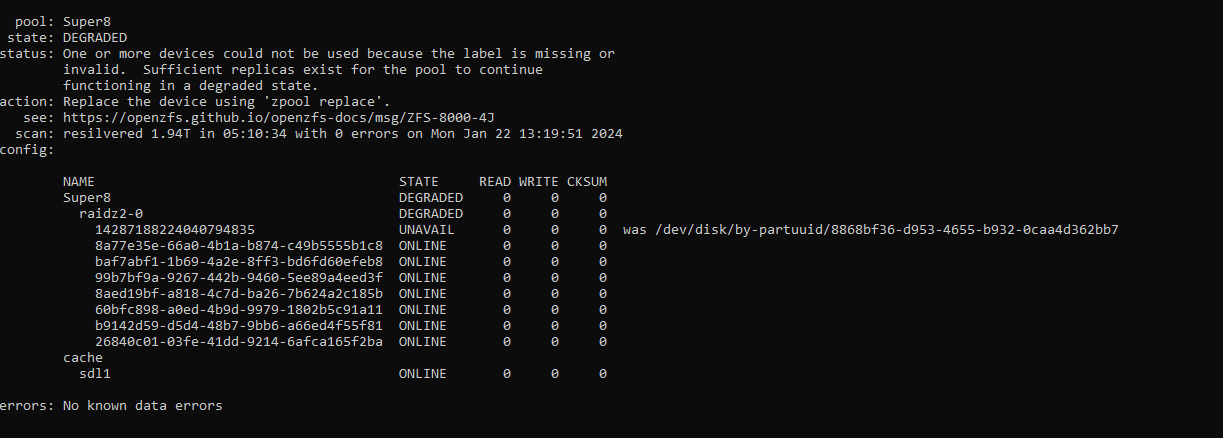

So, I replaced all the disks in an 8-disk RAIDZ2 pool, going from 3 TB to 10 TB drives.

Autoexpand is ON.

Nothing happens.
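For context, here is a rough estimate of the data capacity the pool should approach once expansion actually takes effect. This is a minimal sketch, assuming the usual RAIDZ rule of thumb (usable space is roughly the number of non-parity disks times the disk size); the helper name `raidz_data_capacity_tb` is hypothetical, and the estimate ignores ZFS metadata, padding and reserved space, so real figures will be somewhat lower.

```python
# Rough data-capacity estimate for a single RAIDZ vdev.
# Assumption: usable space ~= (disks - parity) * disk size. This ignores ZFS
# metadata, allocation padding and reserved slop space, so the real figure
# shown in TrueNAS will be somewhat lower.

TB = 10**12   # drive labels use decimal terabytes
TIB = 2**40   # ZFS tools typically display binary units (TiB)


def raidz_data_capacity_tb(disks: int, parity: int, disk_tb: float) -> float:
    """Approximate data capacity in decimal TB (parity disks excluded)."""
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity disks")
    return (disks - parity) * disk_tb


before = raidz_data_capacity_tb(disks=8, parity=2, disk_tb=3)   # 8 x 3 TB RAIDZ2
after = raidz_data_capacity_tb(disks=8, parity=2, disk_tb=10)   # 8 x 10 TB RAIDZ2

for label, tb in (("before", before), ("after", after)):
    print(f"{label}: ~{tb:.0f} TB (~{tb * TB / TIB:.1f} TiB), before ZFS overhead")
```

With six data disks this comes to about 18 TB (roughly 16.4 TiB) for the old 3 TB drives and about 60 TB (roughly 54.6 TiB) for the new 10 TB drives, so if the reported usable size is still close to the old figure, the expansion has not taken effect.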

I press "expand" in the GUI. I get this error.

I reboot and get this error. (By the way, sdb has moved to sda; I verified this by serial number. It's the same disk, just in a different spot.)

And here is how it looks now (below):

They were all fine before I pressed "Expand" and then rebooted. I had rebooted a couple of times before this, so it was pressing "Expand" and then rebooting that caused this, not the reboot on its own.

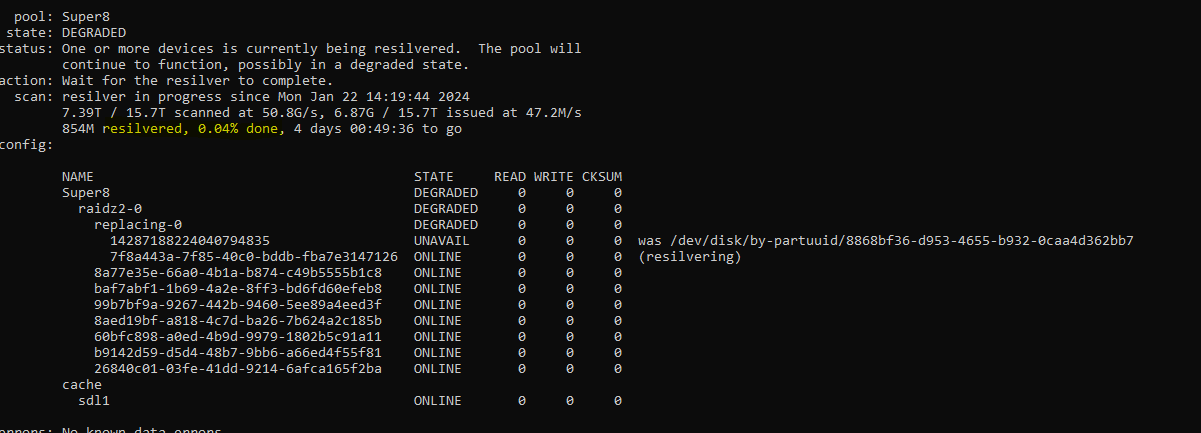
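Kernel names such as sda and sdb are assigned at boot and are not guaranteed to stay the same between reboots, which is how the same disk can show up as sdb one time and sda the next; the serial number is the stable way to identify it. On the TrueNAS shell, `ls -l /dev/disk/by-id` lists symlinks from ID names (which usually end in the drive serial) to the current sdX names. Below is a minimal Python sketch of the same lookup; `map_by_id` and `find_by_serial` are hypothetical helpers, and it assumes the by-id names end with the serial, which is typical for `ata-...` entries.

```python
from __future__ import annotations

import os
import re

# Partition links in /dev/disk/by-id end in "-partN" (e.g. ...-part1).
PART_SUFFIX = re.compile(r"-part\d+$")


def map_by_id(by_id_dir: str = "/dev/disk/by-id") -> dict[str, str]:
    """Map each whole-disk by-id name to its current kernel name (sda, sdb, ...)."""
    mapping = {}
    for name in sorted(os.listdir(by_id_dir)):
        if PART_SUFFIX.search(name):
            continue  # skip partition entries, keep whole disks only
        # Each entry is a symlink such as ../../sda; resolve it to the real node.
        target = os.path.realpath(os.path.join(by_id_dir, name))
        mapping[name] = os.path.basename(target)
    return mapping


def find_by_serial(serial_suffix: str, by_id_dir: str = "/dev/disk/by-id") -> list[str]:
    """Return the kernel names whose by-id name ends with the given serial fragment.

    Assumption: the by-id name ends with the drive serial, which is typical for
    ata-... entries; wwn-... entries will not match.
    """
    # A set removes duplicates when several by-id names point at the same disk.
    matches = {dev for name, dev in map_by_id(by_id_dir).items()
               if name.endswith(serial_suffix)}
    return sorted(matches)
```

For example, `find_by_serial("69Z")` would return the current kernel name of the disk whose serial ends in 69Z, so it can be re-checked after every reboot instead of relying on the sdX letter.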

I'm trying to keep this concise, but I have chased my tail on this a couple of times and I'm back here again. The long version: I did the above and then resilvered the disk. It told me to use Force, and that caused different issues. I replaced that disk with another one, only to resilver it again, end up back here, and get into the loop again. I have just wiped that disk (serial ending in 69Z) because it said it still had partitions. Now I am replacing disk "142...835" with that disk again.

What I did not keep track of is whether, when I did this before, it was the same disk or a different one. I'm unsure.

When this finishes (it should take about 4 hours, not the 4 days it currently estimates), can anyone advise on the best next steps? Command line or GUI is fine with me, but if you suggest command-line steps, please be specific. And no, I have not used the command line here other than to view status, so I didn't mistype anything or break it that way.

The full text of the error I get when pressing "Expand" is:

[EFAULT] Command partprobe /dev/sdb failed (code 1): Error: Partition(s) 1 on /dev/sdb have been written, but we have been unable to inform the kernel of the change, probably because it/they are in use. As a result, the old partition(s) will remain in use. You should reboot now before making further changes.

EDIT: My solution is in the last post (post 17).
