Hello,

I’m very new to this, so I apologize in advance if I’m not using the right terminology or if this has already been answered.

I’m trying to see if there’s a way to replicate VOLUME or iSCSI Block Shares so that all the VOLUMEs or iSCSI Block Shares have the same exact content.

Here’s my setup and goal:

I have tens to hundreds of Windows PCs, and each PC has a D:\ drive that’s sourced from the NAS. The content of this drive is exactly the same across all PCs. The connection is iSCSI because that lets the OS see the network drive as a lettered drive rather than an IP address (the applications that call the files in the storage need a lettered drive path).

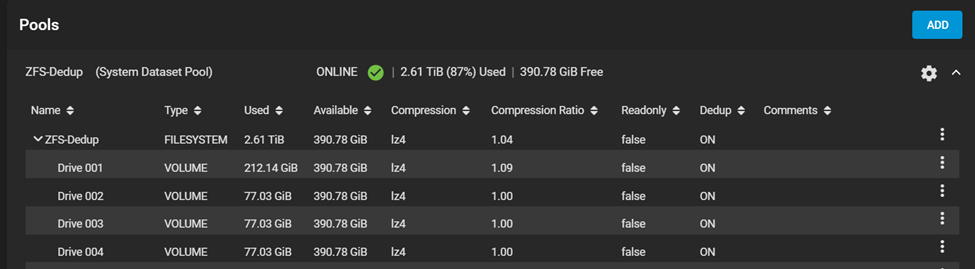

Currently, to set up the iSCSI drives, I create a pool with multiple volumes. Since the content is identical, deduplication is used to save physical storage space. Once the volumes are created, I set them up as Block Shares (iSCSI). I then connect a Windows PC to the first volume and copy the desired content into it. Next, I disconnect the first volume, connect the second volume, and copy the same content into the second volume. This process is repeated until all the volumes have identical content.

This is a highly inefficient method, so I’m wondering whether there’s a way in FreeNAS to replicate a volume via the command line or the web interface. For example, in the image above, you can see that Drives 002 to 004 have the same content. I’d like to create Drive 005 with the same content as Drive 004 without repeating the manual copy described above.
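
To make the goal concrete, here is a rough sketch of the kind of thing I have in mind. It assumes each volume is a ZFS zvol, so that a snapshot of Drive 004 could be cloned into a new zvol for Drive 005 with `zfs snapshot` and `zfs clone`. The pool and volume names (`tank/drive004`, `tank/drive005`), the snapshot name `golden`, and the helper functions are all made up for illustration, and I have not verified this on my FreeNAS box. I assume the new zvol would still have to be set up as a Block Share afterwards.

```python
import subprocess


def clone_zvol_commands(source, snapshot, target):
    """Build the zfs commands that snapshot `source` and clone it to `target`.

    All names passed in are hypothetical placeholders, not my real layout.
    """
    snap = f"{source}@{snapshot}"
    return [
        ["zfs", "snapshot", snap],       # freeze the current content of the source
        ["zfs", "clone", snap, target],  # create a new volume backed by that snapshot
    ]


def run(commands, dry_run=True):
    """Print the commands, or execute them on the NAS when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run only: shows what would be executed for Drive 004 -> Drive 005.
    run(clone_zvol_commands("tank/drive004", "golden", "tank/drive005"))
```

As far as I understand, a clone shares its blocks with the snapshot it was created from, so this might also save the space I currently rely on deduplication for, but I'd appreciate confirmation that this is the right approach.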

Thank you
