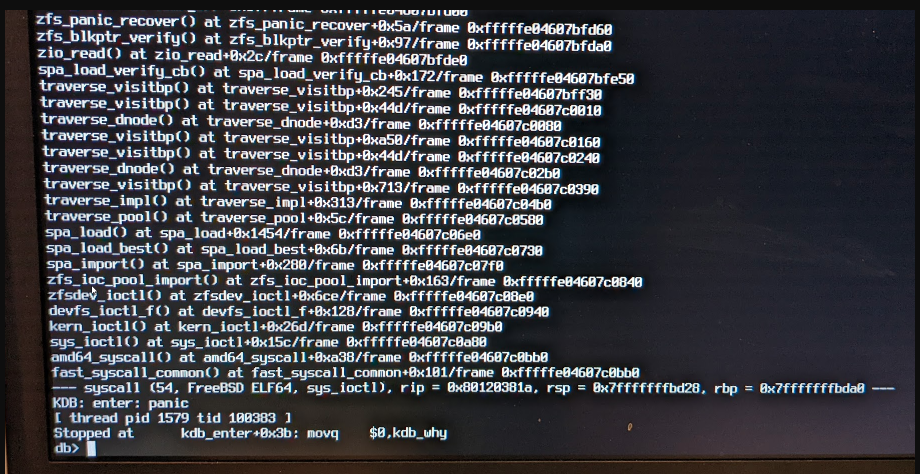

Earlier this week I noticed that my server was not showing up online. I plugged a monitor into the system, rebooted, and got this message:

After some digging, I came across this post: https://www.truenas.com/community/threads/freenas-wont-boot.19476/ and decided the best course of action was to reinstall TrueNAS and then re-import my data pool.

After a fresh installation on a USB device, I got the server up and running and went to import my pool again. In the Storage/Pool section I could see that my original pool "bigdata" was offline. Following the install/upgrade instructions, I exported the pool, choosing to keep its data and share configurations intact, and confirmed the export.

I then went to Add and chose to import an existing pool, and I got the following error when I attempted the import:

```
Error: concurrent.futures.process._RemoteTraceback:
"""
Traceback (most recent call last):
  File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 243, in _process_worker
    r = call_item.fn(*call_item.args, **call_item.kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 94, in main_worker
    res = MIDDLEWARE._run(*call_args)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 45, in _run
    return self._call(name, serviceobj, methodobj, args, job=job)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call
    return methodobj(*params)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call
    return methodobj(*params)
  File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 979, in nf
    return f(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 371, in import_pool
    self.logger.error(
  File "libzfs.pyx", line 391, in libzfs.ZFS.__exit__
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 365, in import_pool
    zfs.import_pool(found, new_name or found.name, options, any_host=any_host)
  File "libzfs.pyx", line 1095, in libzfs.ZFS.import_pool
  File "libzfs.pyx", line 1123, in libzfs.ZFS.__import_pool
libzfs.ZFSException: I/O error
"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 367, in run
    await self.future
  File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 403, in __run_body
    rv = await self.method(*([self] + args))
  File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 975, in nf
    return await f(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool.py", line 1421, in import_pool
    await self.middleware.call('zfs.pool.import_pool', pool['guid'], {
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1256, in call
    return await self._call(
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1221, in _call
    return await self._call_worker(name, *prepared_call.args)
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1227, in _call_worker
    return await self.run_in_proc(main_worker, name, args, job)
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1154, in run_in_proc
    return await self.run_in_executor(self.__procpool, method, *args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1128, in run_in_executor
    return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
libzfs.ZFSException: ('I/O error',)
```

I did find this thread that suggested checking the output of `zpool import`, but I honestly can't figure out where to run that.
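If I understand correctly (and I may not), `zpool import` is a ZFS command-line command rather than a page in the web UI, so it would be run in a shell on the TrueNAS machine itself, for example over SSH or from the Shell in the web UI. Run with no pool name, it should only scan the disks and list the pools it can see along with their state, without actually importing anything. Below is a minimal, untested Python sketch of that check, assuming `zpool` is on the PATH and the script runs as root; `list_importable_pools` is just a name I made up for illustration.

```python
import shutil
import subprocess
from typing import Optional


def list_importable_pools() -> Optional[str]:
    """Run `zpool import` with no arguments and return its text output.

    With no pool name given, `zpool import` only lists pools that could be
    imported; it does not import anything. Returns None if the `zpool`
    binary cannot be found on PATH (i.e. not running on the NAS itself).
    """
    zpool = shutil.which("zpool")
    if zpool is None:
        return None
    result = subprocess.run(
        [zpool, "import"],
        capture_output=True,
        text=True,
        check=False,  # don't raise on a non-zero exit, e.g. when no pools are found
    )
    # Some messages may go to stderr rather than stdout, so return both.
    return result.stdout + result.stderr


if __name__ == "__main__":
    output = list_importable_pools()
    if output is None:
        print("zpool not found; run this on the TrueNAS host itself")
    else:
        print(output)
```

I deliberately left out any pool name or extra flags, since `zpool import <pool>` would actually attempt the import rather than just report what it finds.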