chronowraith

Cadet

- Joined: Feb 18, 2018
- Messages: 4

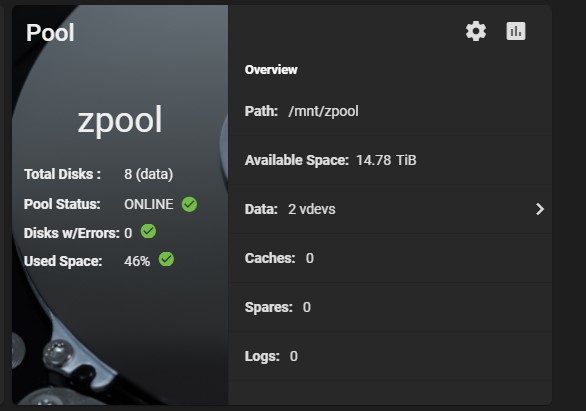

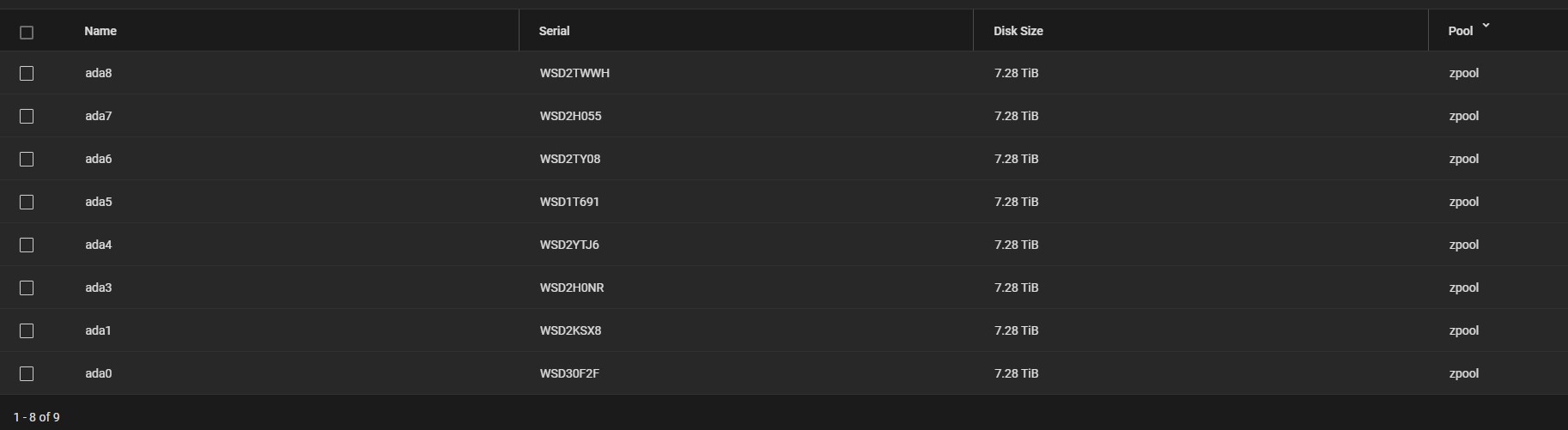

I'm tearing my hair out trying to figure out what's happening here. I have a TrueNAS-12.0-U5.1 install that was previously using 8x4TB HDDs in a RAIDZ2 configuration. I recently purchased 8x8TB drives and went through the process of taking one drive offline at a time, shutting down the system, swapping the offline drive for a new HDD, and then issuing a "replace" on the drive in question (using the process outlined here: https://www.truenas.com/docs/core/storage/disks/diskreplace/). This took a while, and when I finished the 8th drive I expected the pool size to increase once the resilver finished.

All interaction with the zpool was through the GUI.

Well, it doesn't seem to have done that; instead, my pool is the same size as before.

Code:

    NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
    zpool   58T  25.7T  32.3T        -         -   10%  44%  1.00x  ONLINE  /mnt
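For anyone who wants to sanity-check these numbers, here is a minimal sketch that pairs the columns above with their values and works out the raw capacity of each drive set. It rests on a few assumptions: the drives are sized in decimal TB, the listing uses power-of-two units, and the SIZE column for a RAIDZ pool counts raw space including parity. The helper names `parse_zpool_list` and `raw_tib` are made up for illustration and are not part of any ZFS tooling.

```python
# Header and row copied from the output above.
HEADER = "NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT"
ROW = "zpool 58T 25.7T 32.3T - - 10% 44% 1.00x ONLINE /mnt"


def parse_zpool_list(header: str, row: str) -> dict:
    """Pair each whitespace-separated column name with its value."""
    names = header.split()
    values = row.split()
    if len(names) != len(values):
        raise ValueError("header and row have different column counts")
    return dict(zip(names, values))


def raw_tib(drive_count: int, drive_tb: float) -> float:
    """Raw capacity in TiB of drive_count drives of drive_tb decimal TB each.

    Assumption: parity is not subtracted, matching how SIZE is believed
    to be reported for RAIDZ pools.
    """
    return drive_count * drive_tb * 10**12 / 2**40


pool = parse_zpool_list(HEADER, ROW)
print(f"reported SIZE: {pool['SIZE']}, EXPANDSZ: {pool['EXPANDSZ']}")
print(f"8 x 4 TB raw: {raw_tib(8, 4):.1f} TiB")
print(f"8 x 8 TB raw: {raw_tib(8, 8):.1f} TiB")
```

Comparing the reported SIZE against the two raw figures should show which drive set the pool is currently sized for.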

What's going on here and how can I get this pool expanded?

Thanks!