My server is a Dell R720xd with dual E5-2637 v2 CPUs @ 3.50GHz and 256GB RAM. It has 12 x 4TB SAS drives and 2 further 2.5" bays in the rear, empty at the moment. It connects via 2 x 10GbE DACs to a UniFi 10GbE switch, and 2 ESXi 6.7 hosts are attached to the switch in the same way.

My main goal is pure storage performance for iSCSI, with protection of data of course. I previously didn't use multi-pathing for iSCSI but I plan to this time. For comparison, I have the ESXi hosts linked over 1GbE to an old QNAP NAS with a couple of iSCSI extents, and I get better speeds out of that than over 10GbE with Free/TrueNAS. I've read a lot on here and watched loads of YouTube videos on Free/TrueNAS, and sometimes I see or hear conflicting information, or recommendations that applied to older versions and aren't required or as valid these days due to improvements in ZFS.

I have 2 x 2.5" bays I can use for SSDs for SLOG, ZIL or cache, and can also add an NVMe drive for one of these functions, though I've read that more RAM is better than SSDs and 256GB should be way more than enough. I had my drives set up in 1 large Z2 pool, though I've read it might be better to split these into 6 x 2-drive mirrors. Is that best? I'm open to suggestions on disk layout, block sizes, multi-pathing, tunables etc.
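To make the layout question concrete, here is a rough Python sketch comparing the two options for 12 x 4TB drives. It only does the basic arithmetic: data drives times drive size, plus a vdev count, since random IOPS are commonly said to scale roughly with the number of vdevs. It ignores ZFS metadata, padding, and any free-space headroom, and the function name `layout_summary` is just illustrative, so treat the numbers as a ballpark rather than what the pool would actually report.

```python
def layout_summary(drives, size_tb, layout):
    """Rough vdev count and raw usable capacity for a simple pool layout."""
    if layout == "raidz2":
        # One wide RAIDZ2 vdev: two drives' worth of space goes to parity.
        vdevs = 1
        data_drives = drives - 2
    elif layout == "mirrors":
        # Striped 2-way mirrors: each pair contributes one drive of capacity.
        vdevs = drives // 2
        data_drives = vdevs
    else:
        raise ValueError(f"unknown layout: {layout}")
    return {"vdevs": vdevs, "raw_usable_tb": data_drives * size_tb}


raidz2 = layout_summary(12, 4, "raidz2")
mirrors = layout_summary(12, 4, "mirrors")

print("1 x 12-wide RAIDZ2:", raidz2)
print("6 x 2-way mirrors: ", mirrors)
```

So the mirror layout trades about 16TB of raw capacity for six vdevs instead of one, which is the usual argument for mirrors with iSCSI block storage.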

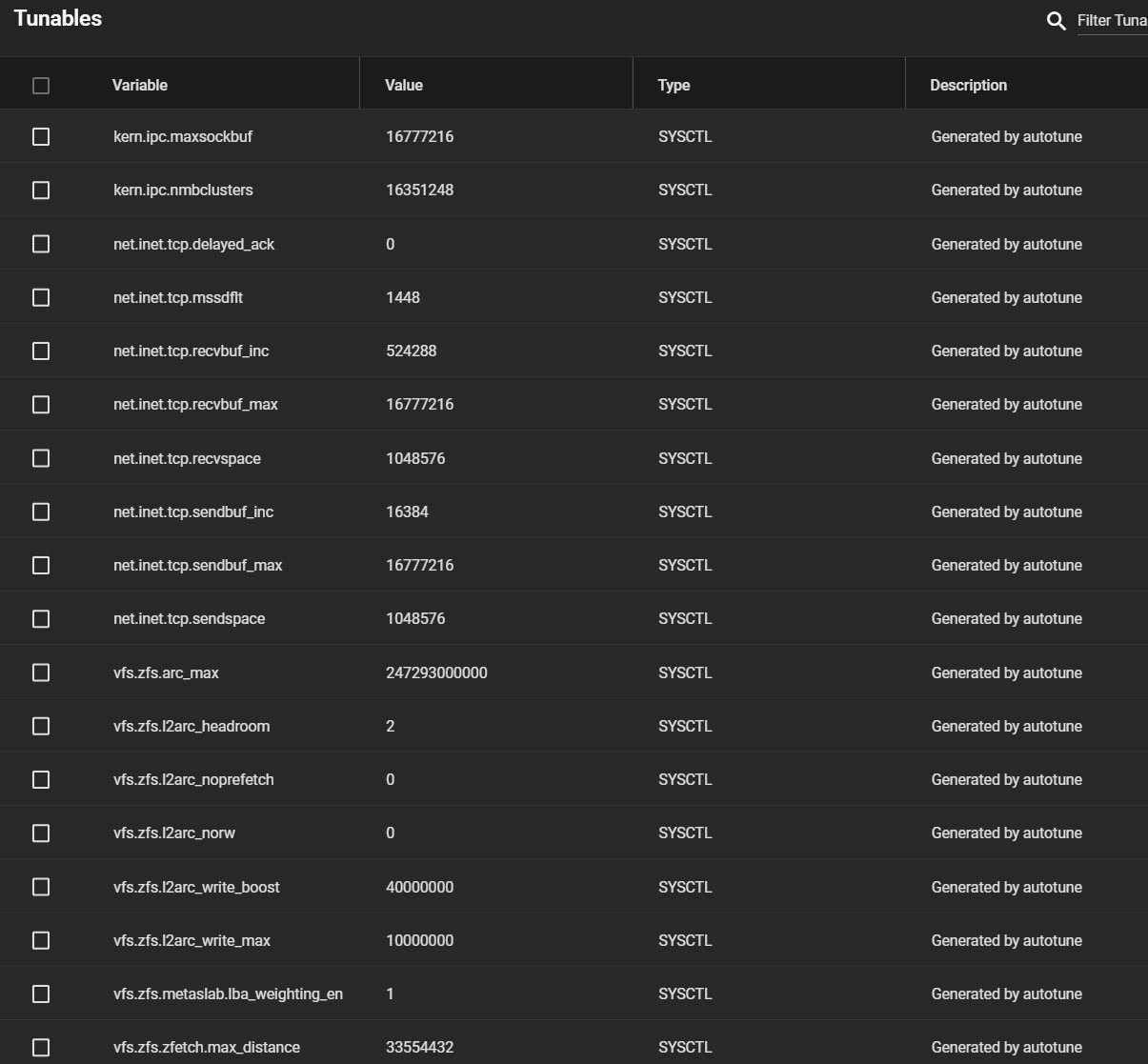

Below are the autotune settings. Are these valid, or should they be changed/ditched or new ones added?
