Some background. I am currently running a 5-year-old 6-bay QNAP NAS with 5x2TB WD RE-GP disks in RAID-5. The NAS stores media and backup files, and it has almost run out of space. I also have a bunch of single disks scattered around the house that hold another 20-25TB of media that needs proper, safe storage. I also have an ESXi host with a bunch of VMs (domain controllers, file server, Plex, CrashPlan destination server, Linux and Windows lab machines, etc.) that run nicely on a Xeon E3 machine.

I am looking for a solution to consolidate my media library and backup storage in one place. Today I need ~30TB of storage, and I expect that to grow by ~10TB a year over the next 4 years.
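To put numbers on that growth, here is a minimal sketch of the projection. It only uses the two figures above (~30TB today, ~10TB a year for 4 years) and assumes the growth is linear; the function and constant names are just illustrative.

```python
# Projected storage need, using the figures from the post:
# ~30 TB today, growing by ~10 TB per year over the next 4 years.
# Assumption: growth is linear (the post only gives a yearly estimate).
CURRENT_TB = 30
GROWTH_TB_PER_YEAR = 10
YEARS = 4


def projected_need_tb(year: int) -> int:
    """Estimated storage need in TB after `year` years."""
    return CURRENT_TB + GROWTH_TB_PER_YEAR * year


for year in range(YEARS + 1):
    print(f"year {year}: ~{projected_need_tb(year)} TB")
```

So by the end of the fourth year the target is roughly 70TB, which is what the initial build plus any later expansion has to cover.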

I don't plan to have a full backup solution. My disaster recovery plan looks like this: I will repopulate the QNAP with 6x3TB drives in RAID-6 (3TB is the largest disk the NAS box can recognize) and use it as a backup for critical and unique files (such as home videos). Most of the media that I have is not unique, so if disaster strikes, I would have to reacquire those files elsewhere.

Initially I was planning on building the NAS in a 4U 24-bay chassis, but then reconsidered due to noise concerns and footprint (I don't really have a well-ventilated space for a 900+mm deep rack). The plan is to start with a full tower case, and in the future build a JBOD expansion unit using another case with its own power supply and a SAS expander connected through SFF-8088 to the FreeNAS machine.

I do not have a huge budget, and I am planning to get most of the hardware used from eBay (drives, power supply, fans and cables excepted).

Here is my shopping list:

- Case: Lian Li PC-A75. 12 bays, good ventilation, plenty of space for an E-ATX motherboard and expansion cards. Bought a used one on eBay for $83 delivered

- Motherboard: Supermicro X8DTI-LN4F. Bought used on eBay for $113 (I/O shield included)

- RAM: 48GB (3x16GB) Samsung M393B2G70BH0-YH9 (ECC RDIMM from the QVL). Bought used on eBay for $249 in total. The motherboard has 6 DIMM slots per CPU, so I have room for expansion in the future

- CPU: Xeon X5667 3.06GHz. $40 on eBay. Although the motherboard supports dual CPUs, I'm just going with a single processor - I feel one CPU should be sufficient for what I am planning to do

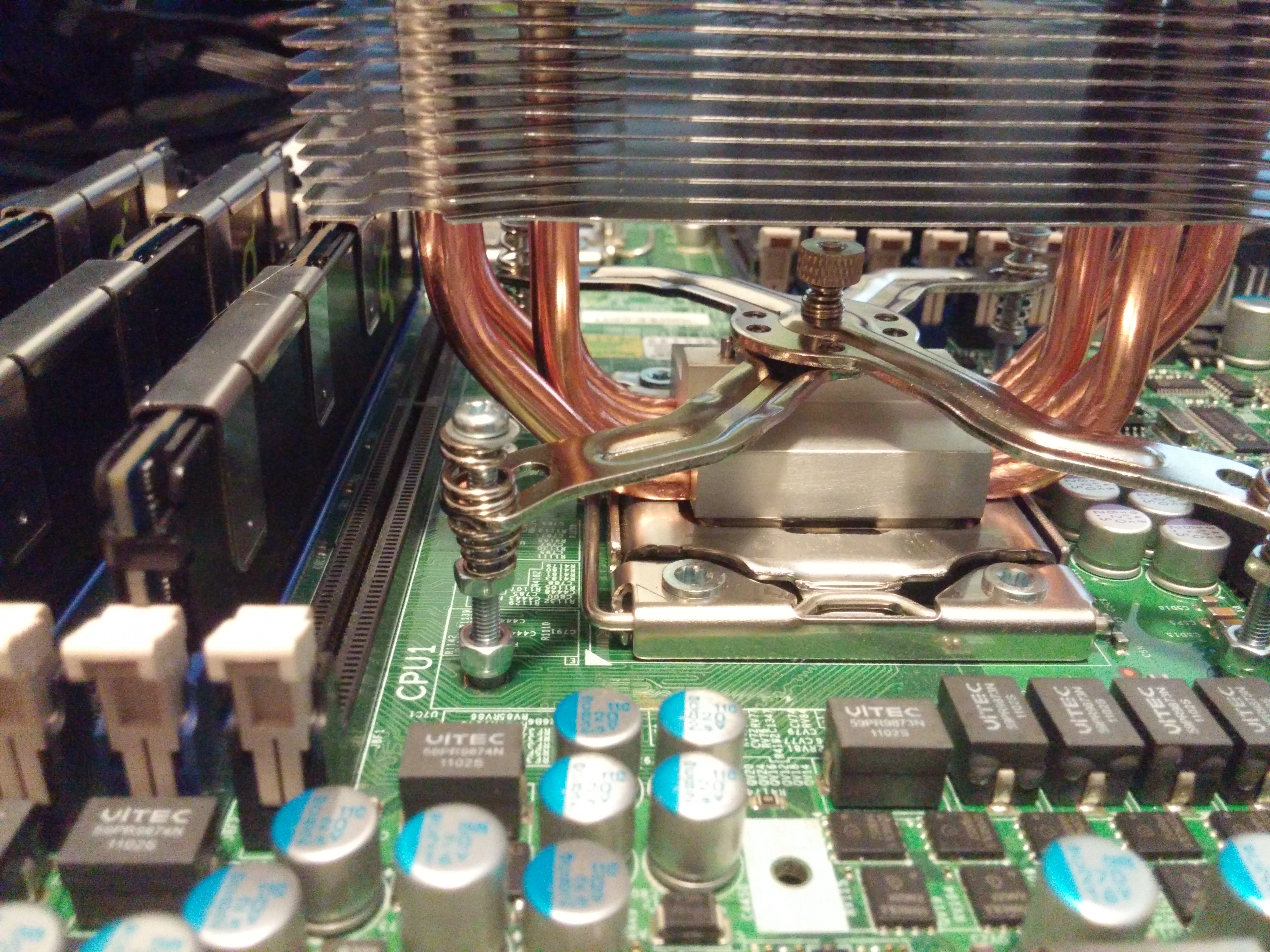

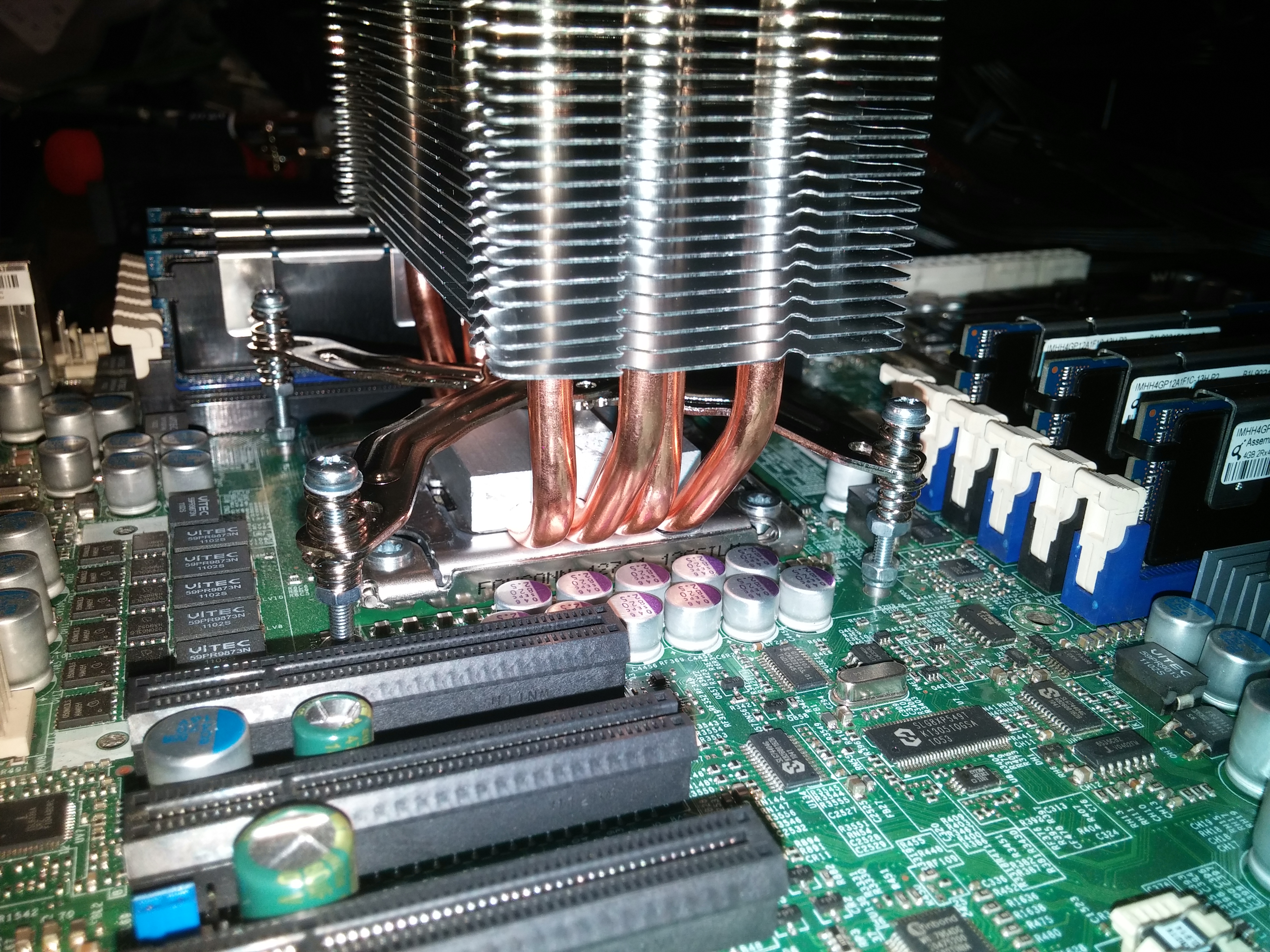

- CPU Fan: Noctua NH-U12DXi4. $64 from Newegg

- Power Supply: SeaSonic Platinum SS-860XP2 860W. $125 after discount and rebate (new)

- HBA: IBM M1015. $100 on eBay with full bracket

- SAS Cables: 2 x SFF-8087 breakout cables (about $7 each on eBay)

- SATA Cables: 3 x SATA cables

- Disks: 11x6TB (in RAIDZ3) - WD Red (brand new)

- NIC: add a Chelsio S320 or T420 10GbE card in the future

- Boot Device: 2 x 16GB Sandisk Cruzer FIT USB 2.0 (SDCZ33-016G-A11) - $8 each from Amazon

- USB Header: 2 x USB A Female to USB Motherboard 4 Pin Header - $4 each from Amazon
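As a rough sanity check on the list above, here is a small sketch of the capacity and port arithmetic. It ignores ZFS overhead (metadata, padding, recommended free space), so real usable space will be lower. It also assumes each SFF-8087 breakout cable serves 4 drives and that the 3 SATA cables go to onboard motherboard ports; the function names are only illustrative.

```python
# Rough capacity and port arithmetic for the build above.
# Disk counts and sizes come from the post; ZFS overhead is deliberately ignored.


def usable_tb(disks: int, parity: int, disk_tb: float) -> float:
    """Raw data capacity in TB: (disks - parity) * disk size."""
    if parity >= disks:
        raise ValueError("need more disks than parity disks")
    return (disks - parity) * disk_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


pool_tb = usable_tb(disks=11, parity=3, disk_tb=6)    # RAIDZ3 pool
backup_tb = usable_tb(disks=6, parity=2, disk_tb=3)   # QNAP in RAID-6

# Assumption: each SFF-8087 breakout cable fans out to 4 drives, and the
# 3 SATA cables connect to onboard motherboard SATA ports.
drive_ports = 2 * 4 + 3

print(f"RAIDZ3 pool: {pool_tb:.0f} TB raw, about {tb_to_tib(pool_tb):.1f} TiB")
print(f"QNAP backup: {backup_tb:.0f} TB raw")
print(f"Drive connections available: {drive_ports}")
```

On these figures the 11 drives use all 11 connections and 11 of the 12 bays, and the 48TB raw pool sits between the ~40TB needed after one year and the ~50TB needed after two, so the JBOD expansion would come into play around then.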

Thank you for your help!
