Hi :)

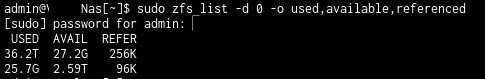

I've set up a TrueNAS SCALE box and created one big ZFS RaidZ2 pool of 36 TB consisting of 6x10 TB disks.

In that pool I have 5 'slices' for storing different stuff from my network.

In one of those 'slices' I've put 5 TB of data, plus some more on the other 'slices'. I have only a few GB free (but I will increase the pool size as soon as I get 4 more 10 TB disks, as I can't just add one disk at a time... ;)).

All the 'slices' are SMB or SMB/NFS shares.

After some months of running 24/7 I've noticed something very confusing: on that 5 TB slice some data has been erased at random. The files are still listed, but they are zero bytes in size...

That should not happen on a ZFS RaidZ2 array, which is said to be very secure...

All the disks are good with no errors, so what has happened? And how can I prevent this from happening again? This NAS is meant to keep my data safe, so I would like it not to eat it ;)
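
To get an idea of how many files are affected, a quick scan for zero-byte files might help. This is only a minimal Python sketch, not anything that ships with TrueNAS; the function name `find_zero_byte_files` and the example path in the usage note are placeholders made up for illustration.

```python
import os
from pathlib import Path


def find_zero_byte_files(root):
    """Return a sorted list of regular files under `root` that are 0 bytes long."""
    empty = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                # Skip symlinks so only real files on the share are counted.
                if path.is_symlink() or not path.is_file():
                    continue
                if path.stat().st_size == 0:
                    empty.append(str(path))
            except OSError:
                # Unreadable entries are skipped rather than aborting the scan.
                continue
    return sorted(empty)
```

Calling `find_zero_byte_files("/mnt/tank/share")` (a hypothetical mount path) would return the list of empty files, which could then be compared against a backup or an older snapshot if one exists.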

Any suggestions?

Thanks in advance for the help.

Jeff
