Borja


Hello,

I recently changed the communication protocol of a replication task that I have been running for some years between two FreeNAS/TrueNAS systems. I switched it from legacy to SSH.

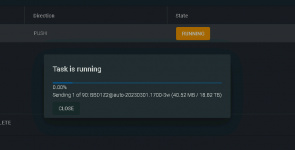

The problem is that the source system has 9.65TB of data in use, but the replication task is trying to send more than 25TB. At first I thought it was an error, so I waited for the process to complete, but it fills up the destination system. I don't know what it is sending, because the total capacity of the source NAS is 24TB and it is trying to send more than that.

Any ideas what's happening, and how I can resolve this?

Thanks
