Hello, I am having some issues getting my backup/zfs-replication setup working.

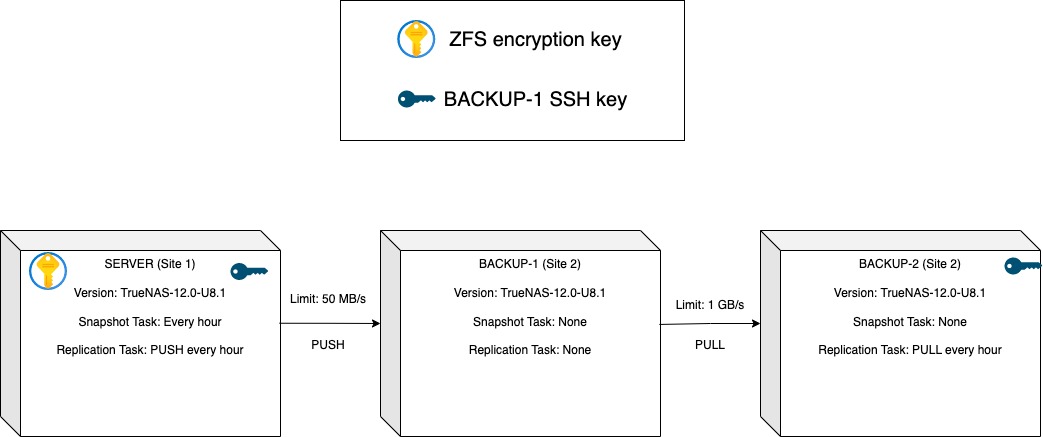

My idea of a backup scheme is illustrated in the picture below.

I have a server (SERVER) at Site 1 and two backup servers (BACKUP-1 and BACKUP-2) at Site 2. SERVER and BACKUP-2 both have (unique) SSH keys to BACKUP-1, while BACKUP-1 has no access to the other servers. I set it up this way so that if someone unwelcome gains access to SERVER, they cannot reach BACKUP-2; if someone gets access to BACKUP-1, they cannot reach any of the other servers; and if someone gets access to BACKUP-2, they cannot reach SERVER. I thought this configuration was a neat way of limiting the risk if one server is breached, but please let me know if you have any better ideas.
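The access rules above form a small directed graph in which an edge A to B means that host A stores an SSH key that can log in to host B. A minimal POSIX `sh` sketch, assuming only the two keys described and using a hypothetical `reachable` helper, that follows stored keys transitively to list which hosts an intruder on each machine could log in to:

```sh
#!/bin/sh
# Hypothetical model of the SSH keys described above:
# each line "A B" means host A stores a key that can log in to host B.
KEYS='SERVER BACKUP-1
BACKUP-2 BACKUP-1'

# reachable HOST: print every host an intruder on HOST could log in to,
# following stored keys transitively (breadth-first search).
reachable() (
    seen=" $1 "
    queue=$1
    while [ -n "$queue" ]; do
        # Pop the first host from the space-separated queue.
        set -- $queue
        current=$1
        shift
        queue=$*
        # Visit every host that $current holds a key for.
        for next in $(printf '%s\n' "$KEYS" | awk -v h="$current" '$1 == h { print $2 }'); do
            case $seen in
                *" $next "*) ;;  # already visited
                *)
                    seen="$seen$next "
                    queue="$queue $next"
                    echo "$next"
                    ;;
            esac
        done
    done
)

reachable SERVER    # prints BACKUP-1
reachable BACKUP-1  # prints nothing
reachable BACKUP-2  # prints BACKUP-1
```

The model only covers SSH logins; it does not describe what a session on BACKUP-1 is allowed to do with the datasets stored there.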

My problem is with the replication task defined on BACKUP-2 to pull from BACKUP-1, which does not seem to work (at least not reliably). I'll explain my setup first and elaborate on the problem at the end.

The reason for not upgrading to 13 is because I use Proxmox to communicate with TrueNAS (SERVER Site 1), and Proxmox is, to my understanding, not compatible with the iSCSI implementation in TrueNAS Core v 13. Upgrading BACKUP-1 and BACKUP-2 to v13 is then again not possible, because TrueNAS does not support replication between v13 and v12. Please correct med if I am wrong!

BACKUP-1 has the following pool setup:

BACKUP-2 has the following pool setup:

BACKUP-1 and BACKUP-2 has no periodic snapshot task.

On BACKUP-2 [RT2] (PULL FROM BACKUP-1 TO BACKUP-2):

1: It does not seem like “Synchronize Destination Snapshots With Source” has much effect. BACKUP-2 was down for a while, and thus RT2 was not being run. After getting BACKUP-2 up again, and starting RT2 errors like the ones below started to pop up:

I thought enabling “Synchronize Destination Snapshots With Source” would allow overwriting existing data in

I have not seen any of the same errors connected to RT1.

Anyway, I circumvented this problem by creating a new dataset in

2: After creating the new dataset, everything was working fine for a while, until recently.

The problem started after we added a new ZVOL on SERVER under

RT1 picked up this new ZVOL without any problems, and the replication task is still working as intended.

RT2 picked it up once and finished its replication task. The next time RT2 was run however, the task never completed. RT2 was able to replicate all snapshots of

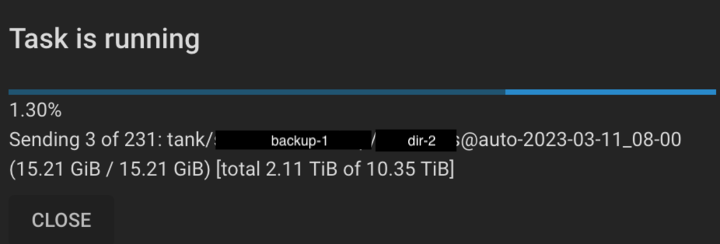

The GUI reports that the task is running, but it never seems to complete (It would hang like this forever):

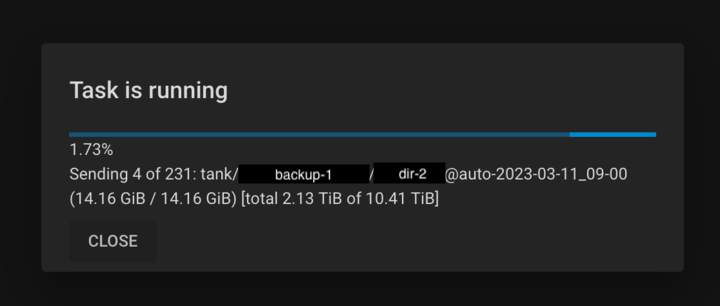

So I thought disabling, enabling and starting RT2 again hopefully would solve this problem. However, all it seems to do is move the replication task one image forwards, before it again freezes (notice the timestamp have moved one hour forwards, which matches with the snapshot task at SERVER):

This behaviour continued when I tried to disable, enable and start the replication task for five more times, until I gave up on this solution.

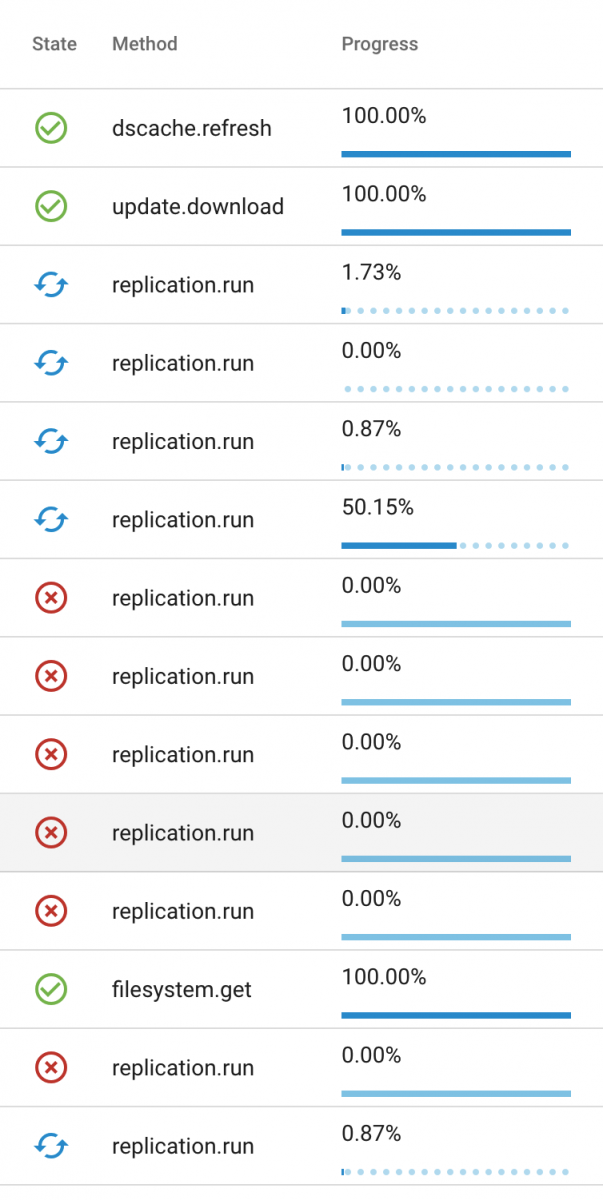

Investigating further I wanted to make sure that all replication task processes was killed before I tried to start the task again. The TaskManager reported quite a lot of processes running, although no replication task was enabled (Ignored the failed tasks, thats me experimenting around):

Opening a shell to try and find the process there and kill them I ran:

And then I killed process 1466 to 1471. The TaskManager was however reporting that some

So, full of hope, I started the replication task again and hope it would be able to finish this time (if not successfully, at least with an error). However, the task replicated

Now, I could probably “solve” this problem by creating a new dataset at BACKUP-2, and a new replication task, but that would require transferring a lot of data, and does not seem like a reliable solution, as this problem might just pop up there as well..

I would greatly appreciate if anyone has any idea of what is going on, if there is something I am doing wrong or have completely misunderstood or just some general thoughts about what I can do to try and narrow this problem down.

Thanks!

My idea of a backup scheme is illustrated in the picture below.

I have a server (SERVER) at Site 1, and two backup servers (BACKUP-1 and BACKUP-2) located at Site 2. SERVER and BACKUP-2 both have (unique) SSH keys to BACKUP-1. BACKUP-1 has no access to the other servers. I have set it up this way, such that if someone unwelcome gains access to SERVER, then they cannot reach BACKUP-2, if someone gets access to BACKUP-1, they cant get access to any of the other servers, and if someone gets access to BACKUP-2, they cannot reach SERVER. I though this configuration was a neat way of limiting potential risk of one server is breached, but please let me know if you have any better ideas.

My problem is with the replication task defined on BACKUP-2 to pull from BACKUP-1, that does not seem to work (at least not stable). I’ll explain my setup first, and elaborate more on the problem at the end.

Setup:

All servers (SERVER, BACKUP-1, BACKUP-2) are running TrueNAS Core v12.0-U8.1. The reason for not upgrading to v13 is that I use Proxmox to communicate with TrueNAS (SERVER, Site 1), and Proxmox is, to my understanding, not compatible with the iSCSI implementation in TrueNAS Core v13. Upgrading only BACKUP-1 and BACKUP-2 to v13 is then not possible either, because TrueNAS does not support replication between v13 and v12. Please correct me if I am wrong!

Pools

SERVER has the following pool setup:

```
/
  tank
    dir-1
    dir-2
    dir-3
```

BACKUP-1 has the following pool setup:

```
/
  tank
    backup-1
      dir-1  # by replication
      dir-2  # by replication
      dir-3  # by replication
```

BACKUP-2 has the following pool setup:

```
/
  tank
    backup-2
      dir-1  # by replication
      dir-2  # by replication
      dir-3  # by replication
```

dir-1, dir-2, and dir-3 on SERVER all contain different ZVOLs.

Periodic Snapshot Tasks

SERVER has the following periodic snapshot task:

| Setting | Value |
|---|---|
| Dataset | tank/ |
| Recursive | Yes |
| Schedule | Every hour |
| Allow taking empty snapshots | Yes |

BACKUP-1 and BACKUP-2 have no periodic snapshot tasks.
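The snapshot names that appear later in the `ps` output (`auto-2023-03-11_09-00`, `auto-2023-03-11_10-00`) suggest a naming schema of the form `auto-%Y-%m-%d_%H-%M`; that schema is inferred from those names, not taken from the task settings above. A small sketch, with a hypothetical `snapshot_name` helper, of the names the hourly task would produce:

```sh
#!/bin/sh
# snapshot_name YEAR MONTH DAY HOUR MINUTE
# Format a snapshot name like those in the ps output, assuming the
# schema auto-%Y-%m-%d_%H-%M. Pass plain integers without leading
# zeros ("9", not "09"): printf may read a leading 0 as octal.
snapshot_name() {
    printf 'auto-%04d-%02d-%02d_%02d-%02d\n' "$1" "$2" "$3" "$4" "$5"
}

# Three consecutive runs of the hourly task on 11 March 2023;
# prints auto-2023-03-11_09-00, then _10-00, then _11-00.
for hour in 9 10 11; do
    snapshot_name 2023 3 11 "$hour" 0
done
```

Because every field is zero-padded, sorting these names as plain text also sorts them by time.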

Replication Tasks

On SERVER [RT1] (PUSH FROM SERVER TO BACKUP-1):

```
Direction: PUSH
Transport: SSH
Stream compression: Disabled
Limit: 50 MiB
Allow Blocks Larger than 128KB: Yes
Allow Compressed WRITE Records: Yes
Source: /tank/dir-1, /tank/dir-2, /tank/dir-3
Recursive: Yes
Include Dataset Properties: Yes
(Almost) Full Filesystem Replication: No
Destination: /tank/backup-1/
Destination Dataset Read-only Policy: Set
Encryption: No
Synchronize Destination Snapshots With Source: Yes
```

On BACKUP-2 [RT2] (PULL FROM BACKUP-1 TO BACKUP-2):

```
Direction: PULL
Transport: SSH
Stream compression: Disabled
Limit: None (1 GB/s cable)
Allow Blocks Larger than 128KB: Yes
Allow Compressed WRITE Records: Yes
Source: /tank/backup-1/dir-1, /tank/backup-1/dir-2, /tank/backup-1/dir-3
Recursive: N/A
Include Dataset Properties: N/A
(Almost) Full Filesystem Replication: Yes
Destination: /tank/backup-2/
Destination Dataset Read-only Policy: Set
Encryption: No
Synchronize Destination Snapshots With Source: Yes
```
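With these two tasks, each dataset keeps the same relative path on both hops: RT1 places `tank/dir-N` under `tank/backup-1`, and RT2 places `tank/backup-1/dir-N` under `tank/backup-2` (the `ps` output further down shows `tank/backup-1/dir-2` being received as `tank/backup-2/dir-2`). A sketch of that prefix mapping using ZFS dataset names (without the leading slash shown in the GUI), a hypothetical `map_dataset` helper, and a made-up ZVOL name `zvol-new`:

```sh
#!/bin/sh
# map_dataset NAME SRC_PREFIX DST_PREFIX
# Print NAME with SRC_PREFIX replaced by DST_PREFIX; fail if NAME is not
# SRC_PREFIX itself or a descendant of it.
map_dataset() (
    case $1 in
        "$2" | "$2"/*) printf '%s\n' "$3${1#"$2"}" ;;
        *) echo "map_dataset: $1 is not under $2" >&2; exit 1 ;;
    esac
)

# "zvol-new" is a made-up ZVOL name used only for illustration.
map_dataset tank/dir-2/zvol-new tank tank/backup-1                    # prints tank/backup-1/dir-2/zvol-new
map_dataset tank/backup-1/dir-2/zvol-new tank/backup-1 tank/backup-2  # prints tank/backup-2/dir-2/zvol-new
```

Matching on `"$2"/*` rather than a bare string prefix keeps a sibling such as `tank/backup-10` from being mistaken for a child of `tank/backup-1`.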

Problem

Now, the problem is that RT1 works perfectly, while there are a lot of problems with RT2.

1: It does not seem like “Synchronize Destination Snapshots With Source” has much effect. BACKUP-2 was down for a while, so RT2 was not being run. After getting BACKUP-2 up again and starting RT2, errors like the ones below started to pop up:

```
Error: Refusing to overwrite data

Error: Target dataset 'backup-2' does not have snapshots but has data (29071913 bytes used) and replication from scratch is not allowed. Refusing to overwrite existing data
```

I thought enabling “Synchronize Destination Snapshots With Source” would allow overwriting the existing data in /tank/backup-2/. Is that not the case? I have not seen any of these errors with RT1.
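A `zfs send -i BASE TARGET` stream can only be received on a destination that already holds BASE, so incremental replication needs at least one snapshot present on both sides; the second error above describes a destination with data but no snapshots at all. A minimal sketch, using a hypothetical `newest_common_snapshot` helper, of finding that shared base from two snapshot lists. The lists are assumed to come from something like `zfs list -H -o name -t snapshot -s creation -d 1 DATASET`, with everything up to the `@` removed (for example with `cut -d@ -f2`):

```sh
#!/bin/sh
# newest_common_snapshot SRC_LIST DST_LIST
# Each file lists snapshot names (the part after "@"), oldest first.
# Prints the newest source snapshot that also exists on the destination,
# or exits with status 1 when the two sides share no snapshot.
newest_common_snapshot() (
    awk 'FILENAME == ARGV[1] { on_dst[$0] = 1; next }  # first file: destination
         ($0 in on_dst)      { common = $0 }           # later matches win
         END { if (common == "") exit 1; print common }' "$2" "$1"
)

# Example with temporary files standing in for the zfs list output:
src=$(mktemp); dst=$(mktemp)
printf '%s\n' auto-2023-03-11_09-00 auto-2023-03-11_10-00 > "$src"
printf '%s\n' auto-2023-03-11_09-00 > "$dst"
newest_common_snapshot "$src" "$dst"   # prints auto-2023-03-11_09-00
rm -f "$src" "$dst"
```

An empty destination list, as in the error above, makes the helper exit with status 1, since there is no base to send an increment from.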

Anyway, I circumvented this problem by creating a new dataset in /tank/ on BACKUP-2.

2: After creating the new dataset, everything worked fine for a while, until recently.

The problem started after we added a new ZVOL on SERVER under tank/dir-2/. RT1 picked up this new ZVOL without any problems, and that replication task is still working as intended.
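RT1 is configured as recursive, and the `zfs send` run for RT2 (visible in the `ps` output below) uses `-R`, which includes descendant datasets, so the new ZVOL is expected to travel along on both hops. A quick way to compare what actually arrived on each backup is a set difference of dataset names. A sketch with a hypothetical `missing_on_destination` helper, assuming the lists come from something like `zfs list -H -o name -r DATASET` with the dataset prefix removed:

```sh
#!/bin/sh
# missing_on_destination SRC_LIST DST_LIST
# Each file lists dataset names relative to the replication root, one per line.
# Prints, in source order, the names that have no counterpart on the destination.
missing_on_destination() (
    awk 'FILENAME == ARGV[1] { on_dst[$0] = 1; next }
         !($0 in on_dst)' "$2" "$1"
)

# Hypothetical lists; "zvol-a" and "zvol-new" are made-up names.
src=$(mktemp); dst=$(mktemp)
printf '%s\n' dir-2 dir-2/zvol-a dir-2/zvol-new > "$src"
printf '%s\n' dir-2 dir-2/zvol-a > "$dst"
missing_on_destination "$src" "$dst"   # prints dir-2/zvol-new
rm -f "$src" "$dst"
```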

RT2 picked it up once and finished its replication task. The next time RT2 was run however, the task never completed. RT2 was able to replicate all snapshots of

/tank/backup-1/dir-1, but is freezing/hanging on snapshots of /tank/backup-1/dir-2.The GUI reports that the task is running, but it never seems to complete (It would hang like this forever):

So I thought disabling, enabling and starting RT2 again hopefully would solve this problem. However, all it seems to do is move the replication task one image forwards, before it again freezes (notice the timestamp have moved one hour forwards, which matches with the snapshot task at SERVER):

This behaviour continued when I tried to disable, enable and start the replication task for five more times, until I gave up on this solution.
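The `zfs send` in the `ps` output below covers a single step between two consecutive hourly snapshots (`-i tank/backup-1/dir-2@auto-2023-03-11_09-00` to `tank/backup-1/dir-2@auto-2023-03-11_10-00`), which fits the observation that each restart moves RT2 forward by exactly one snapshot. A sketch that lists the consecutive base/target pairs still outstanding, assuming a hypothetical `incremental_steps` helper and a source snapshot list ordered oldest first:

```sh
#!/bin/sh
# incremental_steps LAST_ON_DST SRC_LIST
# SRC_LIST names the source snapshots, oldest first. Prints one
# "BASE TARGET" pair for each consecutive step after LAST_ON_DST;
# exits with status 1 if LAST_ON_DST is not in the list.
incremental_steps() (
    awk -v last="$1" '
        found      { print prev, $0 }  # step from the previous snapshot
        $0 == last { found = 1 }       # start after the destination head
        found      { prev = $0 }
        END { if (!found) exit 1 }' "$2"
)

src=$(mktemp)
printf '%s\n' auto-2023-03-11_09-00 auto-2023-03-11_10-00 auto-2023-03-11_11-00 > "$src"
incremental_steps auto-2023-03-11_09-00 "$src"
# prints:
# auto-2023-03-11_09-00 auto-2023-03-11_10-00
# auto-2023-03-11_10-00 auto-2023-03-11_11-00
rm -f "$src"
```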

Investigating further, I wanted to make sure that all replication task processes were killed before I tried to start the task again. The Task Manager reported quite a lot of running processes, although no replication task was enabled (ignore the failed tasks; that's me experimenting):

I opened a shell to find the processes and kill them, and ran:

```
root@backup-2[~]# ps -A | grep zfs
16 - DL 0:09.28 [zfskern]
227 - Ss 0:00.03 /usr/local/sbin/zfsd
1466 - Is 0:00.01 sh -c exec 3>&1; eval $(exec 4>&1 >&3 3>&-; { /usr/local/bin/ssh -i /tmp/tmpzca3ano_ -o UserKnownHostsFile=/tmp/tmpaclvz49q -o StrictHostKeyChecking=yes -o BatchMode=yes -o ConnectTimeout=10 -p22000 root@10.0.15.10 'sh -c '"'"'(zfs send -V -R -w -i tank/backup-1/dir-2@auto-2023-03-11_09-00 -L -c tank/backup-1/dir-2@auto-2023-03-11_10-00 & PID=$!; echo "zettarepl: zfs send PID is $PID" 1>&2; wait $PID)'"'"'' 4>&-; echo "pipestatus0=$?;" >&4; } | { zfs recv -s -F -x mountpoint -x sharenfs -x sharesmb tank/backup-2/dir-2 4>&-; echo "pipestatus1=$?;" >&4; }); [ $pipestatus0 -ne0 ] && exit $pipestatus0; [ $pipestatus1 -ne 0 ] && exit $pipestatus1; exit 0
1467 - I 0:00.00 sh -c exec 3>&1; eval $(exec 4>&1 >&3 3>&-; { /usr/local/bin/ssh -i /tmp/tmpzca3ano_ -o UserKnownHostsFile=/tmp/tmpaclvz49q -o StrictHostKeyChecking=yes -o BatchMode=yes -o ConnectTimeout=10 -p22000 root@10.0.15.10 'sh -c '"'"'(zfs send -V -R -w -i tank/backup-1/dir-2@auto-2023-03-11_09-00 -L -c tank/backup-1/dir-2@auto-2023-03-11_10-00 & PID=$!; echo "zettarepl: zfs send PID is $PID" 1>&2; wait $PID)'"'"'' 4>&-; echo "pipestatus0=$?;" >&4; } | { zfs recv -s -F -x mountpoint -x sharenfs -x sharesmb tank/backup-2/dir-2 4>&-; echo "pipestatus1=$?;" >&4; }); [ $pipestatus0 -ne0 ] && exit $pipestatus0; [ $pipestatus1 -ne 0 ] && exit $pipestatus1; exit 0
1468 - I 0:00.00 sh -c exec 3>&1; eval $(exec 4>&1 >&3 3>&-; { /usr/local/bin/ssh -i /tmp/tmpzca3ano_ -o UserKnownHostsFile=/tmp/tmpaclvz49q -o StrictHostKeyChecking=yes -o BatchMode=yes -o ConnectTimeout=10 -p22000 root@10.0.15.10 'sh -c '"'"'(zfs send -V -R -w -i tank/backup-1/dir-2@auto-2023-03-11_09-00 -L -c tank/backup-1/dir-2@auto-2023-03-11_10-00 & PID=$!; echo "zettarepl: zfs send PID is $PID" 1>&2; wait $PID)'"'"'' 4>&-; echo "pipestatus0=$?;" >&4; } | { zfs recv -s -F -x mountpoint -x sharenfs -x sharesmb tank/backup-2/dir-2 4>&-; echo "pipestatus1=$?;" >&4; }); [ $pipestatus0 -ne0 ] && exit $pipestatus0; [ $pipestatus1 -ne 0 ] && exit $pipestatus1; exit 0
1469 - I 0:00.00 sh -c exec 3>&1; eval $(exec 4>&1 >&3 3>&-; { /usr/local/bin/ssh -i /tmp/tmpzca3ano_ -o UserKnownHostsFile=/tmp/tmpaclvz49q -o StrictHostKeyChecking=yes -o BatchMode=yes -o ConnectTimeout=10 -p22000 root@10.0.15.10 'sh -c '"'"'(zfs send -V -R -w -i tank/backup-1/dir-2@auto-2023-03-11_09-00 -L -c tank/backup-1/dir-2@auto-2023-03-11_10-00 & PID=$!; echo "zettarepl: zfs send PID is $PID" 1>&2; wait $PID)'"'"'' 4>&-; echo "pipestatus0=$?;" >&4; } | { zfs recv -s -F -x mountpoint -x sharenfs -x sharesmb tank/backup-2/dir-2 4>&-; echo "pipestatus1=$?;" >&4; }); [ $pipestatus0 -ne0 ] && exit $pipestatus0; [ $pipestatus1 -ne 0 ] && exit $pipestatus1; exit 0
1470 - R 0:57.27 /usr/local/bin/ssh -i /tmp/tmpzca3ano_ -o UserKnownHostsFile=/tmp/tmpaclvz49q -o StrictHostKeyChecking=yes -o BatchMode=yes -o ConnectTimeout=10 -p22000 root@10.0.15.10 sh -c '(zfs send -V -R -w -i tank/backup-1/dir-2@auto-2023-03-11_09-00 -L -c tank/backup-1/dir-2@auto-2023-03-11_10-00 & PID=$!; echo "zettarepl: zfs send PID is $PID" 1>&2; wait $PID)'
1471 - R 0:18.36 zfs recv -s -F -x mountpoint -x sharenfs -x sharesmb tank/backup-2/dir-2
```

And then I killed processes 1466 to 1471. However, the Task Manager still reported a `replication.run` process, so I restarted the system as well (using the GUI). After the restart, neither the Task Manager nor `ps -A | grep zfs` reported any running tasks.

So, full of hope, I started the replication task again, hoping it would finish this time (if not successfully, at least with an error). However, while the task replicated /tank/backup-1/dir-1 just fine, when it got to /tank/backup-1/dir-2 it moved the timestamp one hour forward and has been stuck there ever since. I even repeated this entire process and created a new (identical) replication task, in case the problem was related to the ID of the previous task, but that did not work either.

Now, I could probably “solve” this problem by creating a new dataset on BACKUP-2 and a new replication task, but that would require transferring a lot of data, and it does not seem like a reliable solution, as the problem might just pop up there as well.
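Processes 1466 to 1471 in the `ps` output above form one pipeline: `ssh` runs `zfs send` on BACKUP-1, and its stream is piped into `zfs recv` on BACKUP-2, inside a wrapper that collects both exit statuses through file descriptors 3 and 4. A sketch that reproduces that wrapper with harmless commands, so it can be tried without ZFS; `run_pipeline` is a hypothetical name, and `sh -c` stands in for the real `ssh` and `zfs` commands:

```sh
#!/bin/sh
# run_pipeline SENDER RECEIVER
# Hypothetical stand-in for the wrapper in the ps output: runs
# "SENDER | RECEIVER", lets RECEIVER's output through, and exits with
# the sender's status if it failed, otherwise with the receiver's.
run_pipeline() (
    sender=$1
    receiver=$2
    exec 3>&1  # fd 3: the caller's stdout
    # Inside $(...), fd 4 is the capture pipe and stdout is moved back to
    # fd 3, so only the two "pipestatusN=...;" assignments are captured.
    eval "$(exec 4>&1 >&3 3>&-
        { sh -c "$sender" 4>&-; echo "pipestatus0=$?;" >&4; } |
            { sh -c "$receiver" 4>&-; echo "pipestatus1=$?;" >&4; })"
    [ "$pipestatus0" -ne 0 ] && exit "$pipestatus0"
    [ "$pipestatus1" -ne 0 ] && exit "$pipestatus1"
    exit 0
)

run_pipeline 'printf hello' 'cat'; echo " status=$?"  # prints: hello status=0
run_pipeline 'exit 2' 'cat'; echo "status=$?"         # prints: status=2
```

Because the captured assignments only become available once both sides of the pipe have exited, a `zfs recv` or `ssh` that never exits keeps the whole wrapper waiting, which is consistent with the GUI showing the task as running indefinitely.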

I would greatly appreciate it if anyone has an idea of what is going on, whether there is something I am doing wrong or have completely misunderstood, or just some general thoughts on what I can try in order to narrow this problem down.

Thanks!