mew

Cadet

- Joined: Jun 20, 2022
- Messages: 8

I am preparing to upgrade my TrueNAS SCALE server from a pool of two mirrored 14 TB drives to a pool of ten 14 TB drives. A friend loaned me two of his 14 TB drives as a temporary buffer so I can swap out my own drives, resilver onto his, and use mine for the new pool. I swapped out one drive and started resilvering with no issues, thinking this would speed up creating the new pool from my own drives. While that was running, I tried to start my Plex server and found that it doesn't work at all. I am using TrueCharts apps and asked them whether this is a known issue that occurs while drives resilver. On further inspection, it seems the storage backend is (allegedly) not working at all. I restarted the system a little way into the resilver to see if that would fix the Kubernetes issue, but the problem persisted.

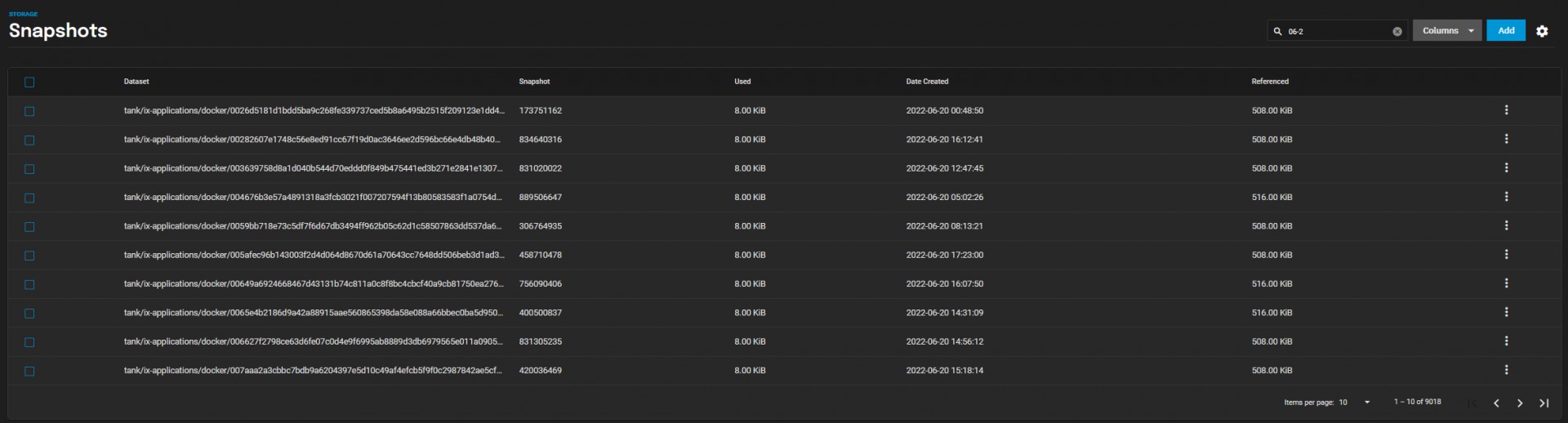

This is the output from kube-system

Code:

root@server[~]# k3s kubectl describe pods -n kube-system

Name: openebs-zfs-node-g5mw6

Namespace: kube-system

Priority: 900001000

Priority Class Name: openebs-zfs-csi-node-critical

Node: ix-truenas/192.168.1.49

Start Time: Tue, 21 Jun 2022 17:51:09 -0700

Labels: app=openebs-zfs-node

controller-revision-hash=57f5455f6b

openebs.io/component-name=openebs-zfs-node

openebs.io/version=ci

pod-template-generation=1

role=openebs-zfs

Annotations: <none>

Status: Pending

IP: 192.168.1.49

IPs:

IP: 192.168.1.49

Controlled By: DaemonSet/openebs-zfs-node

Containers:

csi-node-driver-registrar:

Container ID:

Image: k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.3.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--v=5

--csi-address=$(ADDRESS)

--kubelet-registration-path=$(DRIVER_REG_SOCK_PATH)

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment:

ADDRESS: /plugin/csi.sock

DRIVER_REG_SOCK_PATH: /var/lib/kubelet/plugins/zfs-localpv/csi.sock

KUBE_NODE_NAME: (v1:spec.nodeName)

NODE_DRIVER: openebs-zfs

Mounts:

/plugin from plugin-dir (rw)

/registration from registration-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qvnfn (ro)

openebs-zfs-plugin:

Container ID:

Image: openebs/zfs-driver:2.0.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--nodename=$(OPENEBS_NODE_NAME)

--endpoint=$(OPENEBS_CSI_ENDPOINT)

--plugin=$(OPENEBS_NODE_DRIVER)

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment:

OPENEBS_NODE_NAME: (v1:spec.nodeName)

OPENEBS_CSI_ENDPOINT: unix:///plugin/csi.sock

OPENEBS_NODE_DRIVER: agent

OPENEBS_NAMESPACE: openebs

ALLOWED_TOPOLOGIES: All

Mounts:

/dev from device-dir (rw)

/home/keys from encr-keys (rw)

/host from host-root (ro)

/plugin from plugin-dir (rw)

/sbin/zfs from chroot-zfs (rw,path="zfs")

/var/lib/kubelet/ from pods-mount-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qvnfn (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

device-dir:

Type: HostPath (bare host directory volume)

Path: /dev

HostPathType: Directory

encr-keys:

Type: HostPath (bare host directory volume)

Path: /home/keys

HostPathType: DirectoryOrCreate

chroot-zfs:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: openebs-zfspv-bin

Optional: false

host-root:

Type: HostPath (bare host directory volume)

Path: /

HostPathType: Directory

registration-dir:

Type: HostPath (bare host directory volume)

Path: /var/lib/kubelet/plugins_registry/

HostPathType: DirectoryOrCreate

plugin-dir:

Type: HostPath (bare host directory volume)

Path: /var/lib/kubelet/plugins/zfs-localpv/

HostPathType: DirectoryOrCreate

pods-mount-dir:

Type: HostPath (bare host directory volume)

Path: /var/lib/kubelet/

HostPathType: Directory

kube-api-access-qvnfn:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/network-unavailable:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 52m default-scheduler Successfully assigned kube-system/openebs-zfs-node-g5mw6 to ix-truenas

Normal SandboxChanged 10m (x12 over 32m) kubelet Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 1s (x25 over 50m) kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to create a sandbox for pod "openebs-zfs-node-g5mw6": operation timeout: context deadline exceeded

Name: coredns-d76bd69b-6h7nj

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: ix-truenas/192.168.1.49

Start Time: Tue, 21 Jun 2022 17:51:09 -0700

Labels: k8s-app=kube-dns

pod-template-hash=d76bd69b

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/coredns-d76bd69b

Containers:

coredns:

Container ID:

Image: rancher/mirrored-coredns-coredns:1.9.1

Image ID:

Ports: 53/UDP, 53/TCP, 9153/TCP

Host Ports: 0/UDP, 0/TCP, 0/TCP

Args:

-conf

/etc/coredns/Corefile

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Limits:

memory: 170Mi

Requests:

cpu: 100m

memory: 70Mi

Liveness: http-get http://:8080/health delay=60s timeout=1s period=10s #success=1 #failure=3

Readiness: http-get http://:8181/ready delay=0s timeout=1s period=2s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/coredns from config-volume (ro)

/etc/coredns/custom from custom-config-volume (ro)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-lxmn7 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: coredns

Optional: false

custom-config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: coredns-custom

Optional: true

kube-api-access-lxmn7:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: beta.kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly op=Exists

node-role.kubernetes.io/control-plane:NoSchedule op=Exists

node-role.kubernetes.io/master:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 57m default-scheduler 0/1 nodes are available: 1 node(s) had taint {ix-svc-start: }, that the pod didn't tolerate.

Warning FailedScheduling 54m (x1 over 55m) default-scheduler 0/1 nodes are available: 1 node(s) had taint {ix-svc-start: }, that the pod didn't tolerate.

Warning FailedScheduling 53m default-scheduler 0/1 nodes are available: 1 node(s) had taint {node.kubernetes.io/unreachable: }, that the pod didn't tolerate.

Normal Scheduled 52m default-scheduler Successfully assigned kube-system/coredns-d76bd69b-6h7nj to ix-truenas

Warning FailedSync 29m (x5 over 30m) kubelet error determining status: rpc error: code = Unknown desc = Error: No such container: 438d145717f95533dc20661f4ca3259e5af73e94521a4ec2a05fbd0ec0c7781a

Normal SandboxChanged 17m (x9 over 34m) kubelet Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 5m19s (x22 over 50m) kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to create a sandbox for pod "coredns-d76bd69b-6h7nj": operation timeout: context deadline exceeded

Name: nvidia-device-plugin-daemonset-n7fwf

Namespace: kube-system

Priority: 2000001000

Priority Class Name: system-node-critical

Node: ix-truenas/192.168.1.49

Start Time: Tue, 21 Jun 2022 17:51:37 -0700

Labels: controller-revision-hash=77f95bfc79

name=nvidia-device-plugin-ds

pod-template-generation=1

Annotations: scheduler.alpha.kubernetes.io/critical-pod:

Status: Pending

IP:

IPs: <none>

Controlled By: DaemonSet/nvidia-device-plugin-daemonset

Containers:

nvidia-device-plugin-ctr:

Container ID:

Image: nvidia/k8s-device-plugin:v0.10.0

Image ID:

Port: <none>

Host Port: <none>

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/lib/kubelet/device-plugins from device-plugin (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-r2vhn (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

device-plugin:

Type: HostPath (bare host directory volume)

Path: /var/lib/kubelet/device-plugins

HostPathType:

kube-api-access-r2vhn:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: CriticalAddonsOnly op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

nvidia.com/gpu:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 51m default-scheduler Successfully assigned kube-system/nvidia-device-plugin-daemonset-n7fwf to ix-truenas

Normal SandboxChanged 9m51s (x13 over 34m) kubelet Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 3m40s (x23 over 49m) kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to create a sandbox for pod "nvidia-device-plugin-daemonset-n7fwf": operation timeout: context deadline exceeded

Name: openebs-zfs-controller-0

Namespace: kube-system

Priority: 900000000

Priority Class Name: openebs-zfs-csi-controller-critical

Node: ix-truenas/192.168.1.49

Start Time: Tue, 21 Jun 2022 17:51:36 -0700

Labels: app=openebs-zfs-controller

controller-revision-hash=openebs-zfs-controller-698698d48b

openebs.io/component-name=openebs-zfs-controller

openebs.io/version=ci

role=openebs-zfs

statefulset.kubernetes.io/pod-name=openebs-zfs-controller-0

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: StatefulSet/openebs-zfs-controller

Containers:

csi-resizer:

Container ID:

Image: k8s.gcr.io/sig-storage/csi-resizer:v1.2.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--v=5

--csi-address=$(ADDRESS)

--leader-election

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment:

ADDRESS: /var/lib/csi/sockets/pluginproxy/csi.sock

Mounts:

/var/lib/csi/sockets/pluginproxy/ from socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-jwxj2 (ro)

csi-snapshotter:

Container ID:

Image: k8s.gcr.io/sig-storage/csi-snapshotter:v4.0.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--csi-address=$(ADDRESS)

--leader-election

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment:

ADDRESS: /var/lib/csi/sockets/pluginproxy/csi.sock

Mounts:

/var/lib/csi/sockets/pluginproxy/ from socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-jwxj2 (ro)

snapshot-controller:

Container ID:

Image: k8s.gcr.io/sig-storage/snapshot-controller:v4.0.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--v=5

--leader-election=true

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-jwxj2 (ro)

csi-provisioner:

Container ID:

Image: k8s.gcr.io/sig-storage/csi-provisioner:v3.0.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--csi-address=$(ADDRESS)

--v=5

--feature-gates=Topology=true

--strict-topology

--leader-election

--extra-create-metadata=true

--enable-capacity=true

--default-fstype=ext4

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment:

ADDRESS: /var/lib/csi/sockets/pluginproxy/csi.sock

NAMESPACE: kube-system (v1:metadata.namespace)

POD_NAME: openebs-zfs-controller-0 (v1:metadata.name)

Mounts:

/var/lib/csi/sockets/pluginproxy/ from socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-jwxj2 (ro)

openebs-zfs-plugin:

Container ID:

Image: openebs/zfs-driver:2.0.0

Image ID:

Port: <none>

Host Port: <none>

Args:

--endpoint=$(OPENEBS_CSI_ENDPOINT)

--plugin=$(OPENEBS_CONTROLLER_DRIVER)

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment:

OPENEBS_CONTROLLER_DRIVER: controller

OPENEBS_CSI_ENDPOINT: unix:///var/lib/csi/sockets/pluginproxy/csi.sock

OPENEBS_NAMESPACE: openebs

OPENEBS_IO_INSTALLER_TYPE: zfs-operator

OPENEBS_IO_ENABLE_ANALYTICS: true

Mounts:

/var/lib/csi/sockets/pluginproxy/ from socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-jwxj2 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

socket-dir:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

kube-api-access-jwxj2:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 51m default-scheduler Successfully assigned kube-system/openebs-zfs-controller-0 to ix-truenas

Warning FailedSync 38m (x3 over 38m) kubelet error determining status: rpc error: code = Unknown desc = Error: No such container: 2c97475ed30a8d3dc3c987f44517dad4720751c3a6366dc3869cbc4216141ef5

Warning FailedSync 23m (x3 over 23m) kubelet error determining status: rpc error: code = Unknown desc = Error: No such container: a81e009b4f084f12806bec335c332c6c2b168fd461909906875183083448cb12

Normal SandboxChanged 19m (x10 over 37m) kubelet Pod sandbox changed, it will be killed and re-created.

Warning FailedCreatePodSandBox 4m47s (x22 over 49m) kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to create a sandbox for pod "openebs-zfs-controller-0": operation timeout: context deadline exceeded

root@server[~]#
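Since the output above is long, here is a minimal sketch for narrowing it down to the warning events per pod. It assumes the usual `kubectl describe` layout, where each pod section starts with an unindented `Name:` line and event rows begin with `Warning`. The function name `warning_events` is hypothetical and not part of k3s or kubectl.

```shell
# Hypothetical helper: read `kubectl describe pods` text on stdin and print
# each Warning event prefixed with the name of the pod it belongs to.
warning_events() {
  awk '
    # An unindented "Name:" line opens a new pod section; remember the pod.
    /^Name:/ { pod = $2 }
    # Event rows are indented; strip the indent and print Warning rows only.
    /^[[:space:]]*Warning[[:space:]]/ {
      sub(/^[[:space:]]+/, "")
      print pod ": " $0
    }
  '
}

# Usage (same command as in the post, piped through the helper):
#   k3s kubectl describe pods -n kube-system | warning_events
```

Applied to the output above, this would leave mainly the repeated `FailedCreatePodSandBox` timeouts, the `FailedSync` errors, and the early `FailedScheduling` warnings for coredns.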

The resilvering process is also taking forever, and I assume that's because I've restarted the machine several times, which has done more harm than good.

I also seem to have created over 9000 snapshots in the past day and a half alone. Is that because Kubernetes keeps trying to create pods?

I've opened a ticket on Jira, but I'm still waiting for a response and I'm really not sure what to do. Should I leave the server running while it creates snapshots nonstop and bogs down the system? Is there any way to delete these en masse?
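On the bulk-deletion question, one cautious approach is to generate the commands first and review them before running anything. The sketch below is only that: it prints `zfs destroy -n -v` (dry-run, verbose) commands and destroys nothing. The helper name `print_destroy_commands` and the dataset `tank/ix-applications` are assumptions for illustration; the actual pool and dataset names would need to be substituted.

```shell
# Hypothetical helper: read snapshot names on stdin (one per line) and print a
# dry-run `zfs destroy` command for every snapshot under the given dataset
# prefix. Nothing is executed; the output is meant to be reviewed by hand.
print_destroy_commands() {
  prefix="$1"
  while IFS= read -r snap; do
    case "$snap" in
      # Keep only names of the form <prefix>...@<snapshot>.
      "$prefix"*@*) printf 'zfs destroy -n -v %s\n' "$snap" ;;
    esac
  done
}

# Usage (assumed dataset name; adjust to the real pool):
#   zfs list -H -t snapshot -o name -r tank/ix-applications \
#     | print_destroy_commands tank/ix-applications
```

Because `-n` makes `zfs destroy` a dry run, even executing the printed commands would only report what would be removed; dropping `-n` is a separate, deliberate step.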

My current specs are:

Code:

Intel i7 5820k

Gigabyte X99 UD4 (Revision 1)

102gb of DDR4 RAM

Corsair ax850 Gold

Nvidia EVGA 1070

One (formerly two) shucked WD easystore (wd whites) - 14tb

One WD Purple 14tb drive

Sorry for posting this on the forum as well as on Jira; it's stressing me out, as I haven't seen anyone else run into similar issues.

Please let me know if you have any questions about my issue!

Thanks for the help :)