tngri

Dabbler · Joined Jun 7, 2017 · Messages: 39

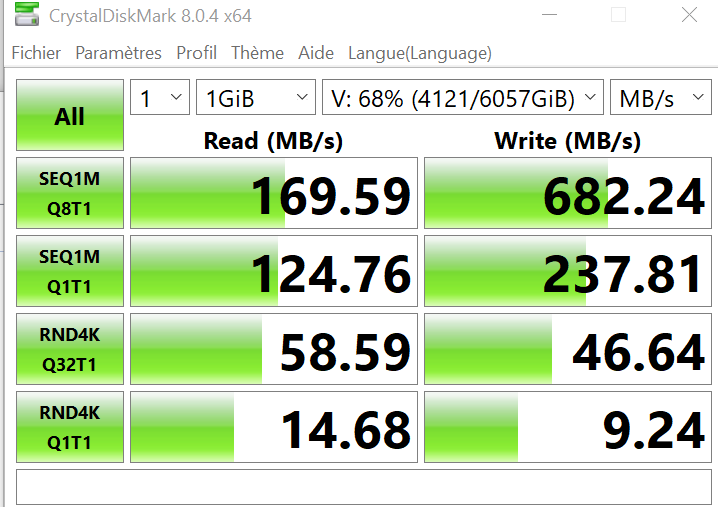

I'm doing some tests with my 2x Chelsio 320 cards connected with a short SFP+ DAC cable (no switch), to validate a usage scenario (consolidating working files).

TrueNAS 12.0-U5 <==> Windows 10 Pro, using an SMB share

My pool looks like this:

Code:

      pool: tank
     state: ONLINE
      scan: scrub repaired 0B in 05:21:56 with 0 errors on Sun Mar 8 05:21:57 2020
    config:

    NAME                                            STATE     READ WRITE CKSUM
    tank                                            ONLINE       0     0     0
      mirror-0                                      ONLINE       0     0     0
        gptid/cdc1a35e-a93a-11e9-95a1-d05099c188d1  ONLINE       0     0     0
        gptid/cf82bc53-a93a-11e9-95a1-d05099c188d1  ONLINE       0     0     0
      mirror-1                                      ONLINE       0     0     0
        gptid/dd0fbcf3-a93a-11e9-95a1-d05099c188d1  ONLINE       0     0     0
        gptid/df012241-a93a-11e9-95a1-d05099c188d1  ONLINE       0     0     0
      mirror-2                                      ONLINE       0     0     0
        gptid/ec9e4ce9-a93a-11e9-95a1-d05099c188d1  ONLINE       0     0     0
        gptid/1996590b-42c8-11ea-87b4-d05099d3036a  ONLINE       0     0     0

    errors: No known data errors

I don't understand why I only get this read performance on sequential reads.

What could be wrong? I have also never understood how to properly benchmark the pool locally.

Any advice would be appreciated :)
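
On the local benchmarking part, here is a minimal sketch of the kind of test I have in mind: time a front-to-back read of one file and report MiB/s. The function name `sequential_read` and the 1 MiB block size are my own placeholders, not anything from TrueNAS. I assume the result only means something if the test file is larger than RAM (otherwise the reads may be served from the ZFS ARC rather than the disks) and contains incompressible data.

```python
import os
import tempfile
import time


def sequential_read(path, block_size=1024 * 1024):
    """Read `path` from start to end in fixed-size blocks.

    Returns (bytes_read, mib_per_second). `block_size` is an
    illustrative default, not a tuned value.
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer; the OS and ZFS ARC can still cache.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against zero
    return total, total / (1024 * 1024) / elapsed


if __name__ == "__main__":
    # Tiny self-check on a temporary file of random (incompressible) bytes.
    # A real run would point at a large existing file on the pool instead.
    fd, demo_path = tempfile.mkstemp()
    try:
        os.write(fd, os.urandom(4 * 1024 * 1024))
        os.close(fd)
        size, rate = sequential_read(demo_path)
        print(f"read {size} bytes at {rate:.1f} MiB/s")
    finally:
        os.remove(demo_path)
```

Comparing a number like this, taken on the server itself, with what the Windows client sees over SMB should at least show whether the pool or the network path is the limit.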