Hi Forums,

Here is my hardware for FreeNAS:

2X Supermicro 4U Chassis ( 24Bay and external 45 Bay )

MB: Supermicro X10DRH-C/i

CPU: 2X Xeon 2.4 GHz 2460 v3

MEM: 256GB

RAID: LSI 3108 MegaRAID 6.22.03.0 - FW 24.7.0.-0026

Drives:

1x: 32GB Sandisk USB 3.0 ( boot drive ) - hd0

4X: KINGSTON SUV400S 400GB ea (single L2ARC cache device)

4X: INTEL SSDSC2CT24 240GB ea (single ZIL device)

16X: WDC WD2003FZEX-0 2TB ea

24X: WDC WD2003FZEX-0 2TB ea - Connected via external SAS onto LSI RAID

20x vdevs consisting of ( 2 x 2TB devices )

2 X RJ-45 10G

4 X 10G SFP+ - Intel X710 chipset ( Installed Oct 2016 )

Here is my hardware for my ESXi Hosts:

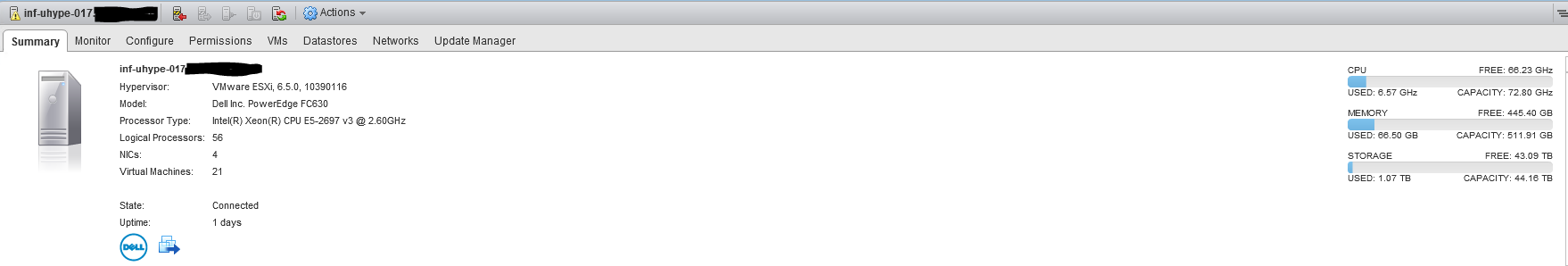

4X Dell FC630 - 2 ea E5-2697v3 - 512GB RAM - 4X Intel x710 NICs ( passthrough IOM )

FreeNAS and the ESXi hosts are connected through a Dell S4048-ON 10Gb switch running DNOS 9.14.1, using official Intel/Dell 10GBASE-SR fiber modules and good-quality 5m LC/LC fiber, all in the same rack.

I am looking for help troubleshooting what looks like one-way rate limiting on my FreeNAS storage. This was NEVER an issue until very recently ( 1 Dec 2018 ), and I have been taking drastic troubleshooting steps to resolve it. I am at my wits' end and am asking the community at large for assistance.

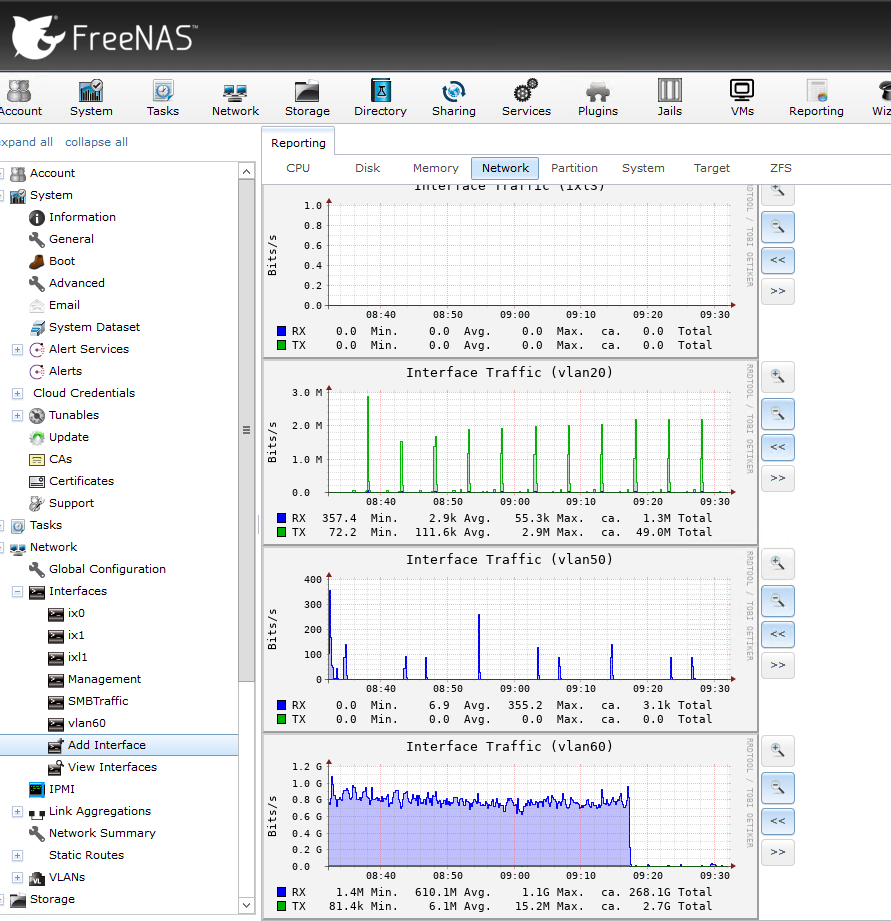

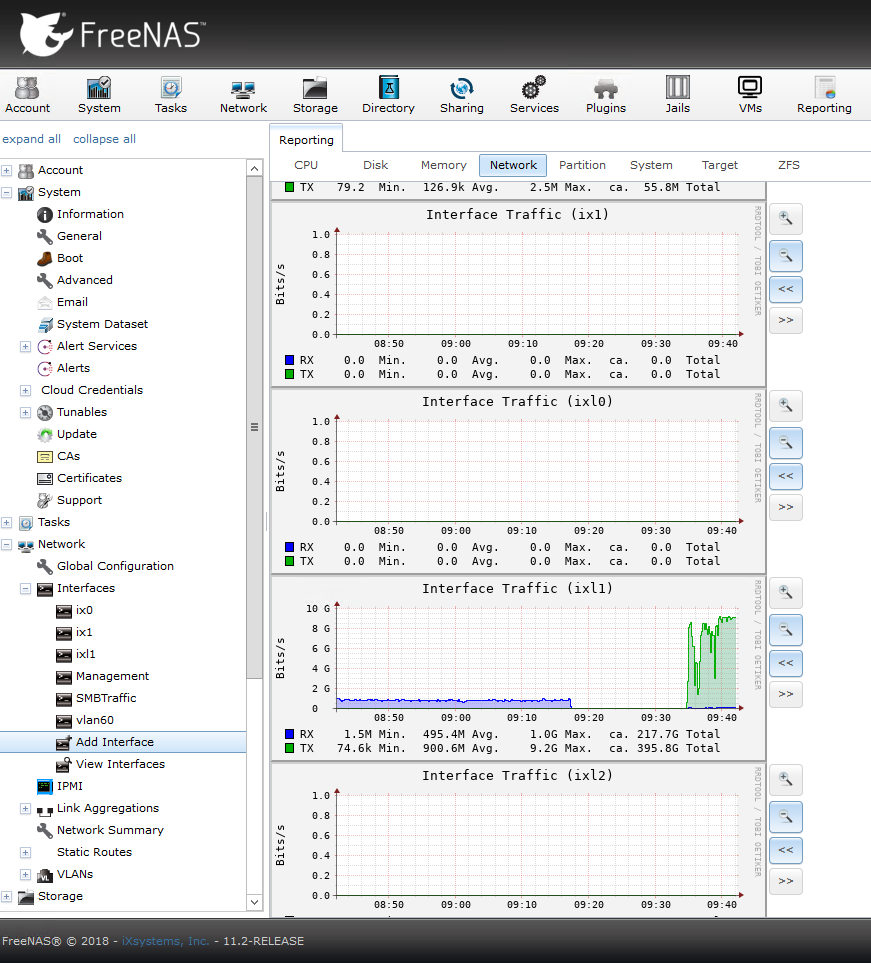

Seeing poor performance on my 10Gb network ESXi -> FreeNAS ( currently running at under 1 Gbps )

Seeing fair performance on my 10Gb network FreeNAS -> ESXi ( varies between 10 Gbps and 6 Gbps, dropping in hard steps )

Previously, I was able to completely saturate a 10Gb link between these hosts and the storage in both directions ( copying from All-Flash VSAN -> FreeNAS and back ).

What I am trying to accomplish:

I am trying to regain the same performance that I had previously as it's required for the environment to operate effectively.

Steps I have taken thus far:

Removed the 4x 10Gb lagg0 on FreeNAS to isolate potential bonding issues ( and to check SFP+ health )

Created a single tagged interface on FreeNAS to make it easier to investigate and run tcpdumps in FreeBSD.

Removed jumbo frames from the ESXi hosts and FreeNAS interfaces ( all MTU sizes except on the switch are now set to the default 1500 bytes )
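To separate the network path from the pool itself, a memory-to-memory TCP test in each direction seems like a sensible next step. Below is a minimal sketch of that idea in Python, assuming Python 3 is available on both ends. The function names (`run_sink`, `send_for`, `gbps`) are my own placeholders, not part of FreeNAS or ESXi, and this is only a rough stand-in for a dedicated tool such as iperf.

```python
import socket
import time

CHUNK = 1 << 20  # 1 MiB per send/recv call (arbitrary choice for this sketch)


def run_sink(listen_sock, result):
    """Accept one connection on an already-listening socket, discard all
    received data, and record the byte count and elapsed time in `result`."""
    conn, _ = listen_sock.accept()
    total = 0
    start = time.monotonic()
    with conn:
        while True:
            data = conn.recv(CHUNK)
            if not data:  # sender closed the connection
                break
            total += len(data)
    result["bytes"] = total
    result["seconds"] = time.monotonic() - start


def send_for(host, port, seconds):
    """Send zero-filled buffers to host:port for roughly `seconds`.
    Returns the number of bytes sent. No disk I/O is involved."""
    payload = bytes(CHUNK)
    sent = 0
    deadline = time.monotonic() + seconds
    with socket.create_connection((host, port)) as s:
        while time.monotonic() < deadline:
            s.sendall(payload)
            sent += len(payload)
    return sent


def gbps(nbytes, seconds):
    """Convert a byte count over a duration into gigabits per second."""
    return nbytes * 8 / seconds / 1e9
```

The idea would be to run `run_sink` on one side with a listening socket, call `send_for` from the other side, then swap roles to test the opposite direction. If the ESXi -> FreeNAS direction is still slow with no disks involved, that would point at the NIC, driver, or switch path rather than the pool. A single Python stream may not fill a 10Gb link on its own, so the comparison between the two directions matters more than the absolute numbers.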

Once I vacate all of my important files to the All-Flash VSAN, I am prepared to do the worst to the FreeNAS pool in the spirit of solving the problem.

Any ideas or thoughts would be most appreciated, as I am a one-man show with no assistance or sounding board to tell me if my ideas are terrible.
