Shigure

Hey guys, I'm new here, but I've finally joined the TrueNAS family after a long delay for several reasons.

Here's my hardware list:

Ryzen 7 Pro 5750G

ASRock B550 Phantom Gaming itx/ax

Micron MTA18ADF2G72AZ3G2R1 x2(16G x2 3200 unbuffered ECC)

LSI 9208-8i

Mellanox MCX311A-XCAT ConnectX-3 EN (connected with an M.2 to PCIe x4 riser)

Fractal Design Node 304

EVGA 750W SFX full modular PSU

TrueNAS will be installed on a 256G SSD connected via USB

For the drives, I plan to use a spare 2TB M.2 NVMe SSD as L2ARC, 4x 1TB SATA SSDs as "RAID10", and 3x EXOS X18 16TB as RAIDZ1.

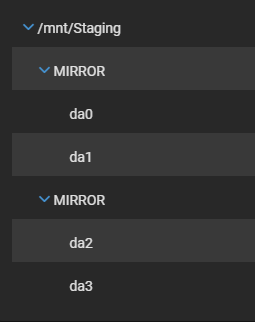

I'm not sure what the best practice is for my case, but I guess I shouldn't put SSDs and HDDs in the same zpool? The plan is to create two separate zpools, one for the SSDs and one for the HDDs. For the SSD zpool, the layout would be two mirror vdevs with 2 SSDs in each vdev, which should be the ZFS equivalent of RAID10? (A screenshot is attached.)
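To sanity-check the SSD layout, here is a rough back-of-the-envelope sketch of the usable space I expect from two 2-way mirrors. The function name is my own, and it ignores ZFS metadata overhead, reserved space, and the TB/TiB difference, so treat the number as an upper bound rather than what TrueNAS will actually report.

```python
def mirror_pool_usable_tb(vdevs):
    """Approximate usable capacity (TB) of a pool of striped mirror vdevs.

    `vdevs` is a list of mirrors, each given as a list of drive sizes in TB.
    Every drive in a mirror holds a full copy of that vdev's data, so a
    mirror contributes only the size of its smallest member.
    """
    return sum(min(vdev) for vdev in vdevs)


# Planned SSD pool: two mirror vdevs, each with two 1TB SATA SSDs.
ssd_pool = [[1, 1], [1, 1]]
ssd_usable_tb = mirror_pool_usable_tb(ssd_pool)
print(f"SSD pool usable (approx.): {ssd_usable_tb} TB")
```

So the four 1TB SSDs should give me roughly 2TB usable, the same as a classic RAID10 of four drives.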

But what about wear across the 4 SSDs: will it spread evenly among the drives/vdevs? I ask because 2 of the 4 drives are already used, with about 1TB written, and I plan to pair one used drive with one brand-new drive in each mirror vdev.
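Here is how I currently picture the wear, as a very simplified model. It assumes that both drives in a mirror receive the same writes, that ZFS splits new writes evenly across the two vdevs (as far as I understand, the real allocator weighs vdevs by free space, so this is only an approximation), and that each used drive has about 1TB written so far. The function name and the 10TB figure are made up for illustration, and write amplification inside the SSDs is ignored.

```python
def estimate_drive_writes_tb(pool_writes_tb, vdevs):
    """Estimate lifetime writes per drive (TB) after writing to the pool.

    `vdevs` is a list of mirrors; each mirror is a list with the TB already
    written to each of its drives. Assumptions (not verified against ZFS):
    writes are split evenly across vdevs, and every drive in a mirror
    receives the full share written to its vdev.
    """
    share_per_vdev = pool_writes_tb / len(vdevs)
    return [[prior + share_per_vdev for prior in vdev] for vdev in vdevs]


# One used drive (~1TB written, assumed) plus one new drive in each mirror.
planned_vdevs = [[1.0, 0.0], [1.0, 0.0]]

# Hypothetical example: 10TB written to the pool over time.
estimate = estimate_drive_writes_tb(10.0, planned_vdevs)
print(estimate)
```

If this model is right, the used drives would simply stay about 1TB ahead of the new ones, which is why I'm asking whether mixing them in each mirror makes sense.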

The HDD zpool should be simpler: I only have 3 drives for now, so one RAIDZ1 vdev is enough, and if necessary I can add another 3 drives as a second vdev to expand the zpool.
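The same kind of rough estimate for the HDD pool, before and after adding a second RAIDZ1 vdev. Again, the helper is my own sketch, and it ignores RAIDZ padding, metadata, and the TB/TiB difference, so the real usable space will be lower.

```python
def raidz_usable_tb(drive_tb, drives, parity=1):
    """Approximate usable capacity (TB) of a single RAIDZ vdev.

    A RAIDZ vdev spends roughly `parity` drives' worth of space on parity,
    leaving (drives - parity) drives' worth for data.
    """
    if drives <= parity:
        raise ValueError("a RAIDZ vdev needs more drives than parity level")
    return (drives - parity) * drive_tb


# Current plan: one RAIDZ1 vdev of 3x 16TB drives.
hdd_one_vdev_tb = raidz_usable_tb(16, 3)

# Possible expansion: a second, identical RAIDZ1 vdev striped into the pool.
hdd_two_vdevs_tb = hdd_one_vdev_tb + raidz_usable_tb(16, 3)

print(f"1 vdev: ~{hdd_one_vdev_tb} TB, 2 vdevs: ~{hdd_two_vdevs_tb} TB")
```

That would be about 32TB usable now and about 64TB after adding the second 3-drive vdev.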

Is my understanding correct? Is there anything I can improve?
