Flashyy (Cadet, joined Oct 7, 2022, 3 messages)

I've been having a few problems with my system recently. Upon checking the dashboard, I saw critical alerts for two of my disks and was immediately worried, so I checked the SMART status and the syslog to see if anything was wrong with them.

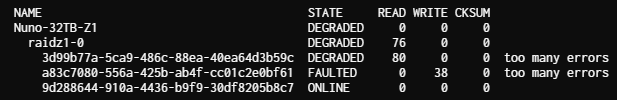

Here's the zpool status when I saw the errors:

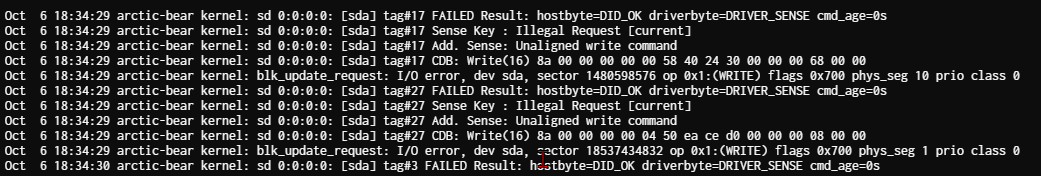

Syslog:

Upon seeing these errors, I powered off the system and replaced the SATA cables connecting those two drives, as I suspected a cable/controller issue.

This is the zpool result after replacing the cables:

      pool: Nuno-32TB-Z1
     state: DEGRADED
    status: One or more devices has experienced an unrecoverable error. An
            attempt was made to correct the error. Applications are unaffected.
    action: Determine if the device needs to be replaced, and clear the errors
            using 'zpool clear' or replace the device with 'zpool replace'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
      scan: resilvered 7.98G in 00:01:05 with 0 errors on Thu Oct 6 19:03:54 2022
    config:

            NAME                                      STATE     READ WRITE CKSUM
            Nuno-32TB-Z1                              DEGRADED     0     0     0
              raidz1-0                                DEGRADED     0     0     0
                3d99b77a-5ca9-486c-88ea-40ea64d3b59c  DEGRADED     0     0     0  too many errors
                a83c7080-556a-425b-ab4f-cc01c2e0bf61  ONLINE       0     0     0
                9d288644-910a-4436-b9f9-30df8205b8c7  ONLINE       0     0     0
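
For anyone who wants to keep an eye on this from a script, here is a minimal sketch that scans the `config:` rows of a `zpool status` dump and lists every entry that is not `ONLINE` or has a nonzero counter. The sample text is the table above; the function names `parse_config` and `unhealthy` are made up for illustration. It assumes plain integer counters, so abbreviated values such as `1.2K` are skipped (as far as I know, `zpool status -p` prints exact numbers).

```python
# Sample rows copied from the zpool status output in this post.
ZPOOL_CONFIG = """\
NAME STATE READ WRITE CKSUM
Nuno-32TB-Z1 DEGRADED 0 0 0
raidz1-0 DEGRADED 0 0 0
3d99b77a-5ca9-486c-88ea-40ea64d3b59c DEGRADED 0 0 0 too many errors
a83c7080-556a-425b-ab4f-cc01c2e0bf61 ONLINE 0 0 0
9d288644-910a-4436-b9f9-30df8205b8c7 ONLINE 0 0 0
"""


def parse_config(text):
    """Return one dict per config row (hypothetical helper, not a ZFS API)."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Skip the header and anything that is not NAME STATE READ WRITE CKSUM.
        if len(parts) < 5 or parts[0] == "NAME":
            continue
        name, state, read, write, cksum = parts[:5]
        # Assumption: counters are plain integers; abbreviated ones are ignored.
        if not (read.isdigit() and write.isdigit() and cksum.isdigit()):
            continue
        rows.append({
            "name": name,
            "state": state,
            "read": int(read),
            "write": int(write),
            "cksum": int(cksum),
            "note": " ".join(parts[5:]),  # e.g. "too many errors"
        })
    return rows


def unhealthy(rows):
    """Rows that are not ONLINE or that carry any nonzero error counter."""
    return [
        r for r in rows
        if r["state"] != "ONLINE" or r["read"] or r["write"] or r["cksum"]
    ]


if __name__ == "__main__":
    for row in unhealthy(parse_config(ZPOOL_CONFIG)):
        print(row["name"], row["state"], row["note"])
```

On the table above this flags the pool, the raidz1 vdev and the one DEGRADED disk, even though all of its counters read 0.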

So the errors went away and everything is working as expected. However, one of the drives still reports as DEGRADED and says it has too many errors.

I'd like to know what is causing this, or get some lead I can follow to troubleshoot the problem, and whether I should be worried about it.

I could of course just clear the errors, but I'd like to know more about what's wrong before I do so.

I still suspect the controller connecting the disks is the problem here. The disks are connected directly to the motherboard, not through an HBA or anything like that.

I'm in the process of purchasing an HBA since I've had a few checksum errors in the past. Would that likely solve this problem too?

This is the SMART status of all the drives:

    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000f   073   064   044    Pre-fail  Always   -           20262960
      3 Spin_Up_Time            0x0003   091   090   000    Pre-fail  Always   -           0
      4 Start_Stop_Count        0x0032   100   100   020    Old_age   Always   -           34
      5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always   -           0
      7 Seek_Error_Rate         0x000f   077   060   045    Pre-fail  Always   -           45393938
      9 Power_On_Hours          0x0032   099   099   000    Old_age   Always   -           1607
     10 Spin_Retry_Count        0x0013   100   100   097    Pre-fail  Always   -           0
     12 Power_Cycle_Count       0x0032   100   100   020    Old_age   Always   -           34
     18 Head_Health             0x000b   100   100   050    Pre-fail  Always   -           0
    187 Reported_Uncorrect      0x0032   100   100   000    Old_age   Always   -           0
    188 Command_Timeout         0x0032   100   099   000    Old_age   Always   -           1
    190 Airflow_Temperature_Cel 0x0022   063   049   040    Old_age   Always   -           37 (Min/Max 30/38)
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always   -           5
    193 Load_Cycle_Count        0x0032   095   095   000    Old_age   Always   -           11900
    194 Temperature_Celsius     0x0022   037   051   000    Old_age   Always   -           37 (0 26 0 0 0)
    197 Current_Pending_Sector  0x0012   100   100   000    Old_age   Always   -           0
    198 Offline_Uncorrectable   0x0010   100   100   000    Old_age   Offline  -           0
    199 UDMA_CRC_Error_Count    0x003e   200   200   000    Old_age   Always   -           15
    200 Pressure_Limit          0x0023   100   100   001    Pre-fail  Always   -           0
    240 Head_Flying_Hours       0x0000   100   253   000    Old_age   Offline  -           693h+11m+25.744s
    241 Total_LBAs_Written      0x0000   100   253   000    Old_age   Offline  -           9787228124
    242 Total_LBAs_Read         0x0000   100   253   000    Old_age   Offline  -           19571805922

    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000f   076   064   044    Pre-fail  Always   -           43734347
      3 Spin_Up_Time            0x0003   090   089   000    Pre-fail  Always   -           0
      4 Start_Stop_Count        0x0032   100   100   020    Old_age   Always   -           36
      5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always   -           0
      7 Seek_Error_Rate         0x000f   077   060   045    Pre-fail  Always   -           45762454
      9 Power_On_Hours          0x0032   099   099   000    Old_age   Always   -           1607
     10 Spin_Retry_Count        0x0013   100   100   097    Pre-fail  Always   -           0
     12 Power_Cycle_Count       0x0032   100   100   020    Old_age   Always   -           34
     18 Head_Health             0x000b   100   100   050    Pre-fail  Always   -           0
    187 Reported_Uncorrect      0x0032   100   100   000    Old_age   Always   -           0
    188 Command_Timeout         0x0032   100   093   000    Old_age   Always   -           12885360648
    190 Airflow_Temperature_Cel 0x0022   060   043   040    Old_age   Always   -           40 (Min/Max 33/41)
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always   -           6
    193 Load_Cycle_Count        0x0032   093   093   000    Old_age   Always   -           15710
    194 Temperature_Celsius     0x0022   040   057   000    Old_age   Always   -           40 (0 25 0 0 0)
    197 Current_Pending_Sector  0x0012   100   100   000    Old_age   Always   -           0
    198 Offline_Uncorrectable   0x0010   100   100   000    Old_age   Offline  -           0
    199 UDMA_CRC_Error_Count    0x003e   200   199   000    Old_age   Always   -           2
    200 Pressure_Limit          0x0023   100   100   001    Pre-fail  Always   -           0
    240 Head_Flying_Hours       0x0000   100   253   000    Old_age   Offline  -           415h+02m+56.783s
    241 Total_LBAs_Written      0x0000   100   253   000    Old_age   Offline  -           9783103219
    242 Total_LBAs_Read         0x0000   100   253   000    Old_age   Offline  -           19606959916

    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000f   083   064   044    Pre-fail  Always   -           219151662
      3 Spin_Up_Time            0x0003   091   090   000    Pre-fail  Always   -           0
      4 Start_Stop_Count        0x0032   100   100   020    Old_age   Always   -           36
      5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always   -           0
      7 Seek_Error_Rate         0x000f   076   060   045    Pre-fail  Always   -           44167273
      9 Power_On_Hours          0x0032   099   099   000    Old_age   Always   -           1663
     10 Spin_Retry_Count        0x0013   100   100   097    Pre-fail  Always   -           0
     12 Power_Cycle_Count       0x0032   100   100   020    Old_age   Always   -           36
     18 Head_Health             0x000b   100   100   050    Pre-fail  Always   -           0
    187 Reported_Uncorrect      0x0032   100   100   000    Old_age   Always   -           0
    188 Command_Timeout         0x0032   100   100   000    Old_age   Always   -           0
    190 Airflow_Temperature_Cel 0x0022   062   049   040    Old_age   Always   -           38 (Min/Max 32/40)
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always   -           5
    193 Load_Cycle_Count        0x0032   094   094   000    Old_age   Always   -           12423
    194 Temperature_Celsius     0x0022   038   051   000    Old_age   Always   -           38 (0 24 0 0 0)
    197 Current_Pending_Sector  0x0012   100   100   000    Old_age   Always   -           0
    198 Offline_Uncorrectable   0x0010   100   100   000    Old_age   Offline  -           0
    199 UDMA_CRC_Error_Count    0x003e   200   199   000    Old_age   Always   -           42
    200 Pressure_Limit          0x0023   100   100   001    Pre-fail  Always   -           0
    240 Head_Flying_Hours       0x0000   100   253   000    Old_age   Offline  -           695h+16m+28.075s
    241 Total_LBAs_Written      0x0000   100   253   000    Old_age   Offline  -           10240725195
    242 Total_LBAs_Read         0x0000   100   253   000    Old_age   Offline  -           13689336576

All three disks show a nonzero raw value for ID 199, UDMA_CRC_Error_Count (15, 2 and 42), which leads me to believe this is indeed a controller problem, since I only replaced two cables and all three disks have this counter incremented.
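
As far as I know, the raw value of attribute 199 is a cumulative counter that does not go back down after a cable swap, so what matters is whether it keeps climbing. Below is a minimal sketch for comparing two readings. It assumes the ten-column attribute rows shown above, with the attribute ID at the start of the line; `parse_smart_attributes` and `crc_error_count` are names made up for illustration, and the second reading is hypothetical.

```python
def parse_smart_attributes(text):
    """Map attribute ID -> (name, raw value string) for smartctl-style rows."""
    attrs = {}
    for line in text.splitlines():
        # Ten columns: ID, NAME, FLAG, VALUE, WORST, THRESH, TYPE, UPDATED,
        # WHEN_FAILED, RAW_VALUE (the raw value may itself contain spaces).
        parts = line.split(None, 9)
        if len(parts) < 10 or not parts[0].isdigit():
            continue  # header lines and anything that is not an attribute row
        attrs[int(parts[0])] = (parts[1], parts[9].strip())
    return attrs


def crc_error_count(text):
    """Raw UDMA_CRC_Error_Count (ID 199) as an integer."""
    _name, raw = parse_smart_attributes(text)[199]
    return int(raw.split()[0])


# Row copied from the first drive's table in this post.
BEFORE = "199 UDMA_CRC_Error_Count 0x003e 200 200 000 Old_age Always - 15"
# HYPOTHETICAL later reading, made up purely for illustration.
AFTER = "199 UDMA_CRC_Error_Count 0x003e 200 200 000 Old_age Always - 15"

if __name__ == "__main__":
    delta = crc_error_count(AFTER) - crc_error_count(BEFORE)
    if delta > 0:
        print(f"CRC errors still increasing (+{delta})")
    else:
        print("No new CRC errors between the two readings")
```

A counter that stays flat under load after the cable swap would suggest the old errors are historical; one that keeps increasing points at something still wrong on the link.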

I also noticed lines such as these from the syslog, which I found strange.

    Sep 14 23:26:18 arctic-bear smartd[4869]: Device: /dev/sdd [SAT], SMART Prefailure Attribute: 194 Temperature_Celsius changed from 63 to 71

This is strange because the disks have fans pointed directly at them and never exceed 45C in any operation, so a jump to those temperatures really is impossible in my case. Could this be a misreport due to poor communication with the drives?
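
One thing worth checking before reading those numbers as degrees: per the smartd.conf man page, smartd normally tracks changes in the normalized attribute value, not the raw value, unless raw tracking is requested with `-R`. Whether the normalized value of 194 equals the temperature depends on the drive. A minimal sketch for pulling such changes out of the syslog so they can be lined up against the raw readings follows; the regular expression is my own assumption based on the single line quoted above, and `parse_smartd_change` is a made-up name.

```python
import re

# Pattern inferred from the one log line quoted in this post (an assumption,
# not an official smartd log grammar).
SMARTD_CHANGE = re.compile(
    r"Device: (?P<device>\S+) .*?SMART (?P<kind>\w+) Attribute: "
    r"(?P<id>\d+) (?P<name>\S+) changed from (?P<old>\d+) to (?P<new>\d+)"
)


def parse_smartd_change(line):
    """Return the attribute change described by a smartd log line, or None."""
    match = SMARTD_CHANGE.search(line)
    if match is None:
        return None
    return {
        "device": match.group("device").rstrip(","),
        "kind": match.group("kind"),
        "id": int(match.group("id")),
        "name": match.group("name"),
        "old": int(match.group("old")),
        "new": int(match.group("new")),
    }


# Log line copied from this post.
LINE = (
    "Sep 14 23:26:18 arctic-bear smartd[4869]: Device: /dev/sdd [SAT], "
    "SMART Prefailure Attribute: 194 Temperature_Celsius changed from 63 to 71"
)

if __name__ == "__main__":
    change = parse_smartd_change(LINE)
    print(change["device"], change["id"], change["old"], "->", change["new"])
```

The extracted values are whatever smartd logged; the sketch makes no claim about whether they are degrees Celsius.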

Thanks!