DougATClark

Dabbler

- Joined

- Jan 15, 2018

- Messages

- 14

Hey all,

I have two FreeNAS boxes that I inherited from a predecessor; they are used as iSCSI storage for Veeam backups. Merging the backup files is slow, and Veeam support thinks it may be an I/O issue with the storage (FreeNAS). I'm looking for any suggestions or help on getting to the bottom of this. Apologies in advance if I mix up some of the terminology; I'm trying to keep it all straight.

After I upgraded from 9.x to 11.x I started getting alerts about running out of chains, so my only tunables are dev.mps.0.max_chains and hw.mps.max_chains, both set to 4096. That seems to have made the daily warnings go away.

Hardware:

Supermicro X9SCL-F motherboard

Intel E3-1230 V2 CPU

32 GB ECC RAM

LSI 9201-16i IT mode

16x WD30EFRX (WD Red) 3 TB drives

Intel 82599ES 10 GbE card

OS installed on a 32 GB Supermicro SATA DOM

Setup:

FreeNAS 11.1-U1

Single volume with a single dataset containing a single zvol

Two 8-disk RAIDZ2 vdevs

lz4 compression on the dataset, no dedup

9216 MTU on the 10 GbE cards (jumbo frames confirmed working end to end)
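For a sense of scale, here is a rough back-of-the-envelope capacity estimate for that layout. It is only a sketch: it assumes each RAIDZ2 group loses exactly two disks' worth of space to parity and ignores ZFS metadata, padding and free-space headroom, so the real usable figure will be lower. The function and variable names are made up for illustration.

```python
# Rough usable-capacity estimate for the pool layout described above.
# Assumption: parity costs exactly two disks per RAIDZ2 group; ZFS
# metadata, padding and reserved space are ignored.
DRIVE_TB = 3          # WD30EFRX, decimal terabytes
GROUPS = 2            # two RAIDZ2 groups in the pool
DISKS_PER_GROUP = 8
PARITY_PER_GROUP = 2  # RAIDZ2 = two parity disks per group


def raw_usable_tb(groups, disks, parity, drive_tb):
    """Data disks across all groups times the drive size."""
    return groups * (disks - parity) * drive_tb


usable_tb = raw_usable_tb(GROUPS, DISKS_PER_GROUP, PARITY_PER_GROUP, DRIVE_TB)
usable_tib = usable_tb * 10**12 / 2**40  # decimal TB -> binary TiB

print(f"{usable_tb} TB (~{usable_tib:.1f} TiB) before ZFS overhead")
```

That works out to 2 x (8 - 2) x 3 TB = 36 TB, or about 32.7 TiB, before any ZFS overhead.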

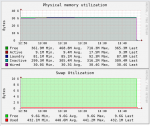

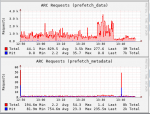

Reporting Charts:

There are a bunch of disk charts, but they don't really lend themselves to being screen captured and posted. I have a feeling they are going to be some of the important ones. Is there a better way to dump the disk stats into the post?

Thank you!
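One thing I could try, if it would help, is capturing the numbers as text instead of screenshots. Below is a minimal sketch of that idea, not something I have run on these boxes: it assumes Python 3 is available on the FreeNAS shell, uses `zpool iostat -v` and FreeBSD's `iostat -x` as the stat sources, and wraps the output in `[CODE]` tags for pasting. The helper names (`wrap_for_forum`, `run`, `collect`) are made up for illustration.

```python
import subprocess

# Commands to sample; intervals and counts are arbitrary choices.
COMMANDS = [
    ["zpool", "iostat", "-v", "5", "3"],        # per-disk pool I/O, 3 samples 5 s apart
    ["iostat", "-x", "-w", "5", "-c", "3"],     # extended per-device stats (FreeBSD syntax)
]


def wrap_for_forum(title, text):
    """Wrap command output in [CODE] tags so the columns stay aligned."""
    return f"{title}\n[CODE]\n{text.rstrip()}\n[/CODE]\n"


def run(cmd):
    """Run one command and return whatever it printed to stdout."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return result.stdout


def collect(commands=COMMANDS, runner=run):
    """Run each command and join the wrapped outputs into one paste-ready string."""
    return "\n".join(wrap_for_forum(" ".join(c), runner(c)) for c in commands)
```

Calling `print(collect())` from a shell on the box while a merge is running should produce something I can paste straight into a reply.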
