@kdragon75

So I have some more interesting data ... I decided to try setting up a couple of Windows backup proxy instances as VMs, so that I could go the Virtual Appliance (hotadd) route. I am now running another backup, and I'm seeing backup speeds that are MUCH faster than before. While still not amazing, it is night and day from where I was originally.

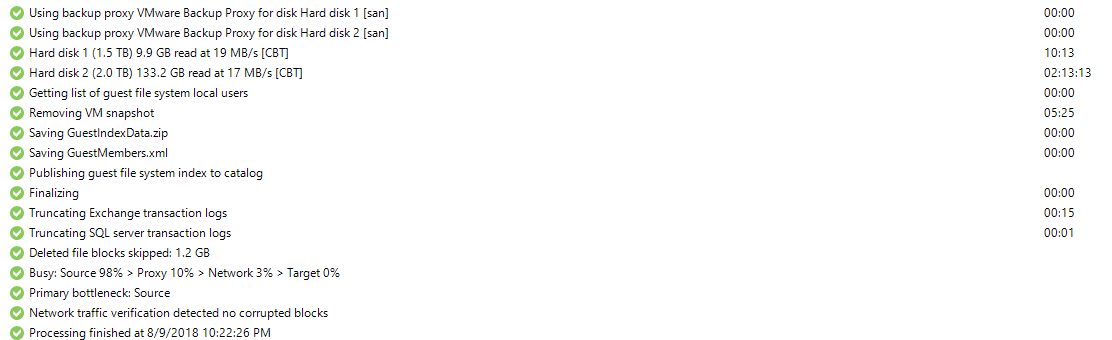

Here are my original results when running Direct SAN backups:

Overall Summary - 3Hr, 53Min - 19 MB/s

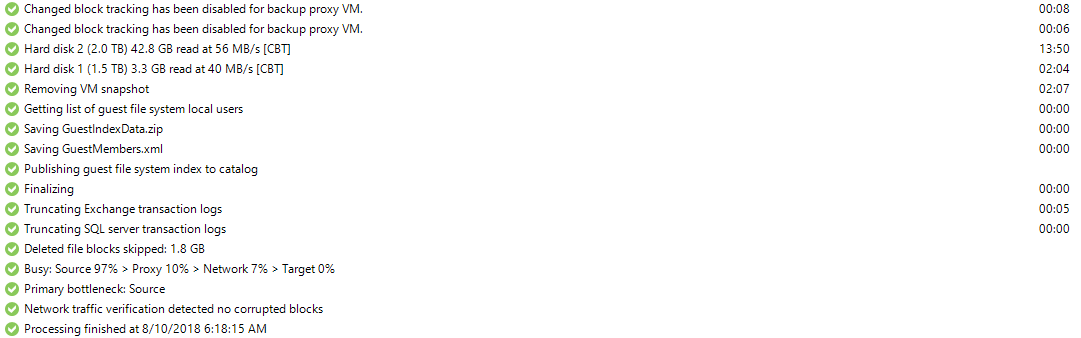

Now, with the switch to HOTADD backups:

Overall Summary - 1Hr, 10Min - 60 MB/s
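
To put the two summaries side by side, here is a quick back-of-the-envelope sketch. It only uses the durations and rates quoted above; the variable names are just for illustration, and it assumes both runs covered roughly the same data set.

```python
# Compare the two job summaries quoted above.
# Assumption: both runs processed a comparable amount of data.

def to_minutes(hours, mins):
    """Convert an 'H hr, M min' duration to total minutes."""
    return hours * 60 + mins

direct_san = {"minutes": to_minutes(3, 53), "mb_per_s": 19}
hotadd = {"minutes": to_minutes(1, 10), "mb_per_s": 60}

# How many times shorter the hotadd job ran
time_ratio = direct_san["minutes"] / hotadd["minutes"]
# How many times higher the reported processing rate was
rate_ratio = hotadd["mb_per_s"] / direct_san["mb_per_s"]

print(f"Job duration: {time_ratio:.1f}x shorter with hotadd")
print(f"Processing rate: {rate_ratio:.1f}x higher with hotadd")
```

Both ratios come out a little above 3x, so the shorter job time and the higher reported rate tell the same story.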

The fact that we are topping out at 60 MB/s might be due in part to these VMs having 1 Gb guest connectivity. They have two 1 Gb vmnics in the guest vSwitch, but any given guest is only going to see the throughput of a single 1 Gb vmnic due to how load balancing works. I actually have a project on deck to upgrade the entire network to 10 Gb this fall, so my hope is that we might see some increased performance from that upgrade as well.
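
As a rough sanity check on the link-speed theory, this sketch converts a nominal link speed into a best-case MB/s figure and compares it with the 60 MB/s observed. It assumes decimal units (1 Gb/s = 1,000,000,000 bits/s, 1 MB = 1,000,000 bytes) and ignores protocol overhead, so real-world ceilings would be somewhat lower; the function name is made up for this example.

```python
# Best-case throughput of a network link, ignoring protocol overhead.
# Assumption: decimal units (1 Gb/s = 1e9 bits/s, 1 MB = 1e6 bytes).

def link_ceiling_mb_per_s(gbit_per_s):
    """Return the theoretical maximum of a link in MB/s."""
    bits_per_s = gbit_per_s * 1_000_000_000
    bytes_per_s = bits_per_s / 8
    return bytes_per_s / 1_000_000

observed_mb_per_s = 60  # rate reported for the hotadd job

one_gb = link_ceiling_mb_per_s(1)    # single 1 Gb vmnic
ten_gb = link_ceiling_mb_per_s(10)   # after the planned upgrade

utilization = observed_mb_per_s / one_gb

print(f"1 Gb ceiling:  {one_gb:.0f} MB/s")
print(f"10 Gb ceiling: {ten_gb:.0f} MB/s")
print(f"Observed rate is {utilization:.0%} of a single 1 Gb link")
```

Under those assumptions the observed rate is about half of what a single 1 Gb link could carry in theory, which fits with the NIC being only part of the explanation.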

I'm still having a hard time understanding why Direct SAN is slower than Virtual Appliance mode, though. I know for a fact that in SAN mode there is "zero" routing taking place, as the network interfaces on the SAN side that are used for iSCSI are not routable. The fact that Veeam can talk to the SAN confirms we are reaching it through direct switching, with no firewalls/routers in between.
