NickF

Hi Everyone,

I've been experimenting with my home TrueNAS rig for the past several weeks, mostly out of curiosity as I begin planning deployments for business use cases in my professional life.

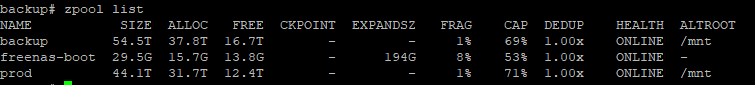

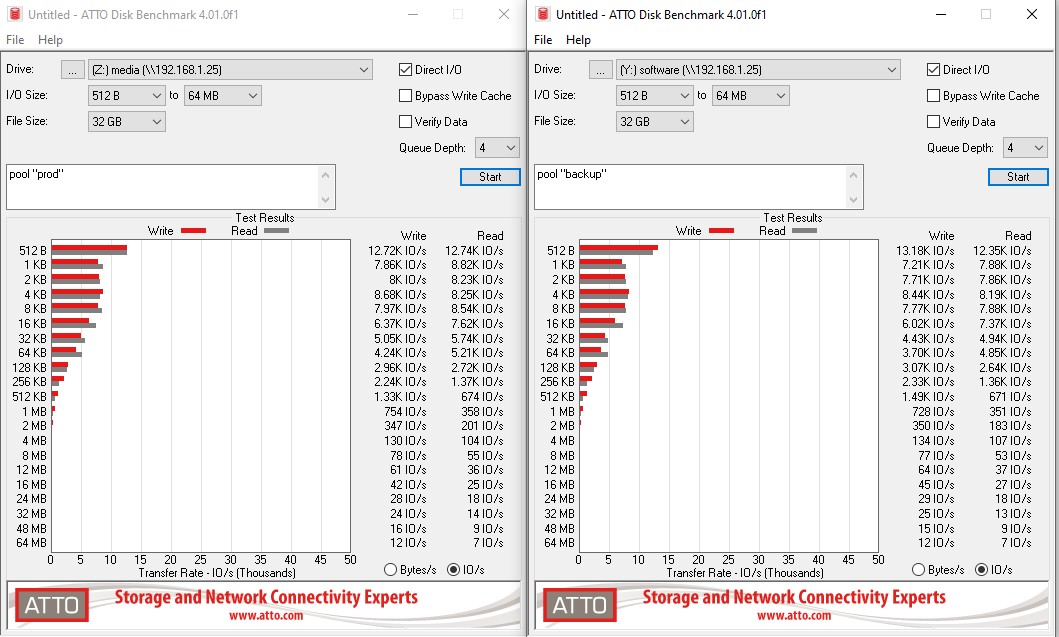

TL;DR: Am I doing something wrong? I am seeing little difference in performance between the old Supermicro server and my new Dell server, and little difference between my two pools.

I know I'm not using mirrors, and this is a storage-focused build rather than a performance-oriented one. However, I am not seeing any benefit from all of the additional RAM, the L2ARC, or the special vdev.

I have a Dell R720 running TrueNAS. It has:

Anyway, I've built this system to replace a Supermicro server with a Supermicro X10SLL-F, E3-1265L v3 and 32GB of ram. Prior to the upgrade, there was no flash in the system and it had significantly less memory. SMB shares appear to be no more, or less, performant than before. ISCSI performance is marginally faster (sync=off)

Additionally, the SMB shares on the "prod" pool (top) are no faster than the "backup" pool (bottom). This is coming from my 10-gigabit (Intel X540-T1) NIC:

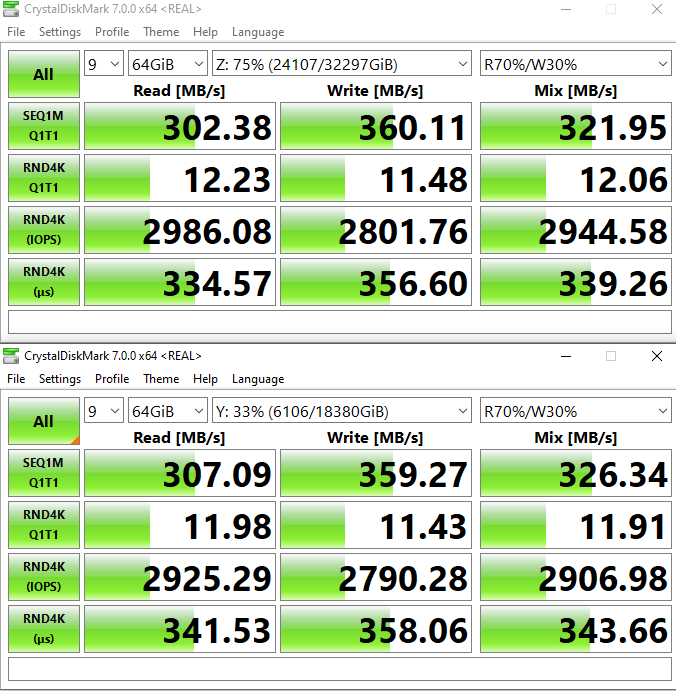

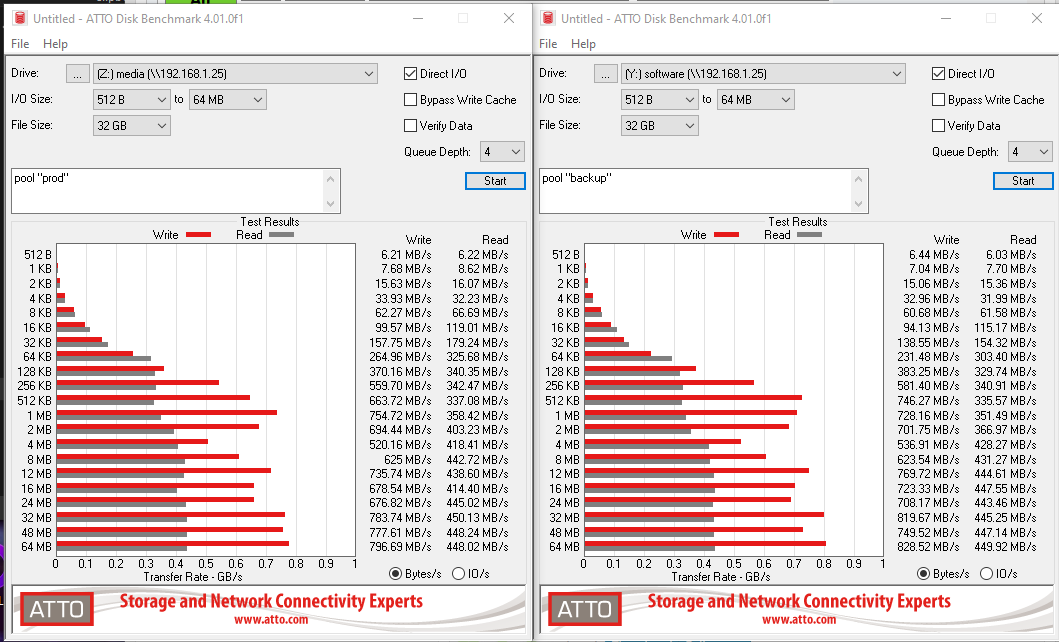

Atto performance is similar. The really is no discernable difference in performance between the two pools. I get that this is not apples to apples, but really, I was expecting more from the "prod" pool. In some cases it's slower than the "backup" pool, and faster in others.

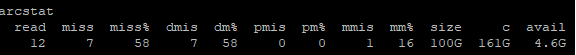

Additionally, L2ARC hit rate is abysmal. I cannot explain the gap at noon.

I've been experimenting with my home TrueNAS rig for the past several weeks, mostly out of curiosity as I begin planning deployments for business use cases in my professional life.

The TLDR, Am I doing something wrong? I am seeing little difference in performance between the old Supermicro server and my new Dell server. I am seeing little difference in performance between my two pools.

I know I'm not using mirrors, and this is not a performance-oriented build, it is a storage-focused one. However, I am not seeing any benefit from all of the additional RAM, L2ARC and SPECIAL devices??

I have a Dell R720 running TrueNAS. It has:

- 2x Intel Xeon E5-2620

- 196GB of ECC Reg DDR3 1333

- Dell Perc H710 flashed to IT mode

- LSI 9207-8e

- LSI 9205-8i

- Chelsio T520-TO-CR card (LACP LAG to a Brocade VDX 6740)

- 12x 4TB Western Digital Red CMR drives are in an HP D2600 Shelf connected to the LSI 9207-8e,

- Raid Z2, pool "prod"

- 2x Samsung SM 953 480 GB setup as a Special VDEV for 1MB

- 2x Samsung 850 EVO 500GB drives as L2ARC (connected to the LSI 9205)

- 6x 10TB Western Digital Easy Shucks

- Raid Z1, pool "backup"

- Runs on PERC H710 IT mode card

Anyway, I've built this system to replace a Supermicro server with a Supermicro X10SLL-F, E3-1265L v3 and 32GB of ram. Prior to the upgrade, there was no flash in the system and it had significantly less memory. SMB shares appear to be no more, or less, performant than before. ISCSI performance is marginally faster (sync=off)

Additionally, the SMB shares on the "prod" pool (top) are no faster than the "backup" pool (bottom). This is coming from my 10-gigabit (Intel X540-T1) NIC:

Atto performance is similar. The really is no discernable difference in performance between the two pools. I get that this is not apples to apples, but really, I was expecting more from the "prod" pool. In some cases it's slower than the "backup" pool, and faster in others.

Additionally, L2ARC hit rate is abysmal. I cannot explain the gap at noon.
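For anyone wanting to sanity-check the L2ARC hit rate mentioned in this post, here is a minimal sketch of how the percentage is usually derived from the raw ARC counters. It assumes the `name type data` layout that OpenZFS exposes on Linux (`/proc/spl/kstat/zfs/arcstats`); on a FreeBSD-based system the same counters come from `sysctl` instead. The helper names and the sample numbers are made up for illustration and are not taken from this system.

```python
def hit_rate(hits: int, misses: int) -> float:
    """Return hits / (hits + misses) as a percentage, or 0.0 with no lookups."""
    total = hits + misses
    return 100.0 * hits / total if total else 0.0


def parse_arcstats(text: str) -> dict:
    """Parse 'name type data' lines into {name: value}; skip header lines."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Counter lines have exactly three fields and a numeric value.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


# Hypothetical sample in the assumed layout (illustrative values only).
SAMPLE = """\
name                            type data
hits                            4    900
misses                          4    100
l2_hits                         4    5
l2_misses                       4    95
"""

stats = parse_arcstats(SAMPLE)
arc_pct = hit_rate(stats["hits"], stats["misses"])
l2_pct = hit_rate(stats["l2_hits"], stats["l2_misses"])
print(f"ARC hit rate:   {arc_pct:.1f}%")
print(f"L2ARC hit rate: {l2_pct:.1f}%")
```

The L2ARC is only consulted after an ARC miss, so its hit rate is measured against a much smaller number of lookups than the ARC hit rate is.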
