Zepherian04

Dabbler

- Joined: Apr 1, 2021
- Messages: 10

Hello good people!

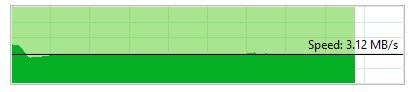

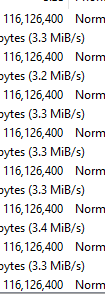

I recently set up an OpenVPN configuration in TrueNAS SCALE to access my pools from my other location, but transferring files through the VPN is painfully slow (~3.3 MB/s). I am using the UDP protocol, SHA256 authentication, the AES-256 cipher, and LZ4 compression. I've tried several combinations of these settings, as well as different ports, and it still caps out at ~3.3 MB/s. The internet connections at both locations are gigabit for both upload and download. I also don't believe I'm hardware-limited: CPU usage barely changes when transferring files, and about half of my 40 threads sit idle most of the time.

Before I set up the VPN, I was using FileZilla with SFTP, which let me run 10 transfers simultaneously. However, each transfer was still limited to the same ~3.3 MB/s.
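To put those numbers in perspective, here is a quick back-of-the-envelope check. It assumes decimal units (1 MB = 1,000,000 bytes, gigabit = 1000 Mbit/s) and ignores protocol overhead; the constants are just the figures from this post, and the helper names are made up for illustration.

```python
# Back-of-the-envelope throughput comparison.
# Assumptions: decimal units (1 MB = 1,000,000 bytes, gigabit = 1000 Mbit/s),
# protocol/VPN overhead ignored. Constants are the figures reported in the post.

LINK_MBIT_S = 1000.0    # advertised gigabit link at both locations
PER_STREAM_MB_S = 3.3   # observed cap per transfer (and through the VPN)
STREAMS = 10            # simultaneous FileZilla SFTP transfers


def mb_s_to_mbit_s(mb_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mb_s * 8


def utilisation(mbit_s: float, link_mbit_s: float = LINK_MBIT_S) -> float:
    """Return the fraction of the link capacity that a given rate uses."""
    return mbit_s / link_mbit_s


per_stream = mb_s_to_mbit_s(PER_STREAM_MB_S)
aggregate = per_stream * STREAMS

print(f"one stream: {per_stream:.1f} Mbit/s ({utilisation(per_stream):.1%} of link)")
print(f"{STREAMS} streams: {aggregate:.1f} Mbit/s ({utilisation(aggregate):.1%} of link)")
```

With these assumptions a single transfer uses only a few percent of the line, while 10 transfers together reach roughly a quarter of it. Because the total grows with the number of parallel transfers while each one stays pinned at the same rate, the limit looks like it applies per connection rather than to the whole line; the arithmetic alone doesn't say what is causing it.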

I don't know enough about networking to even know where to start looking, so any and all help is greatly appreciated!

I'm starting to think my ISP is doing some funny business...