Hoping someone can help me figure out what is going on. My FreeNAS box has been great, when it works. Lately it has been getting into a fit: whenever I access the WebUI dashboard, 3 processes nail the system to the wall, to the point that unless I'm already logged in via SSH, I can't even log in. The console is slow and unresponsive, slower than SSH, and even if I issue a shutdown, it never finishes, hanging after it has already unmounted all the pooled disks. If I'm lucky enough to already be SSH'd in, I can run top and see the output posted under "Code:" below.

FreeNAS is the latest stable version as of this writing (updated 2 nights ago). The storage/boot drives and memory are barely touched, but the CPU is cranking on something from collectd, rrdcached and python. I see nothing in the logs, and eventually it gets to the point that the metric offloads to Graphite stop, the Web UI won't load (in fact I will see socket timeout messages on the console from Django), NFS won't mount, and existing mounts are very slow or time out. I'd like to figure out what is causing it and get it to stop. Or, worse yet, find whatever hardware may have gone bad. I'll admit, I don't really know how to check whether my backplanes or drives are okay, beyond the extended SMART checks, which are returning okay.

Here is the hardware FreeNAS is running on:

Processor: 2x AMD Opteron 6212 Octo (8) Core 2.6GHz (Total 16 Cores)

Memory: 64GB DDR3 (8 x 8GB, DDR3 REG PC3-10600R, 1333MHz)

Server Chassis/Case: CSE-847E16-R1400UB

Motherboard: H8DGU-F

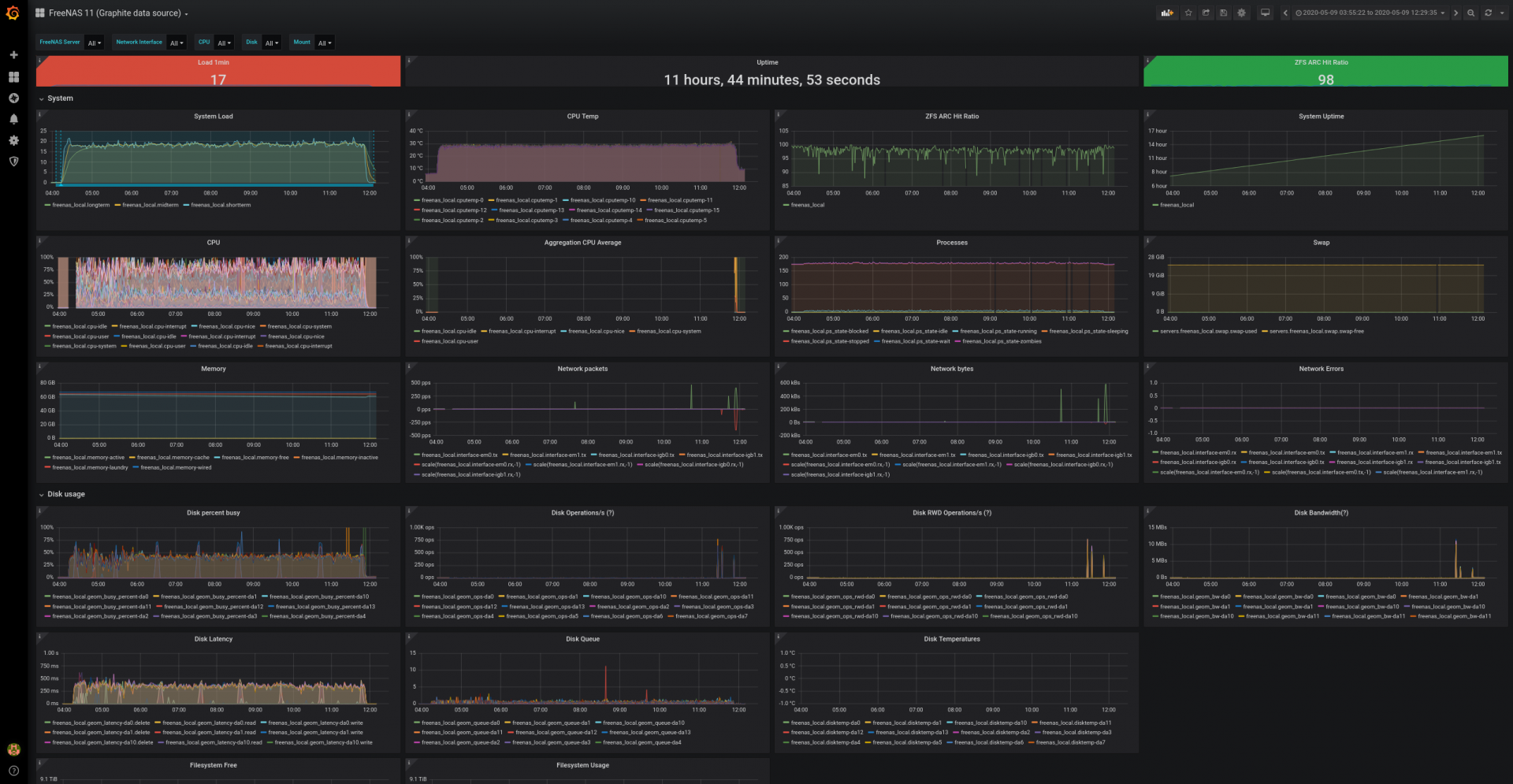

Just last night it happened again while I was asleep. I woke up to find it in the problematic state. I was poking around trying to get more information while it was happening (not very successfully; I'm normally a Linux admin guy and FreeBSD is really testing what I can do without the tools I'm used to, but that's a learning problem). Suddenly I noticed the problem had stopped. I double-checked my Graphite/Grafana server (which I stood up specifically to figure this out), and all the metrics that weren't getting saved off were now there. Here are the metrics I have for the 8 hour period during which collectd, python3.7, and rrdcached were working hard in some mine, and I don't even know why.

Unfortunately, when I opened the UI after this happened, it happened yet again, and the UI has yet to return. I suspect that it will take another 8 hours to return unless I force a reboot. The UI seems fine if I get in and out quickly enough. Otherwise the command line is my only way to do anything. Any help would be greatly appreciated.

Code:

last pid: 7979; load averages: 22.66, 23.51, 22.78 up 0+06:52:39 22:03:57

59 processes: 2 running, 57 sleeping

CPU: 19.3% user, 0.0% nice, 64.9% system, 5.9% interrupt, 9.9% idle

Mem: 222M Active, 1456M Inact, 1694M Wired, 59G Free

ARC: 547M Total, 126M MFU, 390M MRU, 9709K Anon, 5774K Header, 16M Other

132M Compressed, 816M Uncompressed, 6.16:1 Ratio

Swap: 24G Total, 24G Free

PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND

2929 root 11 25 0 390M 340M nanslp 9 273:01 246.04% collectd

79 root 37 46 0 316M 238M umtxn 4 189:26 228.95% python3.7

1427 root 8 21 0 31968K 11632K select 12 79:18 93.42% rrdcached

7836 root 1 81 0 7892K 3920K CPU5 5 30:22 25.00% top

3328 root 1 38 0 12924K 7912K select 14 7:50 15.79% sshd

1359 root 1 30 0 12484K 12580K select 14 11:22 11.84% ntpd

1504 root 1 26 0 127M 106M kqread 5 6:12 9.21% uwsgi-3.7

1407 root 1 28 0 38072K 22540K select 6 3:30 3.95% winbindd

1391 root 1 22 0 31052K 17860K select 2 3:03 2.63% nmbd

1442 root 1 20 0 120M 103M select 5 0:05 1.32% smbd

1035 root 1 22 0 9164K 5556K select 10 0:04 1.32% devd
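In case it helps anyone compare notes, here is a minimal sketch (Python 3, standard library only) of how process rows like the ones in the top output above could be filtered down to the heavy hitters. The names `ROW`, `busy_processes` and `SAMPLE`, and the 50% threshold, are placeholders made up for illustration. The regex assumes the 12-column layout shown in the paste (PID through COMMAND, with the C column present), so it may need adjusting for other top layouts.

```python
import re

# One process row of FreeBSD `top` output, assuming this column layout:
# PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND
ROW = re.compile(
    r"^\s*(?P<pid>\d+)\s+(?P<user>\S+)\s+(?P<thr>\d+)\s+\S+\s+\S+\s+"
    r"\S+\s+\S+\s+(?P<state>\S+)\s+\d+\s+(?P<time>\S+)\s+"
    r"(?P<wcpu>[\d.]+)%\s+(?P<cmd>\S+)\s*$"
)


def busy_processes(top_text, threshold=50.0):
    """Return (command, pid, wcpu) tuples at or above threshold, busiest first."""
    hits = []
    for line in top_text.splitlines():
        m = ROW.match(line)  # header and blank lines simply do not match
        if m and float(m["wcpu"]) >= threshold:
            hits.append((m["cmd"], int(m["pid"]), float(m["wcpu"])))
    return sorted(hits, key=lambda h: h[2], reverse=True)


# Rows copied from the paste above (hypothetical variable name).
SAMPLE = """\
PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND
2929 root 11 25 0 390M 340M nanslp 9 273:01 246.04% collectd
79 root 37 46 0 316M 238M umtxn 4 189:26 228.95% python3.7
1427 root 8 21 0 31968K 11632K select 12 79:18 93.42% rrdcached
7836 root 1 81 0 7892K 3920K CPU5 5 30:22 25.00% top
"""

if __name__ == "__main__":
    for cmd, pid, wcpu in busy_processes(SAMPLE):
        print(f"{cmd} (pid {pid}): {wcpu:.2f}% WCPU")
```

With the sample rows, only collectd, python3.7 and rrdcached clear the 50% threshold. The same function should accept text saved from a batch-mode run of top, though it has only been checked against the rows copied from the paste.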