Hello,

I want to write my first experience with nvme namespaces because I can't find a lot of information on this subject.

I'm not a FreeBSD or TrueNAS Core expert, so I probably made some mistake.

I buy one Kingston DC1500M (960GB, 64 namespaces supported).

I put the new disk on my system to see if it is working, made a pool with only the disk above, perform some test and then destroy the pool.

Then I start to use nvmecontrol command.

List NVME Controllers and namespaces

The only disk that support namespaces is the last one. So I use this disk (nvme4) on the following commands.

Print summary of the IDENTIFY information

List active and allocated namespaces

Print IDENTIFY for allocated namespace

List all controllers in NVM subsystem

List controllers attached to a namespace

I think that the commands above are safe on a running system.

Now I want to destroy this namespace (all the space on disk is used by this namespace) and try to create 4 namespaces.

Below this point the commands executed destroy your namespaces and all the data on your disk!!!

Don't use them!!!

Detach a controller from a namespace

Delete a namespace

These are the sizes in bytes and blocks of my disk (from nvmecontrol ns identify nvme4ns1 command) and I want 4 namespaces:

Bytes:

960197124096 / 4 = 240049281024

Blocks:

1875385008 / 4 = 468846252

Create 4 namespace

Attach namespace and controllers

To create all /dev/nvme4ns? device files I execute a controller reset

Not all /dev/nvd? device are created, so I reboot the server.

After reboot all the devices are present

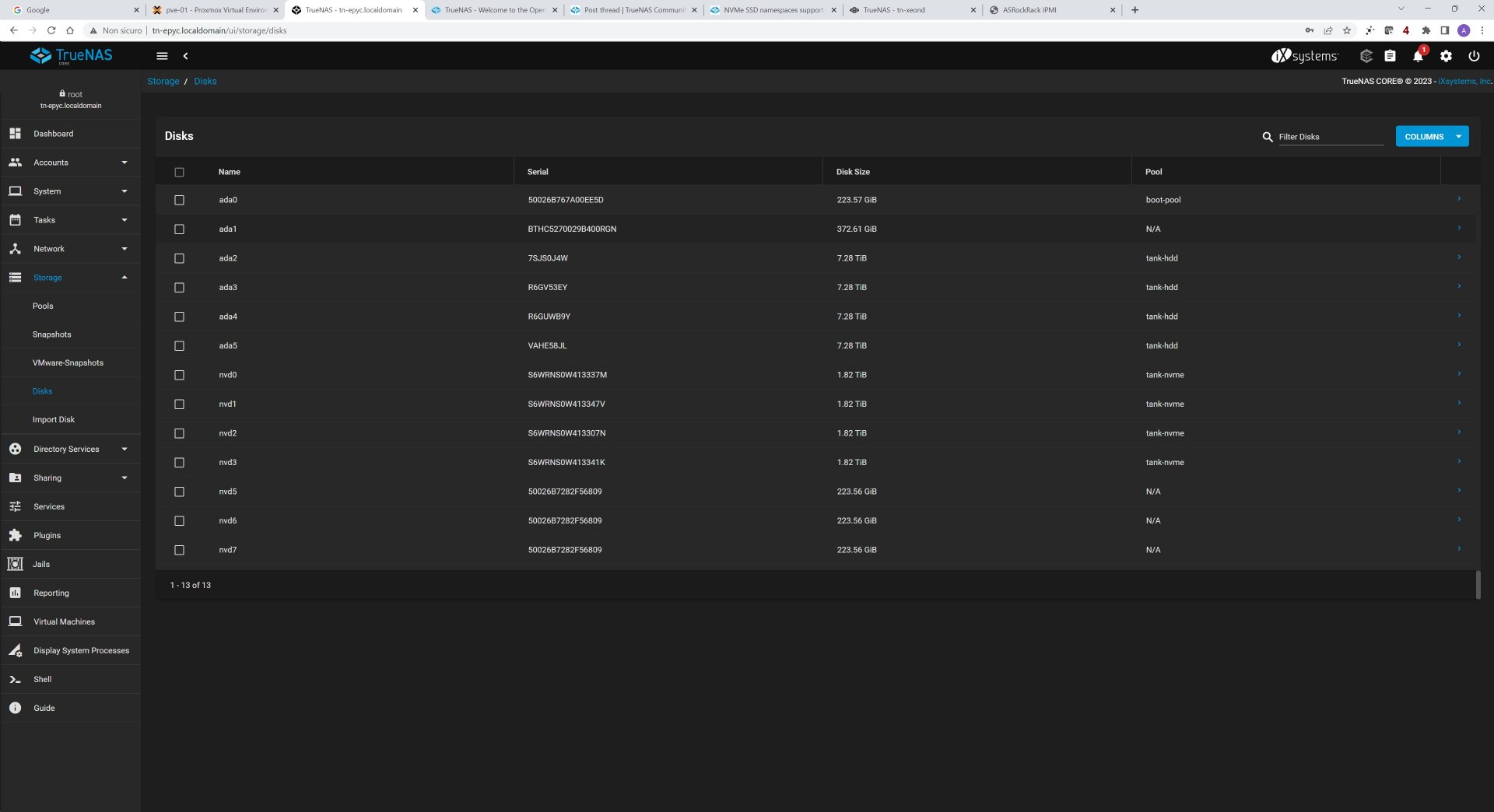

But in TrueNAS web interface nvd4 is missing.

Probably is something wrong that I perform with nvmecontrol command.

If someone has some ideas how to fix my configuration...

Sorry for this long post!

Best Regards,

Antonio

I want to write up my first experience with NVMe namespaces, because I couldn't find much information on this subject.

I'm not a FreeBSD or TrueNAS Core expert, so I probably made some mistakes.

I bought one Kingston DC1500M (960GB, 64 namespaces supported).

I installed the new disk in my system to check that it works: I created a pool with only this disk, ran some tests, and then destroyed the pool.

Then I started using the nvmecontrol command.

List NVMe controllers and namespaces

Code:

root@tn-epyc[~]# nvmecontrol devlist

nvme0: Samsung SSD 980 PRO with Heatsink 2TB

nvme0ns1 (1907729MB)

nvme1: Samsung SSD 980 PRO with Heatsink 2TB

nvme1ns1 (1907729MB)

nvme2: Samsung SSD 980 PRO with Heatsink 2TB

nvme2ns1 (1907729MB)

nvme3: Samsung SSD 980 PRO with Heatsink 2TB

nvme3ns1 (1907729MB)

nvme4: KINGSTON SEDC1500M960G

nvme4ns1 (915715MB)

The only disk that supports multiple namespaces is the last one, so I use this disk (nvme4) in the following commands.

Print summary of the IDENTIFY information

Code:

root@tn-epyc[~]# nvmecontrol identify nvme4

Controller Capabilities/Features

================================

Vendor ID: 2646

Subsystem Vendor ID: 2646

Serial Number: 50026B7282F56809

Model Number: KINGSTON SEDC1500M960G

Firmware Version: S67F0103

Recommended Arb Burst: 3

IEEE OUI Identifier: b7 26 00

Multi-Path I/O Capabilities: Not Supported

Max Data Transfer Size: 262144 bytes

Controller ID: 0x0001

Version: 1.3.0

Admin Command Set Attributes

============================

Security Send/Receive: Not Supported

Format NVM: Supported

Firmware Activate/Download: Supported

Namespace Management: Supported

Device Self-test: Not Supported

Directives: Not Supported

NVMe-MI Send/Receive: Not Supported

Virtualization Management: Not Supported

Doorbell Buffer Config: Not Supported

Get LBA Status: Not Supported

Sanitize: Not Supported

Abort Command Limit: 4

Async Event Request Limit: 4

Number of Firmware Slots: 4

Firmware Slot 1 Read-Only: No

Per-Namespace SMART Log: No

Error Log Page Entries: 64

Number of Power States: 1

Total NVM Capacity: 960197124096 bytes

Unallocated NVM Capacity: 0 bytes

Firmware Update Granularity: 00 (Not Reported)

Host Buffer Preferred Size: 0 bytes

Host Buffer Minimum Size: 0 bytes

NVM Command Set Attributes

==========================

Submission Queue Entry Size

Max: 64

Min: 64

Completion Queue Entry Size

Max: 16

Min: 16

Number of Namespaces: 64

Compare Command: Not Supported

Write Uncorrectable Command: Not Supported

Dataset Management Command: Supported

Write Zeroes Command: Not Supported

Save Features: Supported

Reservations: Not Supported

Timestamp feature: Supported

Verify feature: Not Supported

Fused Operation Support: Not Supported

Format NVM Attributes: All-NVM Erase, All-NVM Format

Volatile Write Cache: Not Present

NVM Subsystem Name: nqn.2023-02.com.kingston:nvm-subsystem-sn-50026B7282F56809
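The line that matters for this experiment is "Namespace Management: Supported". As a rough sketch (not part of the original session), the check could be scripted by filtering the identify output. The function name ns_mgmt_supported is made up for illustration, and it assumes the output keeps the "Field: value" layout shown above.

```sh
# Reads the text output of `nvmecontrol identify <controller>` on stdin.
# Exit status is 0 only if the "Namespace Management" line says "Supported".
# (ns_mgmt_supported is a hypothetical helper name, not an nvmecontrol feature.)
ns_mgmt_supported() {
  awk -F': *' '
    $1 == "Namespace Management" { ok = ($2 ~ /^Supported/) }
    END { exit (ok ? 0 : 1) }
  '
}

# Example use on the FreeBSD host (shown as a comment, not executed here):
#   for c in nvme0 nvme1 nvme2 nvme3 nvme4; do
#     nvmecontrol identify "$c" | ns_mgmt_supported && echo "$c: namespace management supported"
#   done
```

The helper only reads text, so it should be as harmless as the identify command itself.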

List active and allocated namespaces

Code:

root@tn-epyc[~]# nvmecontrol ns active nvme4

Active namespaces:

1

root@tn-epyc[~]# nvmecontrol ns allocated nvme4

Allocated namespaces:

1

Print IDENTIFY for allocated namespace

Code:

root@tn-epyc[~]# nvmecontrol ns identify nvme4ns1

Size: 1875385008 blocks

Capacity: 1875385008 blocks

Utilization: 1875385008 blocks

Thin Provisioning: Not Supported

Number of LBA Formats: 1

Current LBA Format: LBA Format #00

Data Protection Caps: Not Supported

Data Protection Settings: Not Enabled

Multi-Path I/O Capabilities: Not Supported

Reservation Capabilities: Not Supported

Format Progress Indicator: Not Supported

Deallocate Logical Block: Read Not Reported

Optimal I/O Boundary: 0 blocks

NVM Capacity: 960197124096 bytes

Globally Unique Identifier: 00000000000000000026b7282f568095

IEEE EUI64: 0026b72002f56809

LBA Format #00: Data Size: 512 Metadata Size: 0 Performance: Best
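The block count and the byte capacity in this output can be cross-checked against each other, since LBA Format #00 uses 512-byte blocks. A minimal sketch using plain shell arithmetic, with the three values copied by hand from the output above:

```sh
# Values copied from the `nvmecontrol ns identify nvme4ns1` output
blocks=1875385008           # "Size", in blocks
lba_size=512                # "Data Size" of LBA Format #00, in bytes
nvm_capacity=960197124096   # "NVM Capacity", in bytes

bytes=$((blocks * lba_size))
echo "blocks * lba_size = $bytes bytes"

if [ "$bytes" -eq "$nvm_capacity" ]; then
  echo "matches the reported NVM Capacity"
else
  echo "does NOT match the reported NVM Capacity"
fi
```

For this disk the two figures agree, so sizes for new namespaces can be worked out in blocks without losing anything to rounding.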

List all controllers in NVM subsystem

Code:

root@tn-epyc[~]# nvmecontrol ns controllers nvme4

NVM subsystem includes 1 controller(s):

0x0001

List controllers attached to a namespace

Code:

root@tn-epyc[~]# nvmecontrol ns attached nvme4ns1

Attached 1 controller(s):

0x0001

I think the commands above are safe to run on a live system.

Now I want to delete this namespace (it uses all the space on the disk) and try to create 4 namespaces in its place.

Below this point, the commands destroy your namespaces and all the data on your disk!!!

Don't use them!!!

Detach a controller from a namespace

Code:

root@tn-epyc[~]# nvmecontrol ns detach nvme4ns1

namespace 1 detached

# Check

root@tn-epyc[~]# nvmecontrol ns attached nvme4ns1

Attached 0 controller(s):

root@tn-epyc[~]# nvmecontrol ns active nvme4

Active namespaces:

root@tn-epyc[~]# nvmecontrol ns allocated nvme4

Allocated namespaces:

1

Delete a namespace

Code:

root@tn-epyc[~]# nvmecontrol ns delete nvme4ns1

namespace 1 deleted

# Check

root@tn-epyc[~]# nvmecontrol devlist

...

nvme4: KINGSTON SEDC1500M960G

root@tn-epyc[~]# nvmecontrol ns allocated nvme4

Allocated namespaces:
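One extra check that was not part of the session above: after the delete, I would expect the controller to report the freed space in the "Unallocated NVM Capacity" field of nvmecontrol identify. The sketch below pulls a capacity field out of that output. The helper name capacity_bytes is hypothetical, and it assumes the "Field: N bytes" layout seen in the identify output earlier.

```sh
# Reads `nvmecontrol identify <controller>` output on stdin and prints the
# byte count of the capacity field named in $1.
# (capacity_bytes is a hypothetical helper name used only for illustration.)
capacity_bytes() {
  awk -F': *' -v field="$1" '
    $1 == field { split($2, parts, " "); print parts[1] }
  '
}

# Example use on the FreeBSD host (shown as a comment, not executed here):
#   nvmecontrol identify nvme4 | capacity_bytes "Total NVM Capacity"
#   nvmecontrol identify nvme4 | capacity_bytes "Unallocated NVM Capacity"
```

If the two numbers are equal, no namespace is holding any space and the full capacity should be available for the new namespaces.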

These are the sizes of my disk in bytes and in blocks (from the nvmecontrol ns identify nvme4ns1 output), divided by 4 because I want 4 equal namespaces:

Bytes:

960197124096 / 4 = 240049281024

Blocks:

1875385008 / 4 = 468846252
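The same division can be done in the shell, which also makes it easy to see whether the block count divides evenly and to preview the create commands before running anything. This is only a dry-run sketch: it prints the commands and executes nothing. The -s, -c, -L and -d values are the ones used in this post; the variable names are just for illustration.

```sh
total_blocks=1875385008   # "Size" reported by ns identify before the delete
ns_count=4                # number of namespaces wanted
ctrl=nvme4                # controller name used in this post

blocks_per_ns=$((total_blocks / ns_count))
remainder=$((total_blocks % ns_count))
echo "blocks per namespace: $blocks_per_ns (remainder: $remainder)"

# Dry run: print one create command per namespace instead of executing it
i=1
while [ "$i" -le "$ns_count" ]; do
  echo "nvmecontrol ns create -s $blocks_per_ns -c $blocks_per_ns -L 0 -d 0 $ctrl"
  i=$((i + 1))
done
```

A non-zero remainder would mean a few blocks are left unallocated after creating equal namespaces; here the remainder is 0.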

Create 4 namespaces

Code:

root@tn-epyc[~]# nvmecontrol ns create -s 468846252 -c 468846252 -L 0 -d 0 nvme4

namespace 1 created

root@tn-epyc[~]# nvmecontrol ns create -s 468846252 -c 468846252 -L 0 -d 0 nvme4

namespace 2 created

root@tn-epyc[~]# nvmecontrol ns create -s 468846252 -c 468846252 -L 0 -d 0 nvme4

namespace 3 created

root@tn-epyc[~]# nvmecontrol ns create -s 468846252 -c 468846252 -L 0 -d 0 nvme4

namespace 4 created

# Check

root@tn-epyc[~]# nvmecontrol ns active nvme4

Active namespaces:

root@tn-epyc[~]# nvmecontrol ns allocated nvme4

Allocated namespaces:

1

2

3

4

Attach the namespaces to the controller

Code:

root@tn-epyc[~]# nvmecontrol ns attach -n 1 -c 1 nvme4

namespace 1 attached

root@tn-epyc[~]# nvmecontrol ns attach -n 2 -c 1 nvme4

namespace 2 attached

root@tn-epyc[~]# nvmecontrol ns attach -n 3 -c 1 nvme4

namespace 3 attached

root@tn-epyc[~]# nvmecontrol ns attach -n 4 -c 1 nvme4

namespace 4 attached

# Check

root@tn-epyc[~]# nvmecontrol ns active nvme4

Active namespaces:

1

2

3

4

root@tn-epyc[~]# nvmecontrol ns allocated nvme4

Allocated namespaces:

1

2

3

4

root@tn-epyc[~]# nvmecontrol devlist

nvme0: Samsung SSD 980 PRO with Heatsink 2TB

nvme0ns1 (1907729MB)

nvme1: Samsung SSD 980 PRO with Heatsink 2TB

nvme1ns1 (1907729MB)

nvme2: Samsung SSD 980 PRO with Heatsink 2TB

nvme2ns1 (1907729MB)

nvme3: Samsung SSD 980 PRO with Heatsink 2TB

nvme3ns1 (1907729MB)

nvme4: KINGSTON SEDC1500M960G

nvme4ns1 (228928MB)

nvme4ns2 (228928MB)

nvme4ns3 (228928MB)

nvme4ns4 (228928MB)

To create all the /dev/nvme4ns? device files, I executed a controller reset

Code:

root@tn-epyc[~]# nvmecontrol reset nvme4

# Check

root@tn-epyc[~]# ls -la /dev/nvme4*

crw------- 1 root wheel 0x55 Jun 21 14:42 /dev/nvme4

crw------- 1 root wheel 0x87 Jun 21 14:42 /dev/nvme4ns1

crw------- 1 root wheel 0xf2 Jun 22 14:06 /dev/nvme4ns2

crw------- 1 root wheel 0xf3 Jun 22 14:06 /dev/nvme4ns3

crw------- 1 root wheel 0xf4 Jun 22 14:06 /dev/nvme4ns4
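The ls check above is done by eye. As a small sketch, a helper could instead report which namespace device nodes are still missing after the reset. The function name missing_ns_nodes and its optional third argument (a directory to look in, defaulting to /dev) are made up for illustration.

```sh
# Prints the name of every expected namespace node that does not exist.
#   $1 = controller name (e.g. nvme4)
#   $2 = number of namespaces expected
#   $3 = directory to look in (optional, defaults to /dev)
# (missing_ns_nodes is a hypothetical helper name.)
missing_ns_nodes() {
  ctrl=$1
  count=$2
  dev_dir=${3:-/dev}
  i=1
  while [ "$i" -le "$count" ]; do
    [ -e "$dev_dir/${ctrl}ns$i" ] || echo "${ctrl}ns$i"
    i=$((i + 1))
  done
}

# Example use on the FreeBSD host (shown as a comment, not executed here):
#   missing_ns_nodes nvme4 4
```

No output means all expected /dev/nvme4ns? nodes exist. It says nothing about the nvd devices, which are checked separately.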

Not all the /dev/nvd? devices were created, so I rebooted the server.

After the reboot, all the devices are present

Code:

root@tn-epyc[~]# ls -la /dev/nvd?

crw-r----- 1 root operator 0x73 Jun 22 14:49 /dev/nvd0

crw-r----- 1 root operator 0x79 Jun 22 14:49 /dev/nvd1

crw-r----- 1 root operator 0x7f Jun 22 14:49 /dev/nvd2

crw-r----- 1 root operator 0x85 Jun 22 14:49 /dev/nvd3

crw-r----- 1 root operator 0x8e Jun 22 14:49 /dev/nvd4

crw-r----- 1 root operator 0x8f Jun 22 14:49 /dev/nvd5

crw-r----- 1 root operator 0x90 Jun 22 14:49 /dev/nvd6

crw-r----- 1 root operator 0x91 Jun 22 14:49 /dev/nvd7

But nvd4 is missing in the TrueNAS web interface.

I probably did something wrong with the nvmecontrol commands.

If anyone has an idea how to fix my configuration, I would be grateful.

Sorry for this long post!

Best Regards,

Antonio