FreeNAS-11.2-U5

I have two P3605 1.6 TB NVMe drives that were spare/unused, so I decided to put them in the system listed in my sig and run them as a mirror (mainly as an NFS share for my VMware/vCenter environment).

Once I set them up and added the NFS share, I started stress-testing the mirror. I did some Storage vMotions to it, added a VMDK to a Win10 VM and ran the AJA and Blackmagic disk tests, or just copied large files in Windows (the standard disk I/O test tools I use). While I'm seeing great speeds (~500-950 MB/s, maxing out my 10GbE), something seems very wrong. According to gstat (and I could be wrong), it looks related to delete operations (ms/d).

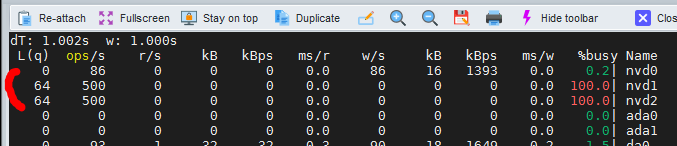

After whichever test or copy finishes (so guest I/O has stopped), I keep seeing 100% busy spikes on nvd1 and nvd2. Note that there are no reads or writes, yet the queue keeps filling up:

(nvd0 is unrelated to all this; it's a ZIL for other pools. nvd1 and nvd2 are the drives in question here.)

This sometimes continues for 3 or more minutes after I/O has stopped (100%, then it drops to ~30-50% for a few seconds, then goes back to 100%). I wouldn't think much of it, but for the first time ever I saw a few Storage vMotions time out mid-transfer (svMotion to the NVMe mirror while this 100% busy / 64 L(q) was going on). I've never seen that, even on the HDD-backed NFS shares on this same server.

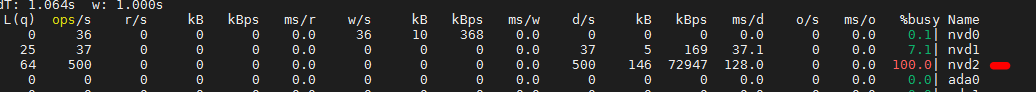

So I figured one of the NVMe drives might be bad, and I redid the test with each one as a single drive. I see exactly the same thing on whichever of the two drives I'm doing disk I/O to. (Also, both drives came from working systems, one of them a spare FreeNAS box I have.)

(Again, note that there are no reads or writes, but 500 IOPS, maxing out the queue depth of 64 with these ms/d transactions.)

As this is all test data, I have tried turning off compression as well as setting sync=disabled, but neither has any effect on the issue.

Does anyone have any ideas, or has anyone seen this before?

Thanks!

FWIW, here are some screenshots of the settings (exactly the same as I use for my other SSD- and HDD-backed NFS shares, which are running great):