tomkinsg

Cadet

- Joined

- Aug 11, 2021

- Messages

- 4

I had an alert about a degraded disk two days ago. I ordered two replacement disks from Amazon (figured I may as well have a spare).


They arrived, and this afternoon I had another disk report as degraded. Oh no!

That's the second thing going wrong.

Now when I go to the GUI and look at the pool, I see no drives. I do see all the drives under Disks in the GUI.

When I run zpool status in the shell, this is returned:

Code:

root@TrueNAS:~ # zpool status

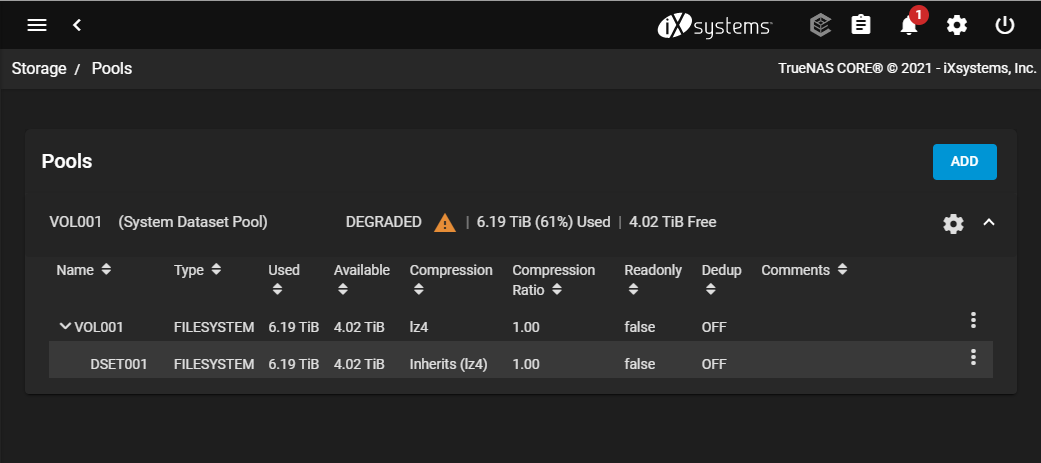

pool: VOL001

state: DEGRADED

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: resilvered 444K in 00:00:02 with 0 errors on Wed Aug 11 15:31:24 2021

config:

NAME STATE READ WRITE CKSUM

VOL001 DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

gptid/af14e8bd-b359-11e6-ac8e-d05099760fd1 DEGRADED 0 0 0 too many errors

gptid/b063d911-b359-11e6-ac8e-d05099760fd1 ONLINE 0 0 0

gptid/b15f3b6b-b359-11e6-ac8e-d05099760fd1 DEGRADED 0 0 0 too many errors

gptid/b279a1ed-b359-11e6-ac8e-d05099760fd1 ONLINE 0 0 0

errors: No known data errors

pool: freenas-boot

state: ONLINE

status: Some supported features are not enabled on the pool. The pool can

still be used, but some features are unavailable.

action: Enable all features using 'zpool upgrade'. Once this is done,

the pool may no longer be accessible by software that does not support

the features. See zpool-features(5) for details.

scan: scrub repaired 0B in 00:00:37 with 0 errors on Tue Aug 10 03:45:37 2021

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0
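To make it easier to see which members are affected, here is a minimal sketch that pulls the non-ONLINE gptid rows out of zpool status text. It is only an illustration: the function name `unhealthy_members` and the `SAMPLE` string are hypothetical, the sample is copied from the VOL001 section of the output above, and the regular expression assumes the usual NAME / STATE / READ / WRITE / CKSUM column order.

```python
import re

# Sample rows copied from the VOL001 config section of the output above.
SAMPLE = """\
        NAME                                            STATE     READ WRITE CKSUM
        VOL001                                          DEGRADED     0     0     0
          raidz1-0                                      DEGRADED     0     0     0
            gptid/af14e8bd-b359-11e6-ac8e-d05099760fd1  DEGRADED     0     0     0  too many errors
            gptid/b063d911-b359-11e6-ac8e-d05099760fd1  ONLINE       0     0     0
            gptid/b15f3b6b-b359-11e6-ac8e-d05099760fd1  DEGRADED     0     0     0  too many errors
            gptid/b279a1ed-b359-11e6-ac8e-d05099760fd1  ONLINE       0     0     0
"""

# Assumed row layout: name, state, three counters, then an optional note.
ROW = re.compile(r"^\s*(gptid/\S+)\s+(\S+)\s+(\S+)\s+(\S+)\s+(\S+)\s*(.*)$")


def unhealthy_members(status_text):
    """Return (device, state, note) for each gptid row whose state is not ONLINE."""
    found = []
    for line in status_text.splitlines():
        match = ROW.match(line)
        if match and match.group(2) != "ONLINE":
            found.append((match.group(1), match.group(2), match.group(6).strip()))
    return found


if __name__ == "__main__":
    for device, state, note in unhealthy_members(SAMPLE):
        print(device, state, note)
```

Run against the sample, this lists the two gptid entries marked DEGRADED, which are the ones that still need to be matched to physical disks.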

I tried rebooting, removing the first degraded disk, putting a new one in, and then issuing

I rebooted, replaced the degraded original ada0, tried again, and got the same result.

The only good thing is that I still appear to be able to access the data on the pool through SMB.

I would really appreciate some help.

GT