drwoodcomb

Explorer

- Joined: Sep 15, 2016
- Messages: 74

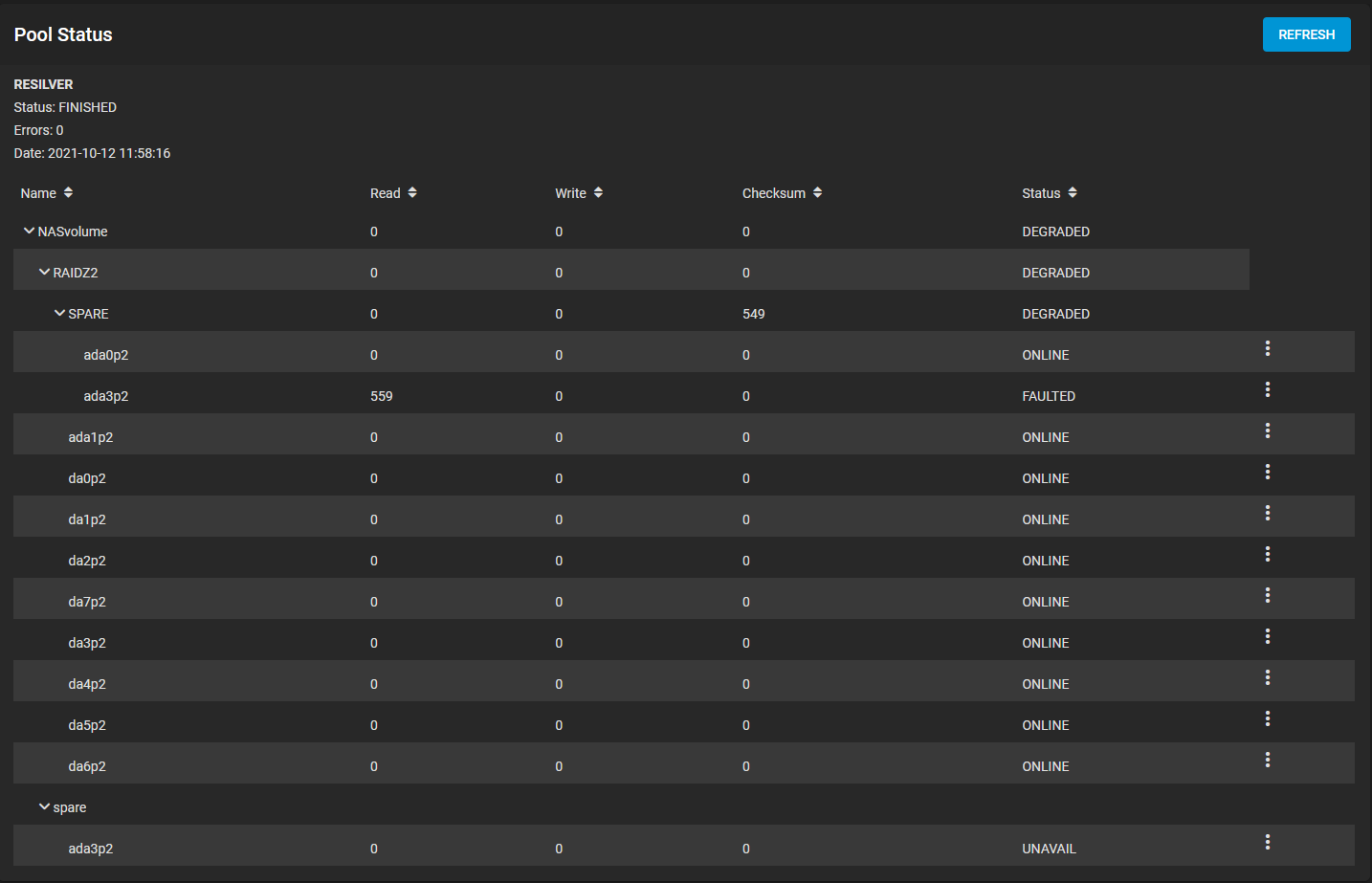

Four days ago I had a drive failure and the hot spare kicked in. I replaced the defective drive and it resilvered. I read in another thread that the hot spare should automatically go back to being a hot spare once the replacement drive has resilvered. The resilvering finished 4 days ago with 0 errors, but the hot spare is still in my pool. If I try to remove it I get the following error:

libzfs.ZFSException: Pool busy; removal may already be in progress

If I try to detach it instead, I get the following error:

AttributeError: 'NoneType' object has no attribute 'type'

Please help me. I have 2 other drives throwing up SMART errors and would like to get my pool in top health before I lose data. Any help would be greatly appreciated. Here is my zpool status:

Code:

root@freenas[~]# zpool status
  pool: NASvolume
 state: ONLINE
status: Some supported features are not enabled on the pool. The pool can
        still be used, but some features are unavailable.
action: Enable all features using 'zpool upgrade'. Once this is done,
        the pool may no longer be accessible by software that does not support
        the features. See zpool-features(5) for details.
  scan: resilvered 644K in 00:00:04 with 0 errors on Thu Oct 14 03:35:58 2021
config:

        NAME                                                STATE     READ WRITE CKSUM
        NASvolume                                           ONLINE       0     0     0
          raidz2-0                                          ONLINE       0     0     0
            spare-0                                         ONLINE       0     0     0
              gptid/2b0f7b56-2b75-11ec-a0b9-0cc47a710628    ONLINE       0     0     0
              gptid/fea4b15e-c410-11ea-a06c-0cc47a710628    ONLINE       0     0     0
            gptid/0d02354d-c3eb-11ea-a06c-0cc47a710628      ONLINE       0     0     0
            gptid/d056aee7-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
            gptid/d606c37c-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
            gptid/dbf993cb-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
            gptid/9b05a82e-0f5e-11eb-9d64-0cc47a710628      ONLINE       0     0     0
            gptid/e797fdc4-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
            gptid/ed3fc731-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
            gptid/f32cef26-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
            gptid/f8ffbe3e-5ffc-11e9-b7a3-0cc47a710628      ONLINE       0     0     0
        spares
          gptid/fea4b15e-c410-11ea-a06c-0cc47a710628        INUSE     currently in use

errors: No known data errors

  pool: freenas-boot
 state: ONLINE
status: Some supported features are not enabled on the pool. The pool can
        still be used, but some features are unavailable.
action: Enable all features using 'zpool upgrade'. Once this is done,
        the pool may no longer be accessible by software that does not support
        the features. See zpool-features(5) for details.
  scan: scrub repaired 0B in 00:01:19 with 0 errors on Tue Oct 12 03:46:19 2021
config:

        NAME                                                STATE     READ WRITE CKSUM
        freenas-boot                                        ONLINE       0     0     0
          ada2p2                                            ONLINE       0     0     0

errors: No known data errors

root@freenas[~]#

I should also mention that some really weird stuff happened after the drive failure. The hot spare I installed had been thoroughly tested and was a known good drive; it was even having short and long SMART tests run on it every month. When it kicked in to take over for the bad drive it suddenly showed 559 READ errors (see picture below). After restarting the TrueNAS machine those errors were gone. I have no idea what's going on. I've had drives fail before, but this is the first time I've tried having a hot spare. It seems like it's more headache than it's worth.
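
For anyone checking the same thing from the shell rather than the UI, here is a minimal sketch that pulls the in-use spares out of `zpool status` output. The helper name `inuse_spares` is made up for illustration, and the parsing assumes the layout shown in the output above (a `spares` heading, one device per line with `INUSE` in the second column, and an `errors:` line closing each pool). The commented `zpool detach` line is the general CLI form of the detach attempted above; whether it succeeds where the UI failed on this pool is not something the output above can confirm.

```shell
# Hypothetical helper: list spare devices that `zpool status` reports as INUSE.
# Reads `zpool status` text on stdin and prints one device name per line.
inuse_spares() {
  awk '
    $1 == "spares"  { in_spares = 1; next }   # start of a pool spares section
    $1 == "errors:" { in_spares = 0 }         # the errors line ends the pool config
    in_spares && $2 == "INUSE" { print $1 }   # device name is the first column
  '
}

# Usage (not executed here):
#   zpool status NASvolume | inuse_spares
#
# General form of a manual detach for a device printed by the helper:
#   zpool detach NASvolume <device>
```

Run against the output above, the helper should print only the `gptid/fea4b15e-...` device, since it is the only entry under `spares` marked `INUSE`.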