Hi, I decided to make this post after a couple of hours of searching on Google. Maybe my Google-fu is not good, so please forgive me if my question is dumb.

So I started building my FreeNAS server with two expensive NVMe SSDs, set up as an lz4-compressed mirror without a SLOG. I export the pool over NFS via a 10GbE NIC to another server, and I am trying to copy a 2GB file from the local disk of the second server to my FreeNAS.

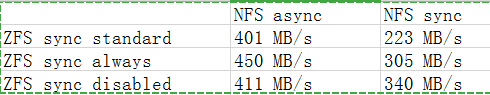

For the different configurations, I get the table below.

So my first question is: why does ZFS with sync=standard perform worst in both cases? Isn't it supposed to be at least comparable to ZFS with sync=always?

The second question is: how should I understand the difference in the second row? My understanding is that when ZFS is set to sync=always, it reports a write as committed only once the write has actually landed in the ZIL, no matter whether NFS explicitly requested a sync or not. So why does it still perform better when NFS is set to async? What role does the NFS sync option play here?
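For anyone who wants to reproduce this from the client side, here is a minimal sketch of a timed write test. It is not the exact procedure behind the numbers above (those came from copying a 2GB file); it only compares a buffered write against one that calls `fsync` after every chunk, which is one way an application asks for data to reach stable storage. The `TARGET_DIR` environment variable and the file name are hypothetical, so point it at wherever the NFS export is mounted.

```python
import os
import tempfile
import time


def timed_write(path, total_bytes, chunk_bytes=1 << 20, fsync_each_chunk=False):
    """Write total_bytes to path and return the throughput in MB/s."""
    # Random data is incompressible, so lz4 on the dataset cannot inflate the result.
    chunk = os.urandom(chunk_bytes)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_bytes, total_bytes - written)
            f.write(chunk[:n])
            written += n
            if fsync_each_chunk:
                # Push this chunk to stable storage before writing the next one.
                f.flush()
                os.fsync(f.fileno())
        # Always sync once at the end so both modes measure data that was committed.
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6


if __name__ == "__main__":
    # TARGET_DIR is a hypothetical variable; it falls back to the local temp directory.
    target_dir = os.environ.get("TARGET_DIR", tempfile.gettempdir())
    target = os.path.join(target_dir, "sync_write_test.bin")
    size = 16 * 1024 * 1024  # small by default; raise it for a realistic run
    try:
        for each_chunk in (False, True):
            rate = timed_write(target, size, fsync_each_chunk=each_chunk)
            print(f"fsync_each_chunk={each_chunk}: {rate:.1f} MB/s")
    finally:
        if os.path.exists(target):
            os.remove(target)
```

Running it once per combination of the ZFS sync property and the NFS sync/async option should show whether the gap comes from per-write commits or from something else.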
