HeloJunkie

I am beating my head against the wall here and could use some help. I have two separate FreeNAS servers running FreeNAS-9.10.2-U3 (e1497f269) with hardware as listed in my sig, but here are the basics:

Server 1 (Name: PlexNAS)

Intel Xeon E5-2640 V3 8 Core 2.66GHz

4 x 16GB PC4-17000 DDR4 2133Mhz Registered ECC

LSI3008 SAS Controller

4 x 6 Drive RAIDZ2 VDEVs

Chelsio T580-SO-CR Dual 40Gbe NIC (Replication Connection to backup FreeNAS server (PlexNAS-ii))

Chelsio T520-SO-CR Dual 10Gbe NIC (Data connection to Plex server & media management server)

109TB RAW

Server 2 (Name: PlexNAS-ii)

2 x Intel Xeon E5603 4 Core Processors

96GB (12 x 8GB) DDR3 PC10600 (1333) REG ECC Memory

LSI SAS3081E-R

4 x 9 Drive RAIDZ2 VDEVs

Chelsio T580-SO-CR Dual 40Gbe NIC (Replication Connection to primary FreeNAS server)

Chelsio T520-SO-CR Dual 10Gbe NIC (Data connection to Plex server & media management server)

108TB RAW

Each of these machines is connected to each other via a 40Gbe dedicated link, no switch involved. Each of these machines is connected to my Plex server (Name: CinaPLEX) via a dedicated 10Gbe dedicated link, no switch involved. Each of these machines is connected to my media management server (Name: Bender) via a dedicated 10Gbe link, no switch involved. Each of these machines is connected to my internet vlan via 1Gbe and a Cisco 3560.

Please see a complete network diagram HERE.

I recently downgraded from Corral to 9.10.2 utilizing a completely fresh install from scratch, not any type of upgrade. After the upgrade, I was doing some replications and noticed absolutely dismal speeds between the two FreeNAS servers that are directly connected via a Chelsio 40Gbe T580-SO-CR card. I did not have this issue running Corral, or at least not to this extent.

Once I noticed the speed issue, I decided to fall back and do a bunch of testing. Here are the tests that I ran:

IPERF

First I did an iperf test between the two freenas servers. These servers have host entries to make sure they are talking to each other across the 40Gbe link and not across another interface. Pinging each other from the command line shows the correct IP address and I ran iperf bound to the specific address on the /30 on which they communicate:

Next, I ran iperf tests between each freenas server and the other two servers (Bender and Cinaplex). Again, host entries make sure that these system talk on the dedicated networks I have created for them and not another interface:

Now, I realize that iperf is a simple network test done in memory and does not take into account the speed of the drives, so then I ran the following tests:

(All data tranfer tests were done across an NFS share)

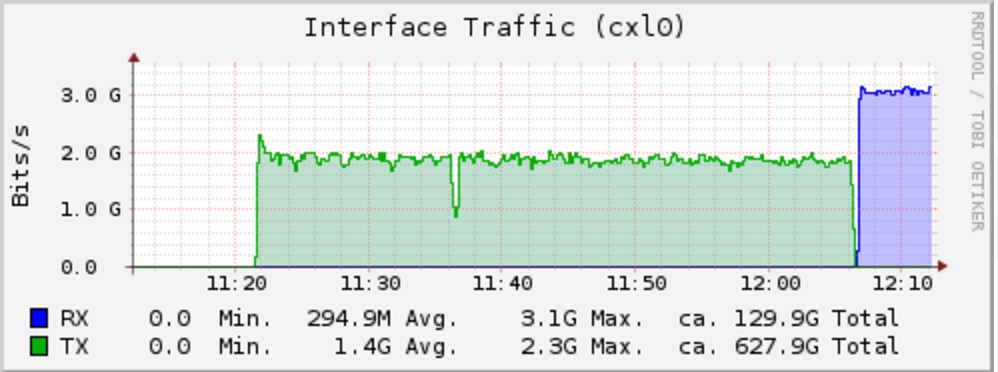

[Writing data TO PlexNAS from CinaPLEX (10Gbe Link)]

[Writing data TO PlexNASii from CinaPLEX (10Gbe Link)]

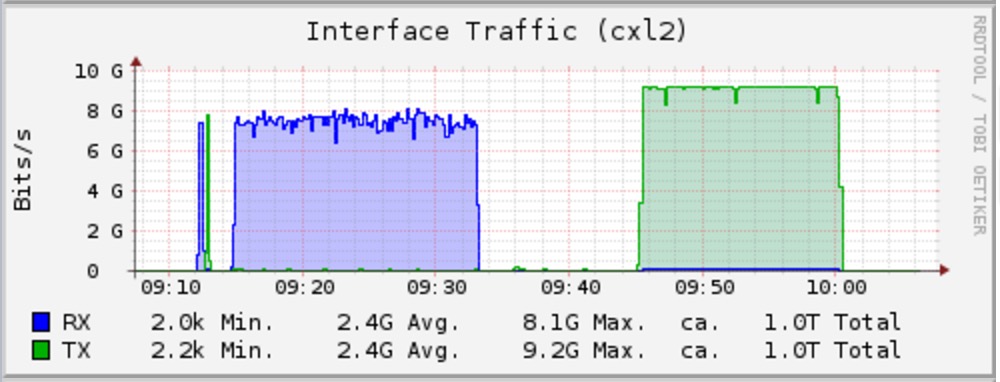

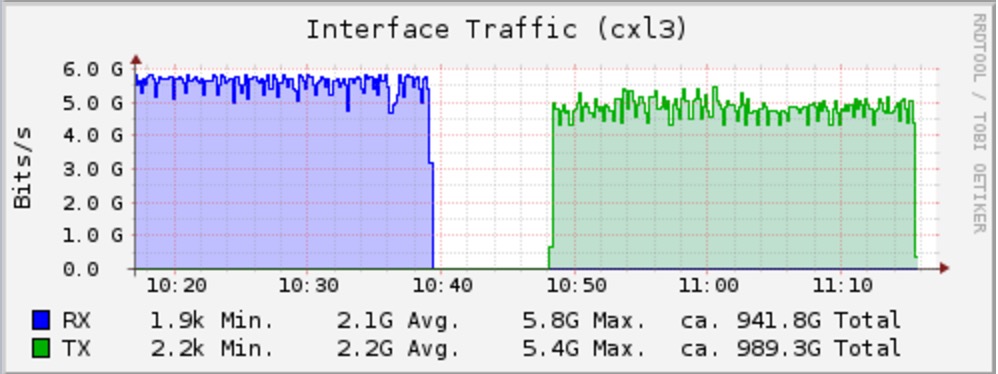

[Writing from PlexNAS TO PlexNASii (40Gbe Link)]

So, I concluded that the PlexNAS and PlexNAS-ii systems have the capability to read and write from the pools with a speed at least that which they do across the 10Gbe interfaces. I concluded that the slower transfer speed across the 10Gbe links (shown in both the iperf and the actual data transfer) is as a result of a slower overall system.

However, what I cannot figure out is why the data transfer speed across the 40Gbe link is slower than across the 10Gbe link? If reading and writing to the pool are constrained by the pool configuration (ie I am maxing out the read/write speeds of the vdevs themselves), then I would expect AT LEAST the same speed across the 40Gbe link as across the 10Gbe links.

I have tried different MTU, ie jumbo, no jumbo and there is no change in the speeds of the iperf test results nor the file copy results.

Anyway, I am hoping that someone here has run into the same issue or may have a suggestion as to what to try next.

Many Thanks

Server 1 (Name: PlexNAS)

Intel Xeon E5-2640 V3 8 Core 2.66GHz

4 x 16GB PC4-17000 DDR4 2133Mhz Registered ECC

LSI3008 SAS Controller

4 x 6 Drive RAIDZ2 VDEVs

Chelsio T580-SO-CR Dual 40Gbe NIC (Replication Connection to backup FreeNAS server (PlexNAS-ii))

Chelsio T520-SO-CR Dual 10Gbe NIC (Data connection to Plex server & media management server)

109TB RAW

Server 2 (Name: PlexNAS-ii)

2 x Intel Xeon E5603 4 Core Processors

96GB (12 x 8GB) DDR3 PC10600 (1333) REG ECC Memory

LSI SAS3081E-R

4 x 9 Drive RAIDZ2 VDEVs

Chelsio T580-SO-CR Dual 40Gbe NIC (Replication Connection to primary FreeNAS server)

Chelsio T520-SO-CR Dual 10Gbe NIC (Data connection to Plex server & media management server)

108TB RAW

The two machines are connected to each other via a dedicated 40Gbe link, no switch involved. Each of them is connected to my Plex server (Name: CinaPLEX) via a dedicated 10Gbe link, no switch involved. Each of them is connected to my media management server (Name: Bender) via a dedicated 10Gbe link, no switch involved. Each of them is connected to my internet vlan via 1Gbe and a Cisco 3560.

Please see a complete network diagram HERE.

I recently downgraded from Corral to 9.10.2 using a completely fresh install from scratch, not any type of upgrade. After the downgrade, I was doing some replications and noticed absolutely dismal speeds between the two FreeNAS servers, which are directly connected via Chelsio 40Gbe T580-SO-CR cards. I did not have this issue running Corral, or at least not to this extent.

Once I noticed the speed issue, I decided to fall back and do a bunch of testing. Here are the tests that I ran:

IPERF

First I did an iperf test between the two FreeNAS servers. These servers have host entries to make sure they are talking to each other across the 40Gbe link and not across another interface. Pinging each other from the command line shows the correct IP address, and I ran iperf bound to the specific address on the /30 on which they communicate:

- plexnas-ii to plexnas = 16.4 Gbits/sec

- plexnas to plexnas-ii = 15.7Gbits/sec

Next, I ran iperf tests between each FreeNAS server and the other two servers (Bender and CinaPLEX). Again, host entries make sure that these systems talk on the dedicated networks I have created for them and not another interface:

- plexnas-ii to bender = 6.32Gbits/sec

- bender to plexnas-ii = 6.52Gbits/sec

- plexnas to bender = 9.41Gbits/sec

- bender to plexnas = 9.42Gbits/sec

- plexnas-ii to cinaplex = 6.33Gbits/sec

- cinaplex to plexnas-ii = 6.52Gbits/sec

- plexnas to cinaplex = 9.41Gbits/sec

- cinaplex to plexnas = 9.42Gbits/sec

Now, I realize that iperf is a simple network test done in memory and does not take into account the speed of the drives, so then I ran the following tests:

(All data transfer tests were done across an NFS share)

[Writing data TO PlexNAS from CinaPLEX (10Gbe Link)]

Code:

    root@cinaplex:~# dd if=/dev/zero of=/mount/media/testfile.00 bs=1M count=1000000
    1000000+0 records in
    1000000+0 records out
    1048576000000 bytes (1.0 TB, 977 GiB) copied, 1098.51 s, 955 MB/s

[Reading data FROM PlexNAS to CinaPLEX (10Gbe Link)]

    root@cinaplex:~# dd of=/dev/null if=/mount/media/testfile.00 bs=1M count=1000000
    1000000+0 records in
    1000000+0 records out
    1048576000000 bytes (1.0 TB, 977 GiB) copied, 901.278 s, 1.2 GB/s

[Writing data TO PlexNASii from CinaPLEX (10Gbe Link)]

Code:

    root@cinaplex:~# dd if=/dev/zero of=/mount/media_backup/testfile.00 bs=1M count=1000000
    1000000+0 records in
    1000000+0 records out
    1048576000000 bytes (1.0 TB, 977 GiB) copied, 1415.89 s, 741 MB/s

[Reading data FROM PlexNASii to CinaPLEX (10Gbe Link)]

    root@cinaplex:~# dd of=/dev/null if=/mount/media_backup/testfile.00 bs=1M count=1000000
    1000000+0 records in
    1000000+0 records out
    1048576000000 bytes (1.0 TB, 977 GiB) copied, 1634.72 s, 641 MB/s

[Writing from PlexNAS TO PlexNASii (40Gbe Link)]

Code:

    [root@plexnas] /mnt# dd if=/dev/zero of=/mnt/test/testfile.00 bs=1M count=1000000
    610339+0 records in
    610338+0 records out
    639985778688 bytes transferred in 2685.119670 secs (238345347 bytes/sec)

[Reading from PlexNASii TO PlexNAS (40Gbe Link)]

    [root@plexnas] /mnt# dd of=/dev/zero if=/mnt/test/testfile.00 bs=1M count=1000000
    610339+0 records in
    610339+0 records out
    639986827264 bytes transferred in 1650.875549 secs (387665095 bytes/sec)

So, I concluded that the PlexNAS and PlexNAS-ii pools can read and write at least as fast as the speeds I see across the 10Gbe interfaces. I also concluded that the slower transfer speed to and from PlexNAS-ii across the 10Gbe links (shown in both the iperf results and the actual data transfers) is the result of a slower overall system.

However, what I cannot figure out is why the data transfer speed across the 40Gbe link is slower than across the 10Gbe links. If reading and writing to the pool are constrained by the pool configuration (i.e. I am maxing out the read/write speeds of the vdevs themselves), then I would expect AT LEAST the same speed across the 40Gbe link as across the 10Gbe links.
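To put the dd numbers and the iperf numbers in the same units, here is a rough Python sketch (not part of the original tests) that converts the byte counts and elapsed times from the dd output above into Gbit/s. The dictionary labels and the function name are just illustrative; the figures are copied from the results in this post, and 15.7 Gbit/s is the lower of the two iperf results on the 40Gbe link.

```python
# Byte counts and elapsed seconds copied from the dd output in this post.
DD_RUNS = {
    "CinaPLEX -> PlexNAS write (10Gbe)": (1048576000000, 1098.51),
    "PlexNAS -> CinaPLEX read (10Gbe)": (1048576000000, 901.278),
    "CinaPLEX -> PlexNAS-ii write (10Gbe)": (1048576000000, 1415.89),
    "PlexNAS-ii -> CinaPLEX read (10Gbe)": (1048576000000, 1634.72),
    "PlexNAS -> PlexNAS-ii write (40Gbe)": (639985778688, 2685.119670),
    "PlexNAS-ii -> PlexNAS read (40Gbe)": (639986827264, 1650.875549),
}

# Lower of the two iperf results measured on the 40Gbe link, in Gbit/s.
IPERF_40G_GBITS = 15.7


def gbits_per_sec(num_bytes, seconds):
    """Convert a dd byte count and elapsed time to gigabits per second."""
    return num_bytes * 8 / seconds / 1e9


for label, (num_bytes, seconds) in DD_RUNS.items():
    print(f"{label}: {gbits_per_sec(num_bytes, seconds):.2f} Gbit/s")

# Fraction of the iperf-measured 40Gbe rate that the NFS write actually reached.
write_40g = gbits_per_sec(*DD_RUNS["PlexNAS -> PlexNAS-ii write (40Gbe)"])
print(f"40Gbe NFS write uses {write_40g / IPERF_40G_GBITS:.0%} of the iperf rate")
```

Expressed this way, the NFS transfers over the 40Gbe link sit far below both the iperf result for that link and the NFS transfers over the 10Gbe links, which is the gap I am trying to explain.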

I have tried different MTU settings (jumbo frames and no jumbo frames), and neither the iperf results nor the file copy results changed.

Anyway, I am hoping that someone here has run into the same issue or may have a suggestion as to what to try next.

Many Thanks