Daisuke

Contributor

- Joined

- Jun 23, 2011

- Messages

- 1,041

I've been a FreeNAS/TrueNAS user since 2011 (check my first setup). This is my 3rd build, and I'm sharing my findings so others can get an idea of the components used.

Server Specs

Picking the server base canvas was easy: I'm a firm believer that using true enterprise server hardware pays off in the long term. Not to mention it is way cheaper than building your own server. I've had great results with my 2nd build, a Dell C2100, and its excellent reliability, so I decided to purchase a Dell R720xd from buildmyserver.com with the following specs:

- 2x E5-2620 v2 @ 2.10GHz CPU

- 16x 16GB 2Rx4 PC3-12800R DDR3-1600 ECC, for a total of 256GB RAM

- 2x 750W Platinum Power Supply

- 1x 2-Bay 2.5" Rear FlexBay, for SATA SSDs

- 1x 2 10GB RJ-45 + 2 1GB RJ-45 Network Daughter Card, for 10GB planned connectivity

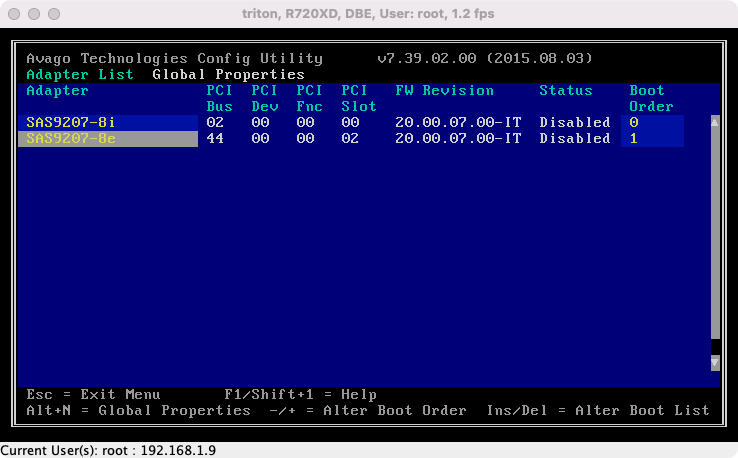

- 1x PERC H710 Mini, flashed to LSI 9207i firmware

- 1x PERC H810 PCIe, flashed to LSI 9207e firmware

- 1x NVIDIA Tesla P4 GPU, for Plex transcoding

- 1x DRAC7 Enterprise License, from eBay

Flashed PERC cards output in BIOS:

I did a lot of research on server sellers and decided to go with buildmyserver.com. If you call them directly, they will definitely go the extra mile to accommodate you by offering a better overall price and additional server customizations not available on their website. When I went to pick up the server in NYC, I was impressed by the warehouse size and how well they test the products you purchase from them.

Server Power Consumption

Picking CPUs and other server components with low power consumption was important, since I run my NAS 24/7, with no disk spin downs or other "power saving" settings which actually hurt overall server performance.

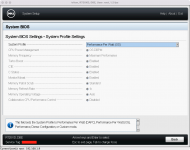

To lower the power consumption further, I changed the System BIOS Settings > System Profile Settings > System Profile to

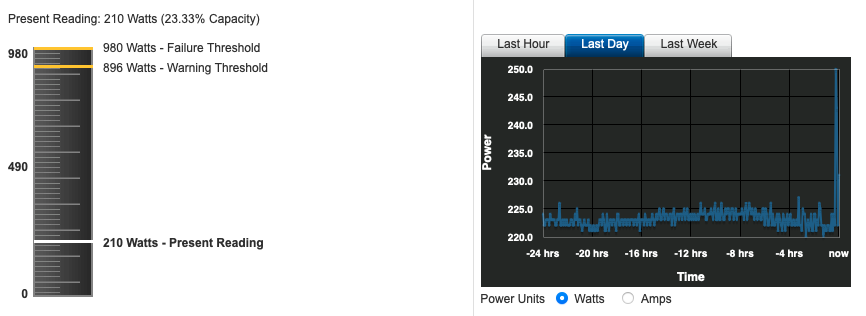

These changes resulted in a power consumption drop from 350 Watts average, to 225 Watts average (the spike you see is when I restarted the server, to take the BIOS screenshots):

Performance Per Watt (OS) and iDRAC Settings > Thermal > Thermal Base Algorithm to Minimum power (Performance per watt optimized).

These changes resulted in a power consumption drop from 350 Watts average, to 225 Watts average (the spike you see is when I restarted the server, to take the BIOS screenshots):

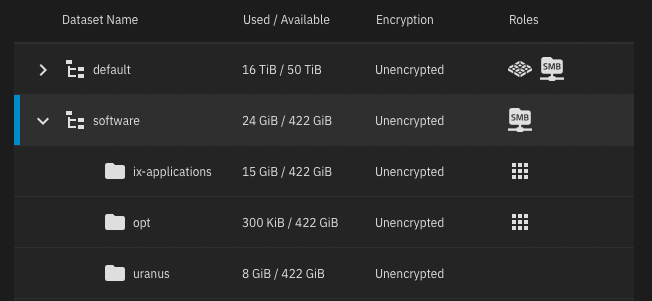
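To put the drop from 350 to 225 Watts in perspective, here is a small back-of-the-envelope calculation. It only uses the two averages quoted above and assumes the server really runs 24/7 for a 365-day year; the variable names are just for illustration.

```shell
#!/usr/bin/env bash
# Average draw before and after the BIOS/iDRAC changes (figures from the post).
before_watts=350
after_watts=225

# Absolute saving in Watts.
saved_watts=$((before_watts - after_watts))

# Percentage reduction with one decimal place (awk does the floating point math).
saved_percent=$(awk -v b="$before_watts" -v a="$after_watts" \
    'BEGIN { printf "%.1f", (b - a) * 100 / b }')

# Energy saved over a year of 24/7 operation, in kWh (365-day year assumed).
saved_kwh_year=$((saved_watts * 24 * 365 / 1000))

echo "Saved: ${saved_watts} W (${saved_percent}%), about ${saved_kwh_year} kWh per year"
```

Multiply the yearly kWh figure by your own electricity rate to estimate the cost difference.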

Server Storage

I used CMR enterprise grade hard drives and consumer grade SSDs. I will probably upgrade to Samsung PM883 SSD series, in the future.

- 1x Samsung 870 EVO 250GB SATA SSD for Scale OS, connected to internal USB port with a SKL Tech SATA to USB connector

- 12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB for default pool hosting media files

- 2x Samsung 870 EVO 500GB SATA SSD for software pool hosting Kubernetes applications

JBOD Storage

I also did a lot of research on future storage expandability. Originally I was looking at Dell PowerVault and Compellent, but decided to go with NetApp because of their excellent reliability, low price and very low noise level. I was also pleasantly surprised to notice that the DS4246 enclosures do not increase the noise level of the R720xd, which is already very quiet.

- 2x NetApp DS4246 enclosure

- 12x HGST Ultrastar He8 Helium (HUH728080ALE601) 8TB for default pool expandability

- 1x SFF-8436 to SFF-8088 cable, connecting the external PERC H810 to first DS4246 enclosure

- 1x SFF-8436 to SFF-8436 cable, connecting the two DS4246 enclosures

Network Integration

I'm currently using Unifi products for my home network and will eventually upgrade to 10GB. I also use the free Cloudflare service as a frontend, combined with several Raspberry Pis (DietPi OS), allowing SSL encrypted world access to my homelab and local DNS servers.

- 1x UDM SE all-in-one router and security gateway

- 1x USW-Pro-24-Poe switch

- 1x Raspberry Pi 4B running Certbot + Nginx Proxy in upstream mode linked to a Cloudflare SSL domain, for easy access to all applications running on TrueNAS

- 2x Raspberry Pi 4B running Pihole + Unbound, for network-wide protection and an ad-free experience on the local network or through Unifi's integrated VPN

Scale Configuration

I use the default Scale settings, with no customizations. The only service configuration change I applied is the

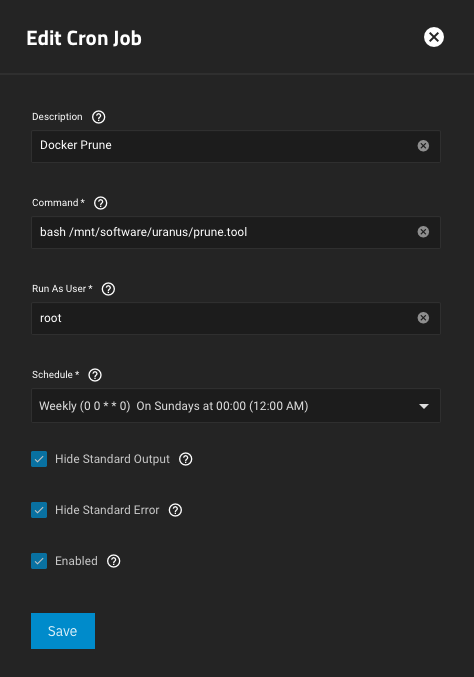

server signing = mandatory into SMB service auxiliary parameters. The ix-applications dataset is deployed into software pool, which has Auto TRIM pool option enabled due to SSDs usage.I have a cron job pruning all Docker leftovers:

Angelfish release:

Bluefin release:

Angelfish release:

Code:

    # cat /mnt/software/uranus/prune.tool
    #!/usr/bin/env bash
    if [ $EUID -gt 0 ]; then
        echo 'Run prune.tool as root.'
        exit 1
    fi
    docker container prune -f --filter 'until=5m'
    docker image prune -af --filter 'until=1h'
    docker system prune -af --volumes
    docker volume prune -f

Bluefin release:

Code:

    # cat /mnt/software/uranus/prune.tool
    #!/usr/bin/env bash
    if [ $EUID -gt 0 ]; then
        echo 'Run prune.tool as root.'
        exit 1
    fi
    midclt call container.prune '
    {
        "remove_stopped_containers": true,
        "remove_unused_images": true
    }
    '

Applications Backup and Updates

I'm using the @truecharts applications instead of the official ones, because their initial application settings are consistent. I like being welcomed by the same setup process for any new application I decide to deploy. I set all application names to lower-case alphanumeric values to avoid possible backup issues, explained below (e.g. sabnzbd).

As we all know, there is currently no application backup process implemented in Scale, due to the nature of Kubernetes. So I created my own backup system, using a shell script I run manually, for now. Basically, the script stops the application, copies the files from a legacy mount point, then triggers a git push to a private GitHub repository. The restore process is similar. I also created a script which allows me to update all TrueCharts applications, every night, through a cron job.

In order to implement the scripts, I created two datasets in the software pool:

- opt - stores temporary files used by several applications (e.g. Sabnzbd)

- uranus - stores the application backups, linked to a private GitHub repo (named from my Scale server hostname, to avoid insanities)
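The lower-case alphanumeric naming rule mentioned above is easy to check before deploying or backing up an application. The function below is only a sketch: is_valid_app_name is a hypothetical helper, not part of the attached scripts, and the sample names are made up.

```shell
#!/usr/bin/env bash
# Return 0 when the name contains only lower-case ASCII letters and digits.
is_valid_app_name() {
    # Force the C locale so that [a-z] cannot match upper-case letters.
    local LC_ALL=C
    [[ "$1" =~ ^[a-z0-9]+$ ]]
}

# Try a few sample names (made up for this example).
for name in sabnzbd Sabnzbd my-app plex2; do
    if is_valid_app_name "$name"; then
        echo "${name}: ok"
    else
        echo "${name}: rename before backing up"
    fi
done
```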

Disclaimer

IN NO EVENT SHALL "MYSELF (Daisuke)" BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES, INCLUDING LOST PROFITS, ARISING OUT OF THE USE OF THIS SOFTWARE AND ITS DOCUMENTATION, EVEN IF "MYSELF (Daisuke)" HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. THE SOFTWARE AND ACCOMPANYING DOCUMENTATION, IF ANY, PROVIDED HEREUNDER IS PROVIDED "AS IS". "MYSELF (Daisuke)" HAVE NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

The scripts are attached below. If there is enough interest, I will create a public GitHub repo, allowing the scripts to be imported as a submodule. This will also allow collaborators to provide script enhancements for the community. What I provided here covers my homelab needs.

backup.tool

It is important that you first back up and restore a test application, just to get the gist of the overall process. Once you are comfortable and have validated that your application is restored and operates as expected, you can proceed to back up and restore another application. Always validate that your backup/restore process is successful, for each application. Kubernetes is very sensitive to volume permissions, etc.

Execute all commands as root user. You need to create your GitHub repo and import into it a new SSH key used for passwordless connectivity, then you can clone your new repo into uranus dataset:

Code:

    $ sudo -i
    # pwd
    /root
    # ssh-keygen -t ed25519 -C 'youremail@example.com'
    # ls -lh /root/.ssh
    total 16K
    -rw------- 1 root root 411 Jun 26 13:58 id_ed25519
    -rw-r--r-- 1 root root 103 Jun 26 13:58 id_ed25519.pub

With the SSH key created and imported into the GitHub repo, you can now clone the repo locally. If you don't get any errors, that means you properly imported your locally created SSH key.

Code:

    # cd /mnt/software
    # git clone git@github.com:yourusername/uranus.git
    # cd /mnt/software/uranus
    # git status

Add your SSH keys and backup.tool file into repo (yes, it should not be an executable file):

Code:

    # pwd
    /mnt/software/uranus
    # install -dm 0700 /mnt/software/uranus/root/.ssh
    # cp -a /root/.ssh/* /mnt/software/uranus/root/.ssh/
    # ls -lh /mnt/software/uranus/root/.ssh/id_ed25519*
    -rw------- 1 root root 411 Jun 26 13:58 id_ed25519
    -rw-r--r-- 1 root root 103 Jun 26 13:58 id_ed25519.pub
    # ls -lh backup.tool
    -rw-r--r-- 1 root root 3.8K Jul 22 10:21 backup.tool

Configure .gitignore to avoid backing up useless files, and push the changes:

Code:

    # cat > /mnt/software/uranus/.gitignore << 'EOF'
    .DS_Store
    *.pid
    logs/
    EOF
    # git push

Script Usage

Familiarize yourself with the script options, by running bash backup.tool --help. To create a backup for sabnzbd application, run:

Code:

    # pwd
    /mnt/software/uranus
    # bash backup.tool --help
    # bash backup.tool -d uranus -p software -u apps -a sabnzbd

To restore an application backup from your local repo, run:

Code:

    # pwd
    /mnt/software/uranus
    # bash backup.tool -d uranus -p software -u apps -a sabnzbd -s

You can create multiple backup versions, by using git branches.

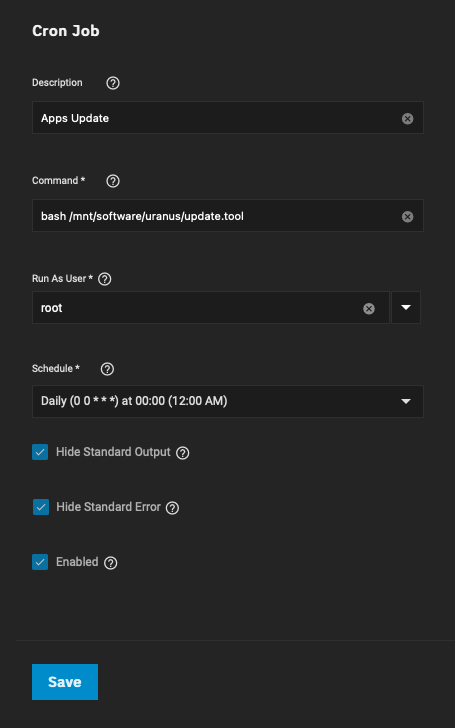

update.tool (simple automation alternative to truetool)

To leverage an application API as an example, the script presumes you have sabnzbd installed, amongst other TrueCharts applications. If you don't use this application, you can ignore any file customizations or simply edit the script to use another application API. Once the script is executed, either manually or with a cron job, it starts updating all applications with updates available. If the sabnzbd application has any downloads in progress, it waits until they are finished, then updates the application. The script also deletes any file leftovers present in the /mnt/software/opt/downloads directories.

Update the domain and apikey settings, then copy update.tool file into repo (yes, it should not be an executable file):

Code:

    # pwd
    /mnt/software/uranus
    # ls -lh update.tool
    -rw-r--r-- 1 root root 1.2K Jul 22 11:38 update.tool
    # git push
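The "wait until downloads are finished" step could look roughly like the sketch below. This is not the code from update.tool. It assumes SABnzbd is reachable over HTTPS at the /api path and reads the noofslots field from the mode=queue API response; the function names and poll_seconds are made up for the example, and the domain and apikey values are placeholders.

```shell
#!/usr/bin/env bash
# Placeholder settings; replace with your own domain and API key.
domain='sabnzbd.example.com'
apikey='changeme'
poll_seconds=10

# Fetch the SABnzbd queue as JSON (URL layout is an assumption, adjust as needed).
fetch_queue() {
    curl -fsS "https://${domain}/api?mode=queue&output=json&apikey=${apikey}"
}

# Print the number of queued downloads, taken from the "noofslots" field.
queue_slots() {
    fetch_queue | grep -o '"noofslots": *[0-9]*' | grep -o '[0-9][0-9]*$'
}

# Block until the queue is empty, checking every poll_seconds seconds.
# Note: an empty or failed response is treated as an empty queue.
wait_for_downloads() {
    local slots
    while true; do
        slots="$(queue_slots)"
        [ "${slots:-0}" -eq 0 ] && break
        sleep "$poll_seconds"
    done
}
```

Calling wait_for_downloads right before the upgrade command would give the same overall behaviour. A stricter version would retry instead of continuing when the API call fails.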

Script Usage

Manually test the update process:

Code:

    # bash /mnt/software/uranus/update.tool

To automate the update process, create a cron job in Scale:

update.tool Update

I released a small update to the script, which fixes the updates failing for some TrueCharts apps.

Applied changes:

Code:

    $ diff -Naur update-old.tool update.tool
    --- update-old.tool 2022-12-02 09:42:15.000000000 -0500
    +++ update.tool 2022-12-02 09:49:34.000000000 -0500
    @@ -22,6 +22,7 @@
     mapfile -t apps < <(cli -m csv -c 'app chart_release query name,update_available' | grep true | cut -d ',' -f 1)
     if [ ${#apps[@]} -gt 0 ]; then
         for i in "${apps[@]}"; do
    +        i="${i%%[[:cntrl:]]}"
             echo -n "Updating ${i}... "
             if [ "${i,,}" == "sabnzbd" ]; then
                 while true; do
    @@ -32,7 +33,7 @@
                     sleep 10
                 done
             fi
    -        cli -c "app chart_release upgrade release_name=$i" > /dev/null 2>&1
    +        cli -c 'app chart_release upgrade release_name="'$i'"' > /dev/null 2>&1
             echo 'OK'
         done
     fi
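To see what the two changed lines do, here is a small standalone demonstration. It assumes the problem was a stray trailing control character (a carriage return is used as the example) on the release name; it does not call the Scale cli and only builds and prints the command string.

```shell
#!/usr/bin/env bash
# A release name with a stray trailing carriage return (assumed example of
# the kind of control character the fix removes).
i=$'sabnzbd\r'
echo "before: ${#i} characters"

# Strip one trailing control character, as in the patched script.
i="${i%%[[:cntrl:]]}"
echo "after: ${#i} characters"

# Build the upgrade command string the same way the patched line does,
# so the release name ends up wrapped in double quotes.
upgrade_cmd='app chart_release upgrade release_name="'$i'"'
echo "$upgrade_cmd"
```

Run as-is, this reports 8 characters before the strip and 7 after, followed by the command string with the cleaned name in double quotes.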

Re-download the update.tool.zip package.