Mastakilla

Hi all,

A few days ago, I had some very unpleasant events with my TrueNAS server. When I woke up, before I had even touched my NAS or computer, I noticed that my NAS had rebooted by itself. I quickly turned on my computer to log in to the IPMI and check what was happening, and a few minutes later (I think before I had managed to log in to the IPMI) the NAS rebooted by itself again. Later I found in the logs that the NAS had rebooted by itself 6 times that morning. This has never happened before in about a year of usage (with monthly scrubs)...

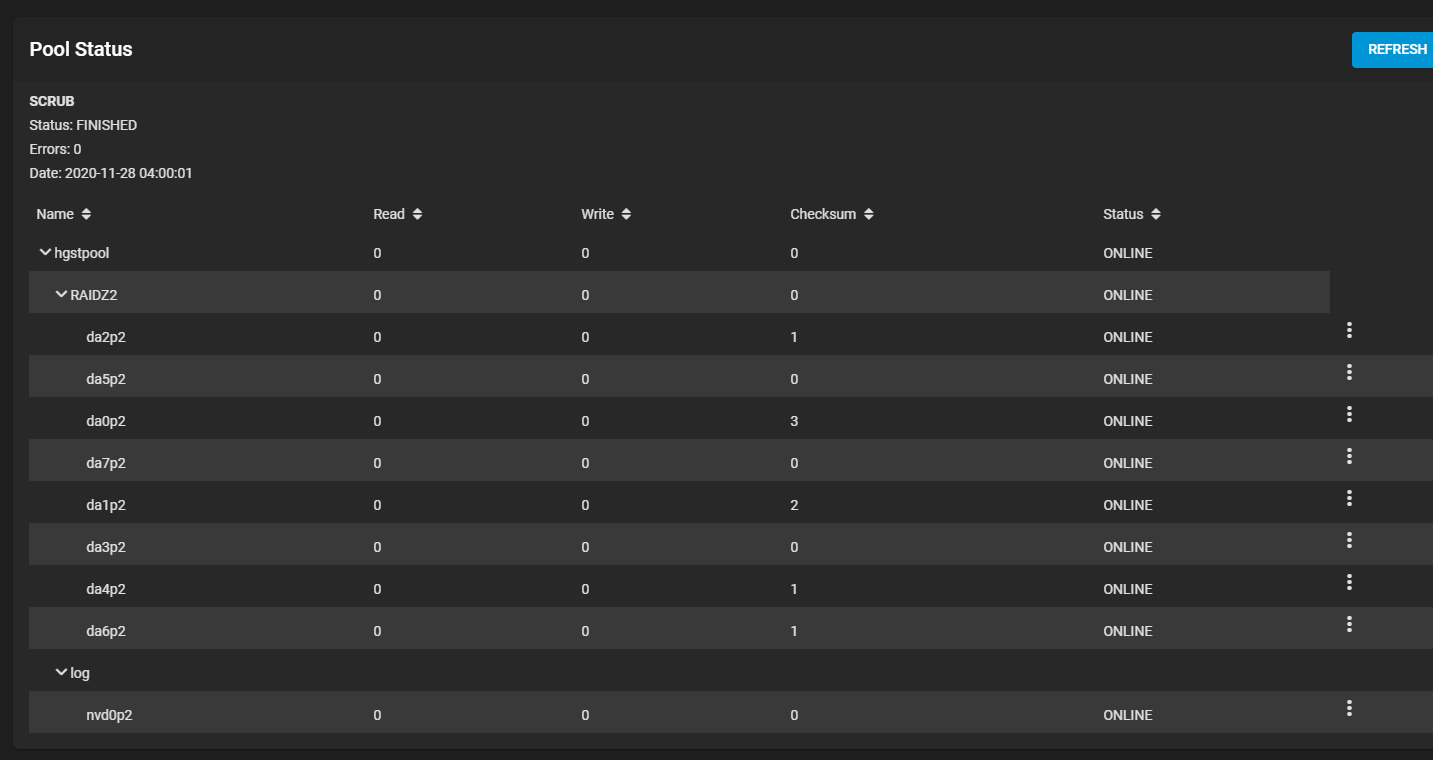

Also, at first it claimed the pool was healthy, but then it suddenly claimed the pool was unhealthy, and a day or a few days later (perhaps after some more graceful reboots) the pool was suddenly healthy again?? While it was temporarily unhealthy, I was able to grab this screenshot:

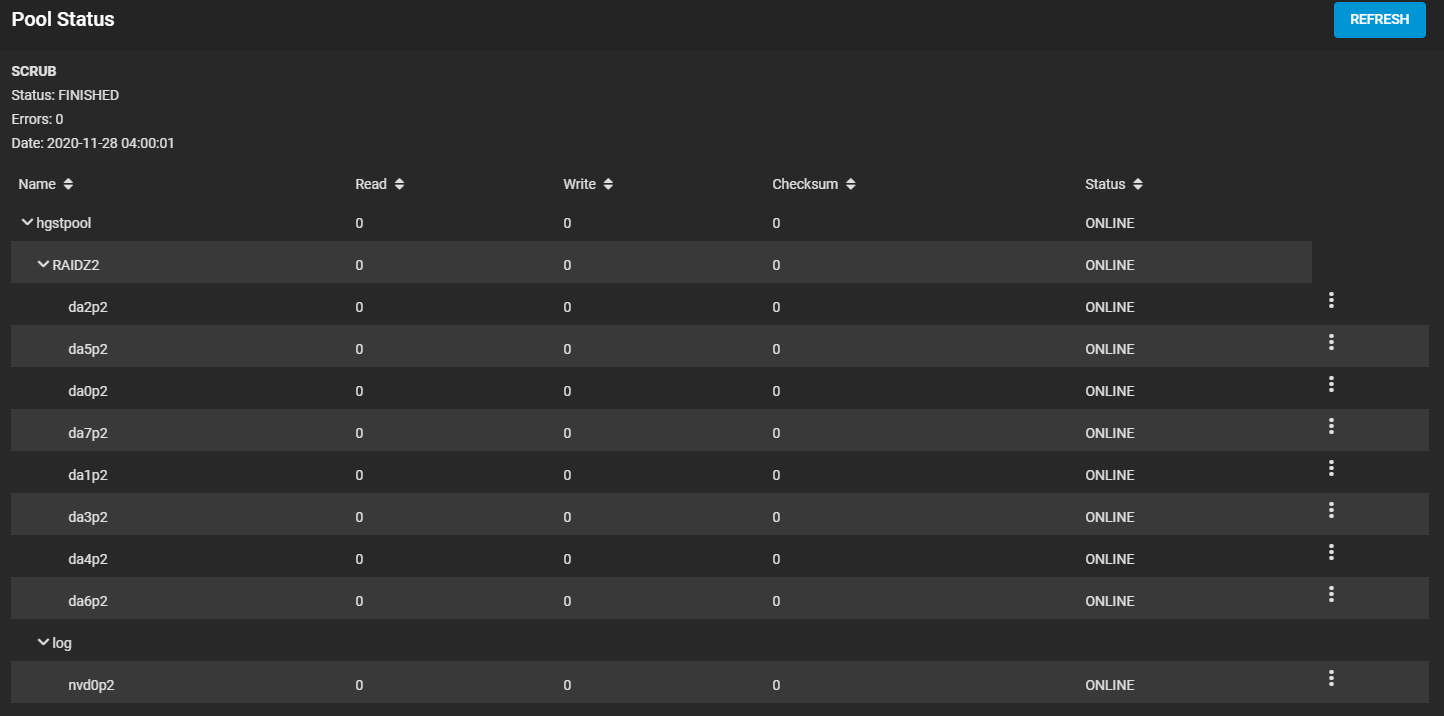

However, when I look at the status of this same pool (without "changing" or "fixing" anything), it now says

Also, in the alerts there was first a critical error about my pool being unhealthy, which disappeared again by itself a day or a few days later??

- The checksum errors occur on multiple disks, so it seems unlikely to me that the HDDs themselves are dying

- No SMART errors on any of the disks. No issues after a short self test either.

- I couldn't find any logs of the scrub. I know it starts at 4h00 for hgstpool, but I don't know how long it runs or whether it resumes after a graceful or ungraceful reboot. Why isn't there a log of the scrub process??

- According to my alert emails, the reboots occurred at 8h21, 9h18, 10h39 and 10h44. However, in /var/log/messages I can see ungraceful reboots at 8h17, 8h21, 9h14, 9h18, 10h39 and 10h44.

- In my alert emails, I can see messages like "Pool hgstpool state is ONLINE: One or more devices has experienced an unrecoverable error. An attempt was made to correct the error. Applications are unaffected." in an email at 9h35, which was then cleared at both 10h40 and 10h45 (no idea how it got cleared. I certainly didn't want it to get cleared, I wanted to investigate it! Also no idea how it can get cleared twice??). Then the same message arrived as a "new alert" at 12h48 (I suppose this means that the scrub was still running?), which got cleared at 16h07. And then there was one more new alert with the same message at 16h12, for which I never received a cleared email.

- I've checked my IPMI event log, but it doesn't contain anything around those reboots.

- I've thoroughly studied my /var/log/messages and /var/log/console.log, but couldn't find anything that doesn't also appear during a normal, graceful boot. However, there is nothing in there about a shutdown around those times (which tells me that these were not graceful reboots).

- /var/log/messages does contain the following recurring error messages (also with graceful reboots), but I doubt this is related

- In /var/log/middlewared.log I did find multiple entries of failures to send emails, timestamped 14 seconds before the first entries of /var/log/messages (so I guess before the network interface was brought up, so no wonder it fails... Could this be a bug perhaps?)

Code:

    [2020/11/28 08:17:24] (DEBUG) middlewared.setup():1641 - Timezone set to Europe/Brussels
    [2020/11/28 08:17:25] (DEBUG) middlewared.setup():2834 - Certificate setup for System complete
    [2020/11/28 08:17:25] (WARNING) MailService.send_raw():432 - Failed to send email: [Errno 8] Name does not resolve
    Traceback (most recent call last):
      File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/mail.py", line 407, in send_raw
        server = self._get_smtp_server(config, message['timeout'], local_hostname=local_hostname)
      File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/mail.py", line 454, in _get_smtp_server
        server = smtplib.SMTP(
      File "/usr/local/lib/python3.8/smtplib.py", line 253, in __init__
        (code, msg) = self.connect(host, port)
      File "/usr/local/lib/python3.8/smtplib.py", line 339, in connect
        self.sock = self._get_socket(host, port, self.timeout)
      File "/usr/local/lib/python3.8/smtplib.py", line 308, in _get_socket
        return socket.create_connection((host, port), timeout,
      File "/usr/local/lib/python3.8/socket.py", line 787, in create_connection
        for res in getaddrinfo(host, port, 0, SOCK_STREAM):
      File "/usr/local/lib/python3.8/socket.py", line 918, in getaddrinfo
        for res in _socket.getaddrinfo(host, port, family, type, proto, flags):
    socket.gaierror: [Errno 8] Name does not resolve
    [2020/11/28 08:17:25] (ERROR) middlewared.job.run():373 - Job <bound method accepts.<locals>.wrap.<locals>.nf of <middlewared.plugins.mail.MailService object at 0x81cb7afa0>> failed
    Traceback (most recent call last):
      File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/mail.py", line 407, in send_raw
        server = self._get_smtp_server(config, message['timeout'], local_hostname=local_hostname)
      File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/mail.py", line 454, in _get_smtp_server
        server = smtplib.SMTP(
      File "/usr/local/lib/python3.8/smtplib.py", line 253, in __init__
        (code, msg) = self.connect(host, port)
      File "/usr/local/lib/python3.8/smtplib.py", line 339, in connect
        self.sock = self._get_socket(host, port, self.timeout)
      File "/usr/local/lib/python3.8/smtplib.py", line 308, in _get_socket
        return socket.create_connection((host, port), timeout,
      File "/usr/local/lib/python3.8/socket.py", line 787, in create_connection
        for res in getaddrinfo(host, port, 0, SOCK_STREAM):
      File "/usr/local/lib/python3.8/socket.py", line 918, in getaddrinfo
        for res in _socket.getaddrinfo(host, port, family, type, proto, flags):
    socket.gaierror: [Errno 8] Name does not resolve
    During handling of the above exception, another exception occurred:
    Traceback (most recent call last):
      File "/usr/local/lib/python3.8/site-packages/middlewared/job.py", line 361, in run
        await self.future
      File "/usr/local/lib/python3.8/site-packages/middlewared/job.py", line 399, in __run_body
        rv = await self.middleware.run_in_thread(self.method, *([self] + args))
      File "/usr/local/lib/python3.8/site-packages/middlewared/utils/run_in_thread.py", line 10, in run_in_thread
        return await self.loop.run_in_executor(self.run_in_thread_executor, functools.partial(method, *args, **kwargs))
      File "/usr/local/lib/python3.8/site-packages/middlewared/utils/io_thread_pool_executor.py", line 25, in run
        result = self.fn(*self.args, **self.kwargs)
      File "/usr/local/lib/python3.8/site-packages/middlewared/schema.py", line 977, in nf
        return f(*args, **kwargs)
      File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/mail.py", line 276, in send
        return self.send_raw(job, message, config)
      File "/usr/local/lib/python3.8/site-packages/middlewared/schema.py", line 977, in nf
        return f(*args, **kwargs)
      File "/usr/local/lib/python3.8/site-packages/middlewared/plugins/mail.py", line 436, in send_raw
        raise CallError(f'Failed to send email: {e}')
    middlewared.service_exception.CallError: [EFAULT] Failed to send email: [Errno 8] Name does not resolve
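To illustrate my guess about the failed emails: the traceback ends with `socket.getaddrinfo` raising `socket.gaierror`, which means the SMTP host name could not be resolved at that moment. Below is a minimal Python sketch of checking (and briefly waiting for) name resolution before attempting to send. This is not middlewared's actual code. The helpers `can_resolve` and `wait_for_dns`, the retry count and the delay are all my own hypothetical choices.

```python
import socket
import time


def can_resolve(host, port=25, resolver=socket.getaddrinfo):
    """Return True if `host` resolves, False on the gaierror seen in the log."""
    try:
        # Same call shape as socket.create_connection uses in the traceback.
        resolver(host, port, 0, socket.SOCK_STREAM)
        return True
    except socket.gaierror:
        return False


def wait_for_dns(host, attempts=5, delay=2.0,
                 resolver=socket.getaddrinfo, sleep=time.sleep):
    """Poll until `host` resolves or the attempts run out; return the outcome."""
    for attempt in range(attempts):
        if can_resolve(host, resolver=resolver):
            return True
        if attempt < attempts - 1:
            sleep(delay)  # give the network/DNS time to come up after boot
    return False
```

If something like `wait_for_dns("smtp.example.com")` (a placeholder host name) returned False shortly after boot but True a minute later, that would support the idea that the mail job simply ran before the network was up.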

I did proper burn-in testing. Since then I have made 2 changes to my hardware:

- I've replaced my 32GB of ECC RAM with 64GB of ECC RAM and redid the Memtest86 testing (this was about 1 month ago, so this might be the first scrub with the new RAM. I did however test the ECC functionality of this RAM using Memtest86, so it should properly report ECC errors in /var/log/messages when they occur, and it didn't report any)

- I've added an Optane drive that I use as SLOG (this was done some months ago, so the server certainly has undergone some scrubs since then)

Does anyone know which log files or screens I should check to dig deeper? Or how I could best "prepare" (like setting a verbose flag somewhere) a test-scrub to see if the issues re-occur?
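One way I could imagine preparing a test scrub, as a rough sketch only: since I couldn't find a scrub log, a small Python script could append the output of `zpool status -v` to a file every few minutes and fsync it, so that the last snapshot survives an ungraceful reboot. The helper names, the 5-minute interval and the log path are my own assumptions, not something TrueNAS provides.

```python
import os
import subprocess
import time
from datetime import datetime


def status_snapshot(pool, run=subprocess.run):
    """Return the `zpool status -v` output for one pool, with a timestamp header."""
    result = run(["zpool", "status", "-v", pool], capture_output=True, text=True)
    stamp = datetime.now().isoformat(timespec="seconds")
    return f"=== {stamp} (exit {result.returncode}) ===\n{result.stdout}{result.stderr}"


def log_scrub(pool, logfile, interval=300, rounds=None,
              run=subprocess.run, sleep=time.sleep):
    """Append a snapshot every `interval` seconds; rounds=None loops until interrupted."""
    done = 0
    while rounds is None or done < rounds:
        with open(logfile, "a") as fh:
            fh.write(status_snapshot(pool, run=run) + "\n")
            fh.flush()
            os.fsync(fh.fileno())  # push to disk so a sudden reboot loses little
        done += 1
        if rounds is None or done < rounds:
            sleep(interval)
```

I would start it just before the scrub, for example `log_scrub("hgstpool", "/root/scrub-hgstpool.log")` (hypothetical path), and afterwards compare the last snapshot before each reboot with the first one after it.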

Thanks!
