Because I had some crashes / reboots during a scrub that reported (repaired) CKSUM errors, I wanted to know whether the scrub errors occurred around the same time as the reboots. For this, I've created two very basic scripts that run 'zpool status' every x seconds and log the output with timestamps to a logfile.

More details on the issues I had (apparently caused by a broken CPU) can be found in this thread on www.truenas.com:

Multiple ungraceful reboots and a temporarily unhealthy pool on "scrub-day"

Features:

- Runs 'zpool status' regularly (default = every 5 seconds) and compares it with the previous run

- Logs unexpected changes (for example CKSUM errors) with a timestamp

- Does not log status changes continuously (like how much % is completed)

- Does log status changes at fixed intervals (default = every 10 minutes)

- Logs the scrub start and completion with a timestamp

- Resumes logging after a crash / reboot and adds a log entry with a timestamp when this happens. The scrub itself is actually restarted as well when this happens.
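The comparison behind the first three features can be sketched like this. It is only a minimal illustration, not the full script: `strip_progress` and `status_changed` are helper names made up for this example, the sample outputs are shortened and modeled on the log example in this post (`sample-disk` is a placeholder), and a plain string comparison stands in for the `diff` that log_scrub.bash uses.

```shell
#!/bin/bash
# Sketch only: strip_progress and status_changed are hypothetical helper names.

# Remove the parts of a 'zpool status' output that change on every run.
strip_progress() {
    grep -Ev 'scanned at.*issued at.*total' |
        sed -E 's/[0-9]?[0-9]\.[0-9][0-9]% done, (.* to go|no estimated completion time)$//'
}

# Succeed (exit 0) if the two outputs differ in more than scrub progress.
status_changed() {
    [ "$(printf '%s\n' "$1" | strip_progress)" != "$(printf '%s\n' "$2" | strip_progress)" ]
}

# Shortened sample outputs ('sample-disk' is a placeholder device name).
before='scan: scrub in progress since Mon Dec 7 15:06:35 2020
32.6T scanned at 1.35G/s, 28.4T issued at 1.18G/s, 47.5T total
0B repaired, 59.89% done, 04:36:05 to go
sample-disk ONLINE 0 0 0'

progress_only='scan: scrub in progress since Mon Dec 7 15:06:35 2020
32.6T scanned at 1.35G/s, 28.5T issued at 1.18G/s, 47.5T total
0B repaired, 60.04% done, 04:35:02 to go
sample-disk ONLINE 0 0 0'

cksum_error='scan: scrub in progress since Mon Dec 7 15:06:35 2020
32.6T scanned at 1.35G/s, 28.5T issued at 1.18G/s, 47.5T total
24K repaired, 60.04% done, 04:35:02 to go
sample-disk ONLINE 0 0 1 (repairing)'

status_changed "${before}" "${progress_only}" && echo "progress only: logged" || echo "progress only: ignored"
status_changed "${before}" "${cksum_error}" && echo "CKSUM error: logged" || echo "CKSUM error: ignored"
```

With these samples, the sketch prints "progress only: ignored" followed by "CKSUM error: logged": a change in the percentage or the remaining time alone is filtered out, while a change in the repaired amount or in a CKSUM counter is not.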

/root/bin/start_scrub_with_logging.bash

This script initiates the scrub with logging. It accepts 1 argument: "The name of the pool". It internally calls /root/bin/log_scrub.bash to do the logging.

Code:

    #!/bin/bash

    declare POOL="$1"
    declare LATEST_ZPOOL_STATUS_FILE="/root/bin/latest_zpool_status"

    [[ -n "${POOL}" ]] || { echo "Usage: $0 name_of_your_pool"; exit 1; } # The pool name is required

    rm -f "${LATEST_ZPOOL_STATUS_FILE}" # Cleanup old zpool status outputs
    zpool scrub "${POOL}" >/dev/null 2>&1 || { echo "Could not start a scrub on '${POOL}'. Is this a valid pool name, and is no scrub running already?"; exit 1; } # Initiate the scrub
    /root/bin/log_scrub.bash "${POOL}" # Call the logging script

/root/bin/log_scrub.bash

This script does the actual logging. By adding it as a post-init script, it will also resume logging after a crash / reboot. It also accepts 1 argument: "The name of the pool"

Code:

    #!/bin/bash

    declare POOL="$1"
    declare REFRESH_SPEED="5"   # Seconds between two 'zpool status' runs
    declare COUNTER="0"
    declare STATUS_EVERY="120"  # Log a status line every 120 runs (120 x 5 seconds = 10 minutes)
    declare TIMESTAMP
    declare SCRIPT_RESUMED="false"
    declare LATEST_ZPOOL_STATUS_FILE="/root/bin/latest_zpool_status"
    declare LATEST_ZPOOL_STATUS
    declare PREVIOUS_ZPOOL_STATUS

    [[ -n "${POOL}" ]] || { echo "Usage: $0 name_of_your_pool"; exit 1; } # The pool name is required

    # Remove the progress information that changes on every run, so that only unexpected changes remain
    strip_progress() {
        grep -Ev 'scanned at.*issued at.*total' | sed -E 's/[0-9]?[0-9]\.[0-9][0-9]% done, (.* to go|no estimated completion time)$//g'
    }

    # Check if the script was called by start_scrub_with_logging.bash (which performs a cleanup of zpool status files) or by post-init
    [[ -f "${LATEST_ZPOOL_STATUS_FILE}" ]] && SCRIPT_RESUMED="true"

    # Do the below until the scrub is completed
    while true; do
        TIMESTAMP=$(date +"%Y-%m-%d %H:%M:%S") # Store a timestamp
        LATEST_ZPOOL_STATUS="$(zpool status "${POOL}" 2>&1)"

        if [[ "${SCRIPT_RESUMED}" == "true" ]] && echo "${LATEST_ZPOOL_STATUS}" | grep -q ": no such pool"; then
            # After a reboot the pool may not be available yet, so keep waiting instead of exiting
            (( COUNTER % STATUS_EVERY )) || echo "${TIMESTAMP} Pool is not yet online. Perhaps you still need to unlock it?"
        elif echo "${LATEST_ZPOOL_STATUS}" | grep -q ": no such pool"; then
            echo "'${POOL}' is not a valid pool name."
            exit 1
        else
            [[ -f "${LATEST_ZPOOL_STATUS_FILE}" ]] && PREVIOUS_ZPOOL_STATUS="$(cat "${LATEST_ZPOOL_STATUS_FILE}")" # rotate the zpool status file if it exists
            echo "${LATEST_ZPOOL_STATUS}" >"${LATEST_ZPOOL_STATUS_FILE}" # create a new zpool status file

            if [[ -n "${PREVIOUS_ZPOOL_STATUS}" ]]; then
                # if this is not the first pass through the loop, or the script is resuming
                [[ "${SCRIPT_RESUMED}" == "true" ]] && { SCRIPT_RESUMED="false"; echo "${TIMESTAMP} Script resumed! (after reboot?)"; echo "${LATEST_ZPOOL_STATUS}" | sed -E 's/^/\t\t /'; } # if the script resumed, then add this to the log
                echo "${LATEST_ZPOOL_STATUS}" | grep -q 'scan: *scrub in progress' || break # if the scrub is completed, then 'break' the loop

                if ! diff <(echo "${LATEST_ZPOOL_STATUS}" | strip_progress) <(echo "${PREVIOUS_ZPOOL_STATUS}" | strip_progress) >/dev/null; then
                    # if there is an unexpected difference between the zpool status outputs
                    echo "${TIMESTAMP} Something happened!"
                    echo "${LATEST_ZPOOL_STATUS}" | sed -E 's/^/\t\t /'
                fi

                # Log a status update at a fixed interval
                (( COUNTER % STATUS_EVERY )) || echo "${TIMESTAMP} Status = $(echo "${LATEST_ZPOOL_STATUS}" | grep -E 'scanned at.*issued at.*total'),$(echo "${LATEST_ZPOOL_STATUS}" | grep -E 'repaired.*done.*')"
            else
                # if this is the first pass through the loop and the script is not resuming
                echo "${LATEST_ZPOOL_STATUS}" | grep -q 'scan: *scrub in progress' || { echo "${TIMESTAMP} Scrub not running. Nothing to log..."; rm -f "${LATEST_ZPOOL_STATUS_FILE}"; exit 1; } # Exit if scrub is not running
                echo "${TIMESTAMP} Scrub initiated!"
                echo "${LATEST_ZPOOL_STATUS}" | sed -E 's/^/\t\t /'
            fi
        fi

        (( COUNTER++ )) # increase the counter
        sleep "${REFRESH_SPEED}" # sleep
    done

    # Scrub completion
    echo "${TIMESTAMP} Scrub completed!"
    sed -E 's/^/\t\t /' "${LATEST_ZPOOL_STATUS_FILE}"
    rm -f "${LATEST_ZPOOL_STATUS_FILE}"
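How the two defaults combine into the 10 minute status interval can be checked with a little arithmetic. The sketch below reuses the variable names and default values from log_scrub.bash; `should_log_status` is a helper name made up for this illustration (the script itself does this test inline with the counter).

```shell
#!/bin/bash
REFRESH_SPEED=5     # seconds between two runs (default in log_scrub.bash)
STATUS_EVERY=120    # log a status line every 120 runs (default in log_scrub.bash)

echo "Status interval: $(( REFRESH_SPEED * STATUS_EVERY )) seconds"

# Hypothetical helper: succeeds when the run counter is a multiple of STATUS_EVERY.
should_log_status() {
    [ $(( $1 % STATUS_EVERY )) -eq 0 ]
}

for counter in 0 1 119 120 240; do
    should_log_status "${counter}" && echo "run ${counter}: status logged"
done
```

This prints a status interval of 600 seconds and reports runs 0, 120 and 240 as logged. In the log example the status lines are about 10 minutes and 3 seconds apart, presumably because every pass through the loop also spends a little time running 'zpool status' on top of the 5 second sleep.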

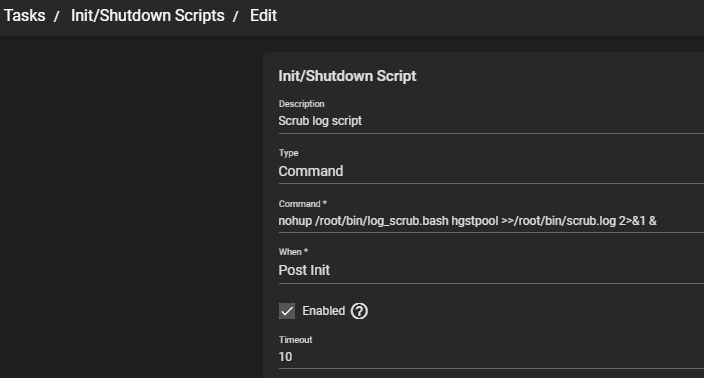

Resume logging after reboot

To resume logging after a reboot, add the log_scrub.bash script as a post-init task.

Command:

Code:

nohup /root/bin/log_scrub.bash name_of_your_pool >>/root/bin/scrub.log 2>&1 &
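The reason the same script can be used both for a fresh start and for a resume is the saved status file: start_scrub_with_logging.bash deletes it before a new scrub, and log_scrub.bash rewrites it on every run, so finding it at startup means an earlier logging session was interrupted. A minimal sketch of that check, where `detect_mode` is a helper name made up for this illustration and a temporary file stands in for /root/bin/latest_zpool_status:

```shell
#!/bin/bash
# Hypothetical helper: the real script sets SCRIPT_RESUMED inline instead.
detect_mode() {
    if [ -f "$1" ]; then
        echo "resumed"
    else
        echo "fresh"
    fi
}

state_file="$(mktemp)"                  # stands in for /root/bin/latest_zpool_status
rm -f "${state_file}"                   # what start_scrub_with_logging.bash does before a new scrub
detect_mode "${state_file}"             # prints: fresh

echo "pool: sample" >"${state_file}"    # what log_scrub.bash does on every run
detect_mode "${state_file}"             # prints: resumed

rm -f "${state_file}"                   # what log_scrub.bash does once the scrub has completed
```

A consequence of this design is that the post-init task is harmless when no scrub was interrupted: without a saved status file and without a running scrub, log_scrub.bash logs "Scrub not running. Nothing to log..." and exits.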

Start a logged scrub

To start a logged scrub, run the command below:

Command:

Code:

nohup /root/bin/start_scrub_with_logging.bash name_of_your_pool >>/root/bin/scrub.log 2>&1 & tail -f /root/bin/scrub.log

Log output example

Code:

2020-12-07 15:06:36 Scrub initiated!

pool: hgstpool

state: ONLINE

scan: scrub in progress since Mon Dec 7 15:06:35 2020

123G scanned at 123G/s, 1.41M issued at 1.41M/s, 47.5T total

0B repaired, 0.00% done, no estimated completion time

config:

NAME STATE READ WRITE CKSUM

hgstpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/ef5b1297-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f00c5450-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efd8967a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f04a1e2c-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f01fd8e3-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efde27c0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f03634f0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

logs

gptid/cf3dbeb8-c5dc-11ea-8b09-d05099d3fdfe ONLINE 0 0 0

errors: No known data errors

2020-12-07 15:16:39 Status = 5.39T scanned at 9.13G/s, 919G issued at 1.52G/s, 47.5T total, 0B repaired, 1.89% done, 08:42:33 to go

2020-12-07 15:26:42 Status = 6.30T scanned at 5.34G/s, 1.82T issued at 1.54G/s, 47.5T total, 0B repaired, 3.83% done, 08:24:37 to go

2020-12-07 15:36:46 Status = 7.05T scanned at 3.98G/s, 2.75T issued at 1.56G/s, 47.5T total, 0B repaired, 5.80% done, 08:10:21 to go

2020-12-07 15:46:49 Status = 7.90T scanned at 3.35G/s, 3.67T issued at 1.55G/s, 47.5T total, 0B repaired, 7.72% done, 08:00:49 to go

2020-12-07 15:56:53 Status = 8.05T scanned at 2.73G/s, 4.56T issued at 1.55G/s, 47.5T total, 0B repaired, 9.61% done, 07:53:10 to go

2020-12-07 16:06:56 Status = 8.41T scanned at 2.38G/s, 5.39T issued at 1.52G/s, 47.5T total, 0B repaired, 11.36% done, 07:50:56 to go

2020-12-07 16:16:59 Status = 9.19T scanned at 2.23G/s, 6.05T issued at 1.47G/s, 47.5T total, 0B repaired, 12.74% done, 08:02:24 to go

2020-12-07 16:27:02 Status = 9.66T scanned at 2.05G/s, 6.80T issued at 1.44G/s, 47.5T total, 0B repaired, 14.32% done, 08:01:30 to go

2020-12-07 16:37:06 Status = 10.8T scanned at 2.04G/s, 7.58T issued at 1.43G/s, 47.5T total, 0B repaired, 15.98% done, 07:55:57 to go

2020-12-07 16:47:09 Status = 11.8T scanned at 1.99G/s, 8.42T issued at 1.43G/s, 47.5T total, 0B repaired, 17.74% done, 07:46:20 to go

2020-12-07 16:57:12 Status = 12.6T scanned at 1.94G/s, 9.22T issued at 1.42G/s, 47.5T total, 0B repaired, 19.41% done, 07:39:09 to go

2020-12-07 17:07:15 Status = 12.6T scanned at 1.78G/s, 9.88T issued at 1.40G/s, 47.5T total, 0B repaired, 20.82% done, 07:39:02 to go

2020-12-07 17:17:18 Status = 12.6T scanned at 1.65G/s, 10.5T issued at 1.38G/s, 47.5T total, 0B repaired, 22.20% done, 07:38:04 to go

2020-12-07 17:27:22 Status = 12.6T scanned at 1.53G/s, 11.2T issued at 1.36G/s, 47.5T total, 0B repaired, 23.69% done, 07:33:22 to go

2020-12-07 17:37:25 Status = 12.6T scanned at 1.43G/s, 11.8T issued at 1.34G/s, 47.5T total, 0B repaired, 24.88% done, 07:35:19 to go

2020-12-07 17:47:28 Status = 12.6T scanned at 1.34G/s, 12.3T issued at 1.30G/s, 47.5T total, 0B repaired, 25.89% done, 07:40:26 to go

2020-12-07 17:57:31 Status = 17.3T scanned at 1.73G/s, 12.8T issued at 1.28G/s, 47.5T total, 0B repaired, 26.92% done, 07:44:01 to go

2020-12-07 18:07:35 Status = 18.2T scanned at 1.71G/s, 13.4T issued at 1.26G/s, 47.5T total, 0B repaired, 28.19% done, 07:41:07 to go

2020-12-07 18:17:38 Status = 18.2T scanned at 1.62G/s, 14.0T issued at 1.25G/s, 47.5T total, 0B repaired, 29.46% done, 07:37:27 to go

2020-12-07 18:27:42 Status = 18.9T scanned at 1.61G/s, 14.6T issued at 1.24G/s, 47.5T total, 0B repaired, 30.69% done, 07:34:15 to go

2020-12-07 18:37:45 Status = 20.2T scanned at 1.63G/s, 15.2T issued at 1.23G/s, 47.5T total, 0B repaired, 32.07% done, 07:27:17 to go

2020-12-07 18:47:48 Status = 20.2T scanned at 1.55G/s, 15.9T issued at 1.22G/s, 47.5T total, 0B repaired, 33.43% done, 07:20:35 to go

2020-12-07 18:57:51 Status = 20.8T scanned at 1.53G/s, 16.5T issued at 1.22G/s, 47.5T total, 0B repaired, 34.84% done, 07:12:32 to go

2020-12-07 19:07:54 Status = 21.9T scanned at 1.55G/s, 17.2T issued at 1.21G/s, 47.5T total, 0B repaired, 36.18% done, 07:05:45 to go

2020-12-07 19:17:57 Status = 21.9T scanned at 1.49G/s, 17.8T issued at 1.21G/s, 47.5T total, 0B repaired, 37.59% done, 06:57:17 to go

2020-12-07 19:28:01 Status = 23.0T scanned at 1.50G/s, 18.6T issued at 1.21G/s, 47.5T total, 0B repaired, 39.09% done, 06:47:16 to go

2020-12-07 19:38:04 Status = 24.0T scanned at 1.51G/s, 19.2T issued at 1.21G/s, 47.5T total, 0B repaired, 40.48% done, 06:39:14 to go

2020-12-07 19:48:07 Status = 24.0T scanned at 1.46G/s, 19.9T issued at 1.20G/s, 47.5T total, 0B repaired, 41.86% done, 06:31:03 to go

2020-12-07 19:58:10 Status = 25.1T scanned at 1.47G/s, 20.5T issued at 1.20G/s, 47.5T total, 0B repaired, 43.16% done, 06:23:56 to go

2020-12-07 20:08:13 Status = 25.1T scanned at 1.42G/s, 21.2T issued at 1.20G/s, 47.5T total, 0B repaired, 44.68% done, 06:13:30 to go

2020-12-07 20:18:17 Status = 25.1T scanned at 1.37G/s, 22.0T issued at 1.20G/s, 47.5T total, 0B repaired, 46.24% done, 06:02:19 to go

2020-12-07 20:28:20 Status = 25.1T scanned at 1.33G/s, 22.7T issued at 1.20G/s, 47.5T total, 0B repaired, 47.72% done, 05:52:33 to go

2020-12-07 20:38:24 Status = 25.1T scanned at 1.29G/s, 23.3T issued at 1.20G/s, 47.5T total, 0B repaired, 49.05% done, 05:44:39 to go

2020-12-07 20:48:27 Status = 25.1T scanned at 1.25G/s, 23.9T issued at 1.19G/s, 47.5T total, 0B repaired, 50.31% done, 05:37:38 to go

2020-12-07 20:58:31 Status = 25.1T scanned at 1.22G/s, 24.5T issued at 1.19G/s, 47.5T total, 0B repaired, 51.51% done, 05:31:15 to go

2020-12-07 21:08:34 Status = 25.1T scanned at 1.18G/s, 25.0T issued at 1.18G/s, 47.5T total, 0B repaired, 52.66% done, 05:25:24 to go

2020-12-07 21:18:37 Status = 30.5T scanned at 1.40G/s, 25.7T issued at 1.18G/s, 47.5T total, 0B repaired, 54.04% done, 05:16:25 to go

2020-12-07 21:28:41 Status = 30.5T scanned at 1.36G/s, 26.4T issued at 1.18G/s, 47.5T total, 0B repaired, 55.55% done, 05:05:46 to go

2020-12-07 21:38:44 Status = 31.5T scanned at 1.37G/s, 27.1T issued at 1.18G/s, 47.5T total, 0B repaired, 57.01% done, 04:55:40 to go

2020-12-07 21:48:47 Status = 32.6T scanned at 1.38G/s, 27.7T issued at 1.18G/s, 47.5T total, 0B repaired, 58.39% done, 04:46:39 to go

2020-12-07 21:58:50 Status = 32.6T scanned at 1.35G/s, 28.4T issued at 1.18G/s, 47.5T total, 0B repaired, 59.89% done, 04:36:05 to go

2020-12-07 21:59:46 Something happened!

pool: hgstpool

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub in progress since Mon Dec 7 15:06:35 2020

32.6T scanned at 1.35G/s, 28.5T issued at 1.18G/s, 47.5T total

24K repaired, 60.04% done, 04:35:02 to go

config:

NAME STATE READ WRITE CKSUM

hgstpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/ef5b1297-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f00c5450-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efd8967a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f04a1e2c-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 1 (repairing)

gptid/f01fd8e3-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efde27c0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f03634f0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

logs

gptid/cf3dbeb8-c5dc-11ea-8b09-d05099d3fdfe ONLINE 0 0 0

errors: No known data errors

2020-12-07 22:08:54 Status = 33.7T scanned at 1.36G/s, 29.2T issued at 1.18G/s, 47.5T total, 24K repaired, 61.42% done, 04:25:19 to go

2020-12-07 22:18:57 Status = 34.8T scanned at 1.37G/s, 29.9T issued at 1.18G/s, 47.5T total, 24K repaired, 62.90% done, 04:15:02 to go

2020-12-07 22:29:00 Status = 34.8T scanned at 1.34G/s, 30.5T issued at 1.18G/s, 47.5T total, 24K repaired, 64.32% done, 04:05:22 to go

2020-12-07 22:39:03 Status = 35.8T scanned at 1.35G/s, 31.2T issued at 1.18G/s, 47.5T total, 24K repaired, 65.74% done, 03:55:46 to go

2020-12-07 22:49:06 Status = 36.8T scanned at 1.36G/s, 31.9T issued at 1.18G/s, 47.5T total, 24K repaired, 67.17% done, 03:46:01 to go

2020-12-07 22:56:14 Something happened!

pool: hgstpool

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub in progress since Mon Dec 7 15:06:35 2020

36.8T scanned at 1.34G/s, 32.4T issued at 1.18G/s, 47.5T total

48K repaired, 68.18% done, 03:39:12 to go

config:

NAME STATE READ WRITE CKSUM

hgstpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/ef5b1297-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f00c5450-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 1 (repairing)

gptid/efd8967a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f04a1e2c-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 1 (repairing)

gptid/f01fd8e3-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efde27c0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f03634f0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

logs

gptid/cf3dbeb8-c5dc-11ea-8b09-d05099d3fdfe ONLINE 0 0 0

errors: No known data errors

2020-12-07 22:59:09 Status = 36.8T scanned at 1.33G/s, 32.6T issued at 1.18G/s, 47.5T total, 48K repaired, 68.60% done, 03:36:19 to go

2020-12-07 23:09:13 Status = 37.8T scanned at 1.34G/s, 33.2T issued at 1.18G/s, 47.5T total, 48K repaired, 70.01% done, 03:26:41 to go

2020-12-07 23:19:16 Status = 37.8T scanned at 1.31G/s, 33.9T issued at 1.18G/s, 47.5T total, 48K repaired, 71.51% done, 03:16:15 to go

2020-12-07 23:29:20 Status = 37.8T scanned at 1.28G/s, 34.7T issued at 1.18G/s, 47.5T total, 48K repaired, 73.02% done, 03:05:47 to go

2020-12-07 23:39:23 Status = 37.8T scanned at 1.26G/s, 35.4T issued at 1.18G/s, 47.5T total, 48K repaired, 74.63% done, 02:54:20 to go

2020-12-07 23:49:26 Status = 37.8T scanned at 1.23G/s, 36.2T issued at 1.18G/s, 47.5T total, 48K repaired, 76.19% done, 02:43:23 to go

2020-12-07 23:59:29 Status = 37.8T scanned at 1.21G/s, 36.9T issued at 1.18G/s, 47.5T total, 48K repaired, 77.70% done, 02:32:56 to go

2020-12-08 00:09:33 Status = 37.8T scanned at 1.19G/s, 37.6T issued at 1.18G/s, 47.5T total, 48K repaired, 79.15% done, 02:23:01 to go

2020-12-08 00:19:37 Status = 41.9T scanned at 1.29G/s, 38.1T issued at 1.17G/s, 47.5T total, 48K repaired, 80.20% done, 02:16:33 to go

2020-12-08 00:27:19 Something happened!

pool: hgstpool

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub in progress since Mon Dec 7 15:06:35 2020

42.9T scanned at 1.30G/s, 38.6T issued at 1.17G/s, 47.5T total

72K repaired, 81.30% done, 02:08:58 to go

config:

NAME STATE READ WRITE CKSUM

hgstpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/ef5b1297-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f00c5450-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 1 (repairing)

gptid/efd8967a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f04a1e2c-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 2 (repairing)

gptid/f01fd8e3-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efde27c0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f03634f0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

logs

gptid/cf3dbeb8-c5dc-11ea-8b09-d05099d3fdfe ONLINE 0 0 0

errors: No known data errors

2020-12-08 00:29:20 Something happened!

pool: hgstpool

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub in progress since Mon Dec 7 15:06:35 2020

42.9T scanned at 1.30G/s, 38.7T issued at 1.17G/s, 47.5T total

96K repaired, 81.60% done, 02:06:51 to go

config:

NAME STATE READ WRITE CKSUM

hgstpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/ef5b1297-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f00c5450-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 2 (repairing)

gptid/efd8967a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f04a1e2c-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 2 (repairing)

gptid/f01fd8e3-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efde27c0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f03634f0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

logs

gptid/cf3dbeb8-c5dc-11ea-8b09-d05099d3fdfe ONLINE 0 0 0

errors: No known data errors

2020-12-08 00:29:40 Status = 42.9T scanned at 1.30G/s, 38.8T issued at 1.17G/s, 47.5T total, 96K repaired, 81.66% done, 02:06:28 to go

2020-12-08 00:39:44 Status = 43.8T scanned at 1.31G/s, 39.6T issued at 1.18G/s, 47.5T total, 96K repaired, 83.41% done, 01:53:59 to go

2020-12-08 00:49:48 Status = 44.3T scanned at 1.30G/s, 40.4T issued at 1.18G/s, 47.5T total, 96K repaired, 85.16% done, 01:41:40 to go

2020-12-08 00:59:51 Status = 44.8T scanned at 1.29G/s, 41.2T issued at 1.18G/s, 47.5T total, 96K repaired, 86.76% done, 01:30:32 to go

2020-12-08 01:09:54 Status = 46.1T scanned at 1.30G/s, 42.0T issued at 1.19G/s, 47.5T total, 96K repaired, 88.49% done, 01:18:30 to go

2020-12-08 01:19:58 Status = 46.7T scanned at 1.30G/s, 42.8T issued at 1.19G/s, 47.5T total, 96K repaired, 90.24% done, 01:06:22 to go

2020-12-08 01:30:02 Status = 47.3T scanned at 1.29G/s, 43.7T issued at 1.20G/s, 47.5T total, 96K repaired, 92.01% done, 00:54:07 to go

2020-12-08 01:40:05 Status = 47.4T scanned at 1.28G/s, 44.4T issued at 1.20G/s, 47.5T total, 96K repaired, 93.58% done, 00:43:28 to go

2020-12-08 01:50:09 Status = 47.4T scanned at 1.26G/s, 45.1T issued at 1.20G/s, 47.5T total, 96K repaired, 94.97% done, 00:34:05 to go

2020-12-08 02:00:12 Status = 47.4T scanned at 1.24G/s, 45.8T issued at 1.20G/s, 47.5T total, 96K repaired, 96.53% done, 00:23:30 to go

2020-12-08 02:10:16 Status = 47.4T scanned at 1.22G/s, 46.3T issued at 1.19G/s, 47.5T total, 96K repaired, 97.61% done, 00:16:13 to go

2020-12-08 02:20:19 Status = 47.4T scanned at 1.20G/s, 46.8T issued at 1.19G/s, 47.5T total, 96K repaired, 98.64% done, 00:09:16 to go

2020-12-08 02:30:23 Status = 47.4T scanned at 1.18G/s, 47.3T issued at 1.18G/s, 47.5T total, 96K repaired, 99.58% done, 00:02:51 to go

2020-12-08 02:35:30 Scrub completed!

pool: hgstpool

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub repaired 96K in 11:28:53 with 0 errors on Tue Dec 8 02:35:28 2020

config:

NAME STATE READ WRITE CKSUM

hgstpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/ef1cec0a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/ef5b1297-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f00c5450-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 2

gptid/efd8967a-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f04a1e2c-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 2

gptid/f01fd8e3-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/efde27c0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

gptid/f03634f0-cdd4-11ea-a82a-d05099d3fdfe.eli ONLINE 0 0 0

logs

gptid/cf3dbeb8-c5dc-11ea-8b09-d05099d3fdfe ONLINE 0 0 0

errors: No known data errors
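To compare the error timestamps with the reboot times, it can help to reduce a long log to just its event lines. The sketch below is one possible way to do that, not part of the original scripts: `list_events` is a helper name made up for this illustration, and the sample lines are copied from the log above.

```shell
#!/bin/bash
# Hypothetical helper: print only the timestamped event lines of a scrub log.
# Reads the given file(s), or standard input when no file is given.
list_events() {
    grep -E '^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2} (Scrub initiated!|Something happened!|Script resumed!|Scrub completed!)' "$@"
}

# A few lines copied from the log example above.
sample_log='2020-12-07 15:06:36 Scrub initiated!
2020-12-07 21:58:50 Status = 32.6T scanned at 1.35G/s, 28.4T issued at 1.18G/s, 47.5T total, 0B repaired, 59.89% done, 04:36:05 to go
2020-12-07 21:59:46 Something happened!
2020-12-08 02:35:30 Scrub completed!'

printf '%s\n' "${sample_log}" | list_events
```

On the sample this prints the three event lines and skips the periodic status line. Against the real log it would be called as `list_events /root/bin/scrub.log`; any "Script resumed!" entries then show up between the "Something happened!" entries, in chronological order.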