Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

Misaligned Pools and Lost Space...

- Thread starter Bidule0hm

- Start date

- Status

- Not open for further replies.

jgreco

Resident Grinch

- Joined

- May 29, 2011

- Messages

- 18,680

With 8 disks in RAIDZ2:

"Capacity: 21.82 TiB" (before volume creation)

"Available: 20.0 TiB" (after volume creation)

Giving 1.82 TiB of "lost space". Better but too much.

Since there are many cases where you will not actually be able to store 20 TiB of data on the filer, is this just nerd rage at the fickleness of a nondeterministic storage system? Or do you actually have a point?

Bidule0hm

Server Electronics Sorcerer

- Joined

- Aug 5, 2013

- Messages

- 3,710

With the 7 drives RAID-Z3 you should lose 0.23 TiB so there's a 0.32 TiB difference, that seems fine.

With the 8 drives RAID-Z2 you should lose 1.53 TiB so there's a 0.29 TiB difference, that seems fine too.

With the 8 drives RAID-Z1 you should lose 1.39 TiB so there's a 0.47 TiB difference, that seems fine too even if a bit on the high side.

snicke

Explorer

- Joined

- May 5, 2015

- Messages

- 74

Since there are many cases where you will not actually be able to store 20 TiB of data on the filer, is this just nerd rage at the fickleness of a nondeterministic storage system? Or do you actually have a point?

I don't understand what you mean. Of course I want to be able to store as much as possible should I want to. It should not differ by 1.6 TiB from the theoretical number. That is not a small number; it's about half a drive. It is also a good thing to post the differences between different RAIDZ configurations for someone who actually wants to help me figure out where my 2.18 TiB went. The posts are made to try to solve the problem, of course. It's certainly not just nerd rage; I have better things to do than that. What is the point of your posts if you don't try to answer my questions in a precise and helpful way? Waste of space and time?

Do you know how the "available space" is calculated so you actually can tell for sure that this is just due to "a nondeterministic storage system" or how do you _know_ it's not some real issue? Really want to know that before placing real data in my pool. Please explain why all these big differences in "lost space" can make sense on empty pools. You haven't convinced me so far with vague arguments like this is all due to a "nondeterministic storage system" etc. In that case it should be a big fat bug report on the calculation of "available space" saying that the calculation should at least be consistent between pool configurations or otherwise removed if it is just a very bad guess. But is it really just a very bad guess? You get the same result using "zfs list" from CLI.

I'm going to store data on my volume up until 80% of the available space is used but 80% of what? 80% of a wild guess? 80% of a buggy number? 80% of a true number that just needs to be explained (where did my 2.18 TiB go?)? Right now it is just very fuzzy and a perfect task for a sane forum to tackle.

snicke

Explorer

- Joined

- May 5, 2015

- Messages

- 74

I'm kinda wondering why everyone is obsessing over this, since RAIDZ is not likely to allocate space so neatly as to be totally predictable. It is at best an intelligent guess and at worst so far off as to be a ridiculous number.

Well, if "everyone is obsessing over this", is everyone wrong, or is the system/documentation wrong? Maybe everyone needs a precise and good explanation. @Bidule0hm is really trying. Thank you for that. That's what we all should do in a sane forum instead of stating that everyone has bad luck while thinking.

Back on topic, in the hope of continuing the discussion and solving the issue: why do I see 1.6 TiB less space (a huge number!) than @Bidule0hm when we have the exact same RAIDZ3 configuration, except that I use 4 TB drives and he uses 3 TB drives? We both have 8 HDDs in RAIDZ3.

Bidule0hm

Server Electronics Sorcerer

- Joined

- Aug 5, 2013

- Messages

- 3,710

You get the same result using "zfs list" from CLI

That's because the GUI is very very probably parsing the output of zfs list (that's also how they get the compression ratio value for example).

@Bidule0hm is really trying. Thank you for that.

You're welcome ;) I'm not saying I'm 100% sure the calculation is okay, but I'm pretty confident it is. However, the missing space you see may be due to a 4th overhead I don't know of (there's a 3rd overhead we ignored here because it's very small: the swap overhead, 2 GB per drive). That may also explain why there's always a difference of about 2 % (but maybe that is just the rounding in the GUI).
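To put the swap overhead in perspective, here is a minimal sketch of the arithmetic. It assumes "2 GB per drive" means 2 GiB and uses the 8-drive pool and the 2.18 TiB figure mentioned earlier in the thread; the function name `swap_overhead_tib` is hypothetical.

```python
GIB = 2 ** 30
TIB = 2 ** 40

def swap_overhead_tib(drives: int, swap_per_drive_gib: float = 2.0) -> float:
    """Total swap reservation across all drives, expressed in TiB.

    Assumption: each drive carries one swap partition of the same size.
    """
    return drives * swap_per_drive_gib * GIB / TIB

overhead = swap_overhead_tib(8)
print(f"swap overhead: {overhead:.6f} TiB")        # swap overhead: 0.015625 TiB

# Share of the 2.18 TiB of "missing" space reported in the thread.
print(f"share of 2.18 TiB: {overhead / 2.18:.1%}")  # share of 2.18 TiB: 0.7%
```

Under these assumptions the swap partitions account for well under 1 % of the gap, which is consistent with ignoring them in the estimate.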

snicke

Explorer

- Joined

- May 5, 2015

- Messages

- 74

That's because the GUI is very very probably parsing the output of zfs list (that's also how they get the compression ratio value for example).

Yes, my conclusion too. What's your output from zfs list -p for your pool?

Bidule0hm

Server Electronics Sorcerer

- Joined

- Aug 5, 2013

- Messages

- 3,710

Code:

    [root@freenas] ~# zfs list -p
    NAME  USED  AVAIL  REFER  MOUNTPOINT
    freenas-boot  546845184  114913955328  31744  none
    freenas-boot/ROOT  539249664  114913955328  25600  none
    freenas-boot/ROOT/Initial-Install  1024  114913955328  532214272  legacy
    freenas-boot/ROOT/default  539223040  114913955328  532976128  legacy
    freenas-boot/grub  7113728  114913955328  7113728  legacy
    tank  2918554604672  10293905648512  317696  /mnt/tank
    tank/.system  2522580992  10293905648512  179227264  legacy
    tank/.system/configs-6c245a2d045b4b77b1c9f77d48e8c7fb  336384  10293905648512  336384  legacy
    tank/.system/cores  40637056  10293905648512  22182656  legacy
    tank/.system/rrd-6c245a2d045b4b77b1c9f77d48e8c7fb  585177344  10293905648512  34339200  legacy
    tank/.system/samba4  47691776  10293905648512  4316928  legacy
    tank/.system/syslog-6c245a2d045b4b77b1c9f77d48e8c7fb  20538112  10293905648512  1532416  legacy
    tank/ch***  192125936512  10293905648512  192048839168  /mnt/tank/ch***
    tank/fi***  689418120320  10293905648512  689413569792  /mnt/tank/fi***
    tank/fr***  225405069056  10293905648512  221892678144  /mnt/tank/fr***
    tank/jails  7935569536  10293905648512  551296  /mnt/tank/jails
    tank/jails/.warden-template-standard--x64  3693169280  10293905648512  3579116416  /mnt/tank/jails/.warden-template-standard--x64
    tank/jails/minidlna  4238391680  10293905648512  3762669952  /mnt/tank/jails/minidlna
    tank/scripts  1298816  10293905648512  364416  /mnt/tank/scripts
    tank/se***  1800893788160  10293905648512  1800597919744  /mnt/tank/se***
    [root@freenas] ~#

Code:

    [root@freenas] ~# zfs list -o name,used,avail,compressratio,lrefer,refer,refcompressratio,usedbysnapshots,recsize
    NAME  USED  AVAIL  RATIO  LREFER  REFER  REFRATIO  USEDSNAP  RECSIZE
    freenas-boot  522M  107G  2.02x  15.5K  31K  1.00x  0  128K
    freenas-boot/ROOT  514M  107G  2.02x  12.5K  25K  1.00x  0  128K
    freenas-boot/ROOT/Initial-Install  1K  107G  1.00x  1008M  508M  2.01x  0  128K
    freenas-boot/ROOT/default  514M  107G  2.02x  1010M  508M  2.01x  5.96M  128K
    freenas-boot/grub  6.78M  107G  1.64x  11.1M  6.78M  1.64x  0  128K
    tank  2.65T  9.36T  1.02x  18K  310K  1.00x  2.09M  128K
    tank/.system  2.35G  9.36T  2.24x  179M  171M  1.05x  1.54G  128K
    tank/.system/configs-6c245a2d045b4b77b1c9f77d48e8c7fb  328K  9.36T  1.00x  12.5K  328K  1.00x  0  128K
    tank/.system/cores  38.8M  9.36T  7.86x  78.2M  21.2M  4.49x  17.6M  128K
    tank/.system/rrd-6c245a2d045b4b77b1c9f77d48e8c7fb  558M  9.36T  9.14x  140M  32.8M  8.80x  525M  128K
    tank/.system/samba4  45.5M  9.36T  4.84x  15.4M  4.12M  5.23x  41.4M  128K
    tank/.system/syslog-6c245a2d045b4b77b1c9f77d48e8c7fb  19.6M  9.36T  4.97x  3.17M  1.46M  6.38x  18.1M  128K
    tank/ch***  179G  9.36T  1.11x  217G  179G  1.11x  74.3M  1M
    tank/fi***  642G  9.36T  1.00x  702G  642G  1.00x  4.34M  1M
    tank/fr***  210G  9.36T  1.19x  269G  207G  1.19x  3.27G  1M
    tank/jails  7.39G  9.36T  2.18x  23K  538K  1.02x  3.30M  128K
    tank/jails/.warden-template-standard--x64  3.44G  9.36T  2.09x  1.78G  3.33G  2.11x  109M  128K
    tank/jails/minidlna  3.95G  9.36T  2.24x  2.12G  3.50G  2.23x  3.65G  128K
    tank/scripts  1.24M  9.36T  1.11x  27.5K  356K  1.30x  912K  128K
    tank/se***  1.64T  9.36T  1.00x  1.79T  1.64T  1.00x  282M  1M
    [root@freenas] ~#
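As a cross-check between the two listings, the byte counts from `zfs list -p` can be converted to TiB by hand. The sketch below uses the `tank` row from the output above. Taking USED + AVAIL as the base for the 80 % fill guideline discussed earlier in the thread is an assumption made here for illustration, not something the thread settles; the helper name `to_tib` is hypothetical.

```python
TIB = 2 ** 40

def to_tib(n_bytes: int) -> float:
    """Convert a raw byte count (as printed by `zfs list -p`) to TiB."""
    return n_bytes / TIB

# USED and AVAIL of the "tank" root dataset, copied from the output above.
used = 2918554604672
avail = 10293905648512

print(f"used  = {to_tib(used):.2f} TiB")   # used  = 2.65 TiB
print(f"avail = {to_tib(avail):.2f} TiB")  # avail = 9.36 TiB

# Assumption: treat used + avail as the usable size of the pool and
# apply the 80 % guideline to that sum.
usable = used + avail
limit = 0.8 * usable
print(f"usable = {to_tib(usable):.2f} TiB, 80 % limit = {to_tib(limit):.2f} TiB")
```

The converted values match the 2.65T and 9.36T shown in the human-readable listing, so the two outputs describe the same numbers at different precision.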

jgreco

Resident Grinch

- Joined

- May 29, 2011

- Messages

- 18,680

I don't understand what you mean. Of course I want to be able to store as much as possible should I want to. It should not differ by 1.6 TiB from the theoretical number. That is not a small number; it's about half a drive. It is also a good thing to post the differences between different RAIDZ configurations for someone who actually wants to help me figure out where my 2.18 TiB went. The posts are made to try to solve the problem, of course. It's certainly not just nerd rage; I have better things to do than that. What is the point of your posts if you don't try to answer my questions in a precise and helpful way?

Because I think I already pointed out the issues that make up what I'm talking about, and I have a limit as to the amount of verbosity I am willing to engage in when typing on a cellphone.

The very nature of the beast makes this an inexact figure for the typical ZFS system that is storing typical data. While it may be possible to devise a configuration and contrived data that actually allows storage of the maximum, it seems to me like worrying about the theoretical number is kinda pointless for any real application.

snicke

Explorer

- Joined

- May 5, 2015

- Messages

- 74

Because I think I already pointed out the issues that make up what I'm talking about, and I have a limit as to the amount of verbosity I am willing to engage in when typing on a cellphone.

Well, you have pointed out your opinion, which I have pointed out that I disagree with. So what's the point of continuing to post things that I, the one asking for help, and others, who are trying to find an answer to the question at hand, find pointless, since they are not helpful and just clutter the thread? BTW, this forum is full of "I'm not going to help but I just want to make some noise", RTFM and, not to forget, "use the search functionality!" from a few people who think they are more special than others. "Use the search functionality" is the most ironic "tip", since when you try to (googling with site:freenas.org <your question>) you often find countless other threads where the same persons have again posted RTFM and "use the search functionality!" or just posted opinions that do not help the OP. Some kind of recursive joke. A tip to those people: answer the question (again and again and again!). It takes much less time than the usual ranting, which has been tested here for like 5 years and has failed, and which for sure doesn't help the OP. And if you cannot answer the question, don't post! It's just natural in a forum that the same questions reappear. Deal with it. Otherwise I suggest that we delete this forum totally because it becomes pointless. A forum exists for the sole purpose of helping people (and yes, helping them take shortcuts); otherwise it is useless.

The very nature of the beast makes this an inexact figure for the typical ZFS system that is storing typical data. While it may be possible to devise a configuration and contrived data that actually allows storage of the maximum, it seems to me like worrying about the theoretical number is kinda pointless for any real application.

As I said, I and others don't find it pointless, hence the question and the discussion. Inexact is one thing. 2.18 TiB (!) is not just inexact; it's off the map. And if someone else with the same number of drives and the same RAIDZ3 configuration gets totally different numbers, I, and many others, want to find the root cause. If you don't, then fine. You don't have to, but please stop cluttering the thread if you're not going to help.

You have helped me before, which I appreciate, and I hope we can help each other in the future but this time (and last time with all the text about the simple SAS to SATA cable question without answering the question) I don't think that you are helping anyone.

I will start a new thread regarding this issue since this one is becoming TL;DR due to all off topic philosophic noise.

jgreco

Resident Grinch

- Joined

- May 29, 2011

- Messages

- 18,680

I don't see how I *can* help you. You seem intent on trying not to understand that RAIDZ does not give you a fixed amount of storage, therefore any number that is generated is at best a bad estimate, and at worst, a software engineer's nightmare scenario.

You seem to expect a little much. I'm not magically able to read foreign languages to identify how cables might be different. You seem to want the answer handed to you, because even if I make an attempt to impart what I know, that's not good enough. It's not reasonable for you to demand a certain level of service from other community participants.

It's just natural in a forum that the same questions reappear. Deal with it. Otherwise I suggest that we delete this forum totally because it becomes pointless. A forum exists for the sole purpose of helping people (and yes, helping them take shortcuts); otherwise it is useless.

Some of us have been here answering the same questions over and over again for many years. Perhaps it'd be nice for you to be a little less offensive and see instead if you can make do with the assistance people have tried to provide you. Otherwise, the log out button is readily available.

David Dyer-Bennet

Patron

- Joined

- Jul 13, 2013

- Messages

- 286

I gotta say, over the years, people here (I think I remember jgreco specifically being one of them, even) have been very patient with me, and extremely helpful!

A big ZFS pool is in fact less deterministic than a partition on one physical disk. This doesn't actually surprise me that much, even when I find it inconvenient.

bbox

Dabbler

- Joined

- Mar 29, 2016

- Messages

- 17

Thank you @Bidule0hm. A great, gradual explanation. With some basic math one can really understand the concept.

Should the space availability number change in GUI after changing dataset's recordsize? In my case it did not (sorry for reposting):

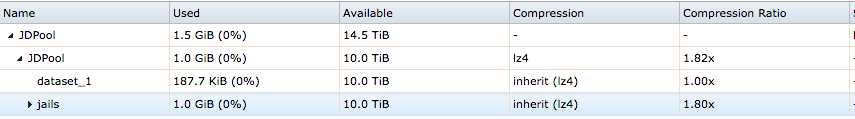

And how about recordsize on different datasets? In this case, if I understood correctly, 'JDPool' is the main dataset of the pool, for which the recordsize was set to 1M. Thus I should save around 5.27% of a total lost space, right? What happens if 'jails' or 'dataset_1' dataset recordsize is set to 128k by default?

Apparently, they're all on the same vdev.

Bidule0hm

Server Electronics Sorcerer

- Joined

- Aug 5, 2013

- Messages

- 3,710

You're welcome :)

Should the space availability number change in GUI after changing dataset's recordsize?

The available space in the GUI includes all the overheads and assumes recordsize = 128 k, so even when you change the recordsize to 1 M the available space will not change (however, the used space will decrease, as it is the real used space and does not assume anything). Also, you'll need to copy the data somewhere and then back to the dataset to see any change: data already on the dataset is not affected by the recordsize change, only new data is.

In this case, if I understood correctly, 'JDPool' is the main dataset of the pool

Yes.

'JDPool' is the main dataset of the pool, for which the recordsize was set to 1M.

How did you change the recordsize on the main dataset? In a general way it's best to leave the main dataset alone and do whatever you want to do only on the sub-datasets.

Thus I should save around 5.27% of a total lost space, right?

I don't know your pool layout so I can't confirm that.

What happens if 'jails' or 'dataset_1' dataset recordsize is set to 128k by default?

Well, 'jails' and 'dataset_1' will have a recordsize of 128 k so you'll not reclaim any space on those, even if their parent dataset has its recordsize set to 1 M.

Apparently, they're all on the same vdev.

I guess you mean pool instead of vdev; if you don't know/remember here's a simple illustration of the ZFS structure.

bbox

Dabbler

- Joined

- Mar 29, 2016

- Messages

- 17

Thanks for the illustration. Very helpful. Sorry for the slow pace, but I really enjoy how the whole ZFS concept gradually sinks in with every remark of yours. I kinda had it upside down for too long...

How did you change the recordsize on the main dataset? In a general way it's best to leave the main dataset alone and do whatever you want to do only on the sub-datasets.

Well, the dataset tree in the GUI is misleading me a little bit, so I used the same GUI options that are available for sub-datasets. I thought that all datasets were equal slices of a pool, so why not start with the one at the top? The thing is, if I try to identify the main dataset on your diagram, I see it somewhere between the datasets and the pool, or as an integrated part of the pool, or as a means to display the compression ratio and/or available space after formatting, or maybe as a container of system files and folders. Correct me if I am missing something, please.

The rest is clear. I went through thread again and compared configuration of Your zfs list. It is all there, recordsize changes only on sub-datasets.

Though I got another one:

Does it make any sense to increase recordsize of 6-disk raidz2 configuration?

Bidule0hm

Server Electronics Sorcerer

- Joined

- Aug 5, 2013

- Messages

- 3,710

Well, the dataset tree in the GUI is misleading me a little bit, so I used the same GUI options that are available for sub-datasets. I thought that all datasets were equal slices of a pool, so why not start with the one at the top?

Yeah, it's a dataset, but the thing is it's created by FreeNAS and, as with anything managed by FreeNAS itself, you don't want to tinker with it. In this case I don't think it'll do any harm, but just keep that in mind for the future.

The thing is, if I try to identify the main dataset on your diagram, I see it somewhere between the datasets and the pool, or as an integrated part of the pool, or as a means to display the compression ratio and/or available space after formatting, or maybe as a container of system files and folders. Correct me if I am missing something, please.

My diagram is for any ZFS pool (ZFS on Linux, Oracle's ZFS, ...). With FreeNAS you can look at the rightmost dataset in the pool on the diagram: it'll be the root dataset created by FreeNAS; then, inside it, you'll have your datasets, etc.

Does it make any sense to increase recordsize of 6-disk raidz2 configuration?

No, because you'll have 48 sectors (32 data + 16 parity, for recordsize = 128k and sector = 4k), and 48 is an integer multiple of 3 (RAID-Z parity level + 1, i.e. 2 + 1 = 3), so there's no padding and no lost space ;)
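The arithmetic in that reply can be checked with a small sketch. It assumes the commonly described RAID-Z allocation model: a record is split into sectors, every row of data sectors gets one parity sector per parity level, and the total is rounded up to a multiple of (parity level + 1). The names `ceil_div` and `raidz_sectors` are hypothetical and only for illustration; this is not FreeNAS or ZFS code.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up."""
    return -(-a // b)

def raidz_sectors(recordsize: int, disks: int, parity: int, sector: int = 4096):
    """Return (data, parity, padding) sector counts for one full record.

    Assumed model: parity is added per row of data sectors, then the
    allocation is padded up to a multiple of (parity + 1) sectors.
    """
    data = ceil_div(recordsize, sector)       # data sectors in the record
    rows = ceil_div(data, disks - parity)     # rows across the data disks
    parity_sectors = rows * parity            # parity sectors for all rows
    raw = data + parity_sectors
    unit = parity + 1
    padded = ceil_div(raw, unit) * unit       # round up to multiple of p + 1
    return data, parity_sectors, padded - raw

K = 1024
print(raidz_sectors(128 * K, disks=6, parity=2))   # (32, 16, 0)
print(raidz_sectors(128 * K, disks=8, parity=2))   # (32, 12, 1)
print(raidz_sectors(1024 * K, disks=8, parity=2))  # (256, 86, 0)
```

Under this model the 6-disk RAID-Z2 case needs no padding at 128k, matching the 32 + 16 = 48 sectors above, whereas an 8-disk RAID-Z2 pads one sector per 128k record and none per 1M record.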

danb35

Hall of Famer

- Joined

- Aug 16, 2011

- Messages

- 15,504

Yeah, it's a dataset, but the thing is it's created by FreeNAS

I don't think it's FreeNAS as such that creates the root dataset, but ZFS. Still wouldn't be inclined to do pool-wide stuff, but I don't think this is an example of "don't mess with GUI stuff at the CLI."
