Keven

Contributor

- Joined

- Aug 10, 2016

- Messages

- 114

Hi,

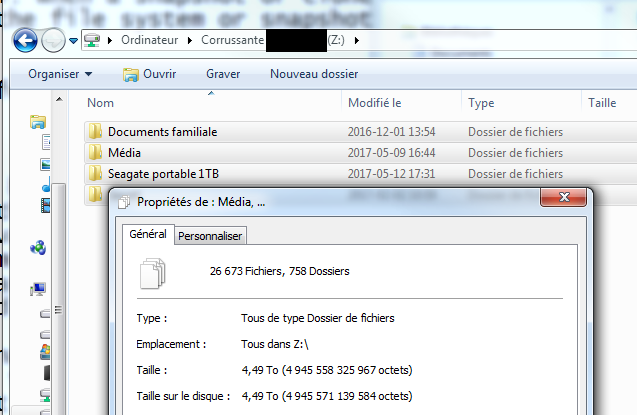

After noticing that one of my shares was using 6.1 TiB on my server, I checked what was taking up all that space. I right-clicked the root folder of the share to see how much space it uses: 4.49 TiB.

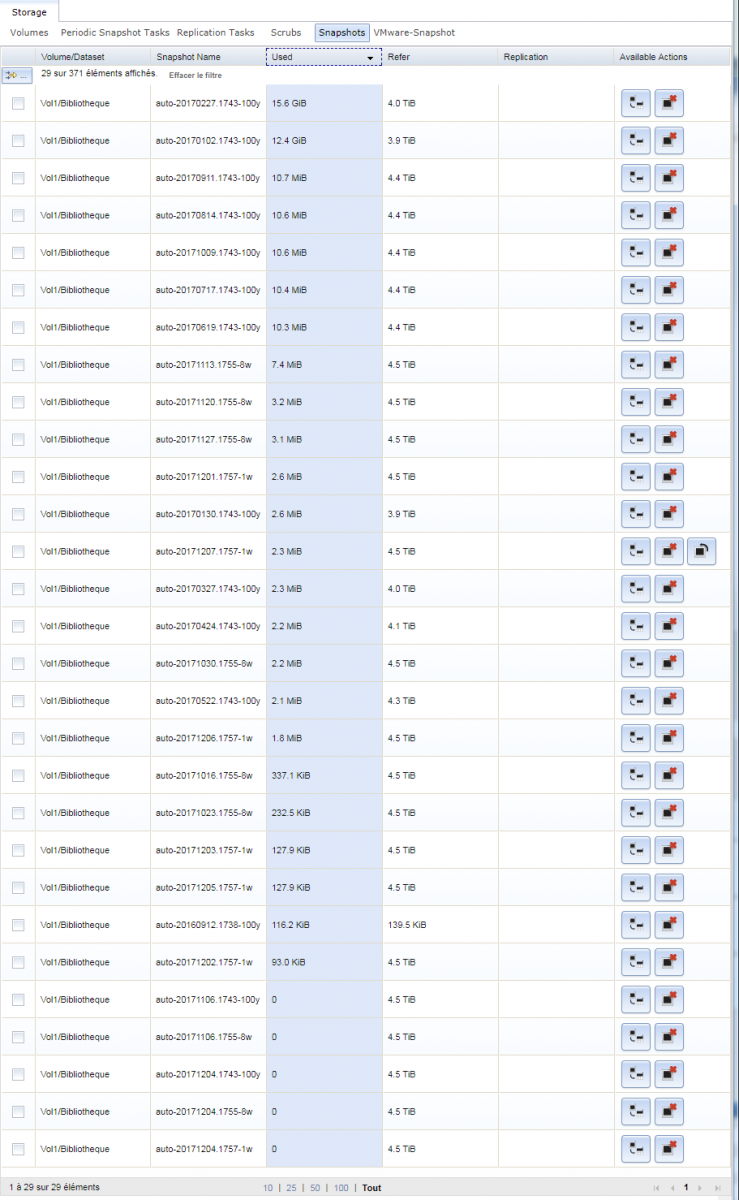

I take snapshots of that share, so maybe that's the cause. I looked at all the snapshots of that dataset, and when I add up the used space of those snapshots I get 28 GiB.

So 4.49 TiB + 28 GiB ≈ 4.52 TiB, which is still well short of the 6.1 TiB reported for the dataset.
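To double-check my arithmetic, here is a quick sketch of the sum in Python. It only uses the three figures from this post, and the variable names are just ones I made up for illustration; it assumes 1 TiB = 1024 GiB.

```python
# Rough sanity check of the numbers in this post (not a ZFS tool).
GIB_PER_TIB = 1024

dataset_used_tib = 6.1     # what the dataset reports as used
folder_size_tib = 4.49     # root folder size from the file properties dialog
snapshots_used_gib = 28    # sum of the "used" column over all snapshots

# Convert the snapshot total to TiB so everything is in the same unit.
snapshots_used_tib = snapshots_used_gib / GIB_PER_TIB

accounted_tib = folder_size_tib + snapshots_used_tib
unaccounted_tib = dataset_used_tib - accounted_tib

print(f"accounted for:   {accounted_tib:.2f} TiB")    # 4.52 TiB
print(f"unaccounted for: {unaccounted_tib:.2f} TiB")  # 1.58 TiB
```

So by this count roughly 1.58 TiB of the dataset's used space is not explained by the folder contents plus the per-snapshot used values.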

So am I calculating the space used by the snapshots wrong?

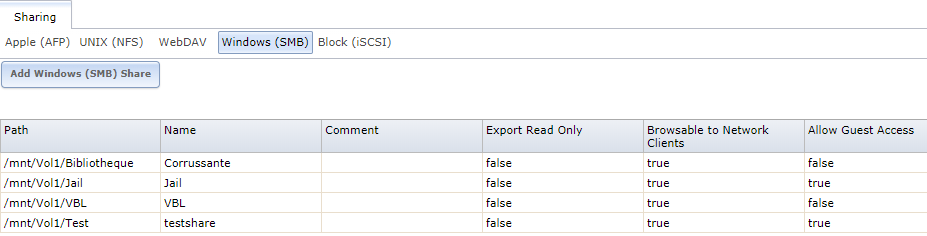

Here is how the dataset and share are set up:

Dataset: "Bibliotheque" = 6.1 TiB (60%)

The SMB share of "Bibliotheque" is called "Corrussante".

The snapshot list is filtered for "bibliotheque", so it won't show irrelevant snapshots from other datasets.
