ChubbyBunny

Cadet

- Joined: Dec 19, 2018
- Messages: 3

Hello, the company I work at is having a problem with FreeNAS replication.

The replication task starts, and although the file is small, the free space on the replication target machine keeps shrinking while I watch it, until nothing is left and the backup fails. There's more than 2 TiB available, so it shouldn't run into this issue.

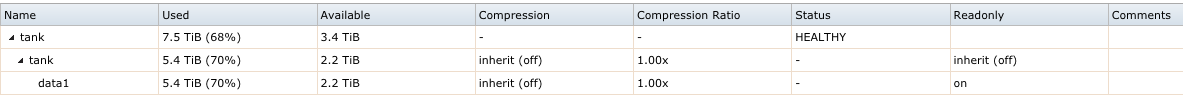

This is a screenshot of the target machine's tank showing the remaining space. I took it while the new replication was running. It should have somewhere in the neighbourhood of 2.8 TiB available, but the replication has been running for a while now.

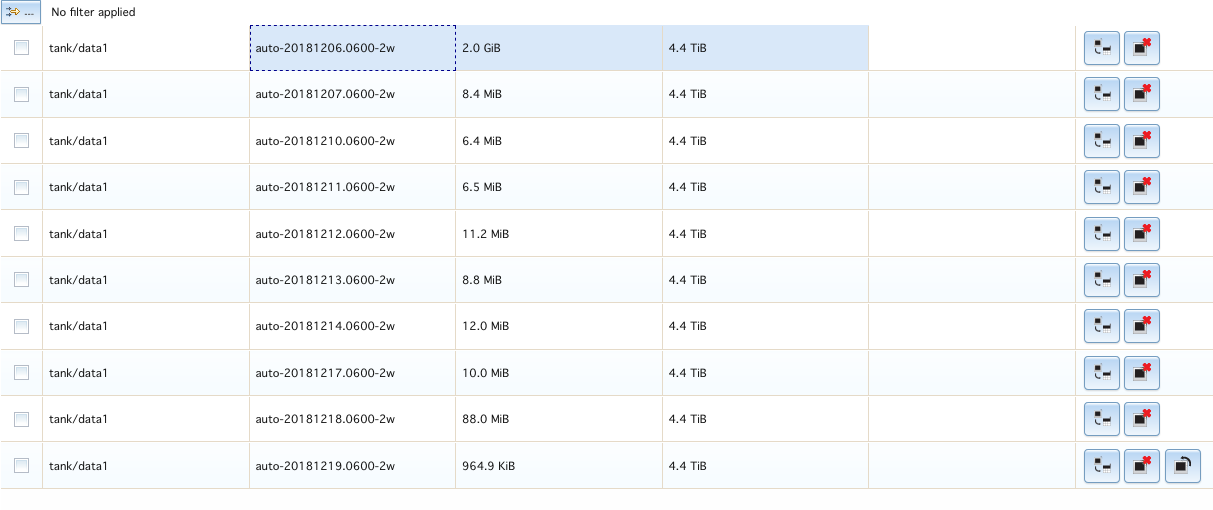

Here are the emails it sends while it's doing this:

The capacity for the volume 'tank' is currently at 94%, while the recommended value is below 80%.

The capacity for the volume 'tank' is currently at 95%, while the recommended value is below 80%.

The capacity for the volume 'tank' is currently at 96%, while the recommended value is below 80%.

Hello,

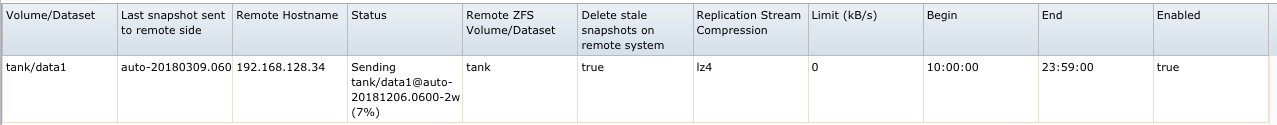

The replication failed for the local ZFS tank/data1 while attempting to send snapshot auto-20181115.0600-2w to 192.168.128.34

I deleted a 900 MiB snapshot that I thought was the cause, but unfortunately the replication has now hung on a different snapshot.

System Information

- Build: FreeNAS-9.10.2-U6 (561f0d7a1)
- Platform: Intel(R) Core(TM) i5-3470T CPU @ 2.90GHz
- Memory: 16252MB
- System Time: Wed Dec 19 13:31:53 PST 2018
- Uptime: 1:31PM up 20 days, 22:14, 0 users
- Load Average: 1.69, 1.81, 2.23

I only set up email alerts yesterday and immediately got emails about a snapshot from November, which means the FreeNAS servers have been using bandwidth on the local network for days now...

I inherited this FreeNAS system from a previous employee who is no longer here, so I'm new to these systems and issues. Please let me know what steps I should take or what direction to go in.
