HARDWARE USED: (see the table further down)

Transferring data TO the FN server initially starts normally ... ramping up from 150MB/s and quickly reaching 500-600MB/s...

After perhaps 10 seconds, however, it starts falling until it reaches 20KB/s, where it either stays or, as the cycles repeat, drops to 0KB/s.

I experimented with pausing the transfer via Win10 to see what occurred upon resuming:

Pausing never happens quickly; the transfer sometimes takes 2+ minutes to fully respond to the pause command.

During this time, especially if the transfer rate had slowed to under 1MB/s or even down to 0KB/s ...

-- I CAN ping the IP and get normal-latency for replies ...

-- after noticing the CPU utilization wasn't updating, I tried to reload the FreeNAS web UI (reloading the page at its IP address) ...

Often it wouldn't load and would eventually reply:

Connecting to FreeNAS ... Make sure the FreeNAS system is powered on and connected to the network.

Once the transfer finishes pausing on Windows, the FreeNAS server becomes responsive again.
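Since ping (ICMP) keeps answering while the web page does not, it may help to probe at the TCP level as well, which is closer to what the browser actually does. A minimal Python sketch, assuming the web UI listens on port 80; the address in the usage comment is a placeholder, not a real one from this setup:

```python
import socket

def tcp_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection raises OSError (including timeouts) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

# Hypothetical usage (placeholder address; port 80 is an assumption):
# print(tcp_reachable("192.168.1.50", 80))
```

If this returns False while ping still replies, the host's network stack is alive but the service is not accepting connections in time, which would point away from a simple cable or switch outage.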

I noticed even higher peak transfer speeds upon resuming if I waited a little longer after the transfer had paused.

After the transfer was resumed...

The slowest 20% of transfers peaked at 225MB/s ... though only for a few seconds before falling again.

The slowest 50% of transfers peaked at 300MB/s ... (same as above)

Peak avg. ±10% of transfers peaked at 375MB/s ... (same as above)

The highest 50% of transfers peaked at 450MB/s ... (same as above)

The highest 15% of transfers peaked at 550MB/s ... (same as above)

The highest 2% of transfers peaked at 1.2GB/s ... (same as above)

That's just to provide an overview of how massive the range is, despite the file sizes all being large ...

Though like everyone, I'd love the fastest of the bunch to be the default -- anything over 300MB/s sustained would've been great, and totally acceptable.

But over the course of no more than 10 seconds after resuming, it'd follow this trend ... before dropping back to just a few KB/s or even 0.

100MB/s, 200MB/s, 300MB/s, 200MB/s, 300MB/s, 500MB/s, 200MB/s, 50MB/s ... 50KB/s ... 0 ...
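A trend like the one above is easier to share if it is logged rather than read off the Explorer dialog. Here is a rough Python sketch that copies one file in chunks and records the rate per interval; the function name, chunk size, and interval are my own choices for illustration. Note that it measures what the application sees, so OS write caching can inflate the first samples.

```python
import time

def copy_with_rate_log(src_path, dst_path, chunk_size=4 * 1024 * 1024, interval=1.0):
    """Copy src to dst in chunks; return a list of (elapsed_seconds, MB_per_second)."""
    samples = []
    start = time.monotonic()
    window_start = start
    window_bytes = 0
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)
            window_bytes += len(chunk)
            now = time.monotonic()
            # Close the current window once `interval` seconds have passed
            if now - window_start >= interval:
                samples.append((now - start, window_bytes / (now - window_start) / 1e6))
                window_start = now
                window_bytes = 0
    # Record whatever is left in the last, partial window
    if window_bytes:
        now = time.monotonic()
        span = max(now - window_start, 1e-9)
        samples.append((now - start, window_bytes / span / 1e6))
    return samples
```

Pointing `dst_path` at a file on the mapped share and printing the returned samples would give a timestamped version of the ramp-up and collapse.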

While the transfer rates were at their lowest,

a local read/write test on the (source) NVMe still yielded 2,600MB/s ... for 16GB tests ...

ping tests yielded the same results as when it was working ... BUT:

Because the CPU utilization wasn't refreshing quickly, I reloaded the web page ... only to get:

Connecting to FreeNAS ... Make sure the FreeNAS system is powered on and connected to the network.

The IP / web page would resume responding once the Windows pause command had finally taken effect ...

At times when the web page was accessible while I waited for the transfer to finish pausing,

the CPU utilization dropping (below 5-10%) would coincide with the transfer pausing ...

And as mentioned above ... the FreeNAS server pings just fine even when its web page can't be reached, and that happens even before the transfer rate hits zero!

(though, it's difficult to orchestrate some of these tests to determine causation.)

Last night the transfer behavior was a little different.

It'd start quickly, slow down (as mentioned) ... but it'd power through 3-15GB files (even if only at 1-2MB/s) ...

But it would start each NEW file ... by jumping up to 500-600MB/s for a few seconds before running through the remainder of the file slowly.

I was awake long enough to catch two issues which required my skipping the file for some reason, but when I woke up, it'd completed.

The files I transferred in the description at the top were smaller (like 300MB - 1.5GB) ...

In those brief peaks between ~500MB/s and 1.2GB/s, the CPU utilization would range from 45-60% ...

However, the graphs for temperature, IO, and disk temperatures all showed 'pretty low' utilization and low temperatures, and stayed FLAT throughout.

I feel bad having such an absurdly RIDICULOUS set of symptoms and questions. I cannot IMAGINE practically searching for someone who's had similar results ... and I'm skeptical that anyone's ever had this same exact issue. :) I'll be AMAZED if anyone has any idea WTH this is.

Thanks to anyone and everyone who's taken the time to read any of this. I really have done my best to describe it ... but it's so WEIRD, to say the least.

I'll continue checking to see if it's another layer at fault

(the switch, a cable, a CPU, cooling, something... I have no idea)...

...and will update this thread accordingly. Thanks!
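One way to check the network layer on its own (switch, DAC cable, NICs), without disks or file sharing involved, is a raw TCP throughput test between the two machines. iperf3 is the usual tool for that; the sketch below is only a minimal Python stand-in, and the function names and any port you pick are hypothetical:

```python
import socket
import time

def run_sink(server_sock):
    """Accept one connection on a listening socket and discard everything received."""
    conn, _ = server_sock.accept()
    with conn:
        while conn.recv(1 << 20):
            pass

def send_and_measure(host, port, total_bytes, chunk_size=1 << 20):
    """Send total_bytes of zeros to host:port; return MB/s as seen by the sender."""
    payload = bytes(chunk_size)
    sent = 0
    start = time.monotonic()
    with socket.create_connection((host, port)) as sock:
        while sent < total_bytes:
            n = min(chunk_size, total_bytes - sent)
            sock.sendall(payload[:n])
            sent += n
    elapsed = max(time.monotonic() - start, 1e-9)
    return sent / elapsed / 1e6
```

The receiving machine would bind and listen on a socket and pass it to `run_sink`, while the sending machine calls `send_and_measure` with a large `total_bytes`. If this stays fast for minutes while file copies still collapse, the switch and cable become less likely suspects.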

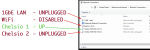

| FreeNAS 11.3 U4.1 -- Dell T620 PowerEdge | Networking equipment between them | Client: Windows 10 Pro |
| --- | --- | --- |
| Xeon E5 1.8GHz QC v2 | L3 routing + wireless AP: Airport Extreme | Intel i7-8700k |
| 48GB ECC 1333 | L2 10Gb switch: D-Link SFP+ | 32GB DDR4 2600MHz RAM |
| 8x 10TB IBM-HGST 7.2k | SFP+ (Cisco) DAC cable | WD Black NVMe (easily sustains 2+ GBps) |
| NIC: Chelsio T520-SO-CR (SFP+) | NIC: Chelsio T520-SO-CR (SFP+) also ... | |
