Hello, this is my first time using TrueNAS.

I'm currently running TrueNAS-SCALE-21.08-BETA.2 (the latest version at the moment), kernel 5.10.42+truenas.

My setup consists of an MSI MAG B550M Mortar WiFi with a Ryzen 7 3700X.

The motherboard has one PCIe x16 slot (Gen4/Gen3) configured as x4x4x4x4 with a four-drive NVMe adapter; those four NVMe drives are set up in a pool. It also has a PCIe x8 Gen3 slot, where I have a GTX 690, and two PCIe x1 Gen3 slots, one of them occupied by a Quadro P2000 (I've tried both x1 slots).

What I would like to do is use the Quadro on the host, so the different containers have access to it, and pass the GTX through to a VM.

Now the issue:

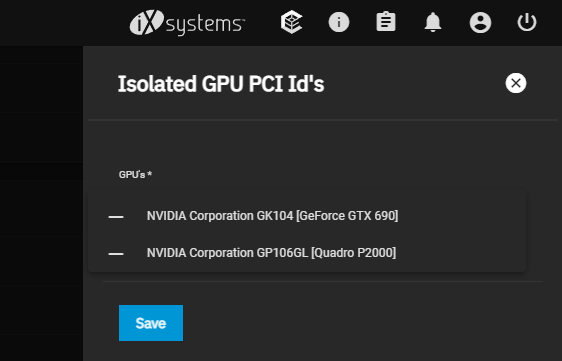

On the advanced menu, in the isolation section, the GPUs are correctly listed.

The isolation seems to work as executing

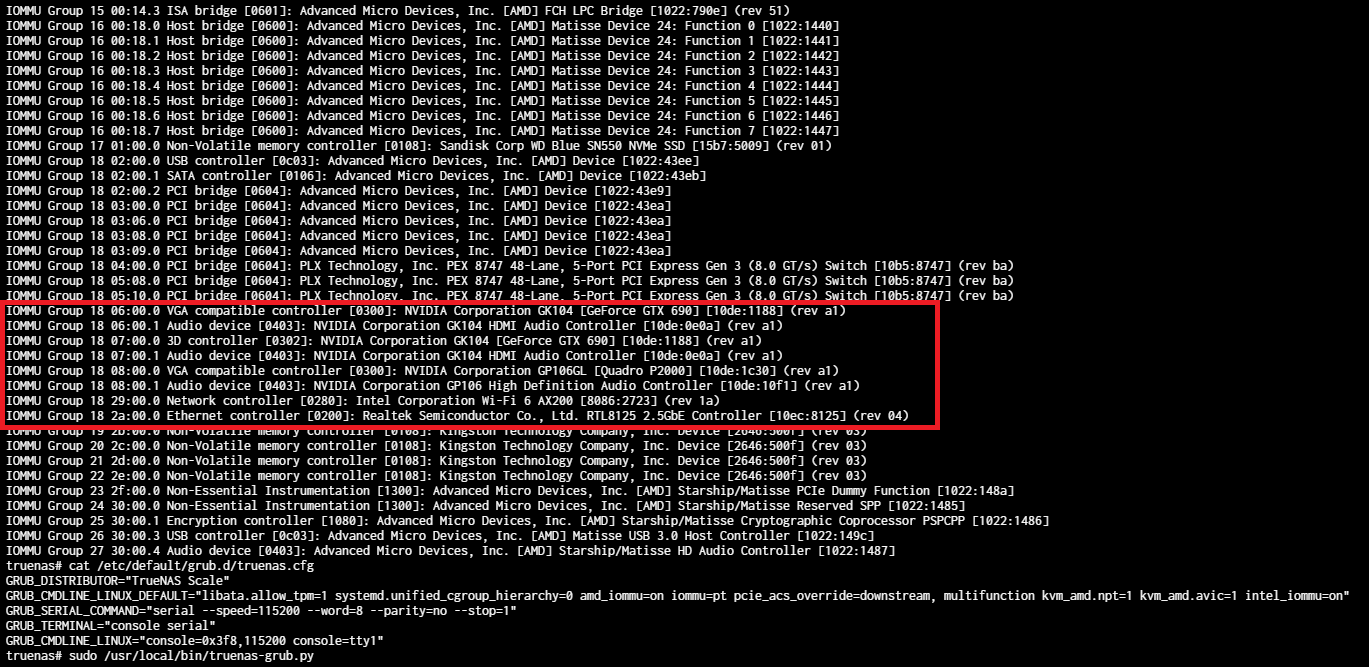

However, we can see that both GPUs, with a lot of other random things, are in the same IOMMU group.

(the gtx 690 appears twice becasue its a dual chip GPU)

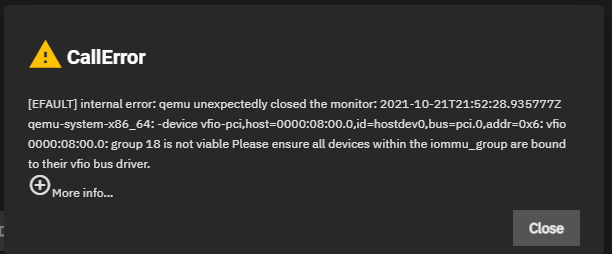

And both GPUs being in the same IOMMU group 18 is giving me this error when trying to use the isolated GPU on a VM:

Full error code:

I've tried to break down the IOMMU groups adding

After rebooting the flag is added correctly to the grub file "/etc/default/grub.d/truenas.cfg", but the IOMMU groups do not change.

Is there something I'm doing wrong?

Is there something else that I can try to break down the IOMMU groups?

I've used Manjaro in this very same motherboard in the past and I don't remember having so many things in the same IOMMU group, at the time I was using a GTX 1080 and a Quadro p620 I was able to passtrough one GPU to a VM.

I hope someone can bring some light to this.

Thanks in advance!

Lucas VT.

I'm currently using the version TrueNAS-SCALE-21.08-BETA.2 (latest version at the moment.) kernel 5.10.42+truenas

My setup consists of a MSI mag B550m mortar wifi with a r7 3700x.

The motherboard has 1 PCIx16 (gen4/gen3) configured as x4x4x4x4 with a nvme x4 adapter, those 4 nvmes are set up in a pool. It also has a PCI x8 gen3 where I have a GTX 690 and two PCIx1 gen3, one of them occupied by a Quadro p2000 (I've tried both PCIx1 ports).

What I would like to do is to use the Quadro for the host so the different containers have access to it and passtrough the GTX to a VM.

Now the issue:

On the advanced menu, in the isolation section, the GPUs are correctly listed.

The isolation seems to work as executing

nvidia-smi shows only the non isolated GPUs, so I understand that the IOMMU group is ready to passtrough to a VM.However, we can see that both GPUs, with a lot of other random things, are in the same IOMMU group.

(the gtx 690 appears twice becasue its a dual chip GPU)

And both GPUs being in the same IOMMU group 18 is giving me this error when trying to use the isolated GPU on a VM:

Full error code:

    Error: Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor_base.py", line 174, in start
        if self.domain.create() < 0:
      File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create
        raise libvirtError('virDomainCreate() failed')
    libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2021-10-21T21:55:36.338385Z qemu-system-x86_64: -device vfio-pci,host=0000:08:00.0,id=hostdev0,bus=pci.0,addr=0x6: vfio 0000:08:00.0: group 18 is not viable
    Please ensure all devices within the iommu_group are bound to their vfio bus driver.

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 150, in call_method
        result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self,
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1262, in _call
        return await methodobj(*prepared_call.args)
      File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1182, in nf
        return await func(*args, **kwargs)
      File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1092, in nf
        res = await f(*args, **kwargs)
      File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 47, in start
        await self.middleware.run_in_thread(self._start, vm['name'])
      File "/usr/lib/python3/dist-packages/middlewared/utils/run_in_thread.py", line 10, in run_in_thread
        return await self.loop.run_in_executor(self.run_in_thread_executor, functools.partial(method, *args, **kwargs))
      File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run
        result = self.fn(*self.args, **self.kwargs)
      File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 62, in _start
        self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name))
      File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor_base.py", line 183, in start
        raise CallError('\n'.join(errors))
    middlewared.service_exception.CallError: [EFAULT] internal error: qemu unexpectedly closed the monitor: 2021-10-21T21:55:36.338385Z qemu-system-x86_64: -device vfio-pci,host=0000:08:00.0,id=hostdev0,bus=pci.0,addr=0x6: vfio 0000:08:00.0: group 18 is not viable
    Please ensure all devices within the iommu_group are bound to their vfio bus driver.

I've tried to break up the IOMMU groups by adding pcie_acs_override=downstream, pcie_acs_override=multifunction, and pcie_acs_override=downstream,multifunction (one at a time, not together) to the Python script "/usr/local/bin/truenas-grub.py".

After rebooting, the flag is added correctly to the GRUB file "/etc/default/grub.d/truenas.cfg", but the IOMMU groups do not change.
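One thing that might be worth ruling out is whether the running kernel actually received the parameter, since seeing it in the GRUB config file does not by itself prove that. The following is a minimal sketch, assuming a standard Linux `/proc/cmdline`; the helper name `acs_override_values` is made up for illustration and is not part of TrueNAS. As far as I know, `pcie_acs_override` comes from an out-of-tree kernel patch, so a kernel built without that patch would likely ignore the parameter even when it does show up here.

```python
from pathlib import Path


def acs_override_values(cmdline: str) -> list[str]:
    """Return the comma-separated values of every pcie_acs_override= token."""
    values = []
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        if key == "pcie_acs_override" and sep:
            values.extend(v for v in value.split(",") if v)
    return values


if __name__ == "__main__":
    # /proc/cmdline holds the parameters the running kernel was booted with.
    cmdline_path = Path("/proc/cmdline")
    if cmdline_path.exists():
        found = acs_override_values(cmdline_path.read_text())
        if found:
            print("pcie_acs_override on the running kernel:", ", ".join(found))
        else:
            print("pcie_acs_override is not on the running kernel's command line")
    else:
        print("/proc/cmdline not found (not a Linux system?)")
```

If the parameter is listed and the groups still do not change, that would point at the kernel not implementing the override rather than at the GRUB configuration.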

Is there something I'm doing wrong?

Is there something else that I can try to break down the IOMMU groups?

I've used Manjaro on this very same motherboard in the past and I don't remember having so many devices in the same IOMMU group. At the time I was using a GTX 1080 and a Quadro P620, and I was able to pass one GPU through to a VM.
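To compare the grouping between the two systems in text form rather than from screenshots, a small sketch like the one below could dump every IOMMU group together with the driver each device is bound to. It assumes the usual Linux sysfs layout under `/sys/kernel/iommu_groups`; the function name `iommu_groups` and the `sysfs_root` parameter are hypothetical and only there to make the example testable. For the group the VM complains about (18 here), any device whose driver is not `vfio-pci` would be a candidate for what the "not viable" message refers to.

```python
from pathlib import Path


def iommu_groups(sysfs_root="/sys"):
    """Map IOMMU group number -> list of (PCI address, bound driver or None)."""
    groups = {}
    base = Path(sysfs_root) / "kernel" / "iommu_groups"
    if not base.is_dir():
        return groups  # IOMMU disabled, or not a Linux sysfs tree
    for group_dir in base.iterdir():
        if not group_dir.name.isdigit():
            continue
        devices = []
        devices_dir = group_dir / "devices"
        if devices_dir.is_dir():
            for dev in sorted(devices_dir.iterdir()):
                # "driver" is a symlink to the bound driver's directory;
                # it is absent when no driver is bound to the device.
                driver_link = dev / "driver"
                driver = driver_link.resolve().name if driver_link.exists() else None
                devices.append((dev.name, driver))
        groups[int(group_dir.name)] = devices
    return groups


if __name__ == "__main__":
    for number, devices in sorted(iommu_groups().items()):
        print(f"IOMMU group {number}:")
        for address, driver in devices:
            print(f"  {address}  driver={driver or 'none'}")
```

Running it on both distributions with the same cards installed would show whether the groups really differ or whether only the slot layout changed.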

I hope someone can shed some light on this.

Thanks in advance!

Lucas VT.