danb35

The NIC arrived today, and in addition to the part numbers shown in the seller's photos, there's also a model number that wasn't visible: MCQH29-XFR. Searching for that led me to a manual:

www.manualslib.com

It appears this card was originally sold for the C6100, a predecessor to the C6220. But the manual appears to confirm that the card does Ethernet at 10 Gbit/sec, which is a good sign. Let's see if I can make it work.

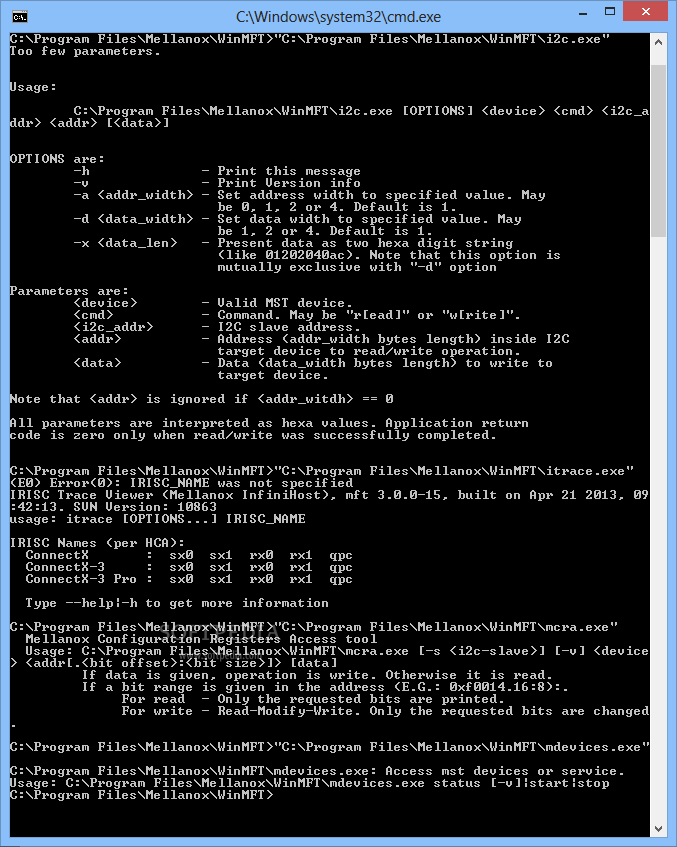

Edit: First, it physically fits, which is a good start. Second, Windows recognizes it:

I have a QSFP+-to-SFP+ adapter on the way, so I'll check for network connection when it gets here.

DELL CONNECTX MCQH29-XDR USER MANUAL Pdf Download

View and Download Dell ConnectX MCQH29-XDR user manual online. Dual Port I/O Card for Dell C6100. ConnectX MCQH29-XDR i/o systems pdf manual download.
