So I'm completely new to FreeNAS, and other than some exposure to the community edition of Nexenta, I haven't touched a Unix variant in 15 years. That's simply the result of being in senior management and having every bit of technical expertise sucked right out of my head by budget spreadsheets and strategic plans. I embarked on this project because I have come to loathe EMC. They make a great product, and it works wonderfully well, but we have over 100k tied up in EMC, and expanding our storage to meet our needs over the next 2-4 years will cost us another 100k. I'm also proving to my technical team that the boss still has some skills left and isn't simply a pencil pusher. (This is the fun part of the exercise.)

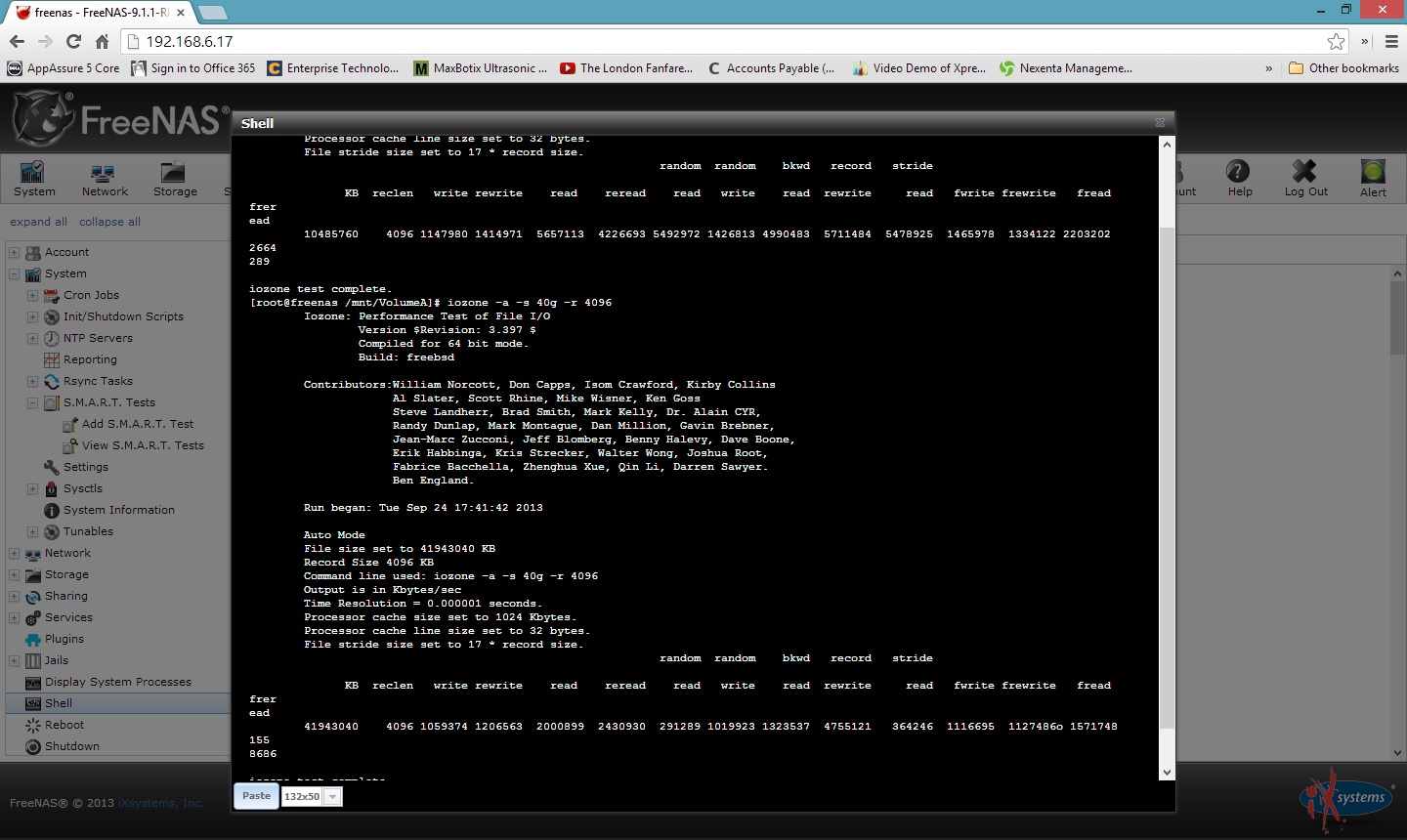

What I want to build is a high-performance datastore that will host our VMware View desktops for significantly less than the cost of expanding our EMC SAN. I think we may have something that could work, but I wanted the community's opinion. The system specs are below, and the screenshot shows two iozone runs (the first at 10GB and the second at 40GB).

What do you think? Can this handle 80 typical Windows desktops running in View? What other tests would give me a better feel for the system's capabilities?

1 x Dual-socket server board X9DRi-F

2 x Intel Xeon E5-2609 4-core 2.4GHz, 10MB cache, LGA2011

8 x 4GB 1600MHz DDR3 ECC RDIMM (32GB total)

4 x Crucial M500 480GB 2.5" SATA 6Gb/s SSD

8 x Seagate Constellation ES.3 3TB 3.5" SATA 6Gb/s 7200RPM hard drive

1 x LSI Nytro MegaRAID 8100-4i RAID controller with 100GB SLC NAND flash

1 x Intel X540-T2 10GbE 2-port RJ45 copper card

We're using the onboard SLC flash on the MegaRAID as the SLOG device and the four Crucial SSDs for 1.7TB of L2ARC (this part is ridiculous, I know).
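One way to see why the L2ARC looks oversized next to 32GB of RAM is a rough sizing estimate. The sketch below assumes every cached L2ARC block costs about `HEADER_BYTES` of ARC memory; the 180-byte figure and the two block sizes are illustrative assumptions only (the real per-block overhead depends on the ZFS version, and the block size depends on how the datastore is shared).

```python
# Rough L2ARC sizing for the build described above.
# Drive count, drive size and RAM come from the spec list; HEADER_BYTES and
# the block sizes are ASSUMPTIONS for illustration, not measured values.

SSD_COUNT = 4
SSD_BYTES = 480 * 10**9      # Crucial M500 480GB (decimal GB, as marketed)
RAM_BYTES = 32 * 2**30       # 8 x 4GB RDIMMs
HEADER_BYTES = 180           # ASSUMPTION: ARC memory per cached L2ARC block; varies by ZFS version


def l2arc_capacity_bytes(count: int, size: int) -> int:
    """Raw L2ARC capacity when every SSD is added as a cache device."""
    return count * size


def header_overhead_bytes(l2arc_bytes: int, block_bytes: int,
                          header_bytes: int = HEADER_BYTES) -> float:
    """Approximate RAM used by L2ARC headers if the cache fills with blocks of block_bytes."""
    return l2arc_bytes / block_bytes * header_bytes


if __name__ == "__main__":
    l2arc = l2arc_capacity_bytes(SSD_COUNT, SSD_BYTES)
    print(f"L2ARC capacity: {l2arc / 2**40:.2f} TiB")
    # Hypothetical small (8KiB) versus large (128KiB) cached blocks
    for block in (8 * 2**10, 128 * 2**10):
        overhead = header_overhead_bytes(l2arc, block)
        print(f"{block // 2**10:>4} KiB blocks: ~{overhead / 2**30:.1f} GiB of RAM "
              f"({overhead / RAM_BYTES:.0%} of installed RAM)")
```

Under these assumptions, a cache full of 8KiB blocks would need roughly 39 GiB of RAM just for headers, more than the box has, while 128KiB blocks would need about 2.5 GiB.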

Six of the 3TB drives are set up in RAIDZ2, and the other two are spares for now. (I wanted to put all eight in the RAIDZ2, but FreeNAS barked that it was not an optimal configuration, so they are set up this way for testing.)
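The trade-off between the six-drive and eight-drive layouts can be sketched with simple arithmetic. This assumes the FreeNAS warning reflects the widely cited rule of thumb that a RAIDZ vdev should have a power-of-two number of data disks (total drives minus parity drives); the capacities are raw figures before ZFS metadata, padding and free-space headroom.

```python
# Rough RAIDZ2 layout comparison for the 3TB Constellation drives.
# ASSUMPTION: the "not optimal" warning is based on the power-of-two
# data-disk rule of thumb (data disks = total drives - parity drives).

DRIVE_TB = 3   # Seagate Constellation ES.3 3TB (decimal TB)
PARITY = 2     # RAIDZ2 tolerates two drive failures


def data_disks(total: int, parity: int = PARITY) -> int:
    """Number of drives' worth of space left for data after parity."""
    return total - parity


def is_power_of_two(n: int) -> bool:
    return n > 0 and n & (n - 1) == 0


def usable_tb(total: int, drive_tb: int = DRIVE_TB, parity: int = PARITY) -> int:
    """Raw data capacity before ZFS metadata, padding and free-space headroom."""
    return data_disks(total, parity) * drive_tb


if __name__ == "__main__":
    for total in (6, 8):
        d = data_disks(total)
        verdict = "matches" if is_power_of_two(d) else "breaks"
        print(f"{total} drives: {d} data disks, ~{usable_tb(total)} TB raw, "
              f"{verdict} the power-of-two rule")
```

With these assumptions, six drives give four data disks (about 12TB raw) and satisfy the rule, while eight drives give six data disks (about 18TB raw) and do not.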

Thanks

Steve
