Greetings, all.

I am a first-time poster, years-long lurker...

My FreeNAS environment is intended to host family media services as well as various work-related projects with which I am continually engaged. I am, for example, thrilled that I can get a RancherOS node + RancherUI spun up in minutes without tons of setup.

Here's the setup right now:

FreeNAS Box:

Dell R710

2 x X5670 CPU

144GB DDR3 ECC

Redundant 870W PSUs

2 x 128GB SSD (currently mirrored for boot only, since I can't sub-partition in FreeNAS without the FreeNAS kid gloves wrecking my config; I would love to use these as L2ARC and the NVMe drive as SLOG)

1 x 128GB Corsair MP500 NVMe drive - L2ARC

1 x 6Gb SAS HBA (4 ports) for future external enclosure

1 x QLogic QLE8152 CNA for future 10Gb network

1 x QLogic QLE2562 (probably replaced with a 2564 soon) for FC connectivity to servers (yes, yes... I know...)

6 x 2TB Enterprise SATA disks

Supported by Liebert UPS capable of running the box for a theoretical ~60 minutes.

ESXi Hosts:

1 x R610 - 2 Procs, 144GB Memory, local mirrored boot disks, 4Gb FC card currently connected with one port.

1 x R710 - 2 Procs, 144GB Memory, local mirrored boot disks, 4Gb FC card currently connected with one port.

Neither of these is battery-backed.

Before anyone asks, I've spent very little on this setup. It's all been EOL gear either donated or picked up for a song... except for the solid state drives, of course. It's also not particularly loud in a house with an energetic Great Dane and a 4-year-old little girl who thinks she's constantly "on stage" or "on an adventure". :)

One interesting tidbit is that I recently rebuilt this box because the PCIe SSD I had been using (not an NVMe card... an SLC NAND card with an ATA controller on it, ugh) completely shit the bed and took the box with it. Lost a little data but was able to bounce back.

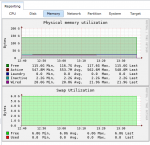

I'm posting because I can't seem to crack the code to get (what I think are) appropriate performance figures out of the box. I am perfectly fine with upwards of 100GB of memory being devoted to write caching and with the NVMe drive being thrashed into oblivion and needing to be replaced in a year or less, and I am willing to accept the risk of in-flight data loss in the event of hardware failure. I may even start backing this box up to my Azure or GCP accounts.

Is anyone willing to help me work through these configuration challenges to try and squeeze some more performance out of this gear?

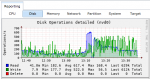

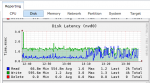

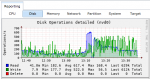

I am not sure which benchmarks or metrics are most relevant here, so I will start with some basic storage guy test stuff... Here are the charts from a 4K random-write, LBA-aligned, 128QD Iometer session running on a W10 VM. This VM is living on a datastore derived from a zvol presented over 4Gb Fibre Channel. I started the test probably 20 minutes ago. Prior to that, I was doing a similar test with a 50/50 R/W ratio. I am getting a very underwhelming 200-400 IOPS at latencies that are just... awful: 2-200 ms.
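For a sense of scale, here is a rough back-of-the-envelope sketch of what those figures imply. It assumes 4 KiB I/Os and that all 128 outstanding I/Os really are in flight end to end, in which case Little's law gives average latency as queue depth divided by IOPS. The function names are made up for illustration and nothing here is measured.

```python
def throughput_mib_s(iops: float, block_bytes: int = 4096) -> float:
    """Throughput in MiB/s for a given IOPS rate and I/O size."""
    return iops * block_bytes / (1024 * 1024)


def implied_latency_ms(queue_depth: int, iops: float) -> float:
    """Average per-I/O latency implied by Little's law (L = lambda * W),
    assuming the queue stays full for the whole run."""
    return queue_depth / iops * 1000.0


# The 200-400 IOPS range reported above, at 4 KiB and QD 128.
for iops in (200, 400):
    print(
        f"{iops} IOPS -> {throughput_mib_s(iops):.2f} MiB/s, "
        f"~{implied_latency_ms(128, iops):.0f} ms implied at QD128"
    )
```

If the queue really is full, the implied average latency is well above the 2-200 ms shown in the charts, so the charted latency may be measured at a different layer than the guest, or the effective queue depth reaching the array may be lower than 128. Either way, the throughput works out to under 2 MiB/s, nowhere near what a 4Gb FC link can carry.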

I have the pool defaulted to `sync: disabled` and `compression: LZ4`. The FC volume is not overriding that.

I've also included screenshots of the tunables page and my init scripts. Note that the autotuner is not currently enabled. I have overridden the values there in a couple of spots. (whoops, I'll put those in a reply)

Thanks in advance!
