Good morning community ... I'm new to the FreeNAS ZFS world, so please take it easy on me as I ask for some advice. I will preface this by saying I have spent the last several days doing all sorts of performance testing, along with digging into the forums here to try to gain some insight into my questions, but I'm coming up empty at this point, so I am reaching out.

First, a quick punch list of my FreeNAS build.

Dell C6100

2 x Intel E5672 Xeon Procs

96GB RAM

1015M controller card flashed with V20

XX2X2 daughter card flashed with V20

10 x Seagate ES.2 3TB 7200 SATA Enterprise Drives

- 8 Drives setup as RAIDZ2 (connected to 1015M controller card)

- 2 Drives setup as Hot Spares (connected to ports 1-2 of XX2X2 daughter card)

2 x Intel S3700 100GB SSD (connected to ports 3-4 of XX2X2 daughter card)

- 10GB Partition on Each, Mirrored ZFS Partition for SLOG

- 12GB Partition on Each, FreeBSD Swap Partitions for SWAP

- 78GB Partition on Each, ZFS Partitions for L2ARC

2 x Intel NIC Interfaces, setup with LACP, terminated to a Cisco switch

Okay, that should do it ... Now you have at least a very basic baseline of where I'm coming from.
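For anyone wanting the capacity math on that pool layout, here is a quick sketch. It ignores ZFS metadata, padding, and the TB/TiB difference, so treat the result as an upper bound; `raidz_usable_tb` is just a helper name I made up for illustration.

```python
def raidz_usable_tb(drives, parity, drive_tb):
    """Raw usable capacity of one RAIDZ vdev, before any ZFS overhead."""
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (drives - parity) * drive_tb


if __name__ == "__main__":
    # 8 x 3TB drives in RAIDZ2 (parity = 2), as in the build list above.
    print(raidz_usable_tb(drives=8, parity=2, drive_tb=3), "TB before overhead")
```

The two hot spares are not counted, since they hold no data until they replace a failed drive.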

Some other high-level info about my environment: I have about 10 ESXi 5.5 hosts, each of which has 2 NICs (active/passive) for the guest network traffic, and then 2 NICs set up as active/active for storage, with MPIO configured.

For the past several years, my storage backends have been based on Windows 2012 Storage Server, providing iSCSI to the hosts. The two storage servers I have both use LSI 9265 RAID controllers. The drive arrays are set up as RAID 6 with a hot spare on one box, and RAID 60 with no hot spare on the other. Both systems have 12 of the same drives in them (Seagate ES.2), so the drive type is apples to apples with the new FreeNAS build I have created.

For both the Windows storage servers and the FreeNAS box, I have a port-channel defined on the switch, with LACP as the protocol. The Windows storage servers and the FreeNAS box are themselves set up with LACP as well.

Again, as a note, on the ESXi host side I have MPIO configured properly, with round robin set up and the IOPS limit set to 1.

Okay, sorry for being so long winded, but I wanted to make sure that all the details of my current configuration were out there!

Down to the problem ... I have a test guest VM (Windows 8.1, 6GB RAM, 4 Procs, 2 x 40GB Hard Disks) and have installed CrystalDiskMark 5, ATTO Disk Benchmark, and HD Tune Pro for benchmarking. Guest ethernet connectivity is turned off, so we are just getting basic benchmark activity on the machines.

(Side background: I have 3 ESXi hosts, all running 96GB of RAM, all with E5672 procs, all idle except for this VM build, again making sure we are apples to apples. The ONLY thing that isn't apples to apples is that the FreeNAS box has zero traffic hitting it, as it's a new build, whereas my other two Windows Storage Servers are serving up VMs for about 25 guests, which clearly means their results could be degraded. I understand that going into testing.)

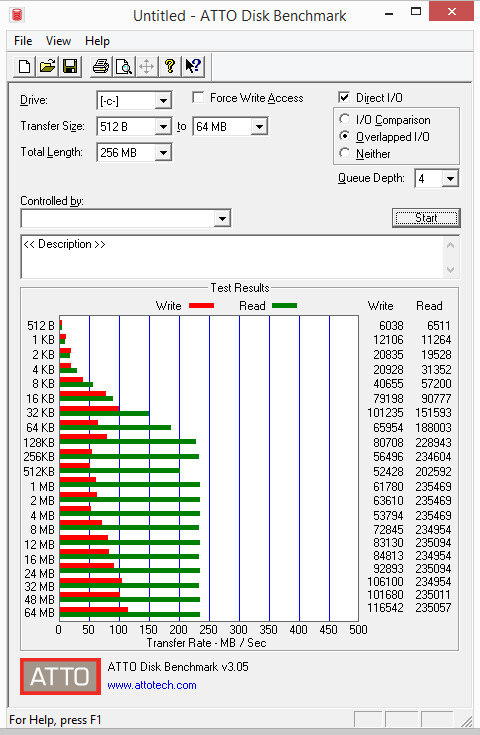

Okay, so here are the performance numbers I am seeing from ATTO. What you will see is that while READ access to FreeNAS+iSCSI is able to sustain about 235MB/sec, which is expected, because the network transit to the box is only 2Gbit, the write performance is only hitting between 60-80MB/sec.
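As a rough sanity check on that read ceiling, the line rate of the LACP pair can be converted to MB/sec. This is only a sketch: the 6% overhead figure is my assumption for Ethernet/IP/TCP/iSCSI framing, not something I measured, and `link_ceiling_mb_per_sec` is just a helper name for illustration.

```python
def link_ceiling_mb_per_sec(links, gbit_per_link=1.0, overhead=0.06):
    """Approximate usable throughput of `links` aggregated links, in MB/sec.

    `overhead` is an assumed fraction lost to Ethernet/IP/TCP/iSCSI framing.
    """
    raw_mb_per_sec = links * gbit_per_link * 1000 / 8  # 1 Gbit/s = 125 MB/s
    return raw_mb_per_sec * (1 - overhead)


if __name__ == "__main__":
    for links in (1, 2):
        ceiling = link_ceiling_mb_per_sec(links)
        print(f"{links} x 1Gbit: roughly {ceiling:.1f} MB/sec usable")
```

With those assumptions, two links come out right around the 235MB/sec I see on reads, and a single link would land a little under 120MB/sec. The 60-80MB/sec writes are well below either figure.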

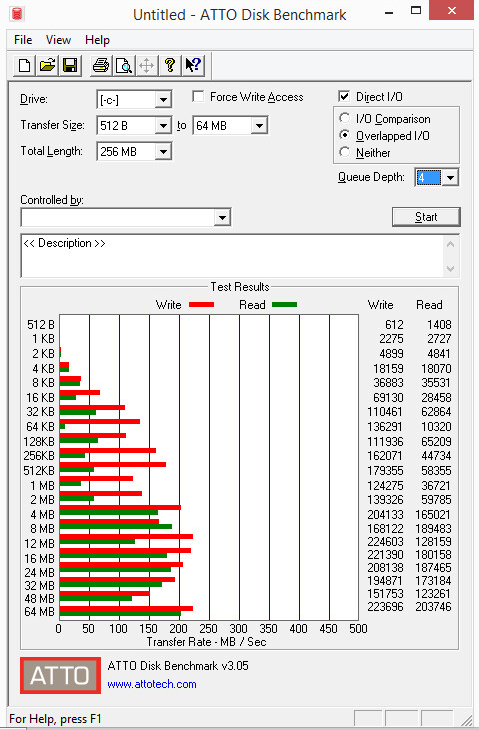

For comparison purposes, here are the results from one of my two guests running on the Windows Storage Servers over iSCSI. I'm only including one of the two, since the results are similar.

I have been doing forum digging, and honestly I can't seem to find anything that would indicate where my problem might reside, or if I even "have" a problem. I can only base my suspicions on the results I'm seeing.

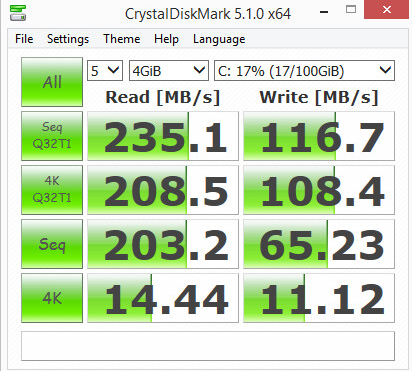

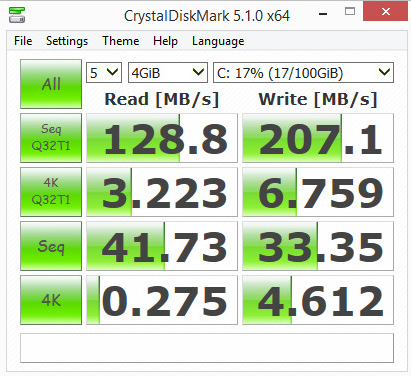

On a side note, I've also run CrystalDiskMark on both machines, and see some strange results.

These results are a little strange, because they show read results all over the map for the Windows Storage Server setup. That probably means there are other issues on the Windows Storage Server platform, but I'm not here asking for help on that; I'm simply trying to isolate performance on FreeNAS. Again, looking at the first line, you can see we are getting write performance on Windows of 207MB/sec, but on FreeNAS I can only get 108MB/sec, and remember, that's an idle array, doing nothing.

And then, in trying to determine if the underlying file system is the bottleneck, I ran a basic test directly on the FreeNAS box itself. The results might be showing me that the underlying file system is where the write bottleneck is.

[root@freenas] /mnt/VOL-01# iozone -M -e -+u -T -t 32 -r 128k -s 40960 -i 0 -i 1 -i 2 -i 8 -+p 70 -C

Iozone: Performance Test of File I/O

Version $Revision: 3.420 $

Compiled for 64 bit mode.

Build: freebsd

Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins

Al Slater, Scott Rhine, Mike Wisner, Ken Goss

Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,

Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,

Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy, Dave Boone,

Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root,

Fabrice Bacchella, Zhenghua Xue, Qin Li, Darren Sawyer,

Vangel Bojaxhi, Ben England, Vikentsi Lapa.

Run began: Mon Mar 28 06:15:07 2016

Machine = FreeBSD freenas.onlinespamsolutions.com 10.3-RELEASE FreeBSD 10.3-RE Include fsync in write timing

Include fsync in write timing

CPU utilization Resolution = 0.000 seconds.

CPU utilization Excel chart enabled

Record Size 128 KB

File size set to 40960 KB

Percent read in mix test is 70

Command line used: iozone -M -e -+u -T -t 32 -r 128k -s 40960 -i 0 -i 1 -i 2 -i 8 -+p 70 -C

Output is in Kbytes/sec

Time Resolution = 0.000001 seconds.

Processor cache size set to 1024 Kbytes.

Processor cache line size set to 32 bytes.

File stride size set to 17 * record size.

Throughput test with 32 threads

Each thread writes a 40960 Kbyte file in 128 Kbyte records

Children see throughput for 32 initial writers = 881455.50 KB/sec

Parent sees throughput for 32 initial writers = 175462.69 KB/sec

Min throughput per thread = 17009.57 KB/sec

Max throughput per thread = 58323.66 KB/sec

Avg throughput per thread = 27545.48 KB/sec

Min xfer = 11520.00 KB

CPU Utilization: Wall time 3.526 CPU time 37.525 CPU utilization 1064.15 %

Children see throughput for 32 rewriters = 481988.43 KB/sec

Parent sees throughput for 32 rewriters = 357642.57 KB/sec

Min throughput per thread = 11176.98 KB/sec

Max throughput per thread = 135210.06 KB/sec

Avg throughput per thread = 15062.14 KB/sec

Min xfer = 40960.00 KB

CPU utilization: Wall time 3.665 CPU time 53.569 CPU utilization 1461.76 %

Children see throughput for 32 readers = 20502240.03 KB/sec

Parent sees throughput for 32 readers = 19000155.28 KB/sec

Min throughput per thread = 1178280.88 KB/sec

Max throughput per thread = 1309782.25 KB/sec

Avg throughput per thread = 640695.00 KB/sec

Min xfer = 36608.00 KB

CPU utilization: Wall time 0.502 CPU time 13.718 CPU utilization 2732.44 %

Children see throughput for 32 re-readers = 20769397.02 KB/sec

Parent sees throughput for 32 re-readers = 19369242.03 KB/sec

Min throughput per thread = 1311409.00 KB/sec

Max throughput per thread = 1325271.75 KB/sec

Avg throughput per thread = 649043.66 KB/sec

Min xfer = 25216.00 KB

CPU utilization: Wall time 0.498 CPU time 7.064 CPU utilization 1419.41 %

Children see throughput for 32 random readers = 20194862.50 KB/sec

Parent sees throughput for 32 random readers = 16348396.82 KB/sec

Min throughput per thread = 0.00 KB/sec

Max throughput per thread = 2476420.25 KB/sec

Avg throughput per thread = 631089.45 KB/sec

Min xfer = 0.00 KB

CPU utilization: Wall time 0.252 CPU time 2.535 CPU utilization 1003.85 %

Children see throughput for 32 mixed workload = 2077540.47 KB/sec

Parent sees throughput for 32 mixed workload = 296201.30 KB/sec

Min throughput per thread = 94.94 KB/sec

Max throughput per thread = 1006437.50 KB/sec

Avg throughput per thread = 64923.14 KB/sec

Min xfer = 128.00 KB

CPU utilization: Wall time 2.258 CPU time 20.343 CPU utilization 900.91 %

Children see throughput for 32 random writers = 290536.01 KB/sec

Parent sees throughput for 32 random writers = 104919.13 KB/sec

Min throughput per thread = 2751.82 KB/sec

Max throughput per thread = 90470.99 KB/sec

Avg throughput per thread = 9079.25 KB/sec

Min xfer = 8960.00 KB

CPU utilization: Wall time 6.612 CPU time 51.601 CPU utilization 780.38 %

Based on this result, I'm almost thinking the write performance is actually a problem on the local file system. Can anyone shed some light on this?
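To put the iozone numbers into the same units as the ATTO results, here is a small sketch that converts some of the "Parent sees" figures to MB/sec and works out how much data the test actually touched. The values are copied from the run above; treating 1 KB as 1024 bytes is my assumption about iozone's units.

```python
THREADS = 32        # -t 32
FILE_KB = 40960     # -s 40960, per-thread file size in KB
RAM_GB = 96         # RAM installed in the FreeNAS box

# "Parent sees" throughput copied from the iozone output, in KB/sec.
PARENT_KB_PER_SEC = {
    "initial writers": 175462.69,
    "rewriters": 357642.57,
    "readers": 19000155.28,
    "random writers": 104919.13,
}


def kb_to_mb(kb):
    """Convert KB to MB, assuming 1 MB = 1024 KB."""
    return kb / 1024


def working_set_mb(threads=THREADS, file_kb=FILE_KB):
    """Total data touched by all threads, in MB."""
    return kb_to_mb(threads * file_kb)


if __name__ == "__main__":
    total_mb = working_set_mb()
    share_of_ram = total_mb / (RAM_GB * 1024) * 100
    print(f"working set: {total_mb:.0f} MB ({share_of_ram:.1f}% of RAM)")
    for name, kb_per_sec in PARENT_KB_PER_SEC.items():
        print(f"{name}: {kb_to_mb(kb_per_sec):.0f} MB/sec")
```

The whole test only touches 1280MB, which is tiny next to 96GB of RAM, so the roughly 18GB/sec read figures are presumably coming from memory (ARC) rather than from the disks. The write figures, which include fsync, work out to about 171MB/sec for initial writes and about 102MB/sec for random writes.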


Comparing the two ATTO runs, writes are a boatload better on the Windows Storage Server. Reads are better on FreeNAS, but that is expected because the FreeNAS array is idle; I fully expected reads to cap out at the full bandwidth of the network interface, and that is the result I see. Writes, however, are much slower. Based on these results, I definitely don't see it being a network LACP issue: if traffic were getting stuck on a single interface, I would still expect writes to cap out consistently at about 110MB/sec, and that isn't what I'm seeing.
