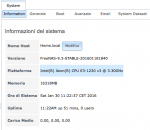

Hello everyone, I am a first-time FreeNAS user. I just finished assembling a NAS with an ASRock board, a Xeon, and 16 GB of RAM. For storage I used 6x 4 TB WD Red drives and put everything in RAIDZ.

This level of protection suits me well because I use a good UPS that protects the hardware, and I keep a backup of sensitive data on an external USB drive, which I update manually.

I am quite confused about what to do next. This is what I have done so far:

I created the users and shares (two CIFS shares, data and media) and a Time Machine share over AFP. I chose CIFS because the software on Apple TV no longer supports AFP. I also ran some tests, and the Mac clients have no problems with CIFS, so I left everything that way.

I set up the UPS and e-mail notifications.

I created the virtual interface for link aggregation and verified that it works.

I saved the system configuration to an external drive.

In principle this should be fine; I do not need anything else.

Are there other procedures I should carry out?

I have read so many things about snapshots that I no longer understand whether they are useful or not. I do not care about restoring the system to a previous version; I only need everything to be safe and reliable.

The other question is about how the NAS is displayed on Mac clients.

At startup I see the NAS among the network resources. In the Finder I press Cmd+K to connect to a server, enter "smb://home.local" and my credentials, and then I see my two shared datasets and can access and work with them. The problem is that, as soon as I am connected, the Finder sidebar shows a second icon that is not called "Home" but "home.local". I can get into both and work in them, and both have the same network path ("smb://home.local"). Do you know why?

Thanks for your time, and sorry for my Google-translated English!
