Hi!

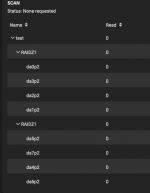

I have a TrueNAS server with 8x 2TB WD Red EFRX drives. I had it configured as two 4-drive RAIDZ1 vdevs, giving around 11TB of usable space.

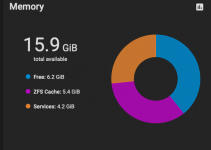

The CPU is an i5-3470T @ 2.90GHz, and the server has 8GB of ECC memory.

It always did well when accessed via Gigabit LAN, around 100MB/s as expected.

Now I've put a QNAP 10 Gbit/s card in the server and connect to it via a 2.5 Gbit/s USB dongle.

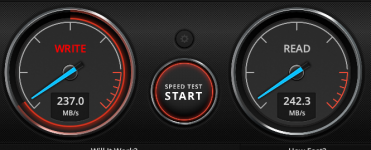

Reads stay around 100MB/s and writes around 220MB/s. Only if I destroy the pool and set it up as a complete stripe can I saturate the 2.5 Gbit/s link.
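For reference, here is a rough sketch of the line-rate arithmetic behind those numbers. It only converts link speed to a raw MB/s ceiling and ignores protocol overhead, so real-world maximums will be somewhat lower. The function names are made up for illustration.

```python
def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Raw line rate in decimal MB/s, ignoring Ethernet/TCP/SMB overhead."""
    return gbit_per_s * 1000 / 8


def utilisation(measured_mb_per_s: float, gbit_per_s: float) -> float:
    """Fraction of the raw line rate that a measured throughput represents."""
    return measured_mb_per_s / line_rate_mb_per_s(gbit_per_s)


if __name__ == "__main__":
    # Raw ceilings for the links mentioned in this post
    for gbit in (1.0, 2.5, 10.0):
        print(f"{gbit} Gbit/s -> {line_rate_mb_per_s(gbit):.1f} MB/s raw")

    # Measured figures from this post, against the 2.5 Gbit/s dongle
    for label, mb in (("read", 100), ("write", 220)):
        print(f"{label}: {utilisation(mb, 2.5):.0%} of the raw 2.5 Gbit/s rate")
```

So the 100MB/s read figure is roughly what a plain Gigabit link would deliver, well below what the 2.5 Gbit/s link could carry.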

So I guess it's NOT the drives or the controller. I suspect the CPU, but it only peaks around 50% in the dashboard when I hit the share with Blackmagic Disk Speed Test.

Using an SSD as a cache doesn't help at all.

Any suggestions for a strategy to find the bottleneck?

Thanks,

Jörg

-------- SOLUTION ---------

Well, it was the controller. I swapped it for a controller with an LSI 2008 chipset and flashed it to IT mode. I now get around 240MB/s read and write speed over the 2.5 Gbit/s network. Thanks for all the suggestions!

Jörg

